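Editor's note:

This article comes from segmentfault. It introduces what the Scrapy framework is, presents Scrapy's architecture diagram, and walks through installing the framework. We hope you find it helpful.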

Scrapy is an application framework, implemented in Python, for crawling websites and extracting structured data.

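I. Introduction to the Scrapy framework

Scrapy is an application framework written for crawling websites and extracting structured data. It can be used in a wide range of programs, including data mining, information processing, and archiving historical data.

It was originally designed for page scraping (more precisely, web scraping), but it can also be used to retrieve data returned by APIs (for example, Amazon Associates Web Services) or as a general-purpose web crawler.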

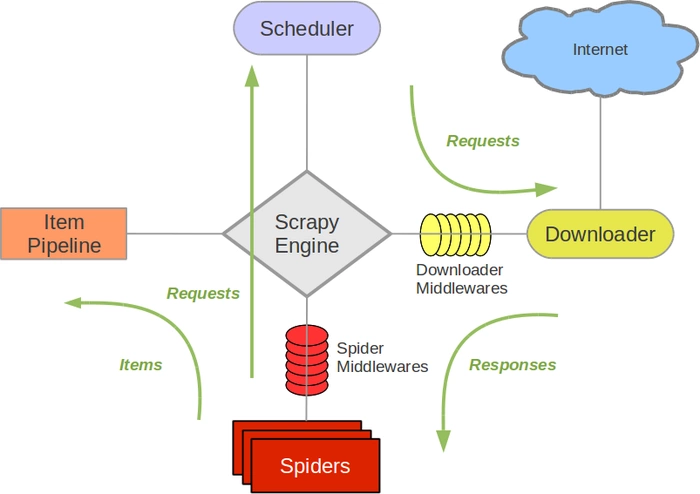

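II. Architecture diagram

The diagram shows an overview of Scrapy's architecture, including its components and the data flow that takes place inside the system (shown by the green arrows). Each component is described briefly below, followed by a description of the data flow.

1. Components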

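Scrapy Engine

The engine controls the flow of data between all components of the system and triggers events when certain actions occur. See the Data flow section below for details.

Scheduler

The scheduler receives requests from the engine and enqueues them so that it can hand them back when the engine asks for them later.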

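Downloader

The downloader fetches page data and hands it to the engine, which then passes it on to the spiders.

Spiders

Spiders are classes written by Scrapy users to parse responses and extract items (the scraped data) or additional URLs to follow.

Each spider handles one specific website (or a few). For more, see the Spiders topic in the Scrapy documentation.

Item Pipeline

The Item Pipeline processes the items extracted by the spiders. Typical tasks are cleaning, validation, and persistence (for example, storing the item in a database).

For more, see the Item Pipeline topic in the Scrapy documentation.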

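Downloader middlewares

Downloader middlewares are specific hooks that sit between the engine and the downloader. They process requests on their way from the engine to the downloader and responses on their way from the downloader back to the engine.

They provide a convenient mechanism for extending Scrapy by plugging in custom code. For more, see the Downloader Middleware topic in the Scrapy documentation.

Spider middlewares

Spider middlewares are specific hooks that sit between the engine and the spiders. They process spider input (responses) and output (items and requests).

They provide a convenient mechanism for extending Scrapy by plugging in custom code. For more, see the Spider Middleware topic in the Scrapy documentation.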
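To make the hook idea concrete, here is a minimal sketch of a downloader-middleware-shaped class, written for Python 3. The method names process_request and process_response are the ones Scrapy calls; the class name DefaultLanguageMiddleware and the FakeRequest stand-in are hypothetical and exist only so the sketch runs without Scrapy. In a real project the class would be registered under DOWNLOADER_MIDDLEWARES in settings.py.

```python
class FakeRequest:
    """Hypothetical stand-in for scrapy.Request, just enough for this sketch."""

    def __init__(self, url):
        self.url = url
        self.headers = {}


class DefaultLanguageMiddleware:
    """Hypothetical middleware that fills in a default Accept-Language header."""

    def process_request(self, request, spider):
        # Request direction: runs before the request reaches the downloader.
        request.headers.setdefault("Accept-Language", "en")
        return None  # None tells Scrapy to keep processing this request

    def process_response(self, request, response, spider):
        # Response direction: runs before the response reaches the engine.
        return response


middleware = DefaultLanguageMiddleware()
request = FakeRequest("https://segmentfault.com/")
middleware.process_request(request, spider=None)
print(request.headers)
```

A spider middleware follows the same pattern with its own method names, so the same "plain class with hook methods" shape applies there as well.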

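2. Data flow

The data flow in Scrapy is controlled by the execution engine and goes like this:

1. The engine opens a website (opens a domain), finds the spider that handles it, and asks that spider for the first URL(s) to crawl.

2. The engine gets the first URLs to crawl from the spider and schedules them in the scheduler as Requests.

3. The engine asks the scheduler for the next URL to crawl.

4. The scheduler returns the next URL to the engine, and the engine forwards it to the downloader through the downloader middlewares (request direction).

5. Once the page finishes downloading, the downloader generates a Response for it and sends it to the engine through the downloader middlewares (response direction).

6. The engine receives the Response from the downloader and sends it to the spider for processing through the spider middlewares (input direction).

7. The spider processes the Response and returns scraped items and new Requests (to follow) to the engine.

8. The engine sends the scraped items (returned by the spider) to the Item Pipeline and the Requests (returned by the spider) to the scheduler.

9. The process repeats (from step 2) until there are no more requests in the scheduler, at which point the engine closes the website.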
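The loop described above can be imitated in a few lines of plain Python 3. This is only a toy model under stated assumptions, not how Scrapy is implemented: the PAGES dictionary is a hypothetical in-memory website, and download, parse, and run_engine are stand-ins for the downloader, a spider, and the engine.

```python
from collections import deque

# Hypothetical in-memory "website": URL -> (page title, links to follow).
PAGES = {
    "page/1": ("First page", ["page/2"]),
    "page/2": ("Second page", ["page/3"]),
    "page/3": ("Third page", []),
}


def download(url):
    """Downloader stand-in: turn a requested URL into a response."""
    title, links = PAGES[url]
    return {"url": url, "title": title, "links": links}


def parse(response):
    """Spider stand-in: yield one item, then any follow-up requests."""
    yield {"type": "item", "title": response["title"]}
    for link in response["links"]:
        yield {"type": "request", "url": link}


def run_engine(start_urls):
    scheduler = deque(start_urls)   # the first URLs are scheduled
    seen = set(start_urls)
    pipeline_output = []
    while scheduler:                # stop when nothing is left in the queue
        url = scheduler.popleft()   # engine asks the scheduler for the next URL
        response = download(url)    # downloader produces a response
        for result in parse(response):  # spider processes the response
            if result["type"] == "item":
                pipeline_output.append(result["title"])  # item goes to the pipeline
            elif result["url"] not in seen:
                seen.add(result["url"])
                scheduler.append(result["url"])          # request goes to the scheduler
    return pipeline_output


titles = run_engine(["page/1"])
print(titles)
```

The key point the sketch shows is that the spider never downloads anything itself: it only yields items and requests, and the engine decides where each one goes.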

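3. Event-driven networking

Scrapy is written on top of Twisted, an event-driven networking framework. For the sake of concurrency, Scrapy is therefore implemented with non-blocking (that is, asynchronous) code.

For more on asynchronous programming and Twisted, see the Twisted documentation.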
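As a rough illustration of what non-blocking means, the sketch below uses Python 3's standard asyncio module as a stand-in. This is a swapped-in technique: Scrapy itself is built on Twisted, not on this code, and fake_download and crawl are hypothetical names. The point is only that several simulated downloads wait at the same time instead of one after another.

```python
import asyncio


async def fake_download(url, delay):
    # asyncio.sleep stands in for waiting on the network without blocking.
    await asyncio.sleep(delay)
    return url


async def crawl(urls):
    # All downloads wait concurrently, so the total time is close to the
    # longest single delay rather than the sum of all delays.
    tasks = [fake_download(url, 0.05) for url in urls]
    return await asyncio.gather(*tasks)


results = asyncio.run(crawl(["page/1", "page/2", "page/3"]))
print(results)
```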

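III. Building a crawler in four steps

Create a project (scrapy startproject xxx): create a new crawler project

Define the target (write items.py): decide what you want to scrape

Build the spider (spiders/xxspider.py): write the spider that crawls the pages

Store the content (pipelines.py): design a pipeline to store the scraped content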

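IV. Installing the framework

Here we install it with conda, for example:

    conda install -c conda-forge scrapy

Or with pip:

    pip install scrapy

Check the installation: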

    $ scrapy -h
    Scrapy 1.4.0 - no active project

    Usage:
      scrapy <command> [options] [args]

    Available commands:
      bench         Run quick benchmark test
      fetch         Fetch a URL using the Scrapy downloader
      genspider     Generate new spider using pre-defined templates
      runspider     Run a self-contained spider (without creating a project)
      settings      Get settings values
      shell         Interactive scraping console
      startproject  Create new project
      version       Print Scrapy version
      view          Open URL in browser, as seen by Scrapy

      [ more ]      More commands available when run from project directory

    Use "scrapy <command> -h" to see more info about a command

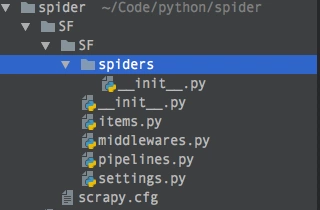

1. Create a project

    $ scrapy startproject SF
    New Scrapy project 'SF', using template directory '/Users/kaiyiwang/anaconda2/lib/python2.7/site-packages/scrapy/templates/project', created in:
        /Users/kaiyiwang/Code/python/spider/SF

    You can start your first spider with:
        cd SF
        scrapy genspider example example.com

The tree command shows the project structure:

    $ tree
    .
    ├── SF
    │   ├── __init__.py
    │   ├── items.py
    │   ├── middlewares.py
    │   ├── pipelines.py
    │   ├── settings.py
    │   └── spiders
    │       └── __init__.py
    └── scrapy.cfg

2. Create a spider from a template in the spiders directory

    $ scrapy genspider sf "https://segmentfault.com"
    Created spider 'sf' using template 'basic' in module:
      SF.spiders.sf

This generates the spider file sf.py, which we then fill in:

    # -*- coding: utf-8 -*-
    import scrapy
    from SF.items import SfItem


    class SfSpider(scrapy.Spider):
        name = 'sf'
        # allowed_domains takes bare domain names, not URLs
        allowed_domains = ['segmentfault.com']
        start_urls = ['https://segmentfault.com/']

        def parse(self, response):
            # print response.body
            node_list = response.xpath("//h2[@class='title']")

            for node in node_list:
                # Create an item object to hold the extracted fields
                item = SfItem()

                # .extract() converts the selected nodes to a list of Unicode strings
                title = node.xpath("./a/text()").extract()
                item['title'] = title[0]

                # Hand the scraped item to the pipeline; execution then
                # resumes here and continues with the next node
                yield item

Commands:

    # Check that the spider works; sf is the name of the spider
    scrapy check sf

    # Run the spider
    scrapy crawl sf

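3. Item pipeline

After an item has been collected in a spider, it is passed to the item pipeline, whose components process the item in the order in which they are defined.

Each item pipeline component is a Python class that implements a simple method and decides, for example, whether the item is dropped or stored. Typical uses of an item pipeline are:

Validating scraped data (checking that an item contains certain fields, such as a name field)

Checking for duplicates (and dropping them)

Saving the scraped results to a file or a database (data persistence)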
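The first two uses can be sketched in one small pipeline class. This is a minimal Python 3 sketch under stated assumptions: the class name ValidateAndDedupePipeline, the choice of name as the required field, and the run helper are all hypothetical. DropItem is the real Scrapy exception; the fallback definition exists only so the sketch also runs where Scrapy is not installed.

```python
try:
    from scrapy.exceptions import DropItem
except ImportError:
    class DropItem(Exception):
        """Stand-in so the sketch also runs without Scrapy installed."""


class ValidateAndDedupePipeline:
    """Hypothetical pipeline: require a 'name' field and drop repeats."""

    def __init__(self):
        self.seen_names = set()

    def process_item(self, item, spider):
        name = item.get("name")
        if not name:
            raise DropItem("Missing name in %r" % (item,))
        if name in self.seen_names:
            raise DropItem("Duplicate item: %r" % (name,))
        self.seen_names.add(name)
        return item


def run(pipeline, items):
    """Feed items through the pipeline the way the engine would."""
    kept = []
    for item in items:
        try:
            kept.append(pipeline.process_item(item, spider=None))
        except DropItem:
            pass  # a dropped item simply goes no further
    return kept


kept = run(
    ValidateAndDedupePipeline(),
    [{"name": "a"}, {"name": "a"}, {"title": "x"}, {"name": "b"}],
)
print(kept)
```

Raising DropItem rather than returning None is the design choice worth noting: it tells the framework the item was rejected on purpose, so later pipeline components never see it.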

Writing an item pipeline

Writing an item pipeline is simple: an item pipeline component is a standalone Python class that must implement the process_item() method.

    from scrapy.exceptions import DropItem


    class PricePipeline(object):

        vat_factor = 1.15

        def process_item(self, item, spider):
            if item['price']:
                if item['price_excludes_vat']:
                    item['price'] = item['price'] * self.vat_factor
                return item
            else:
                raise DropItem("Missing price in %s" % item)

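4. Selectors

When scraping web pages, the most common task is extracting data from the HTML source.

Selector has four basic methods, of which xpath() is the most commonly used:

xpath(): takes an XPath expression and returns a selector list of all nodes matching it.

extract(): serializes the matched nodes to Unicode strings and returns them as a list.

css(): takes a CSS expression and returns a selector list of all nodes matching it; the syntax is the same as in BeautifulSoup4.

re(): extracts data using the given regular expression and returns a list of Unicode strings.

Scrapy has its own mechanism for extracting data. These objects are called selectors because they "select" certain parts of the HTML document using XPath or CSS expressions.

XPath is a language for selecting nodes in XML documents, and it can also be used with HTML. CSS is a language for applying styles to HTML documents; it defines selectors to associate those styles with specific HTML elements.

Scrapy selectors are built on the lxml library, which means they are very similar to it in speed and parsing accuracy.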

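Examples of XPath expressions:

/html/head/title: selects the <title> element inside the <head> of an HTML document

/html/head/title/text(): selects the text of the <title> element above

//td: selects all <td> elements

//div[@class="mine"]: selects all div elements that have the attribute class="mine"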
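To try the same selections without a Scrapy project, the sketch below uses Python 3's standard xml.etree.ElementTree as a stand-in. This is a swapped-in tool, not Scrapy's selector: ElementTree supports only a subset of XPath, so the absolute path is written relative to the root element and text() becomes the .text attribute. The sample document is hypothetical.

```python
import xml.etree.ElementTree as ET

# Hypothetical, well-formed sample document (ElementTree parses XML only).
DOC = """
<html>
  <head><title>Example page</title></head>
  <body>
    <div class="mine"><table><tr><td>a</td><td>b</td></tr></table></div>
    <div class="other">ignored</div>
  </body>
</html>
"""

root = ET.fromstring(DOC)  # root is the <html> element

# /html/head/title/text(): path relative to <html>, then the .text attribute
title_text = root.find("head/title").text

# //td: every <td> anywhere below the root
cells = [td.text for td in root.findall(".//td")]

# //div[@class="mine"]: every <div> whose class attribute equals "mine"
mine_divs = root.findall(".//div[@class='mine']")

print(title_text, cells, len(mine_divs))
```

Inside a spider the expressions from the list can be passed to response.xpath() unchanged, followed by extract() to get the strings.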

V. Scraping job postings

1. Scraping Tencent job postings

URL to crawl: http://hr.tencent.com/positio...

1.1 Create the project

    > scrapy startproject Tencent

    You can start your first spider with:
        cd Tencent
        scrapy genspider example example.com

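Elements to scrape from the page:

We need to scrape the following information:

Position name: positionName

Position link: positionLink

Position type: positionType

Number of openings: positionNumber

Work location: workLocation

Publication date: publishTime

Define the fields to scrape in items.py: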

    # -*- coding: utf-8 -*-

    # Define here the models for your scraped items
    #
    # See documentation in:
    # http://doc.scrapy.org/en/latest/topics/items.html

    import scrapy


    # Define the fields
    class TencentItem(scrapy.Item):
        # define the fields for your item here like:
        # name = scrapy.Field()

        # Position name
        positionName = scrapy.Field()
        # Position link
        positionLink = scrapy.Field()
        # Position type
        positionType = scrapy.Field()
        # Number of openings
        positionNumber = scrapy.Field()
        # Work location
        workLocation = scrapy.Field()
        # Publication date
        publishTime = scrapy.Field()

1.2 Write the spider

Create it with the command:

    $ scrapy genspider tencent "tencent.com"
    Created spider 'tencent' using template 'basic' in module:
      Tencent.spiders.tencent

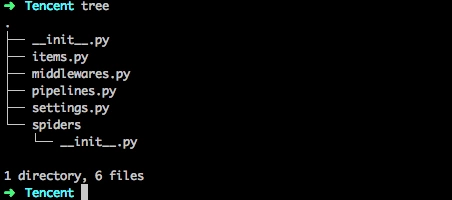

The generated spider is at spiders/tencent.py under the current directory:

    $ tree
    .
    ├── __init__.py
    ├── __init__.pyc
    ├── items.py
    ├── middlewares.py
    ├── pipelines.py
    ├── settings.py
    ├── settings.pyc
    └── spiders
        ├── __init__.py
        ├── __init__.pyc
        └── tencent.py

Let's look at the generated initial file, tencent.py:

    # -*- coding: utf-8 -*-
    import scrapy


    class TencentSpider(scrapy.Spider):
        name = 'tencent'
        allowed_domains = ['tencent.com']
        start_urls = ['http://tencent.com/']

        def parse(self, response):
            pass

Modify the initial file tencent.py:

    # -*- coding: utf-8 -*-
    import scrapy
    from Tencent.items import TencentItem


    class TencentSpider(scrapy.Spider):
        name = 'tencent'
        allowed_domains = ['tencent.com']

        baseURL = "http://hr.tencent.com/position.php?&start="
        offset = 0  # offset appended to the base URL
        start_urls = [baseURL + str(offset)]

        def parse(self, response):
            # Select the table rows from the response
            # node_list = response.xpath("//tr[@class='even'] or //tr[@class='odd']")
            node_list = response.xpath("//tr[@class='even'] | //tr[@class='odd']")

            for node in node_list:
                item = TencentItem()  # the item class defined in items.py

                # Take the first element [0] of each result list and encode the
                # Unicode text as UTF-8 (a Python 2 idiom; on Python 3 keep the str)
                item['positionName'] = node.xpath("./td[1]/a/text()").extract()[0].encode("utf-8")
                item['positionLink'] = node.xpath("./td[1]/a/@href").extract()[0].encode("utf-8")  # link attribute
                item['positionType'] = node.xpath("./td[2]/text()").extract()[0].encode("utf-8")
                item['positionNumber'] = node.xpath("./td[3]/text()").extract()[0].encode("utf-8")
                item['workLocation'] = node.xpath("./td[4]/text()").extract()[0].encode("utf-8")
                item['publishTime'] = node.xpath("./td[5]/text()").extract()[0].encode("utf-8")

                # Hand the item to the pipeline
                yield item

            # Keep requesting pages until the offset reaches 2000 (it advances by 10 per page)
            if self.offset < 2000:
                self.offset += 10
                # offset is an int, so convert it before concatenating
                url = self.baseURL + str(self.offset)
                yield scrapy.Request(url, callback=self.parse)
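The offset logic above can be checked in isolation. The following Python 3 sketch lists the URLs that such a loop would request; page_urls and its parameters are hypothetical names, and a small last offset is used so the result is easy to read.

```python
def page_urls(base_url, last_offset, step=10):
    """List the page URLs for offsets 0, step, 2 * step, ..., last_offset."""
    # str() is needed because an int cannot be concatenated to a str directly.
    return [base_url + str(offset) for offset in range(0, last_offset + 1, step)]


urls = page_urls("http://hr.tencent.com/position.php?&start=", 30)
for url in urls:
    print(url)
```

With a step of 10, a limit of 2000 on the offset corresponds to roughly two hundred pages, not two thousand.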

Write the pipeline file pipelines.py:

    # -*- coding: utf-8 -*-

    # Define your item pipelines here
    #
    # Don't forget to add your pipeline to the ITEM_PIPELINES setting
    # See: http://doc.scrapy.org/en/latest/topics/item-pipeline.html

    import json


    class TencentPipeline(object):

        def __init__(self):
            self.f = open("tencent.json", "w")

        # All items go through the same pipeline
        def process_item(self, item, spider):
            content = json.dumps(dict(item), ensure_ascii=False) + ",\n"
            self.f.write(content)
            return item

        def close_spider(self, spider):
            self.f.close()
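Because the pipeline above ends every record with a comma and never wraps the records in brackets, the output file as a whole is not a valid JSON document. One possible alternative, sketched here for Python 3 under stated assumptions, is to write one JSON object per line so that each line can be parsed on its own. The class name JsonLinesWriter, the read_items helper, the file name tencent.jl, and the sample items are hypothetical; open_spider and close_spider are the hook names Scrapy calls on a pipeline.

```python
import json
import os
import tempfile


class JsonLinesWriter:
    """Hypothetical pipeline-shaped class that writes one JSON object per line."""

    def __init__(self, path):
        self.path = path
        self.f = None

    def open_spider(self, spider):
        self.f = open(self.path, "w", encoding="utf-8")

    def process_item(self, item, spider):
        # ensure_ascii=False keeps non-ASCII text readable in the file.
        self.f.write(json.dumps(dict(item), ensure_ascii=False) + "\n")
        return item

    def close_spider(self, spider):
        self.f.close()


def read_items(path):
    """Parse the file back, one JSON object per non-empty line."""
    with open(path, encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]


path = os.path.join(tempfile.mkdtemp(), "tencent.jl")
writer = JsonLinesWriter(path)
writer.open_spider(spider=None)
writer.process_item({"workLocation": "北京", "positionNumber": "1"}, spider=None)
writer.process_item({"workLocation": "上海", "positionNumber": "2"}, spider=None)
writer.close_spider(spider=None)
loaded = read_items(path)
print(loaded)
```

Opening the file in open_spider rather than in __init__ is a small design choice: the file is then created only when a crawl actually starts.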

Once the pipeline is written, enable it in settings.py:

    # Configure item pipelines
    # See http://scrapy.readthedocs.org/en/latest/topics/item-pipeline.html
    ITEM_PIPELINES = {
        'Tencent.pipelines.TencentPipeline': 300,
    }

Run it:

    > scrapy crawl tencent

      File "/Users/kaiyiwang/Code/python/spider/Tencent/Tencent/spiders/tencent.py", line 21, in parse
        item['positionName'] = node.xpath("./td[1]/a/text()").extract()[0].encode("utf-8")
    IndexError: list index out of range

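The XPath that selects the rows was written incorrectly. In XPath, or is a boolean operator, so joining two paths with it yields a true/false value rather than the rows themselves. To select the nodes matched by either path, use the union operator | instead (a single predicate, //tr[@class='even' or @class='odd'], would also work):

    # Select the table rows from the response
    # node_list = response.xpath("//tr[@class='even'] or //tr[@class='odd']")
    node_list = response.xpath("//tr[@class='even'] | //tr[@class='odd']")

Then run the crawl again.

The resulting file, tencent.json: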

    {"positionName": "23673-财经运营中心热点运营组编辑", "publishTime": "2017-12-02", "positionLink": "position_detail.php?id=32718&keywords=&tid=0&lid=0", "positionType": "内容编辑类", "workLocation": "北京", "positionNumber": "1"},
    {"positionName": "MIG03-腾讯地图高级算法评测工程师(北京)", "publishTime": "2017-12-02", "positionLink": "position_detail.php?id=30276&keywords=&tid=0&lid=0", "positionType": "技术类", "workLocation": "北京", "positionNumber": "1"},
    {"positionName": "MIG10-微回收渠道产品运营经理(深圳)", "publishTime": "2017-12-02", "positionLink": "position_detail.php?id=32720&keywords=&tid=0&lid=0", "positionType": "产品/项目类", "workLocation": "深圳", "positionNumber": "1"},
    {"positionName": "MIG03-iOS测试开发工程师(北京)", "publishTime": "2017-12-02", "positionLink": "position_detail.php?id=32715&keywords=&tid=0&lid=0", "positionType": "技术类", "workLocation": "北京", "positionNumber": "1"},
    {"positionName": "19332-高级PHP开发工程师(上海)", "publishTime": "2017-12-02", "positionLink": "position_detail.php?id=31967&keywords=&tid=0&lid=0", "positionType": "技术类", "workLocation": "上海", "positionNumber": "2"}

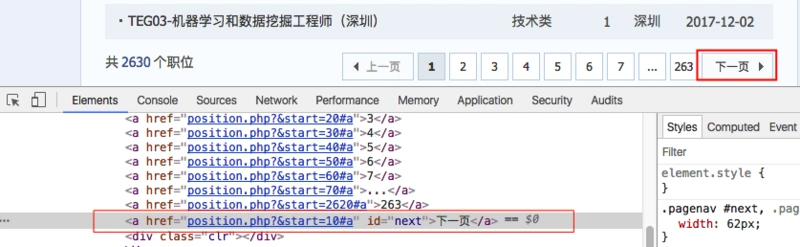

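1.3 Crawling via the next-page link

Above we fetched each page by counting up to a fixed total, but that ignores the fact that the data changes every day, so the total number of pages cannot be hard-coded. How, then, do we tell when all the data has been crawled? It is simple: we follow the next-page link, and when there is no next page, the crawl is complete.

Let's look at what the next-page link looks like on the last page:

There the next-page button is greyed out and its link carries the attribute class='noactive'. We can use this to tell whether we have reached the last page.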

    # Hard-coded limit: stop once the offset reaches 100
    """
    if self.offset < 100:
        self.offset += 10
        url = self.baseURL + str(self.offset)
        yield scrapy.Request(url, callback=self.parse)
    """

    # Crawl by following the next-page link
    if len(response.xpath("//a[@class='noactive' and @id='next']")) == 0:
        url = response.xpath("//a[@id='next']/@href").extract()[0]
        yield scrapy.Request("http://hr.tencent.com/" + url, callback=self.parse)
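Concatenating the site root and the href works here because the href is relative to that root. A slightly more robust option is to resolve the href against the URL of the current page, which the Python 3 sketch below does with the standard library's urljoin. The helper name next_page_url and the sample href value are assumptions made for illustration; inside a spider, response.urljoin(url) performs the same resolution against the response's own URL.

```python
from urllib.parse import urljoin


def next_page_url(current_url, next_href):
    """Resolve the href of the next-page link against the current page URL."""
    return urljoin(current_url, next_href)


url = next_page_url(
    "http://hr.tencent.com/position.php?&start=0",
    "position.php?&start=10",  # hypothetical href taken from the next-page link
)
print(url)
```

Resolving against the current URL keeps working if the site later switches to absolute hrefs or moves the listing into a subdirectory.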

The modified tencent.py file:

    # -*- coding: utf-8 -*-
    import scrapy
    from Tencent.items import TencentItem


    class TencentSpider(scrapy.Spider):
        # Spider name
        name = 'tencent'
        # Domains the spider is allowed to crawl
        allowed_domains = ['tencent.com']

        # 1. Base URL to which the offset is appended
        baseURL = "http://hr.tencent.com/position.php?&start="
        # Offset appended to the base URL
        offset = 0
        # URLs the spider reads when it starts
        start_urls = [baseURL + str(offset)]

        # Handles each response
        def parse(self, response):
            # Extract the data rows from the response
            node_list = response.xpath("//tr[@class='even'] | //tr[@class='odd']")

            for node in node_list:
                # Build an item object to hold the data
                item = TencentItem()

                # Take the first element [0] of each result list and encode the
                # Unicode text as UTF-8 (a Python 2 idiom; on Python 3 keep the str)
                print(node.xpath("./td[1]/a/text()").extract())
                item['positionName'] = node.xpath("./td[1]/a/text()").extract()[0].encode("utf-8")
                item['positionLink'] = node.xpath("./td[1]/a/@href").extract()[0].encode("utf-8")  # link attribute

                # Guard against an empty cell
                if len(node.xpath("./td[2]/text()")):
                    item['positionType'] = node.xpath("./td[2]/text()").extract()[0].encode("utf-8")
                else:
                    item['positionType'] = ""

                item['positionNumber'] = node.xpath("./td[3]/text()").extract()[0].encode("utf-8")
                item['workLocation'] = node.xpath("./td[4]/text()").extract()[0].encode("utf-8")
                item['publishTime'] = node.xpath("./td[5]/text()").extract()[0].encode("utf-8")

                # yield hands the item to the pipeline and then resumes here;
                # a return would exit the whole function
                yield item

            # Option 1: build the URL by concatenation. Use this when the page has no
            # clickable link to request and the URL must be assembled by hand.
            """
            if self.offset < 100:
                self.offset += 10
                url = self.baseURL + str(self.offset)
                yield scrapy.Request(url, callback=self.parse)
            """

            # Option 2: take the next link straight from the response and request it,
            # until no links remain (crawl via the next-page link)
            if len(response.xpath("//a[@class='noactive' and @id='next']")) == 0:
                url = response.xpath("//a[@id='next']/@href").extract()[0]
                yield scrapy.Request("http://hr.tencent.com/" + url, callback=self.parse)
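The empty-cell check on positionType can be generalised so that any missing cell falls back to a default instead of raising IndexError. Here is a minimal Python 3 sketch; first_or_default is a hypothetical helper name. Scrapy selector lists also offer extract_first(), which takes a default argument and serves the same purpose.

```python
def first_or_default(values, default=""):
    """Return the first element of a list, or a default when the list is empty."""
    return values[0] if values else default


# An extracted list with one value, and an empty list from a cell with no text.
position_type = first_or_default(["技术类"])
missing_type = first_or_default([])
print(repr(position_type), repr(missing_type))
```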

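OK, by following the next-page link we have crawled all of the job postings.

1.4 Summary

Steps for building a crawler:

1. Create the project: scrapy startproject XXX

2. Generate the spider: scrapy genspider xxx "http://www.xxx.com"

3. Write items.py to define the data to extract

4. Write spiders/xxx.py, the spider file that handles requests and responses and extracts the data (yield item)

5. Write pipelines.py, the pipeline file that processes the items returned by the spider, for example persisting them locally by writing a file or saving them to a database table

6. Edit settings.py to enable the pipeline component in ITEM_PIPELINES and adjust other related settings

7. Run the crawler: scrapy crawl xxx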

Websites being crawled often impose restrictions, so we can add request headers to our requests. Scrapy gives us a convenient place to configure them: in settings.py we can enable the following:

    # Crawl responsibly by identifying yourself (and your website) on the user-agent
    USER_AGENT = 'Tencent (+http://www.yourdomain.com)'
    # A browser-like value; as the later assignment, this is the one that takes effect
    USER_AGENT = "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_6) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/62.0.3202.94 Safari/537.36"

    # Override the default request headers:
    DEFAULT_REQUEST_HEADERS = {
        'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
        'Accept-Language': 'en',
    }

Scrapy is best suited to crawling static pages, where it performs very well; if all you need is dynamic JSON data, it is more than the job requires.