| 编辑推荐: |

| 来源csdn

,文章通过美国官方网站的几个案例详细讲解了Python数据分析,介绍较为详细,更多内容请参阅下文。 |

|

import json

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt |

1.USA.gov Data from Bitly

此数据是美国官方网站从用户那搜集到的匿名数据。

path='datasets/bitly_usagov/example.txt'

data=[json.loads(line) for line in open(path)]

df=pd.DataFrame(data) |

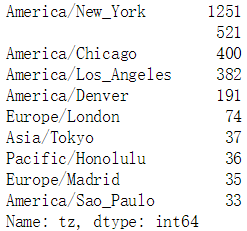

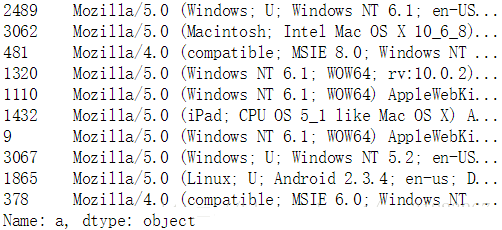

tz字段包含的是时区信息。

| df.loc[:,'tz'].value_counts()[:10] |

根据info()与value_counts()的返回结果来看,tz列存在缺失值与空值,首先填充缺失值,然后处理空值:

clean_tz=df.loc[:,'tz'].fillna('missing')

clean_tz.loc[clean_tz=='']='unkonwn'

clean_tz.value_counts()[:5] |

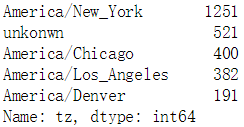

plt.clf()

subset=clean_tz.value_counts()[:10]

subset.plot.barh()

plt.show() |

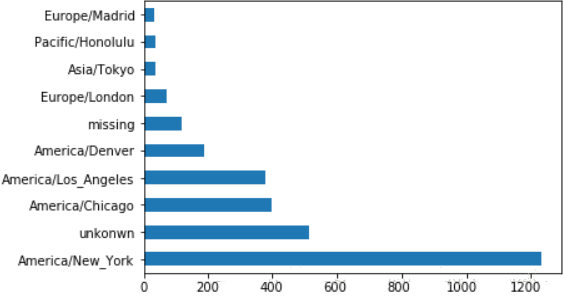

a字段包含的是浏览器、设备与应用等信息。

假设我们需要统计windows与非windows的相关量,我们要抓取a字段中的’Windows’字符串。因为a字段同样存在缺失值,这里我们选择丢弃缺失值:

clean_df=df[df.loc[:,'a'].notnull()]

mask=clean_df.loc[:,'tz']==''

clean_df.loc[:,'tz'].loc[mask]='unkonwn'

mask=clean_df.loc[:,'a'].str.contains('Windows')

clean_df.loc[:,'os']=np.where(mask,'Windows','not

Windows')

clean_df.drop('a',axis=1,inplace=True)

|

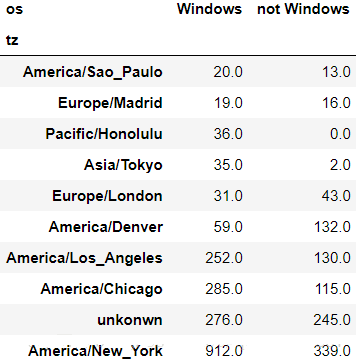

by_tz_os=clean_df.groupby(['tz','os'])

tz_os_counts=by_tz_os.size().unstack().fillna(0)

indexer=tz_os_counts.sum(axis=1).argsort() #返回排序后的索引列表

tz_os_counts_subset=tz_os_counts.take(indexer[-10:])

#取得索引列表的后十条

tz_os_counts_subset

|

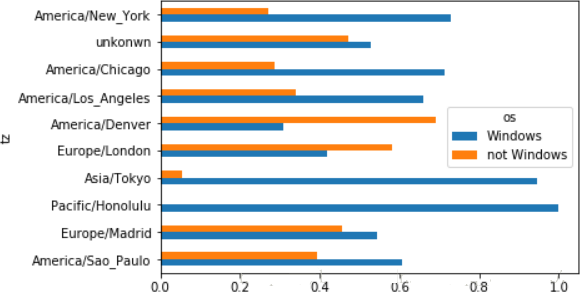

plt.clf()

tz_os_counts_subset.plot.barh()

plt.show()

|

因为不同地区的数量差异悬殊,如果我们要更清楚得查看系统差异,还需要将数据进行归一化:

tz_os_counts_subset_norm = tz_os_counts_subset.values / tz_os_counts_subset.sum (axis=1).values.reshape (10,1)

#转换成numpy数组来计算百分比

tz_os_counts_subset_norm= pd.DataFrame (tz_os_counts_subset_norm,

index= tz_os_counts_subset.index,

columns= tz_os_counts_subset.columns)

|

plt.clf()

tz_os_counts_subset_norm.plot.barh()

plt.show() |

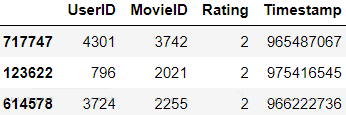

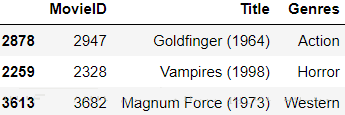

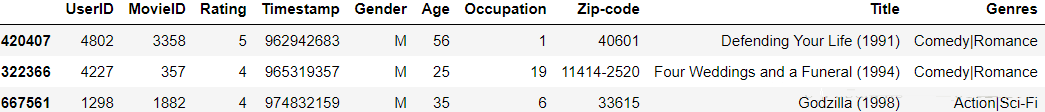

# MovieLens

rating_col=['UserID','MovieID','Rating','Timestamp']

user_col=['UserID','Gender','Age','Occupation','Zip-code']

movie_col=['MovieID','Title','Genres']

ratings=pd.read_table ('datasets/movielens/ratings.dat', header=None,sep='::',names=rating_col,engine='python')

users=pd.read_table ('datasets/movielens/users.dat', header=None,sep='::',names=user_col,engine='python')

movies=pd.read_table ('datasets/movielens/movies.dat', header=None,sep='::',names=movie_col,engine='python')

|

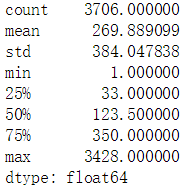

data=pd.merge(pd.merge(ratings,users),movies)

data.sample(3) |

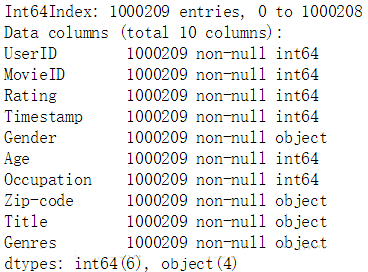

加入需要获得不同性别对于各电影的平均打分,使用透视表就可以直接得到结果:

mean_ratings= data.pivot_table ('Rating',index='Title', columns='Gender', aggfunc='mean')

mean_ratings[:5] |

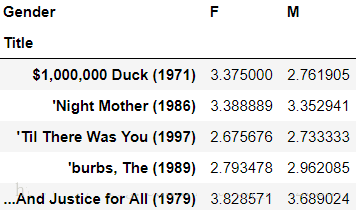

电影中会存在冷门作品,我们看一下评分数据中各电影被评价的次数都有多少:

by_title=data.groupby('Title').size()

by_title.describe() |

我们以二分位点为分割线,取出评分数量在二分位点之上的电影:

mask=by_title>=250

#注意by_title是一个Series

active_titles=by_title.index[mask]

mean_ratings=mean_ratings.loc[active_titles,:] |

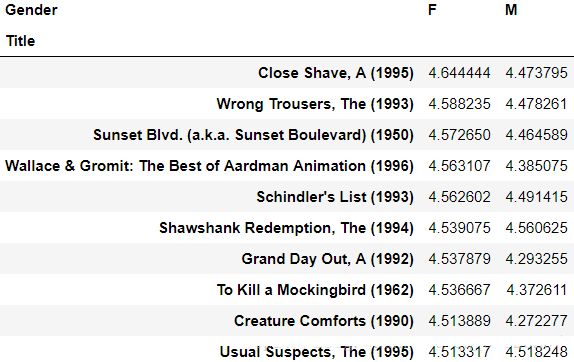

下面列出女性观众最喜爱的电影:

top_female_tarings= mean_ratings.sort_values (by='F',ascending=False)[:10]

top_female_tarings |

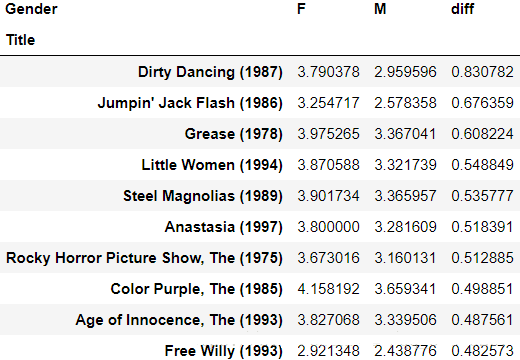

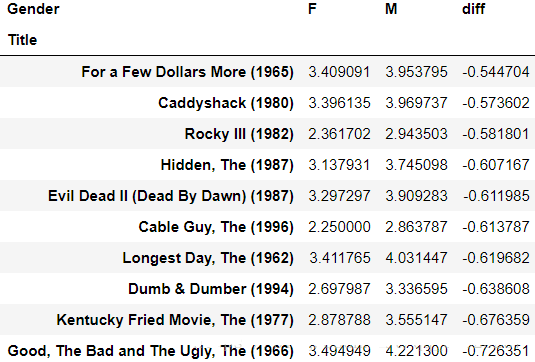

下面来看一下男女对于各影片的评分差异:

mean_ratings.loc[:,'diff'] =mean_ratings.loc[:,'F']-mean_ratings.loc[:,'M']

sorted_by_diff=mean_ratings.sort_values(by='diff',ascending=False)

sorted_by_diff[:10]

|

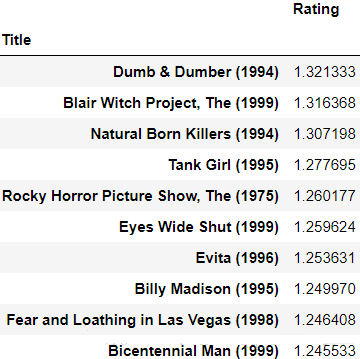

接下来我们统计那些评分争议较大的影片,rating的方差越大说明争议越大:

rating_std=data.pivot_table ('Rating',index='Title',aggfunc='std' ).loc[ active_titles,:]

rating_std.sort_values(by= 'Rating',ascending=False)[:10]

|

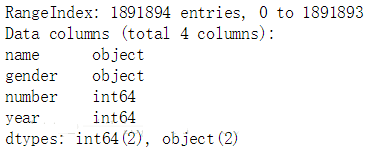

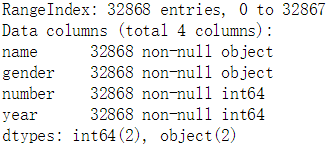

# US Baby Names

years=range(1880,2017)

subsets=[]

column=['name','gender','number']

for year in years:

path='datasets/babynames/yob{}.txt'.format(year)

df=pd.read_csv(path,header=None,names=column)

df.loc[:,'year']=year #此处注意year这一列的值为整数类型

subsets.append(df)

names=pd.concat(subsets,ignore_index=True) #拼接多个df并重新编排行号

|

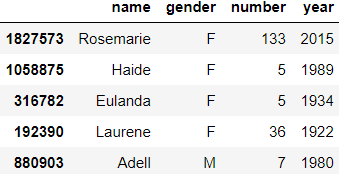

我们先根据此数据来大致观察一下每年的男女出生情况:

birth_by_gender=pd.pivot_table (names,values='number', index='year', columns='gender',aggfunc='sum')

plt.clf()

birth_by_gender.plot(title='Total births by sex

and year')

plt.show()

|

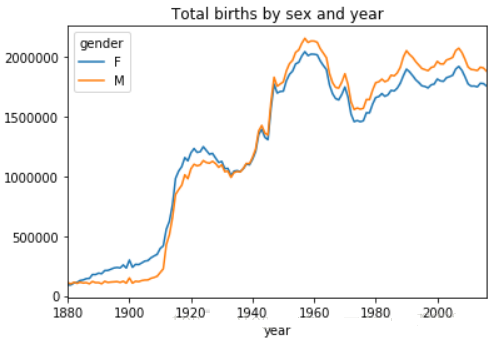

我们在数据中增加一个比例系数,这个比例能显示某个名字在这一年内占某个性别的比例:

def add_prop(group):

group.loc[:,'prop']= group.loc[:,'number']/group.loc [:,'number'].sum()

return group

|

names_with_prop=names.groupby(['year','gender']).apply(add_prop)

#注意groupby与pivot_table的区别

names_with_prop.groupby(['year','gender'])['prop'].sum()[:6]

#正确性检查,注意groupby与pivot_table的区别

|

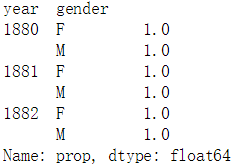

下面取出按year与gender分组后的最受欢迎的前100个名字:

def get_top(group,n=100):

return group.sort_values(by='number',ascending=False)[:n]

|

groupby_obj=names_with_prop.groupby(['year','gender'])

top100=groupby_obj.apply(get_top)

top100.reset_index(drop=True,inplace=True) #丢弃因分组产生的行索引

top100[:5]

|

接下来我们使用这些最常见的名字来做更深入的分析:

total_birth=pd.pivot_table(top100,values='number', index='year', columns='name')

total_birth.fillna (0,inplace=True) |

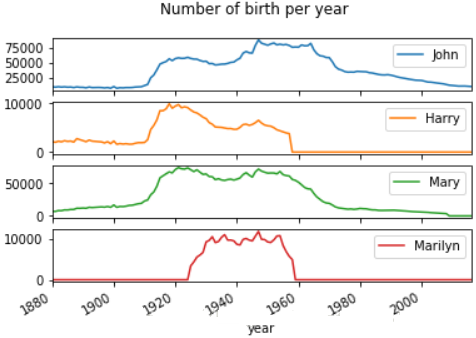

我们选取几个非常具有代表性的名字,来观察这些名字根据年份的变化趋势:

subset=total_birth.loc[:,['John','Harry','Mary','Marilyn']]

subset.plot(subplots=True,title='Number of birth

per year')

plt.show() |

可以看出这几个名字在特定的时期出现了井喷现象,但越靠近现在的时间段,这些名字出现的频率越低,这可能说明家长们给宝宝起名字不再随大流。下面来验证这个想法:

基本思想是使用名字频率的分位数,数据的分位数能大致体现出数据的分布,如果数据在某一段特别密集,则某两个分位数肯定靠的特别近,或者分位数的序号会偏离标准值非常远。

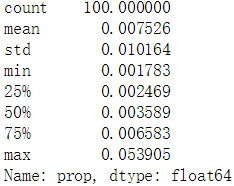

先以男孩为例,取两个年份来简单验证下以上猜想:

boys=top100[top100.loc[:,'gender']=='M']

boys[boys.loc[:,'year']==1940].sort_values (by='prop').loc[:,'prop'].describe() |

由上述数据可以看到,prop的最大值为0.05,说明最常见的名字的可观测率为5%,而且prop的均值处于[75%,max]区间内,说明绝大多数的新生儿共享一个很小的名字池。

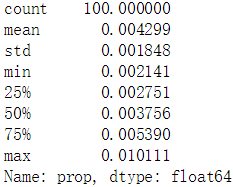

| boys[boys.loc[:,'year'] ==2016].sort_values(by='prop' ).loc[:,'prop'].describe() |

在2016年,prop的最大值降到了0.01,均值处于[50%,75%]区间内,这说明新生儿的取名更多样化了。

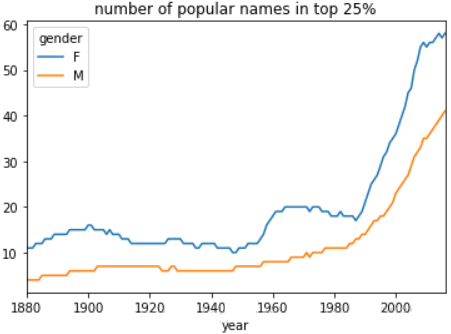

下面我们来计算占据新生儿前25%的名字数量:

def get_quantile_index(group,q=0.25):

group=group.sort_values(by='prop',ascending=False)

sorted_arr=group.loc[:,'prop'].cumsum().values

index=sorted_arr.searchsorted(0.25)+1 #0为起始的索引

return index

|

diversity=top100.groupby (['year','gender']).apply (get_quantile_index)

diversity=diversity.unstack()

|

plt.clf()

diversity.plot(title='number of popular names

in top 25%')

plt.show()

|

可以明显看出时间线越靠近现在,前25%的新生儿名字数量也越多,这确实说明家长们给宝宝起名字更多样化了。并且还注意到女孩名字的数量总是多于男孩。

下面分析名字的最后一个字母:

get_last_letter=lambda

x:x[-1]

last_letters=names.loc[:,'name'].map(get_last_letter)

#返回一个Series

last_letters.name='last_letter'

letter_table=pd.pivot_table (names,values='number' ,index=last_letters,columns= ['gender','year'],aggfunc='sum')

letter_table.fillna(0,inplace=True)

|

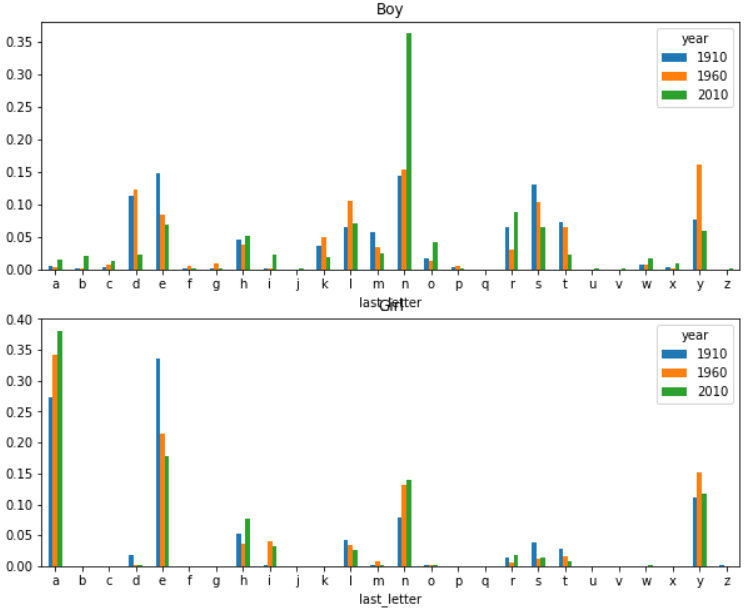

取出三个年份来进行粗略分析:

subset=letter_table.reindex (columns=[1910,1960,2010], level='year')

#重索引

subset.fillna(0,inplace=True)

letter_prop_subset=subset/subset.sum(axis=0)

|

plt.clf()

fig,axes=plt.subplots(2,1,figsize=(10,8))

letter_prop_subset.loc [:,'M'].plot (kind='bar',rot=0,ax=axes[0],title='Boy')

letter_prop_subset.loc [:,'F'].plot (kind='bar',rot=0,ax=axes[1],title='Girl')

plt.show()

|

从上面的粗略分析可以看到几个明显的情况:

- 在boy的数据里,以字母n为结尾的名字在1960年后出现了爆炸式增长

- 对girl而言,字母a结尾的名字较常见,而字母e结尾的名字则越来越少

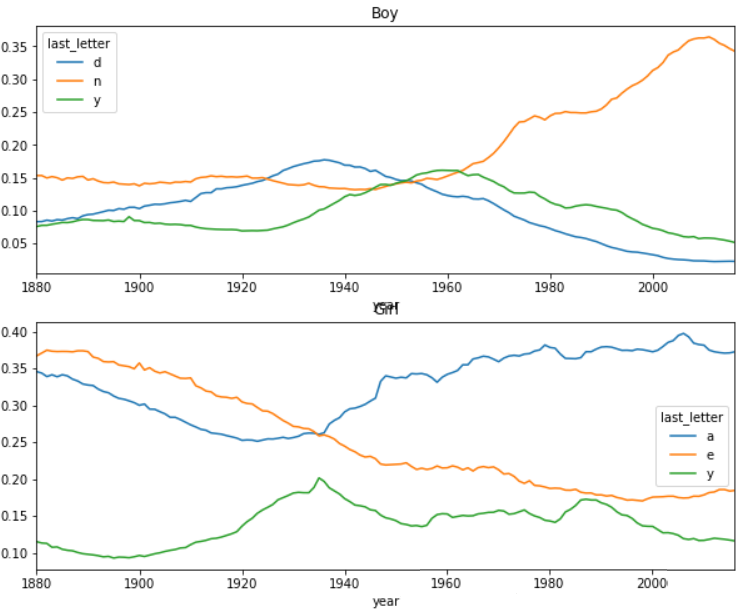

下面分别针对boy与girl挑选出最常见的名字尾字母,绘制出这些字母以随时间的变化曲线:

letter_prop=letter_table/letter_table.sum(axis=0)

boy_letter=letter_prop.loc[['d','n','y'],'M']

boy_letter_ts=boy_letter.T

girl_letter=letter_prop.loc[['a','e','y'],'F']

girl_letter_ts=girl_letter.T

|

plt.clf()

fig,axes=plt.subplots(2,1,figsize=(10,8))

boy_letter_ts.plot(ax=axes[0],title='Boy')

girl_letter_ts.plot(ax=axes[1],title='Girl')

plt.show()

|

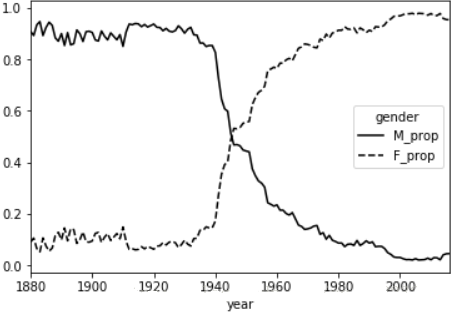

根据一个有趣的发现,表明有些男孩的名字正逐渐转向被更多的女孩使用,比如说Lesley和Leslie,下面就筛选出包含lesl的名字来验证这个说法:

uni_names=names.loc[:,'name'].unique()

#返回一个numpy数组

uni_names=pd.Series(uni_names)

mask=uni_names.str.lower().str.contains('lesl')

#ser->str->ser->str-bool_ser

lesl=uni_names[mask]

|

mask=names.loc[:,'name'].isin(lesl)

lesl_subset=names[mask] |

lesl_table=pd.pivot_table

(lesl_subset, values='number', index='year', columns='gender',

aggfunc='sum')

lesl_table.fillna(0,inplace=True)

lesl_table.loc[:,'M_prop']= lesl_table.loc[:,'M']/lesl_table.sum(axis=1)

lesl_table.loc[:,'F_prop']= lesl_table.loc[:,'F']/lesl_table.sum(axis=1)

|

plt.clf()

lesl_table.loc[:, ['M_prop','F_prop']].plot( style={'M_prop':'k-','F_prop':'k--'})

plt.show()

|

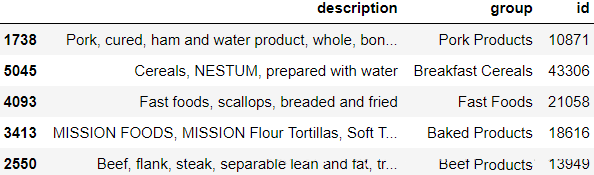

USDA Food Database

db=json.load(open('datasets/usda_food/database.json'))

len(db) |

6636

这里每个条目包含的信息太多,不给出截图了。

可以看到数据中每个条目包含以下信息:

- description

- group

- id

- manufacturer

- nutrients:营养成分,字典的列表

- portions

- tags

因为nutrients项是一个字典的列表,如果将db直接转化为dataframe的话这一项就会被归到一个列中,非常拥挤。为了便于理解,创建两个df,一个包含除了nutrients之外的食物信息,而另一个包含id与nutrients信息,然后再将两者根据id合并。

keys=['description','group','id']

food_df=pd.DataFrame(db,columns=keys) |

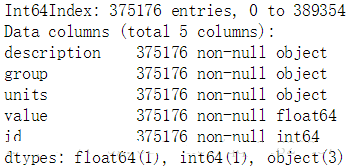

subsets=[]

for item in db:

id=item['id']

df=pd.DataFrame(item['nutrients'])

df.loc[:,'id']=id

subsets.append(df)

nutrients_df=pd.concat(subsets,ignore_index=True)

nutrients_df.drop_duplicates(inplace=True)

|

观察到两个表中出现了同样的列索引,为了合并表时不出现矛盾,更改列索引名称:

fd_col_map={

'description':'food',

'group':'fd_cat'

}

food_df=food_df.rename(columns=fd_col_map)

nt_col_map={

'description':'nutrient',

'group':'nt_cat'

}

nutrients_df=nutrients_df.rename(columns=nt_col_map)

|

print('{}\n{}'.format (food_df.columns,nutrients_df.columns))

|

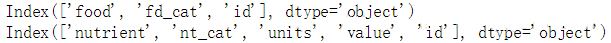

| data=pd.merge(food_df,nutrients_df,on='id',how='outer')

|

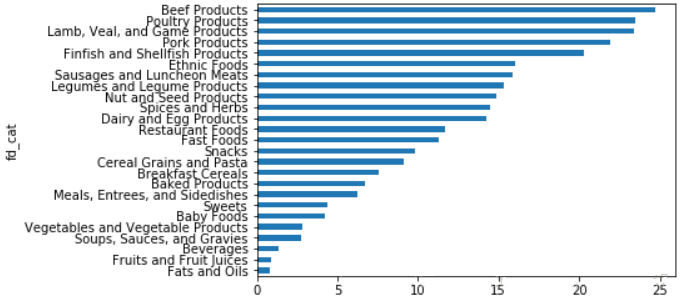

注意这个表中,唯一具有统计意义的值是value列,其余都是描述性信息。

假设现在需要统计哪种食物类别拥有的营养量均值,可以先将表对nutrient与fd_cat进行分组,再进行排序输出:

| nt_result=data.loc [:,'value'].groupby ([data.loc[:,'nutrient'], data.loc[:,'fd_cat']]).mean() |

plt.clf()

nt_result.loc ['Protein'].sort_values().plot(kind='barh')

#按蛋白质含量均值绘制图形

plt.show() |

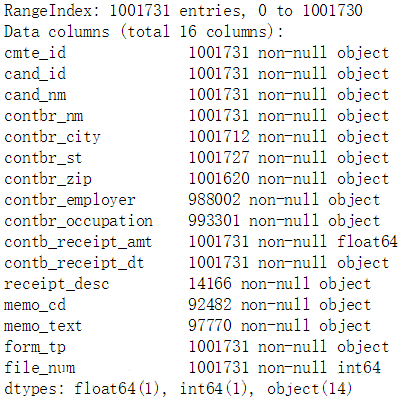

2012 Federal Election Commission Database

| fec=pd.read_csv('datasets/fec/P00000001-ALL.csv',low_memory=False)

#避免警告 |

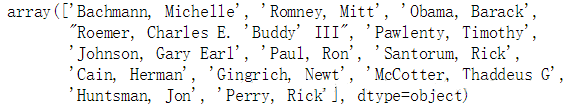

注意到数据中没有候选人所属的党派这一信息,所以可以考虑人为加上这一信息。首先统计出数据中有多少位候选人:

| fec.loc[:,'cand_nm'].unique() |

nm2pt={

'Bachmann, Michelle': 'Republican',

'Romney, Mitt': 'Republican',

'Obama, Barack': 'Democrat',

"Roemer,

Charles E. 'Buddy' III": 'Republican',

'Pawlenty, Timothy': 'Republican',

'Johnson, Gary Earl': 'Republican',

'Paul, Ron': 'Republican',

'Santorum, Rick': 'Republican',

'Cain, Herman': 'Republican',

'Gingrich, Newt': 'Republican',

'McCotter, Thaddeus G': 'Republican',

'Huntsman, Jon': 'Republican',

'Perry, Rick': 'Republican',

}

fec.loc[:,'cand_pt']=fec.loc[:,'cand_nm'].map(nm2pt)

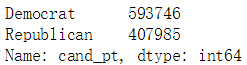

|

fec.loc[:,'cand_pt'].value_counts()

|

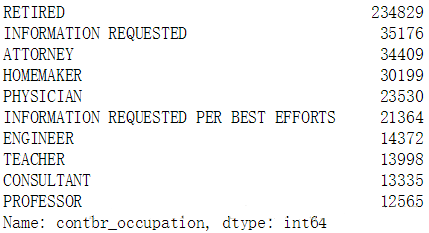

据说有一个现象,律师会倾向于捐给民主党,而经济人士会倾向于捐给共和党,下面就来验证这一说法:

fec.loc[:,'contbr_occupation'].value_counts()[:10]

|

occ_map={

'INFORMATION REQUESTED PER BEST EFFORTS':'UNKNOW',

'INFORMATION REQUESTED':'UNKNOW',

'C.E.O.':'CEO' #这一条是在后面分析中发现的项

}

f=lambda x:occ_map.get(x,x) #获取x对应的value,如果没有对应的value则返回x

|

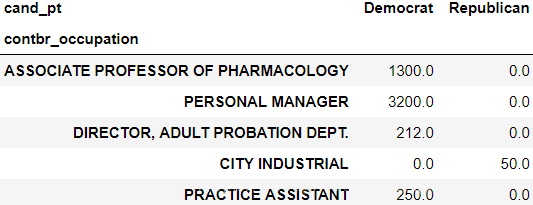

fec.loc[:,'contbr_occupation'] =fec.loc [:,'contbr_occupation'].map(f)

by_occupation=pd.pivot_table (fec,values ='contb_receipt_amt', index= 'contbr_occupation', columns='cand_pt',aggfunc='sum')

by_occupation.fillna(0,inplace=True)

by_occupation.sample(5)

|

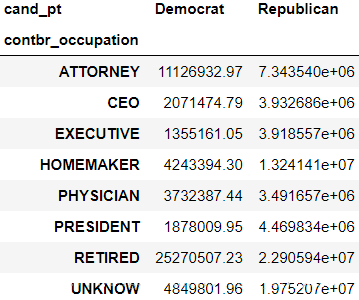

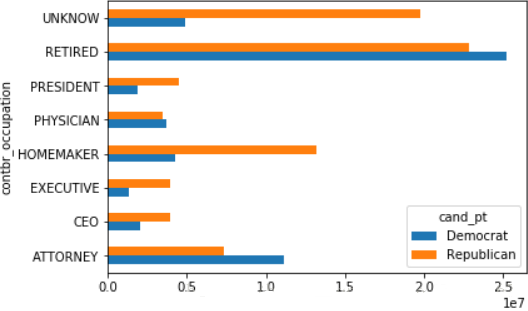

看出捐献金额分布的极度不平衡,我们只选出总数大于5e6的条目:

mask=by_occupation.sum(axis=1)>5e6

over5mm=by_occupation[mask]

over5mm |

plt.clf()

over5mm.plot(kind='barh')

plt.show() |

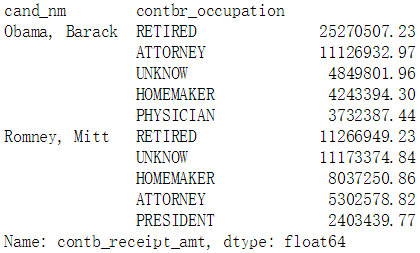

下面我们对Obama Barack与Romney Mitt的数据进行分析:

mask=fec.loc[:,'cand_nm'].isin (['Obama,

Barack','Romney, Mitt'])

fec_subset=fec[mask] |

假设需要分别统计出对这两个人支持最大的各职业,可以这样做:

def get_top(group,key,n=5):

totals=group.groupby(key)['contb_receipt_amt'].sum()

return totals.nlargest(n) |

grouped=fec_subset.groupby('cand_nm')

grouped.apply(get_top,'contbr_occupation',5) |

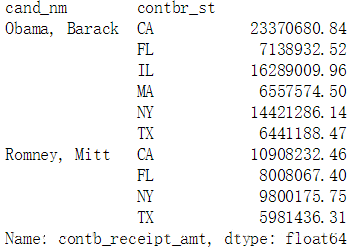

下面看各州对两人的支持情况:

by_stat=fec_subset.groupby(['cand_nm','contbr_st'])['contb_receipt_amt'].sum(axes=0)

mask=by_stat>5e6

by_stat=by_stat[mask] |

|