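Editor's recommendation:

This article is from CSDN. It introduces Spark, then walks through building a Hadoop cluster, installing Scala, and installing and configuring Spark. We hope it helps with your studies.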

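Spark is one of the most popular big-data compute engines among the Apache top-level projects. It handles big-data computation of many kinds: offline (batch) computation and interactive queries, data-mining algorithms, stream processing, and graph computation.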

The Spark ecosystem

Its core components are:

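Spark Core: contains Spark's basic functionality. In particular, it provides the API that defines RDDs (Resilient Distributed Datasets) and the transformations and actions on them. The other Spark libraries are built on top of RDDs and Spark Core.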

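Spark SQL: provides an API for interacting with Spark through the Hive Query Language (HiveQL), the SQL variant of Apache Hive. Each database table is treated as an RDD, and Spark SQL queries are translated into Spark operations. People who already know Hive and HiveQL can use Spark right away.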

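Spark Streaming: enables processing and control of live data streams. Many real-time systems, such as Apache Storm, can process real-time data. Spark Streaming lets programs process real-time data the same way they process ordinary RDDs.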

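MLlib: a library of common machine-learning algorithms, implemented as Spark operations on RDDs. It contains scalable learning algorithms, such as classification and regression, that need to iterate over large datasets. Mahout, previously the main machine-learning library for big data, was announced to move to Spark in the future.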

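GraphX: a collection of algorithms and tools for manipulating graphs and for performing parallel graph operations and computations. GraphX extends the RDD API with operations for manipulating graphs, creating subgraphs, and accessing all the vertices on a path.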

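These components meet many big-data needs, as well as the algorithmic and computational needs of many data-science tasks, so Spark became popular quickly. Spark also provides APIs in Scala, Java, and Python. This serves different communities and lets more data scientists easily adopt Spark as their big-data solution.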

Spark storage layer

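Spark can read any file on the Hadoop Distributed File System (HDFS) as a distributed dataset. It also supports other storage systems that implement the Hadoop interfaces, such as the local file system, Amazon S3, Hive, and HBase.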

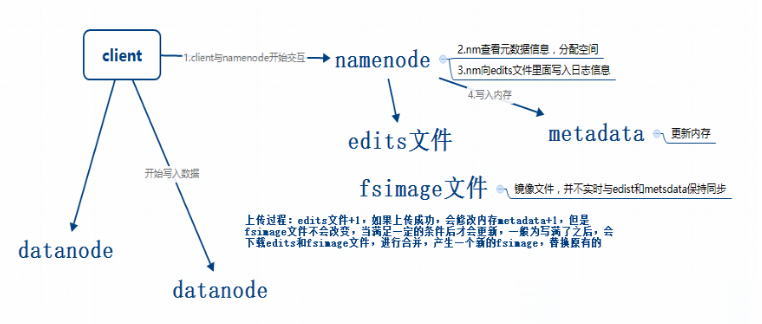

The figure below shows the relationship between Hadoop and the nodes:

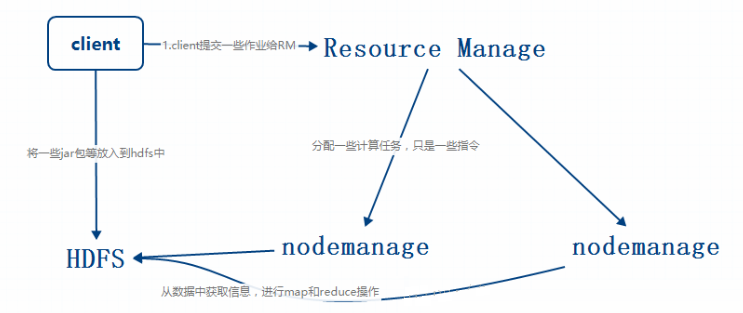

Spark on YARN

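Apache Hadoop YARN (Yet Another Resource Negotiator) is the newer Hadoop resource manager. It is a general-purpose resource-management system that provides unified resource management and scheduling for the applications running on top of it.

At the core of YARN's layered architecture is the ResourceManager, the entity that controls the whole cluster and manages how the underlying compute resources are allocated to applications. The ResourceManager hands out the individual resources (compute, memory, bandwidth, and so on) to the underlying NodeManagers, which are YARN's per-node agents.

YARN was introduced in Hadoop 2. Since then, a Hadoop cluster is no longer limited to MapReduce: other compute engines such as Spark can run on it as well.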

1. Hadoop cluster setup (master + slave01)

Preparing the cluster machines

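<1> In VMware, prepare two Ubuntu 14.04 virtual machines and set their hostnames to master and slave01. Their IP addresses appear in the /etc/hosts entries below; adjust them to your own network.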

<2> Edit /etc/hosts on both master and slave01 as follows:

```
127.0.0.1 localhost
192.168.1.123 master
192.168.1.124 slave01
```

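Use ping to test connectivity between the two hosts, for example by pinging slave01 from master and master from slave01.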
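The hosts step is easy to get wrong on re-runs, because you can end up with duplicate entries. Below is a minimal POSIX shell sketch that does two things:

- It appends an entry only if the hostname is not already listed.
- It then pings every host once.

`add_host`, `check_hosts` and the `HOSTS_FILE` override are hypothetical names used for illustration. Writing the real /etc/hosts requires root.

```shell
# Minimal sketch (POSIX sh): add /etc/hosts entries idempotently, then ping.
# HOSTS_FILE is an illustrative override; writing /etc/hosts needs root.
HOSTS_FILE="${HOSTS_FILE:-/etc/hosts}"

# add_host IP NAME: append "IP NAME" unless NAME already appears as a hostname
add_host() (
  ip="$1"; name="$2"
  # skip comment lines; look for NAME in any field after the address
  if awk -v n="$name" '/^[ \t]*#/ {next}
      {for (i = 2; i <= NF; i++) if ($i == n) found = 1}
      END {exit !found}' "$HOSTS_FILE" 2>/dev/null; then
    echo "skip: $name already in $HOSTS_FILE"
  else
    printf '%s %s\n' "$ip" "$name" >> "$HOSTS_FILE"
    echo "added: $ip $name"
  fi
)

# check_hosts NAME...: ping each host once and report whether it answered
check_hosts() {
  for h in "$@"; do
    if ping -c 1 "$h" >/dev/null 2>&1; then
      echo "$h reachable"
    else
      echo "$h UNREACHABLE"
    fi
  done
}
```

To use it, load the functions in a root shell on each machine. Then run `add_host 192.168.1.123 master`, `add_host 192.168.1.124 slave01`, and `check_hosts master slave01`.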

Configuring passwordless SSH access within the cluster

<1> Run the following commands on each of the two hosts:

```shell
ssh-keygen -t dsa -P '' -f ~/.ssh/id_dsa
cat ~/.ssh/id_dsa.pub >> ~/.ssh/authorized_keys
```

<2> On slave01, copy its public key id_dsa.pub to master:

```shell
scp ~/.ssh/id_dsa.pub itcast@master:/home/itcast/.ssh/id_dsa.pub.slave01
```

<3> On master, append slave01's public key to master's authorized_keys file:

```shell
cd ~/.ssh
cat id_dsa.pub.slave01 >> authorized_keys
```

<4> Copy master's authorized_keys file into the .ssh directory on slave01. Run this from ~/.ssh on master:

```shell
scp authorized_keys itcast@slave01:/home/itcast/.ssh/authorized_keys
```
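Steps <1> to <4> append keys with `cat >>`, so repeating a step adds duplicate lines. The sketch below is in POSIX shell, and `merge_keys` is a hypothetical helper, not an OpenSSH tool. It does two things:

- It merges public-key files into an authorized_keys file, appending a key line only when it is new.
- It sets the file to mode 600, because sshd's default StrictModes check ignores key files that group or others can write.

```shell
# Minimal sketch (POSIX sh): merge public keys into authorized_keys without
# duplicates. merge_keys is a hypothetical helper, not an OpenSSH tool.
# Keep ~/.ssh itself at mode 700 as well (chmod 700 ~/.ssh).

# merge_keys AUTH_FILE PUBKEY_FILE...: append each key line not yet present
merge_keys() (
  auth="$1"; shift
  touch "$auth"
  for pub in "$@"; do
    # "|| [ -n "$line" ]" also keeps a last line that has no trailing newline
    while IFS= read -r line || [ -n "$line" ]; do
      [ -n "$line" ] || continue                        # ignore blank lines
      grep -qxF -- "$line" "$auth" || printf '%s\n' "$line" >> "$auth"
    done < "$pub"
  done
  chmod 600 "$auth"   # owner read/write only
)
```

On master, after step <2> has copied slave01's key over, run `merge_keys ~/.ssh/authorized_keys ~/.ssh/id_dsa.pub.slave01`. To confirm it worked, run `ssh slave01 hostname` from master and `ssh master hostname` from slave01. Both should print the remote hostname without asking for a password.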

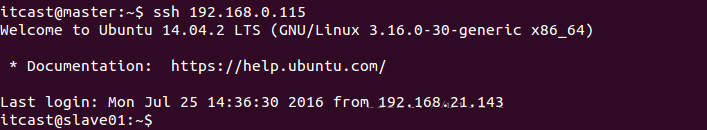

SSH into slave01:

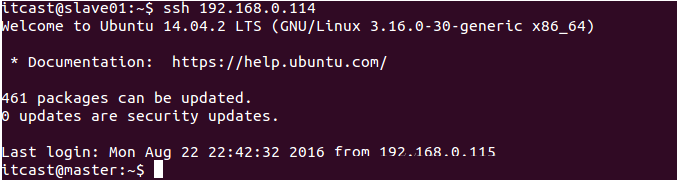

SSH into master:

Installing the JDK and Hadoop packages

<1> Use the JDK 7u80 package. All software is extracted under /opt/software/. Add the following environment variables on both master and slave01:

```shell
export JAVA_HOME=/opt/software/java/jdk1.7.0_80
export JRE_HOME=/opt/software/java/jdk1.7.0_80/jre
export CLASSPATH=.:$JAVA_HOME/lib:$JRE_HOME/lib:$CLASSPATH
export PATH=$PATH:$JAVA_HOME/bin:$JRE_HOME/bin
```

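<2> Use the Hadoop 2.6.0 package, also extracted under /opt/software/. Add the following environment variable on both master and slave01. Remember to add Hadoop's bin directory to PATH, and sbin too, since it holds the start-*.sh scripts:

```shell
export HADOOP_HOME=/opt/software/hadoop/hadoop-2.6.0
```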
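Before moving on, confirm two things for each variable: that it points at a real directory, and that the matching command is on PATH. Here is a minimal POSIX shell sketch. `check_env` is a hypothetical helper name, and the check assumes you have already reloaded the profile that holds these exports.

```shell
# Minimal sketch (POSIX sh): check_env is a hypothetical helper.
# check_env NAME DIR TOOL: verify DIR is a directory and TOOL resolves on PATH
check_env() {
  if [ ! -d "$2" ]; then
    echo "FAIL $1: '$2' is not a directory"
    return 1
  fi
  if ! command -v "$3" >/dev/null 2>&1; then
    echo "FAIL $1: '$3' not found on PATH"
    return 1
  fi
  echo "OK   $1=$2 ($3 -> $(command -v "$3"))"
}
```

For example, run `check_env JAVA_HOME "$JAVA_HOME" java`. Once Hadoop's bin directory is on PATH, also run `check_env HADOOP_HOME "$HADOOP_HOME" hadoop`.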

1.2. Configuring the Hadoop environment

Cluster configuration

<1> In /opt/software/hadoop/hadoop-2.6.0/etc/hadoop, edit hadoop-env.sh and add the following:

```shell
export JAVA_HOME=/opt/software/java/jdk1.7.0_80
export HADOOP_PREFIX=/opt/software/hadoop/hadoop-2.6.0
```

<2> Edit core-site.xml. Create the tmp directory beforehand.

```xml
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://master:9000</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/opt/software/hadoop/hadoop-2.6.0/tmp</value>
  </property>
</configuration>
```

<3> Edit hdfs-site.xml to set the number of data replicas:

```xml
<configuration>
  <property>
    <name>dfs.replication</name>
    <value>2</value>
  </property>
</configuration>
```

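<4> Edit mapred-site.xml. Hadoop 2.6.0 ships only mapred-site.xml.template, so copy it to mapred-site.xml first.

```xml
<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
</configuration>
```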

<5> Set JAVA_HOME in yarn-env.sh as well, using the same export JAVA_HOME line as in hadoop-env.sh.

<6> Edit yarn-site.xml:

```xml
<configuration>
  <!-- Site specific YARN configuration properties -->
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
  <property>
    <name>yarn.resourcemanager.hostname</name>
    <value>master</value>
  </property>
</configuration>
```

<7> Add the cluster hostnames to the slaves file.

Apply the same configuration on slave01.
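Steps <1> to <7> can also be scripted. That way, "apply the same configuration on slave01" means rerunning a single function. The POSIX shell sketch below does the following:

- It writes the four *-site.xml files and the slaves file with the values above.
- It creates the tmp directory.
- It appends the JAVA_HOME line to hadoop-env.sh and yarn-env.sh, only once.

Keep these caveats in mind:

- The function names are hypothetical.
- It overwrites the XML and slaves files it writes.
- It does not add the HADOOP_PREFIX line.
- Listing both master and slave01 in slaves is an assumption, because the article does not show that file's contents. Adjust it to your layout.

```shell
# Minimal sketch (POSIX sh): generate the Hadoop config from steps <1>-<7>.
# Function names are hypothetical; the values mirror this article's setup.

# prop NAME VALUE: print one <property> element
prop() {
  printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
}

# site FILE NAME VALUE [NAME VALUE ...]: write a complete *-site.xml file
site() {
  file="$1"; shift
  {
    echo '<?xml version="1.0"?>'
    echo '<configuration>'
    while [ "$#" -ge 2 ]; do prop "$1" "$2"; shift 2; done
    echo '</configuration>'
  } > "$file"
}

# write_hadoop_conf [CONF_DIR] [TMP_DIR]
write_hadoop_conf() {
  conf="${1:-/opt/software/hadoop/hadoop-2.6.0/etc/hadoop}"
  tmp="${2:-/opt/software/hadoop/hadoop-2.6.0/tmp}"
  mkdir -p "$conf" "$tmp"          # hadoop.tmp.dir must exist beforehand
  site "$conf/core-site.xml" fs.defaultFS hdfs://master:9000 hadoop.tmp.dir "$tmp"
  site "$conf/hdfs-site.xml" dfs.replication 2
  site "$conf/mapred-site.xml" mapreduce.framework.name yarn
  site "$conf/yarn-site.xml" yarn.nodemanager.aux-services mapreduce_shuffle \
       yarn.resourcemanager.hostname master
  # assumption: both machines run a DataNode and a NodeManager
  printf '%s\n' master slave01 > "$conf/slaves"
  # steps <1> and <5>: point both env scripts at the JDK, appended only once
  for f in hadoop-env.sh yarn-env.sh; do
    grep -qs '^export JAVA_HOME=/opt/software/java/jdk1.7.0_80$' "$conf/$f" ||
      echo 'export JAVA_HOME=/opt/software/java/jdk1.7.0_80' >> "$conf/$f"
  done
}
```

Run `write_hadoop_conf` on master. Then either run it on slave01 as well, or copy the directory over with `scp -r /opt/software/hadoop/hadoop-2.6.0/etc/hadoop itcast@slave01:/opt/software/hadoop/hadoop-2.6.0/etc/`.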

Starting the Hadoop cluster

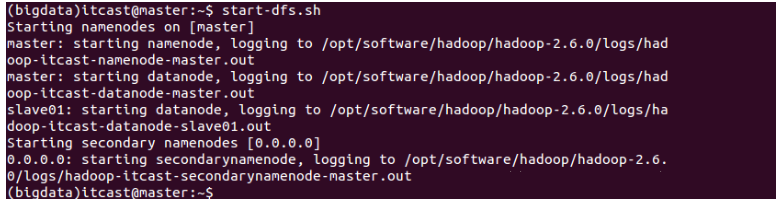

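<1> Before the very first start, format HDFS once on master with `hdfs namenode -format`. Then run start-dfs.sh to start the NameNode and DataNodes. Check the Java processes with the jps command on master and slave01, or open http://master:50070/.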

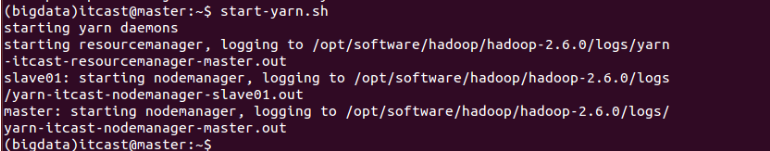

<2> Run start-yarn.sh to start the ResourceManager and NodeManagers, then check the Java processes with jps on master and slave01.

If all of the above succeeds, the cluster has started successfully.

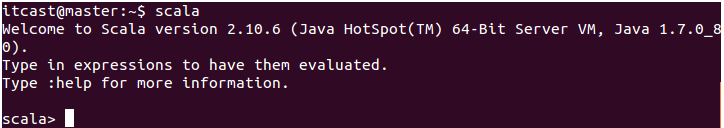
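Instead of reading the jps output by eye, a small POSIX shell filter can report which expected daemons are missing. `check_daemons` is a hypothetical name, and which daemons run where depends on your slaves file. With the configuration above, expect at least the following:

- On master: NameNode, SecondaryNameNode and ResourceManager.
- On slave01: DataNode and NodeManager.

```shell
# Minimal sketch (POSIX sh): check_daemons is a hypothetical helper.
# check_daemons EXPECTED...: read `jps` output ("PID Name" per line) on stdin
# and report which of the expected process names are not present.
check_daemons() {
  out=$(cat)
  missing=""
  for proc in "$@"; do
    if ! printf '%s\n' "$out" | awk -v p="$proc" '$2 == p {found = 1} END {exit !found}'; then
      missing="$missing $proc"
    fi
  done
  if [ -z "$missing" ]; then
    echo "all running: $*"
  else
    echo "missing:$missing"
    return 1
  fi
}
```

On master, run `jps | check_daemons NameNode SecondaryNameNode ResourceManager`. On slave01, run `jps | check_daemons DataNode NodeManager`.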

1.3. Installing Scala

<1> Use the scala-2.10.6 package, likewise extracted to /opt/software/scala/. Create the folders yourself as needed.

<2> Edit the .bashrc file in your home directory and add the following variables. Remember to add Scala's bin directory to PATH.

```shell
export SCALA_HOME=/opt/software/scala/scala-2.10.6
export SPARK_HOME=/opt/software/spark/spark-1.6.0-bin-hadoop2.6
```

Run `source ~/.bashrc` to apply the environment variables.

<3> Do the same on slave01.

<4> Run the scala command to check that the installation works.
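The prebuilt spark-1.6.0-bin-hadoop2.6 package is compiled against Scala 2.10, so check the version, not just that `scala` starts. Here is a minimal POSIX shell sketch. `check_scala` is a hypothetical helper that parses the `version X.Y.Z` part of the banner `scala -version` prints. That banner may go to stderr, hence the `2>&1` in the usage below.

```shell
# Minimal sketch (POSIX sh): check_scala is a hypothetical helper.
# check_scala PREFIX: read `scala -version` output on stdin, extract the
# version number, and check that it is PREFIX or starts with "PREFIX."
check_scala() {
  v=$(sed -n 's/.*version \([0-9][0-9.]*\).*/\1/p' | head -n 1)
  case "$v" in
    "") echo "could not parse a Scala version"; return 1 ;;
    "$1"|"$1".*) echo "Scala $v OK"; return 0 ;;
    *) echo "Scala $v does not match $1.x"; return 1 ;;
  esac
}
```

To use it, run `scala -version 2>&1 | check_scala 2.10` on master and on slave01.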

1.4. Installing and configuring Spark

Installing Spark

<1> Use the spark-1.6.0-bin-hadoop2.6 package, likewise extracted, to /opt/software/spark/ so that it matches SPARK_HOME.

<2> Edit .bashrc and add the following:

```shell
export SPARK_HOME=/opt/software/spark/spark-1.6.0-bin-hadoop2.6
# The complete PATH setting
export PATH=$PATH:$JAVA_HOME/bin:$JRE_HOME/bin:$HADOOP_HOME/sbin:$HADOOP_HOME/bin:$SCALA_HOME/bin:$SPARK_HOME/bin:$SPARK_HOME/sbin
```

Run `source ~/.bashrc` to apply the environment variables.
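If one of the *_HOME variables points at the wrong place, the matching PATH entries silently refer to directories that do not exist. This happens, for example, if Spark is unpacked somewhere other than where SPARK_HOME says. `check_path` is a hypothetical POSIX shell helper that prints every PATH entry that is not an existing directory. It takes an optional colon-separated string and defaults to the current `$PATH`.

```shell
# Minimal sketch (POSIX sh): check_path is a hypothetical helper.
# check_path [PATHSTRING]: list entries that are not existing directories;
# exits 1 if any are found. The body runs in a subshell, so IFS and set -f
# do not leak into the caller.
check_path() (
  set -f               # no filename globbing while splitting
  IFS=:
  status=0
  for dir in ${1-$PATH}; do
    if [ ! -d "$dir" ]; then
      echo "missing: '$dir'"
      status=1
    fi
  done
  exit "$status"
)
```

After `source ~/.bashrc`, a plain `check_path` with no arguments should print nothing.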

Configuring Spark

<1> Go to the conf directory under the Spark installation directory and copy spark-env.sh.template to spark-env.sh:

```shell
cp spark-env.sh.template spark-env.sh
```

Edit spark-env.sh and add the following settings:

```shell
export SCALA_HOME=/opt/software/scala/scala-2.10.6
export JAVA_HOME=/opt/software/java/jdk1.7.0_80
# must be master's address (192.168.1.123 in /etc/hosts above)
export SPARK_MASTER_IP=192.168.1.123
export SPARK_WORKER_MEMORY=1g
export HADOOP_CONF_DIR=/opt/software/hadoop/hadoop-2.6.0/etc/hadoop
```

<2> Copy slaves.template to slaves and edit its contents as follows.

This file lists the hosts on which workers are started.

<3> Configure slave01 the same way as master.

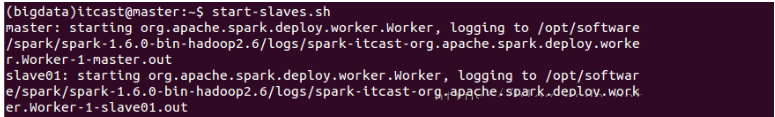

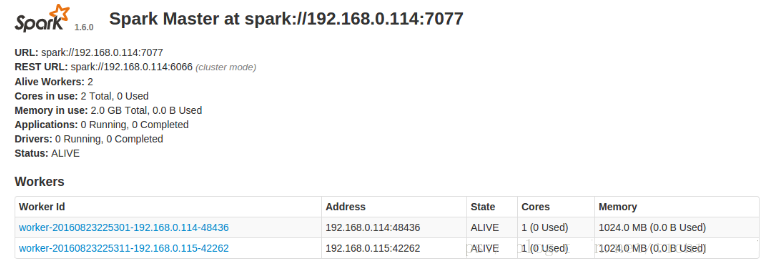
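The Spark configuration steps can be scripted the same way. `write_spark_conf` is a hypothetical POSIX shell helper that does three things:

- It creates conf/spark-env.sh from the template if needed.
- It appends the settings above once, guarded by a marker comment.
- It writes conf/slaves.

Two values are assumptions. The worker list (master and slave01) is assumed, because the article does not list the slaves contents in text. SPARK_MASTER_IP uses master's address from /etc/hosts.

```shell
# Minimal sketch (POSIX sh): write_spark_conf is a hypothetical helper.
# write_spark_conf [SPARK_HOME]: fill in conf/spark-env.sh and conf/slaves
write_spark_conf() {
  conf="${1:-/opt/software/spark/spark-1.6.0-bin-hadoop2.6}/conf"
  marker='# cluster settings for master + slave01'
  [ -f "$conf/spark-env.sh" ] ||
    cp "$conf/spark-env.sh.template" "$conf/spark-env.sh" || return 1
  # append the settings only if the marker line is not there yet
  if ! grep -qxF "$marker" "$conf/spark-env.sh"; then
    cat >> "$conf/spark-env.sh" <<EOF
$marker
export SCALA_HOME=/opt/software/scala/scala-2.10.6
export JAVA_HOME=/opt/software/java/jdk1.7.0_80
export SPARK_MASTER_IP=192.168.1.123
export SPARK_WORKER_MEMORY=1g
export HADOOP_CONF_DIR=/opt/software/hadoop/hadoop-2.6.0/etc/hadoop
EOF
  fi
  # one worker hostname per line (assumed: both machines run a Worker)
  printf '%s\n' master slave01 > "$conf/slaves"
}
```

Run it on master and on slave01, or copy the conf directory to slave01 with scp. Then continue with the startup steps.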

Starting the Spark cluster

<1> Start the Master node by running start-master.sh. The result is as follows:

<2> Start all worker nodes by running start-slaves.sh. The result is as follows:

<3> Run jps to check which processes have started.

<4> Open http://master:8080 in a browser to view the Spark cluster information.
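Once the Web UI lists the workers, a quick end-to-end check is to submit the SparkPi example that ships with Spark. `smoke_test` is a hypothetical POSIX shell helper, and it makes these assumptions:

- The examples jar sits under $SPARK_HOME/lib, as in the 1.6.x binary packages.
- For a standalone submit, you pass the spark:// URL shown at the top of the master Web UI (default port 7077).

Passing `yarn` instead runs the same job on YARN. That works because HADOOP_CONF_DIR is set in spark-env.sh. Set `DRY_RUN=1` to only print the command.

```shell
# Minimal sketch (POSIX sh): smoke_test is a hypothetical helper.
# smoke_test MASTER_URL: submit the bundled SparkPi example with 10 slices.
# MASTER_URL is e.g. the spark://HOST:7077 URL from the Web UI, or "yarn".
smoke_test() {
  if [ "$#" -lt 1 ]; then
    echo "usage: smoke_test MASTER_URL   (spark://HOST:7077 or yarn)" >&2
    return 2
  fi
  master="$1"
  # assumption: examples jar location in the 1.6.x binary packages
  jar=$(ls "$SPARK_HOME"/lib/spark-examples-*.jar 2>/dev/null | head -n 1)
  if [ -z "$jar" ]; then
    echo "examples jar not found under $SPARK_HOME/lib" >&2
    return 1
  fi
  set -- "$SPARK_HOME/bin/spark-submit" --master "$master" \
         --class org.apache.spark.examples.SparkPi "$jar" 10
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$@"; else "$@"; fi
}
```

In client mode, the driver output should contain a line beginning with `Pi is roughly`.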
