Editor's note: this article comes from cnblogs. It targets the Windows build of Eclipse and describes an alternative way to install, import, and use the plugin.

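Hadoop Development Tools (HDT) is an Eclipse plugin for developing Hadoop applications. Its features, installation, and usage are described at http://hdt.incubator.apache.org/. This article describes an alternative way to install and use it with the Windows build of Eclipse.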

1 Download HDT

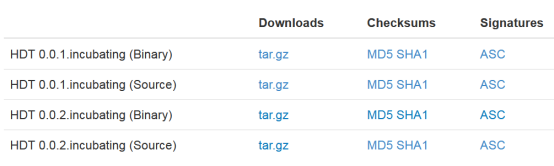

Open http://hdt.incubator.apache.org/download.html. Part of the page:

Download the HDT 0.0.2.incubating (Binary) release. Clicking "tar.gz" redirects to:

http://www.apache.org/dyn/closer.cgi/incubator/hdt/hdt-0.0.2.incubating/hdt-0.0.2.incubating-bin.tar.gz. Part of the page:

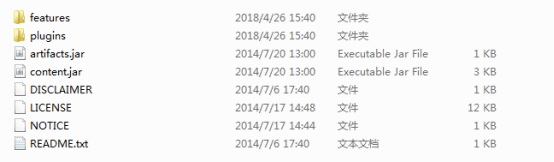

Click the link in the red box to download HDT, then extract the archive to see the folder contents:

2 Install the HDT plugin

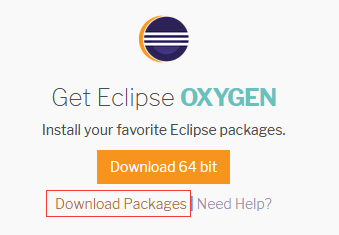

Download the latest Eclipse release at the time of writing (Eclipse Oxygen).

Click Download Packages.

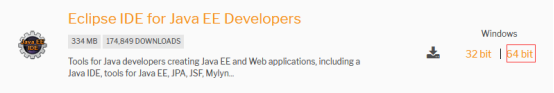

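Download the 64-bit build. The file is eclipse-jee-oxygen-3a-win32-x86_64.zip. Extract it:

Copy the files in HDT's features and plugins folders into the features and plugins folders of the extracted Eclipse directory.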
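The copy step can also be scripted. The following is a minimal sketch, not part of HDT or Eclipse: the class `HdtInstaller`, its method names, and the two default paths in `main` are hypothetical and only illustrate merging the two folders with `java.nio.file`.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.nio.file.StandardCopyOption;
import java.util.stream.Stream;

class HdtInstaller {

    /** Recursively copies the tree under src into dst and returns the number of files copied. */
    static int copyTree(Path src, Path dst) throws IOException {
        int copied = 0;
        try (Stream<Path> walk = Files.walk(src)) {
            for (Path p : (Iterable<Path>) walk::iterator) {
                Path target = dst.resolve(src.relativize(p).toString());
                if (Files.isDirectory(p)) {
                    Files.createDirectories(target);
                } else {
                    Files.copy(p, target, StandardCopyOption.REPLACE_EXISTING);
                    copied++;
                }
            }
        }
        return copied;
    }

    /** Merges HDT's features and plugins folders into the same-named Eclipse folders. */
    static int install(Path hdtDir, Path eclipseDir) throws IOException {
        int total = 0;
        for (String name : new String[] {"features", "plugins"}) {
            total += copyTree(hdtDir.resolve(name), eclipseDir.resolve(name));
        }
        return total;
    }

    public static void main(String[] args) throws IOException {
        // Hypothetical default locations; pass the real ones as arguments.
        Path hdt = Paths.get(args.length > 0 ? args[0] : "E:\\hdt-0.0.2.incubating-bin");
        Path eclipse = Paths.get(args.length > 1 ? args[1] : "E:\\eclipse");
        System.out.println("Copied " + install(hdt, eclipse) + " files");
    }
}
```

Existing files with the same name are overwritten, so it is worth running this only against a fresh Eclipse directory.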

3 Download Hadoop and configure environment variables

Download Hadoop

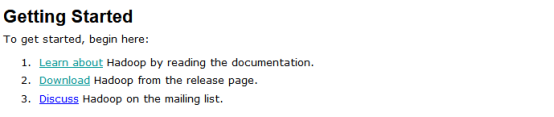

Go to http://hadoop.apache.org/ and find the section shown here.

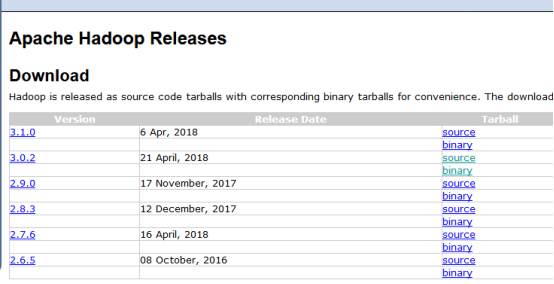

Click Download to open the download page:

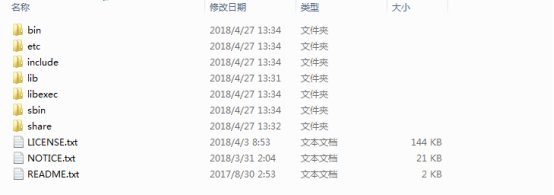

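Download the 2.6.5 binary release. Choose a nearby mirror (a mirror in China, in the author's case) so the download is faster. Extract the archive to a directory of your choice, for example E:\hadoop-2.6.5. The folder contains: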

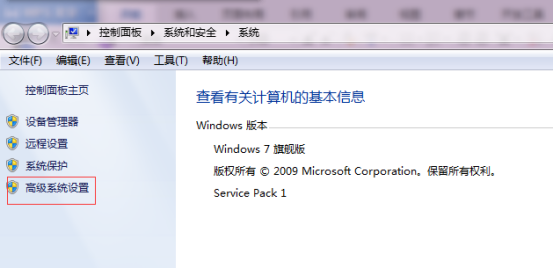

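Configure environment variables

Configure the HADOOP_HOME and HADOOP_USER_NAME environment variables, and PATH (a system variable).

Set HADOOP_HOME to E:\hadoop-2.6.5 and append %HADOOP_HOME%\bin to PATH.

Developing on Windows

Developing on Windows requires two additional files: winutils.exe and hadoop.dll.

Place winutils.exe in E:\hadoop-2.6.5\bin (that is, %HADOOP_HOME%\bin) and hadoop.dll in C:\Windows\System32.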
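A quick way to confirm the layout described above is a small check program. This is a sketch under the assumptions of this article (winutils.exe under `%HADOOP_HOME%\bin`, hadoop.dll under `C:\Windows\System32`); the class `HadoopWindowsCheck` and its method are hypothetical names, not part of Hadoop.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;

class HadoopWindowsCheck {

    /** Returns human-readable problems; an empty list means both files were found. */
    static List<String> findProblems(String hadoopHome, Path system32) {
        List<String> problems = new ArrayList<>();
        if (hadoopHome == null || hadoopHome.trim().isEmpty()) {
            problems.add("HADOOP_HOME is not set");
            return problems;
        }
        Path winutils = Paths.get(hadoopHome, "bin", "winutils.exe");
        if (!Files.isRegularFile(winutils)) {
            problems.add("missing " + winutils);
        }
        Path dll = system32.resolve("hadoop.dll");
        if (!Files.isRegularFile(dll)) {
            problems.add("missing " + dll);
        }
        return problems;
    }

    public static void main(String[] args) {
        // The System32 path follows the article; adjust it if Windows is installed elsewhere.
        List<String> problems =
                findProblems(System.getenv("HADOOP_HOME"), Paths.get("C:\\Windows\\System32"));
        if (problems.isEmpty()) {
            System.out.println("Hadoop Windows files look fine");
        } else {
            problems.forEach(System.out::println);
        }
    }
}
```

Environment variables changed while Eclipse is open are usually not visible to it until it is restarted, so run the check from a new process.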

4 Install HDT

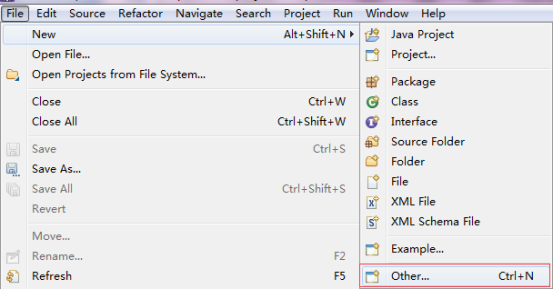

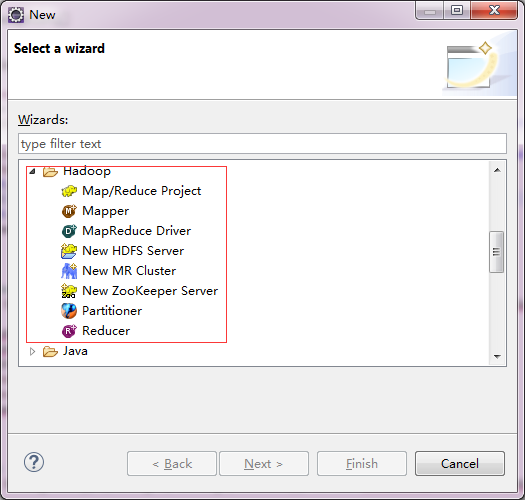

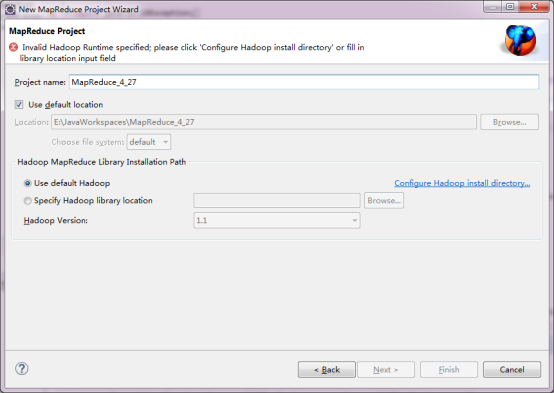

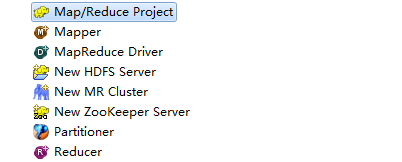

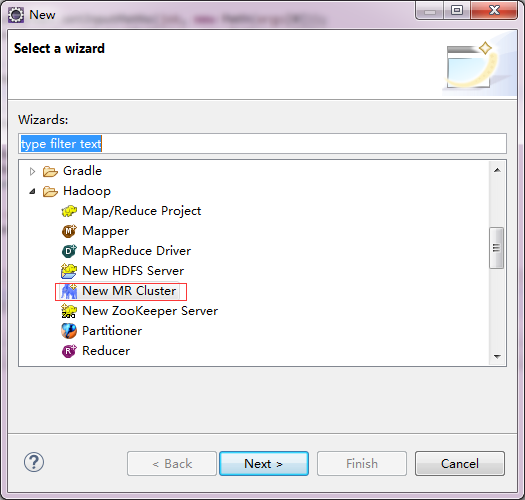

1) Click File -> New -> Other -> expand Hadoop, as shown in the two figures:

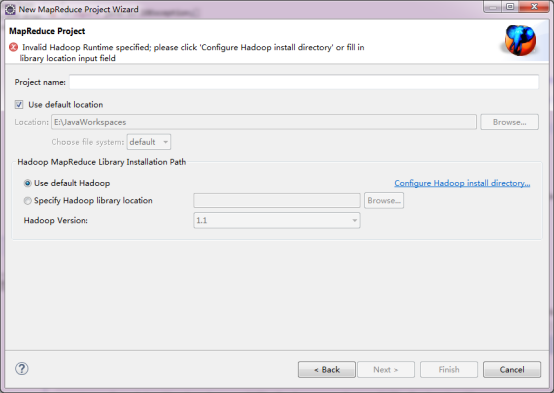

2) Make the selection shown in the figure:

Name the project MapReduce_4_27 and select "Use default Hadoop" (the default setting).

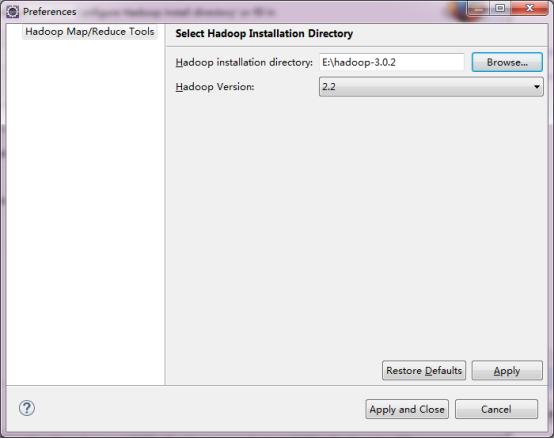

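3) Configure the Hadoop installation directory

Click the configuration option in the dialog from step 2). The value to set is the path where the Hadoop archive was extracted earlier.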

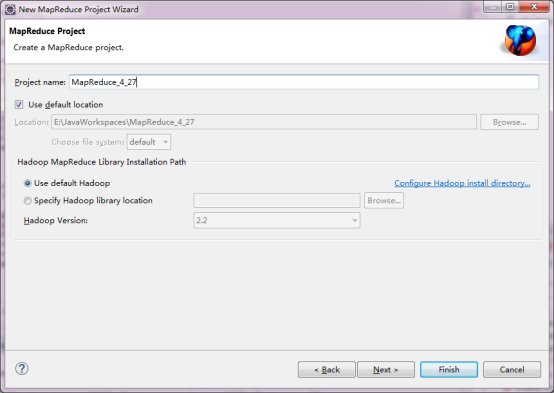

Click "Apply and Close"; the following screen appears:

Click to continue; the following screen appears:

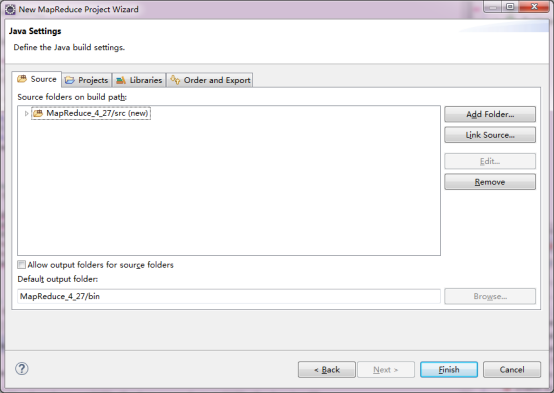

Finally, click "Finish".

4) Import the development libraries and the Javadoc

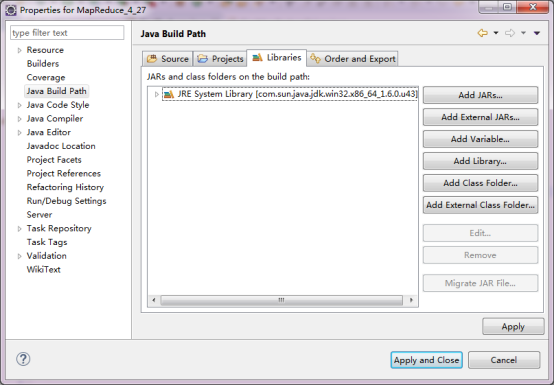

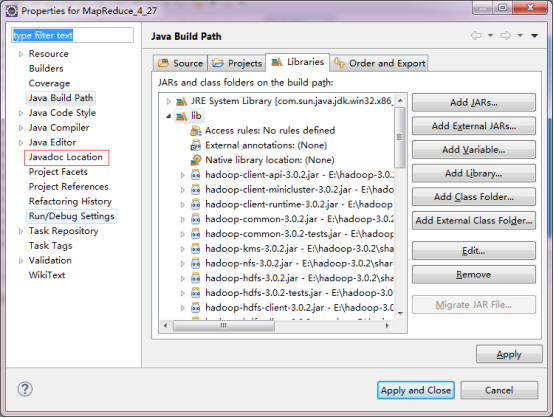

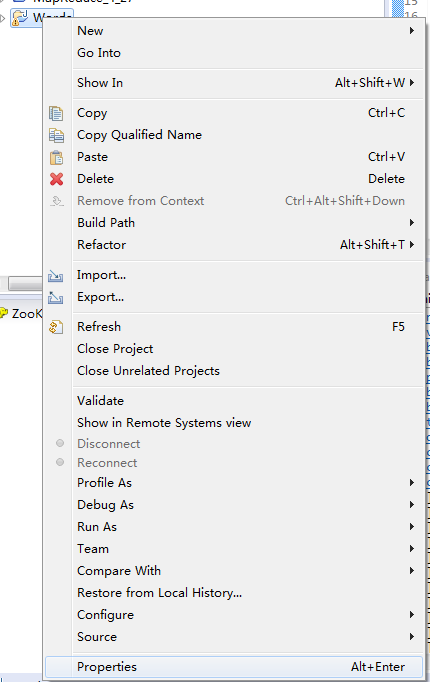

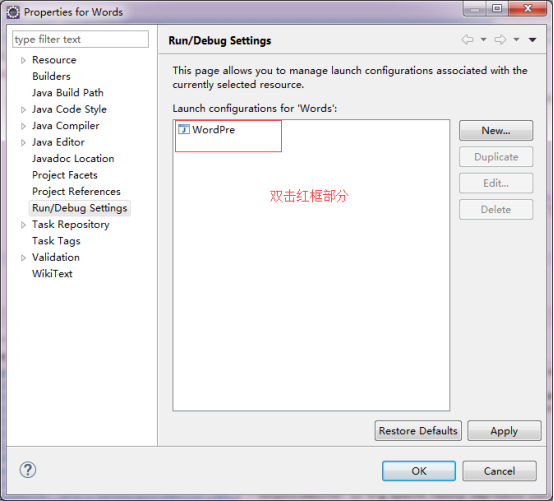

Right-click the project -> select Properties ->

In the list on the left of the dialog, select Java Build Path -> select Libraries ->

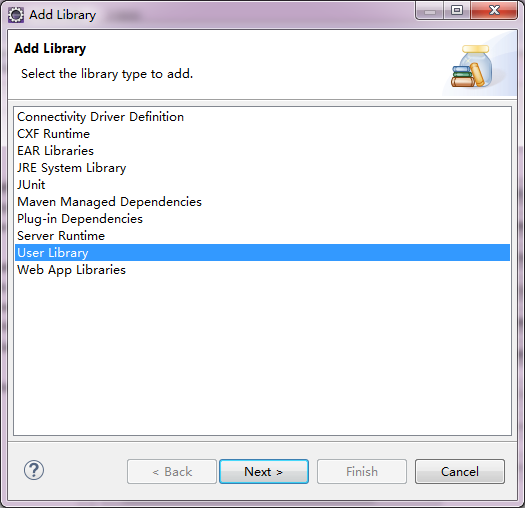

Select Add Library -> in the pop-up window select User Library -> click Next ->

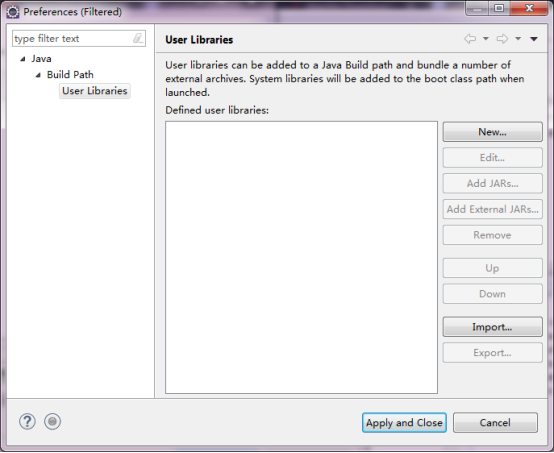

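Click User Libraries -> click New -> enter the required information in the pop-up window ->

Add the required JAR files.

The required JARs are under E:\hadoop-2.6.5\share\hadoop, inside the folder where the Hadoop archive was extracted earlier.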
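To see which JAR files are available before adding them to the user library, the directory can be scanned. This is a minimal sketch; `HadoopJarLister` is a hypothetical helper, and the default path in `main` is the extraction directory used in this article.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

class HadoopJarLister {

    /** Returns every *.jar file below root, sorted by path. */
    static List<Path> findJars(Path root) throws IOException {
        try (Stream<Path> walk = Files.walk(root)) {
            return walk.filter(Files::isRegularFile)
                    .filter(p -> p.getFileName().toString().endsWith(".jar"))
                    .sorted()
                    .collect(Collectors.toList());
        }
    }

    public static void main(String[] args) throws IOException {
        // Default follows the article's example directory; pass another root as an argument.
        Path root = Paths.get(args.length > 0 ? args[0] : "E:\\hadoop-2.6.5\\share\\hadoop");
        for (Path jar : findJars(root)) {
            System.out.println(jar);
        }
    }
}
```

The listing includes every JAR in the tree, so source and test archives may appear as well; which ones to add to the build path is a per-project choice.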

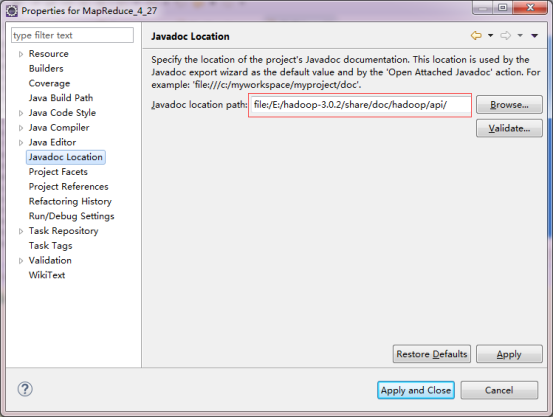

Import the Javadoc

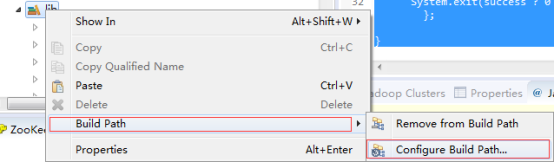

Right-click the lib folder -> click Build Path -> click Configure Build Path

Click Javadoc Location -> click Browse and select the path of the documentation.

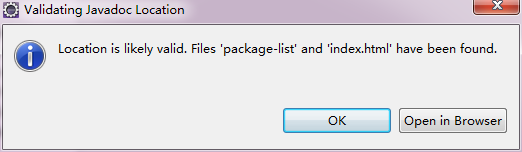

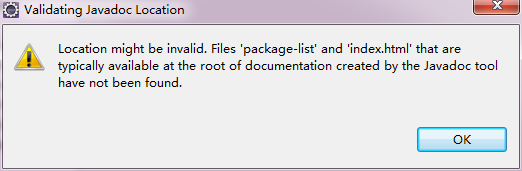

Click Validate to check whether the path is correct. The figures show the validation messages for a correct path and for an incorrect path.

5 Use HDT (MapReduce programming)

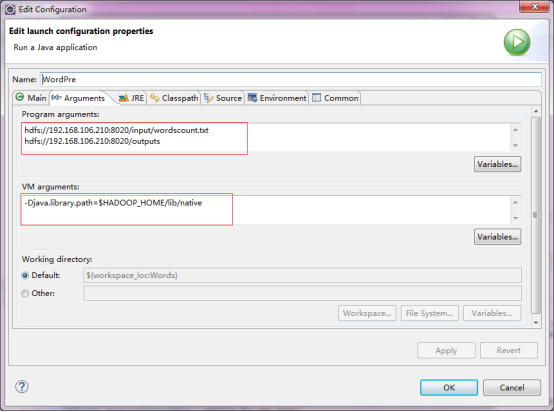

Set JVM arguments

After creating the Map/Reduce Project, set the JVM argument to:

-Djava.library.path=$HADOOP_HOME/lib/native
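Whether the argument took effect can be checked from inside the launched JVM. The sketch below only reports what the running JVM sees in `java.library.path`; the class `NativePathCheck` is a hypothetical helper, and it assumes the native libraries live under `lib/native` below `HADOOP_HOME`, as the argument above implies. If the launcher passes `$HADOOP_HOME` through without expanding it, the check will report the directory as missing.

```java
import java.io.File;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.regex.Pattern;

class NativePathCheck {

    /** True if libraryPath (entries separated by File.pathSeparator) contains dir. */
    static boolean containsDir(String libraryPath, String dir) {
        Path wanted = Paths.get(dir).toAbsolutePath().normalize();
        for (String entry : libraryPath.split(Pattern.quote(File.pathSeparator))) {
            if (entry.isEmpty()) {
                continue;
            }
            if (Paths.get(entry).toAbsolutePath().normalize().equals(wanted)) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        String home = System.getenv("HADOOP_HOME");
        if (home == null) {
            System.out.println("HADOOP_HOME is not set");
            return;
        }
        String nativeDir = Paths.get(home, "lib", "native").toString();
        String libraryPath = System.getProperty("java.library.path", "");
        System.out.println(containsDir(libraryPath, nativeDir)
                ? "java.library.path contains " + nativeDir
                : "java.library.path does NOT contain " + nativeDir);
    }
}
```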

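Mapper: create a subclass of the Mapper class

Example: the map function skeleton generated by the template

    import java.io.IOException;

    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Mapper;

    // Type parameters: input key, input value, output key, output value.
    public class Tmap extends Mapper<LongWritable, Text, Text, IntWritable> {

        @Override
        public void map(LongWritable key, Text value, Context context)
                throws IOException, InterruptedException {
            // TODO: process the record and emit results with context.write(...)
        }
    }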
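The generated map body is empty, and the article does not say what the job should compute. As an illustration only, the dependency-free sketch below assumes a word-count style job and shows the logic such a map step might contain: split one input line into tokens and emit a (word, 1) pair for each. `WordCountMapSketch` is a hypothetical class; in a real Mapper the pairs would be written with `context.write(...)` using `Text` and `IntWritable`.

```java
import java.util.AbstractMap;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.StringTokenizer;

class WordCountMapSketch {

    /** Emits one (word, 1) pair per whitespace-separated token in the line. */
    static List<Map.Entry<String, Integer>> map(long offset, String line) {
        List<Map.Entry<String, Integer>> emitted = new ArrayList<>();
        StringTokenizer tokens = new StringTokenizer(line);
        while (tokens.hasMoreTokens()) {
            emitted.add(new AbstractMap.SimpleEntry<>(tokens.nextToken(), 1));
        }
        return emitted;
    }

    public static void main(String[] args) {
        // The offset stands in for the LongWritable key and is unused by this logic.
        System.out.println(map(0L, "hello hadoop hello"));
    }
}
```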

Reducer: create a subclass of the Reducer class

Example: the reduce function skeleton generated by the template

    import java.io.IOException;

    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Reducer;

    // Type parameters: input key, input value, output key, output value.
    public class Treduce extends Reducer<Text, IntWritable, Text, IntWritable> {

        @Override
        public void reduce(Text key, Iterable<IntWritable> values, Context context)
                throws IOException, InterruptedException {
            // A for-each loop consumes each value for this key exactly once.
            for (IntWritable value : values) {
                // process value
            }
        }
    }
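To illustrate what the body of a reduce function might do, here is a dependency-free sketch that sums all values received for one key, which is what a word-count style job would need. That the job counts words is an assumption, not something the article states, and `SumReduceSketch` is a hypothetical class. In a real Reducer the values would be `IntWritable` and the result would be written with `context.write(key, new IntWritable(sum))`.

```java
import java.util.Arrays;

class SumReduceSketch {

    /** Adds up every value that was grouped under the given key. */
    static int reduce(String key, Iterable<Integer> values) {
        int sum = 0;
        // A for-each loop advances the iterator, so the loop always terminates.
        for (int value : values) {
            sum += value;
        }
        return sum;
    }

    public static void main(String[] args) {
        System.out.println("hello -> " + reduce("hello", Arrays.asList(1, 1, 1)));
    }
}
```

Iterating with for-each matters here: a loop that only tests `values.iterator().hasNext()` without calling `next()` never advances.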

MapReduce Driver: create the driver

Example: the driver skeleton generated by the template

    import java.io.IOException;

    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

    public class TMR {

        public static void main(String[] args)
                throws IOException, InterruptedException, ClassNotFoundException {
            // new Job() is deprecated in Hadoop 2.x; Job.getInstance() is the usual replacement.
            Job job = new Job();
            job.setJarByClass( ... ); // typically the driver class, e.g. TMR.class
            job.setJobName("a nice name");

            // args[0] is the input path, args[1] is the output path.
            FileInputFormat.setInputPaths(job, new Path(args[0]));
            FileOutputFormat.setOutputPath(job, new Path(args[1]));

            // TODO: specify a mapper
            job.setMapperClass( ... );
            // TODO: specify a reducer
            job.setReducerClass( ... );

            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(IntWritable.class);

            // Block until the job finishes and report success through the exit code.
            boolean success = job.waitForCompletion(true);
            System.exit(success ? 0 : 1);
        }
    }
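The driver only wires a mapper and a reducer together; the framework performs the grouping in between. As a rough mental model, and not how Hadoop is implemented, the in-memory sketch below runs a map function over each record, groups the emitted values by key, and calls a reduce function once per key. `LocalJobSketch` and everything in it are hypothetical, and the word-count functions in `main` are just sample inputs.

```java
import java.util.AbstractMap;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.function.BiFunction;
import java.util.function.Function;

class LocalJobSketch {

    /** Runs map, an in-memory group-by-key, and reduce over the input records. */
    static <K extends Comparable<K>, V, R> Map<K, R> run(
            List<String> input,
            Function<String, List<Map.Entry<K, V>>> mapper,
            BiFunction<K, List<V>, R> reducer) {
        // Map phase plus grouping: collect every emitted value under its key, keys sorted.
        Map<K, List<V>> grouped = new TreeMap<>();
        for (String record : input) {
            for (Map.Entry<K, V> pair : mapper.apply(record)) {
                grouped.computeIfAbsent(pair.getKey(), k -> new ArrayList<>())
                        .add(pair.getValue());
            }
        }
        // Reduce phase: one call per key with all of its values.
        Map<K, R> output = new TreeMap<>();
        for (Map.Entry<K, List<V>> group : grouped.entrySet()) {
            output.put(group.getKey(), reducer.apply(group.getKey(), group.getValue()));
        }
        return output;
    }

    public static void main(String[] args) {
        Function<String, List<Map.Entry<String, Integer>>> mapper = line -> {
            List<Map.Entry<String, Integer>> pairs = new ArrayList<>();
            for (String word : line.split("\\s+")) {
                pairs.add(new AbstractMap.SimpleEntry<>(word, 1));
            }
            return pairs;
        };
        BiFunction<String, List<Integer>, Integer> reducer = (word, ones) -> ones.size();
        System.out.println(run(Arrays.asList("a b a", "b c"), mapper, reducer));
        // prints {a=2, b=2, c=1}
    }
}
```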

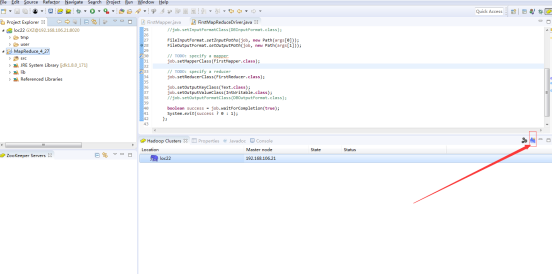

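New MR Cluster: cluster configuration

Click New MR Cluster, shown in the figure, to configure a cluster.

Alternatively, click the icon in Eclipse to configure a cluster: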

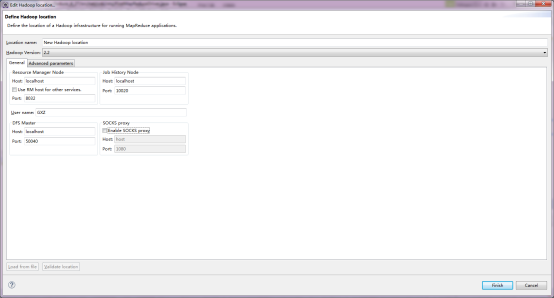

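The configuration page looks like this:

Resource Manager Node: the resource manager node, corresponding to the Hadoop configuration file.

DFS Master: the master node of the distributed file system, that is, the host and port of the NameNode. It corresponds to the value of fs.default.name in the configuration file.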
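The host and port for the DFS Master fields can be read off the fs.default.name value, which is a URI. The sketch below parses such a value with `java.net.URI`; the class `DfsMasterAddress` and the sample value `hdfs://namenode:9000` are hypothetical, so substitute the value from your own cluster configuration. In Hadoop 2.x the same setting is also known under the newer name fs.defaultFS.

```java
import java.net.URI;

class DfsMasterAddress {
    final String host;
    final int port; // -1 when the URI does not specify a port

    DfsMasterAddress(String host, int port) {
        this.host = host;
        this.port = port;
    }

    /** Extracts host and port from a value such as hdfs://namenode:9000. */
    static DfsMasterAddress parse(String fsDefaultName) {
        URI uri = URI.create(fsDefaultName.trim());
        if (!"hdfs".equalsIgnoreCase(uri.getScheme())) {
            throw new IllegalArgumentException("not an hdfs:// URI: " + fsDefaultName);
        }
        if (uri.getHost() == null) {
            throw new IllegalArgumentException("no host in: " + fsDefaultName);
        }
        return new DfsMasterAddress(uri.getHost(), uri.getPort());
    }

    public static void main(String[] args) {
        // Sample value only; it is not taken from the article.
        DfsMasterAddress address = parse("hdfs://namenode:9000");
        System.out.println("host=" + address.host + " port=" + address.port);
    }
}
```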