| 第一次听闻Spark是2013年年末,当时笔者对Scala(Spark的编程语言)感兴趣。一段时间之后做了一个有趣的数据科学项目,试图预测泰坦尼克号上的生还情况(Kaggle竞赛项目,通过使用机器学习预测泰坦尼克号上哪些乘客具备更高的生还可能性)。通过该项目可以更深入地理解Spark的概念和编程方式。

在本文Introduction to Apache Spark with Examples and Use

Cases,作者RADEK OSTROWSKI将通过Kaggle竞赛项目“预测泰坦尼克号上的生还情况”带大家深入学习Spark。

以下为译文

第一次听闻Spark是2013年年末,当时笔者对Scala(Spark的编程语言)感兴趣。一段时间之后做了一个有趣的数据科学项目,试图预测泰坦尼克号上的生还情况(Kaggle竞赛项目,通过使用机器学习预测泰坦尼克号上哪些乘客具备更高的生还可能性)。通过该项目可以更深入地理解Spark的概念和编程方式,强推荐想要精进Spark的开发人员拿该项目入手。

如今Spark在众多互联网公司被广泛采用,例如Amazon、eBay和Yahoo等。许多公司拥有运行在上千个节点的Spark集群。根据Spark

FAQ,已知最大的集群有着超过8000个节点。不难看出,Spark是一项值得关注和学习的技术。

本文通过一些实际案例和代码示例对Spark进行介绍,案例和代码示例部分出自Apache Spark官方网站,也有一部分出自《Learning

Spark - Lightning-Fast Big Data Analysis》一书。

什么是 Apache Spark? 初步介绍

Spark是Apache的一个项目,被宣传为"闪电般快速集群计算",它拥有繁荣的开源社区,同时也是目前最活跃的Apache项目。

Spark提供了一个更快更通用的数据处理平台。与Hadoop相比,运行在内存中的程序,Spark的速度可以提高100倍,即使运行在磁盘上,其速度也能提高10倍。去年,Spark在处理速度方面已经超越了Hadoop,仅利用十分之一于Hadoop平台的机器,却以3倍于Hadoop的速度完成了100TB数量级的Daytona

GreySort比赛,成为了PB级别排序速度最快的开源引擎。

通过使用Spark所提供的超过80个高级函数,让更快速地完成编码成为可能。大数据中的"Hello

World!"(编程语言延续下来一个惯例):Word Count程序示例可以说明这一点,同样的逻辑使用Java语言编写MapReduce代码需要50行左右,但在Spark(Scala评议实现)中的实现非常简单:

sparkContext.textFile("hdfs://..."). flatMap(line => line.split(" ")). map(word => (word, 1)).

reduceByKey(_ + _).saveAsTextFile("hdfs://...") |

学习如Apache Spark的另一个重要途径是使用交互式shell (REPL),使用REPL可以交互显示代码运行结果,实时测试每行代码的运行结果,无需先编码、再执行整个作业,如此便能缩短花在代码上的工作时间,同时为即席数据分析提供了可能。

Spark的其他主要功能包括:

目前支持Scala,Java和Python三种语言的 API,并正在逐步支持其他语言(例如R语言);

能够与Hadoop生态系统和数据源(HDFS,Amazon S3,Hive,HBase,Cassandra等)完美集成;

可以运行在Hadoop YARN或者Apache Mesos管理的集群上,也可以通过自带的资源管理器独立运行。

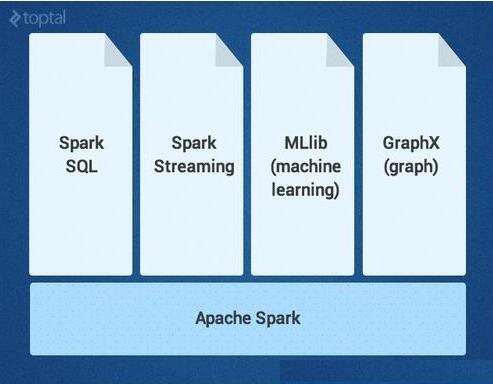

Spark 内核之上还有许多强大的、更高级的库作为补充,可以在同一应用程序中直接使用,目前有SparkSQL,Spark

Streaming,MLlib(用于机器学习)和GraphX这四大组件库,本文将对Spark Core及四大组件库进行详细介绍。当然,还有额外其它的Spark库和扩展库目前也处于开发中。

Spark Core

Spark Core是大规模并行计算和分布式数据处理的基础引擎。它的职责有:

内存管理和故障恢复;

调度、分发和监控集群上的作业;

与存储系统进行交互。

Spark引入了RDD(弹性分布式数据集)的概念,RDD是一个不可变的容错、分布式对象集合,支持并行操作。RDD可包含任何类型的对象,可通过加载外部数据集或通过Driver程序中的集合来完成创建。

RDD支持两种类型的操作:

转换(Transformations)指的是作用于一个RDD上并会产生包含结果的新RDD的操作(例如map,

filter, join, union等)

动作(Actions)指的是作用于一个RDD之后,会触发集群计算并得到返回值的操作(例如reduce,count,first等)

Spark中的转换操作是“延迟的(lazy)”,意味着转换时它们并不立即启动计算并返回结果。相反,它们只是“记住”要执行的操作和待执行操作的数据集(例如文件)。转换操作仅当产生调用action操作时才会触发实际计算,完成后将结果返回到driver程序。这种设计使Spark能够更有效地运行,例如,如果一个大文件以不同方式进行转换操作并传递到首个action操作,此时Spark将只返回第一行的结果,而不是对整个文件执行操作。

默认情况下,每次对其触发执行action操作时,都需要重新计算前面经过转换操作的RDD,不过,你也可以使用持久化或缓存方法在内存中持久化RDD来避免这一问题,此时,Spark将在集群的内存中保留这些元素,从而在下次使用时可以加速访问。

SparkSQL

SparkSQL是Spark中支持SQL语言或者Hive查询语言查询数据的一个组件。它起先作为Apache

Hive 端口运行在Spark之上(替代MapReduce),现在已经被集成为Spark的一个重要组件。除支持各种数据源,它还可以使用代码转换来进行SQL查询,功能十分强大。下面是兼容Hive查询的示例:

// sc is an existing SparkContext.

val sqlContext = new org.apache.spark.sql.hive.HiveContext(sc)

sqlContext.sql("CREATE TABLE IF NOT EXISTS src (key INT, value STRING)")

sqlContext.sql("LOAD DATA LOCAL INPATH 'examples/src/main/resources/kv1.txt' INTO TABLE src")

// Queries are expressed in HiveQL

sqlContext.sql("FROM src SELECT key, value").collect().foreach(println) |

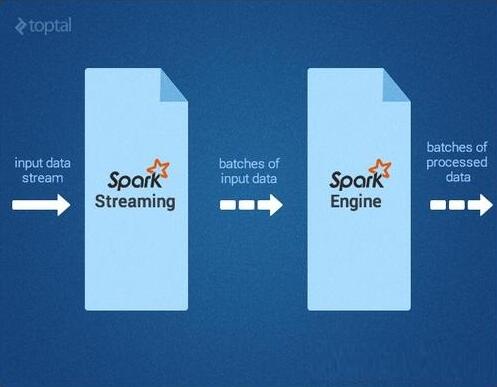

Spark Streaming

Spark Streaming支持实时处理流数据,例如生产环境中的Web服务器日志文件(例如 Apache

Flume和 HDFS/S3),社交媒体数据(例如Twitter)和各种消息队列中(例如Kafka)的实时数据。在引擎内部,Spark

Streaming接收输入的数据流,与此同时将数据进行切分,形成数据片段(batch),然后交由Spark引擎处理,按数据片段生成最终的结果流,如下图所示。

Spark Streaming API与Spark Core紧密结合,使得开发人员可以轻松地同时驾驶批处理和流数据。

MLlib

MLlib是一个提供多种算法的机器学习库,目的是使用分类,回归,聚类,协同过滤等算法能够在集群上横向扩展(可以查阅Toptal中关于机器学习的文章详细了解)。MLlib中的一些算法也能够与流数据一起使用,例如使用普通最小二乘法的线性回归算法或k均值聚类算法(以及更多其他正在开发的算法)。Apache

Mahout(一个Hadoop的机器学习库)摒弃MapReduce并将所有的力量放在Spark MLlib上。

GraphX

GraphX是一个用于操作图和执行图并行操作的库。它为ETL即Extraction-Transformation-Loading、探索性分析和迭代图计算提供了统一的工具。除了内置的图操作之外,它也提供了一个通用的图算法库如PageRank。

如何使用Apache Spark: 事件监测用例

回答了“什么是Apache Spark?”的问题之后,现在回过头来想想哪些类型的问题或者挑战可以使Spark得到更有效的使用。

我最近偶然发现了一篇关于通过分析Twitter流来检测地震的实验,有趣的是,实验结果已经表明使用这种方式通知日本发生地震的速度会比日本气象局更快。即使在文章中他们使用了与本文不同的技术,但我认为这是一个很好的例子,通过使用Spark编写简洁的代码,同时又无需为兼容性、互操作性而编写胶水代码(glue

code)。

首先,我们必须过滤出与“earthquake” 或 “shaking”等相关的tweets消息流,可以很容易地使用Spark

Streaming实现此目的:

TwitterUtils.createStream(...) .filter(_.getText.contains("earthquake") || _.getText.contains("shaking")) |

然后,我们需要对tweets消息流进行语义分析,以确定它们表示的是否是当前正在发生的地震。例如,“Earthquake!”或者“Now

it is shaking”等tweets消息将被视为正面匹配。而像“参加地震会议(Attending

an Earthquake Conference)”或“昨天地震真可怕(The earthquake yesterday

was scary)”等tweets消息则不会被匹配。文章的作者为实现此功能使用了支持向量机(SVM),我们这里也可以这么做,但是也可以尝试使用流式计算实现的版本,下面是使用MLlib生成的代码示例:

// We would prepare some earthquake tweet data and load it in LIBSVM

format.

val data = MLUtils.loadLibSVMFile(sc, "sample_earthquate_tweets.txt")

// Split data into training (60%) and test (40%).

val splits = data.randomSplit(Array(0.6, 0.4), seed = 11L)

val training = splits(0).cache() val test = splits(1) // Run training

algorithm to build the model

val numIterations = 100

val model = SVMWithSGD.train(training, numIterations) // Clear the

default

threshold. model.clearThreshold() // Compute raw scores on the test set.

val scoreAndLabels = test.map { point => val score = model.predict

(point.features) (score, point.label)} // Get evaluation metrics.

val metrics = new BinaryClassificationMetrics(scoreAndLabels)

val auROC = metrics.areaUnderROC()

println("Area under ROC = " + auROC) |

如果我们对该模型的预测率感到满意,我们可以进入下一阶段并在发生地震时作出反应。为了预测一个地震的发生,我们需要在规定的时间窗口内(如文章中所描述的)检测一定数量(即密度)的正向微博。需要注意的是,对于启用Twitter位置服务的tweet消息,我们还会提取地震的位置。有了前面这些知识的铺垫,我们可以使用SparkSQL查询现有的Hive表(存储着对接收地震通知感兴趣的用户)来检索对应用户的电子邮件地址,并向各用户发送个性化的警告邮件,如下所示:

// sc is an existing SparkContext.

val sqlContext = new org.apache.spark.sql.hive.HiveContext(sc)

// sendEmail is a custom function

sqlContext.sql("FROM earthquake_warning_users SELECT firstName,

lastName, city, email") .collect().foreach(sendEmail) |

其他Apache Spark使用示例

Spark的使用场景当然不仅仅局限于对地震的检测。

这里提供关于一个非常适合Spark技术处理的案例速查指南(但肯定没有接近穷尽),这些案例中的场景都面临着大数据普遍存在的速度(Velocity)、多样性(Variety)和容量(Volume)问题。

在游戏行业中,处理和发现来自实时游戏事件流中的隐藏模式,并能够即时对它们做出响应是公司能够产生营收的关键能力,主要目的是为实现玩家留存,定向广告,复杂等级的自动调整等。

在电子商务行业中,实时交易信息可以传递到流聚类算法如k-means或者协同过滤算法如ALS,然后其结果可以与其他非结构化数据源(例如客户评论或者产品评论)相结合,并持续不断地提高和改进推荐算法以适应新的发展趋势。

在金融或安全行业中,Spark技术栈可以应用于欺诈或入侵检测系统、或应用于基于风险的身份验证。Spark可以通过收集大量归档日志,同时结合外部数据源如泄露的数据、受损账户信息(如https://haveibeenpwned.com/)及来自外部连接/请求(如IP地理位置或时间)的数据来达到最好的结果。,

结论

总而言之,Spark帮助降低了具备挑战性和计算密集型的海量实时或离线数据(包括结构化和非结构化数据)处理任务的难度,无缝集成相关复杂功能,如机器学习和图形算法。Spark的大数据处理能力将惠及大众,请尽情尝试!

|