Editor's note:

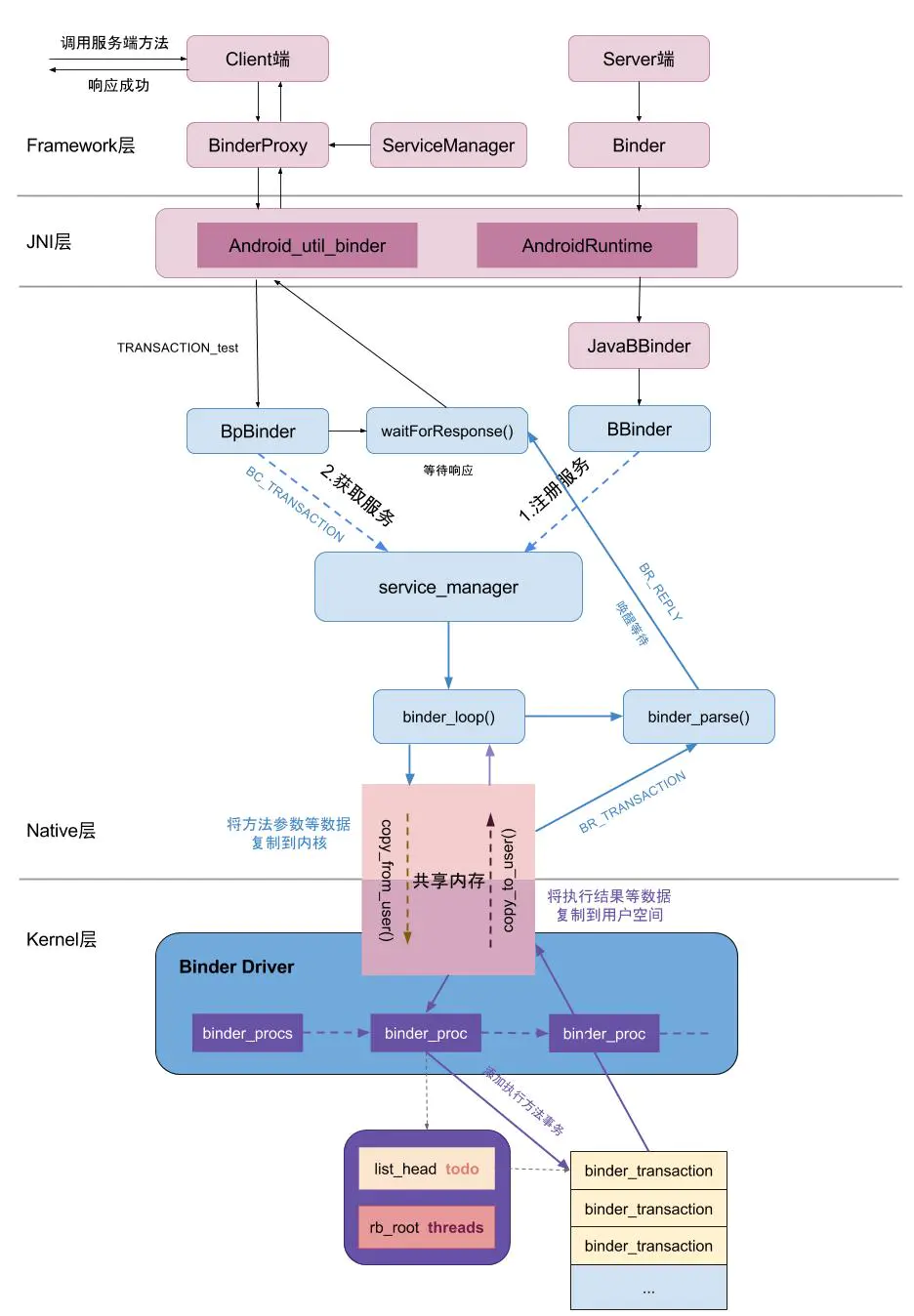

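This article covers the Binder architecture, its basic data structures, the Binder driver, the native-layer Binder classes, the Binder communication mechanism, Binder processes and threads, service lookup through ServiceManager, and the complete communication flow.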

This article originally appeared on Jianshu (简书) and was edited and recommended by Anna of 火龙果软件.


Binder

What is Binder?

Binder is an inter-process communication (IPC) mechanism.

Why Binder?

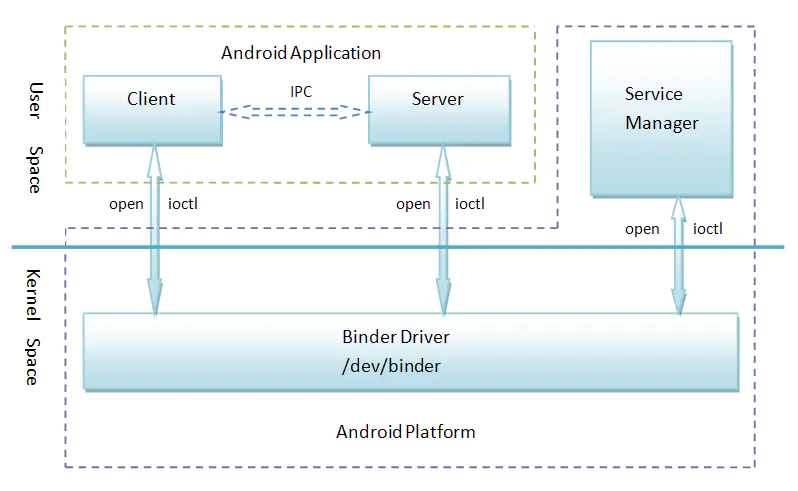

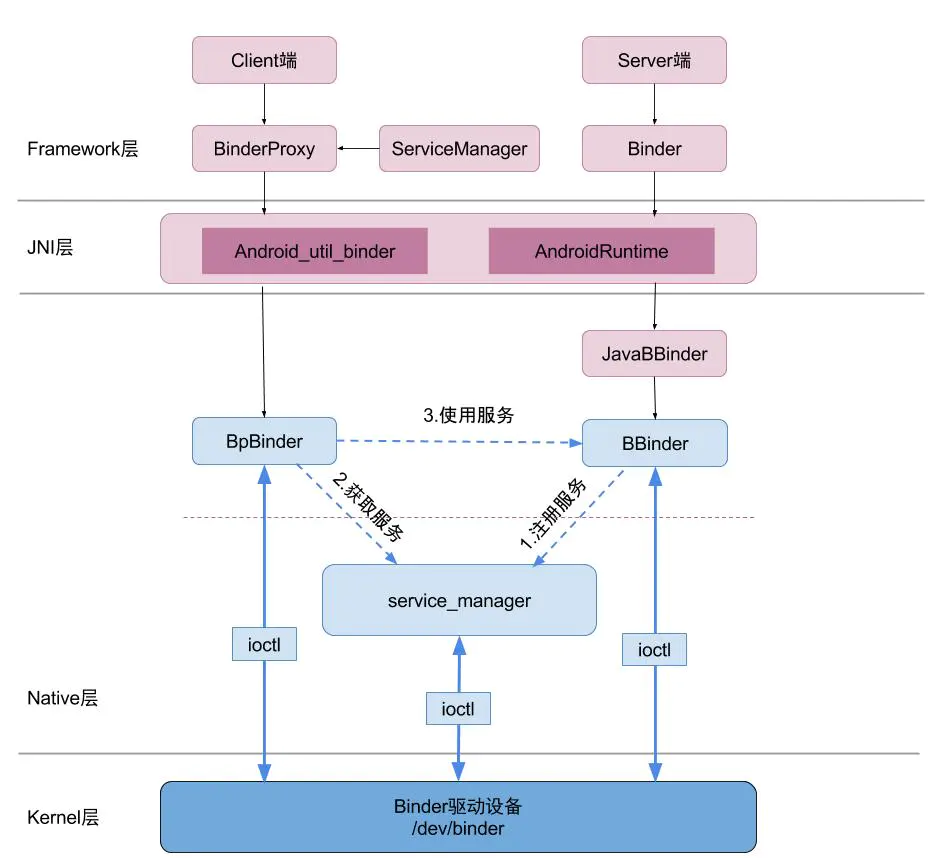

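Binder architecture

Binder uses a client/server (C/S) architecture. This point underlies everything that follows.

Figure: Binder architecture

The Binder framework involves four roles: Client, Server, Service Manager, and the Binder driver. Client, Server, and Service Manager run in user space; the Binder driver runs in kernel space.

Client is the process that requests a service. Server is the process that provides services, such as audio and video.

Service Manager manages the system services.

The Binder driver provides the inter-process communication capability.

The user-space Client, Server, and ServiceManager access /dev/binder through the standard file operations open, mmap, and ioctl (covered in any UNIX environment programming text), and that is how inter-process communication is carried out.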
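The open and mmap steps described above can be sketched in a few lines of user-space C++. This is only an illustration under stated assumptions: `probe_binder` is a hypothetical helper, the mapping size is whatever the caller picks, and the ioctl exchange is left as a comment because it needs the kernel's binder header. On a machine without a Binder driver the function simply reports failure.

```cpp
#include <cassert>
#include <cstddef>
#include <fcntl.h>
#include <sys/mman.h>
#include <unistd.h>

// Hypothetical helper: opens a Binder device node and maps a receive buffer.
// Returns 0 on success, -1 if the device cannot be opened or mapped.
int probe_binder(const char* path, std::size_t map_size) {
    assert(path != nullptr);

    int fd = open(path, O_RDWR | O_CLOEXEC);
    if (fd < 0) return -1;  // no Binder driver at this path

    // The driver places incoming transaction data in this read-only mapping.
    void* vm = mmap(nullptr, map_size, PROT_READ,
                    MAP_PRIVATE | MAP_NORESERVE, fd, 0);
    if (vm == MAP_FAILED) {
        close(fd);
        return -1;
    }

    // A real client would now exchange commands with
    // ioctl(fd, BINDER_WRITE_READ, &bwr); omitted in this sketch.

    munmap(vm, map_size);
    close(fd);
    return 0;
}
```

Calling `probe_binder("/dev/binder", 4096)` on a device with the driver would exercise both steps; everywhere else it returns -1 without side effects.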

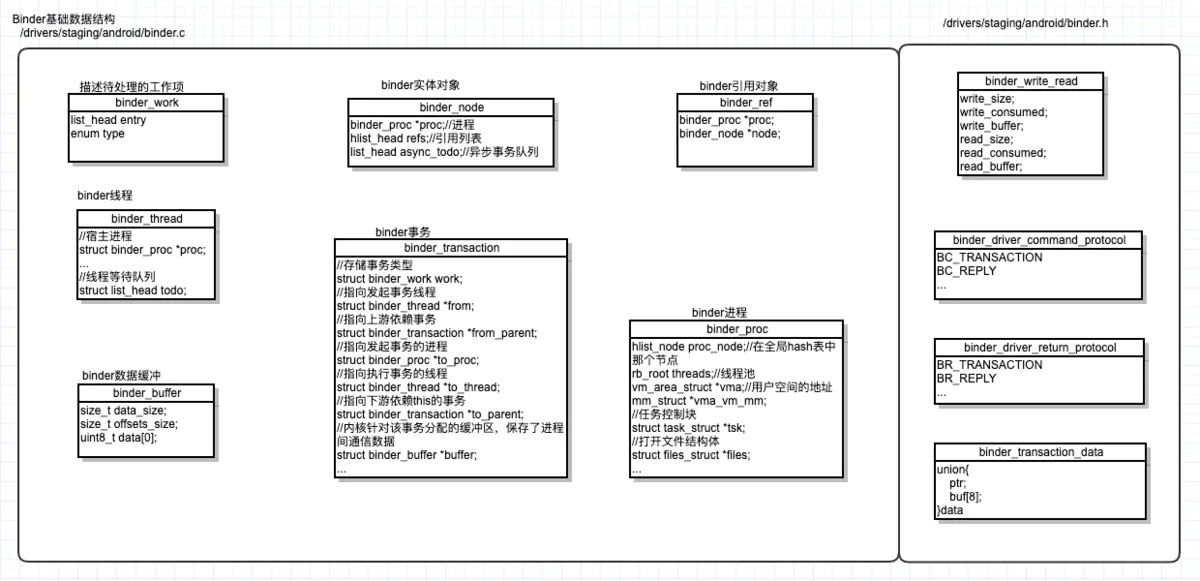

Basic data structures

Binder basic data

For the basic Binder data structures, see the figure above.

Binder driver

Figure: how the Binder driver works

/kernel/drivers/staging/android/binder.c

device_initcall(binder_init);

During device initialization, binder_init is called to initialize the binder driver.

/kernel/drivers/staging/android/binder.c

// file operations of the binder driver

static const struct file_operations binder_fops

= {

.owner = THIS_MODULE,

.poll = binder_poll,

.unlocked_ioctl = binder_ioctl,

.compat_ioctl = binder_ioctl,

.mmap = binder_mmap,

.open = binder_open,

.flush = binder_flush,

.release = binder_release,

};

// describe the driver as a misc device

static struct miscdevice binder_miscdev = {

.minor = MISC_DYNAMIC_MINOR,

.name = "binder",

.fops = &binder_fops // bind the driver's file operations

};

// binder driver initialization

static int __init binder_init(void)

{

int ret;

binder_deferred_workqueue = create_singlethread_workqueue("binder");

if (!binder_deferred_workqueue)

return -ENOMEM;

// create the debugfs directory binder

binder_debugfs_dir_entry_root = debugfs_create_dir("binder",

NULL);

if (binder_debugfs_dir_entry_root)

// create the debugfs directory binder/proc

binder_debugfs_dir_entry_proc = debugfs_create_dir("proc",

binder_debugfs_dir_entry_root);

// register the binder driver

ret = misc_register(&binder_miscdev);

// create the remaining debugfs files

if (binder_debugfs_dir_entry_root) {

// create the file binder/state (its parent is the binder root directory)

debugfs_create_file("state",

S_IRUGO,

binder_debugfs_dir_entry_root,

NULL,

&binder_state_fops);

// create the file binder/stats

debugfs_create_file("stats",

S_IRUGO,

binder_debugfs_dir_entry_root,

NULL,

&binder_stats_fops);

// create the file binder/transactions

debugfs_create_file("transactions",

S_IRUGO,

binder_debugfs_dir_entry_root,

NULL,

&binder_transactions_fops);

// create the file binder/transaction_log

debugfs_create_file("transaction_log",

S_IRUGO,

binder_debugfs_dir_entry_root,

&binder_transaction_log,

&binder_transaction_log_fops);

// create the file binder/failed_transaction_log

debugfs_create_file("failed_transaction_log",

S_IRUGO,

binder_debugfs_dir_entry_root,

&binder_transaction_log_failed,

&binder_transaction_log_fops);

}

return ret;

}

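When the device starts, binder_init is called and does two main things:

1. It creates the binder directory in debugfs with a proc subdirectory, and then creates the files state, stats, transactions, transaction_log, and failed_transaction_log in the binder directory. These expose the various data about inter-process communication.

2. It registers the driver as a misc device and binds the file operation functions binder_open, binder_mmap, binder_ioctl, and so on. After that, user space can reach the binder driver through the corresponding system calls on /dev/binder.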

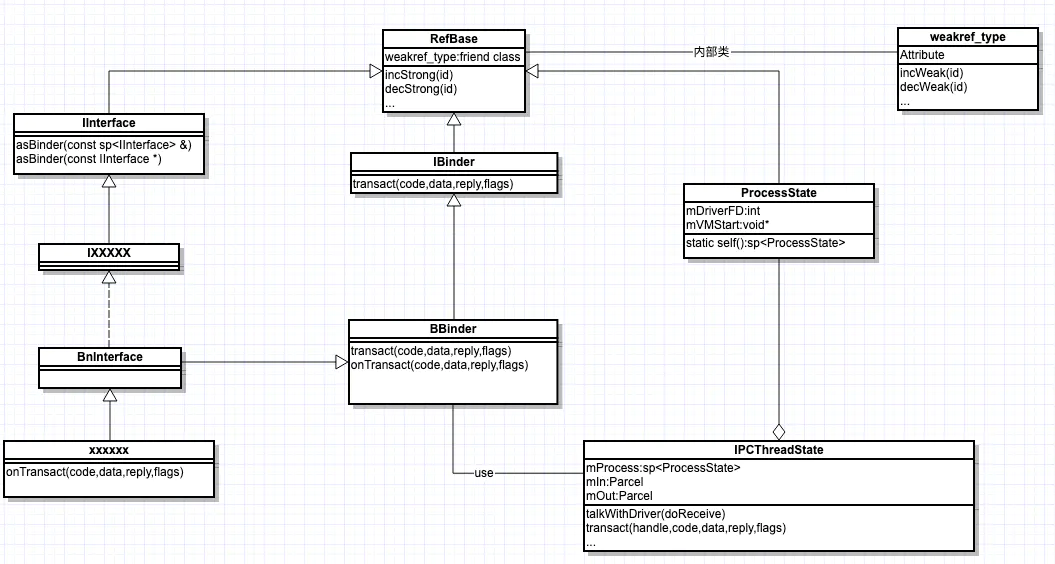

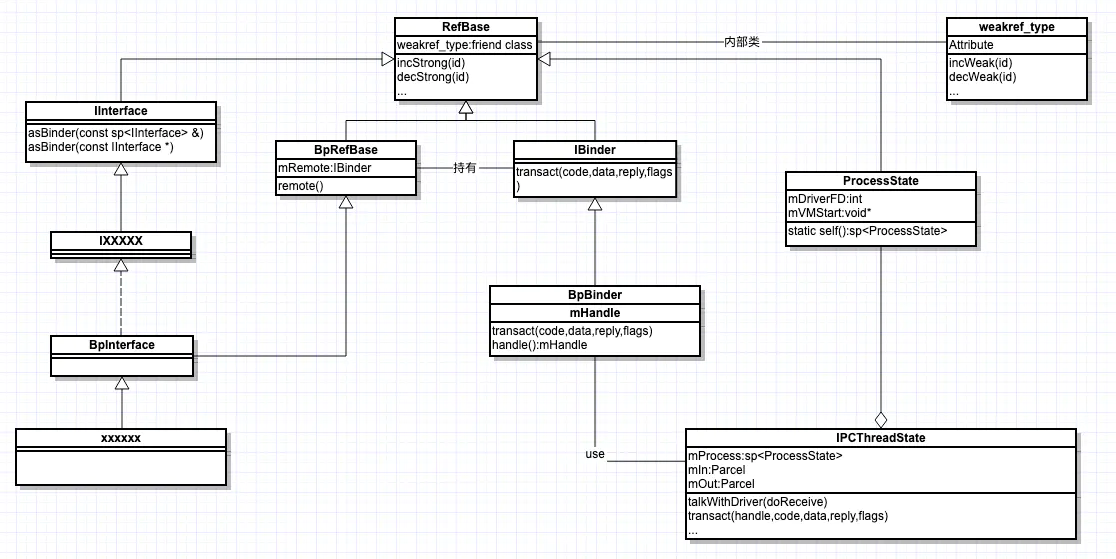

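Binder structure at the native layer

Figure: class diagram of the Server components

Figure: class diagram of the Client components

Binder communication mechanism

Figure: Binder layers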

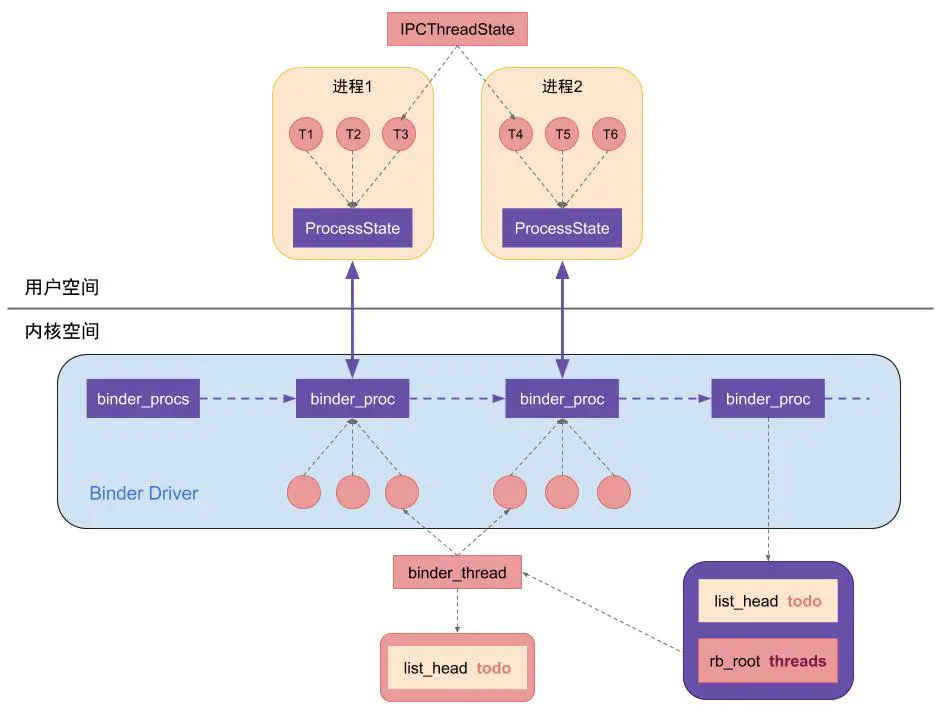

Binder processes and threads

Figure: threads in the Binder driver and threads in user space

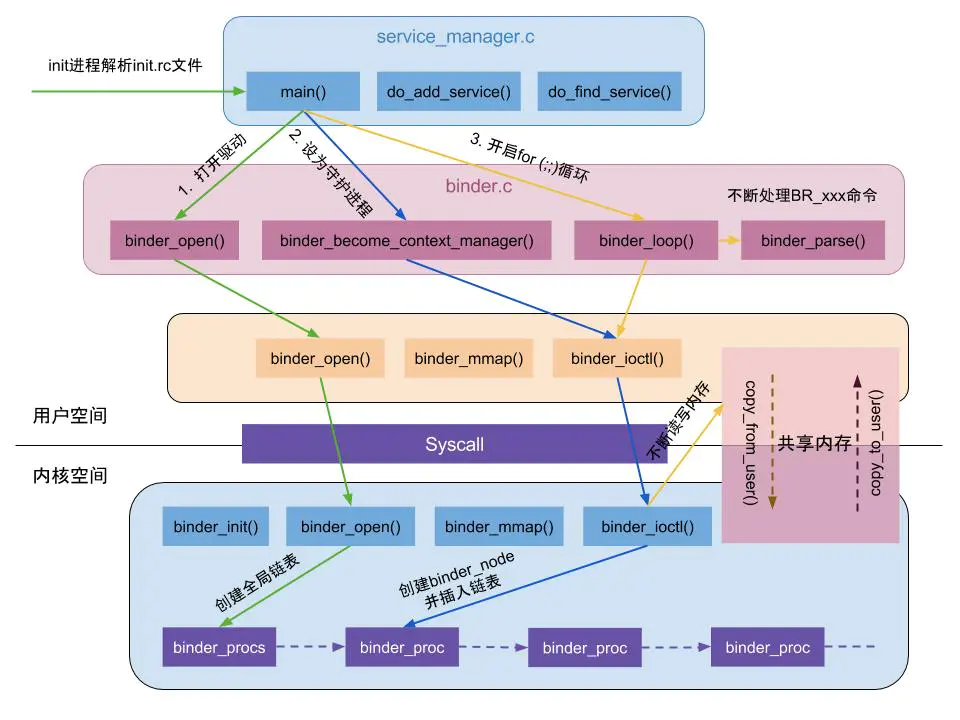

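ServiceManager startup

Figure: ServiceManager startup

Background

ServiceManager startup consists of three steps.

1. service_manager is started as a system service. It opens the driver device through binder_open, and in response the driver creates the binder_proc for service_manager. This is a special service.

2. It calls binder_become_context_manager, which reaches binder_ioctl in the kernel through ioctl. After some processing, the binder driver stores this special binder_node in the static pointer binder_context_mgr_node.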

static struct

binder_node *binder_context_mgr_node;

...

static int binder_ioctl_set_ctx_mgr (struct

file *filp)

{

...

// note that the last two arguments are both 0

binder_context_mgr_node = binder_new_node(proc,

0, 0);

...

}

...

3. It calls binder_loop to enter the loop that reads and parses requests.

int main(int

argc, char** argv){

...

// enter the loop and wait for or handle IPC requests from Client processes

binder_loop(bs, svcmgr_handler);

...

}

The handler function for the loop is svcmgr_handler, which is analyzed later. First, the implementation of binder_loop:

void binder_loop

(struct binder_state *bs, binder_handler func)

{

...

// the IPC data carrier

struct binder_write_read bwr;

bwr.write_size = 0;

bwr.write_consumed = 0;

bwr.write_buffer = 0;

readbuf[0] = BC_ENTER_LOOPER;

binder_write(bs, readbuf, sizeof(uint32_t));

for (;;) {

bwr.read_size = sizeof(readbuf);

bwr.read_consumed = 0;

bwr.read_buffer = (uintptr_t) readbuf;

res = ioctl(bs->fd, BINDER_WRITE_READ, &bwr);

...

// parse what was read

res = binder_parse(bs, 0, (uintptr_t) readbuf,

bwr.read_consumed, func);

...

}

}

int binder_write (struct binder_state *bs,

void *data, size_t len)

{

struct binder_write_read bwr;

int res;

bwr.write_size = len;

bwr.write_consumed = 0;

bwr.write_buffer = (uintptr_t) data;

bwr.read_size = 0;

bwr.read_consumed = 0;

bwr.read_buffer = 0;

res = ioctl(bs->fd, BINDER_WRITE_READ, &bwr);

...

return res;

}

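binder_loop first creates a binder_write_read structure and uses binder_write to send the command BC_ENTER_LOOPER to the Binder driver. Note that in this call only the write buffer carries data; the read buffer is empty (read_size is 0). Once the driver has processed the command and the thread is in the looper state, an endless loop keeps reading data from the Binder driver and hands it to binder_parse, which decodes the protocol data. The details are analyzed later.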
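The two ways binder_loop fills a binder_write_read (write-only for BC_ENTER_LOOPER, read-only inside the loop) can be sketched as follows. `WriteRead` is a local stand-in whose field names follow the listing above; the real structure is defined in the kernel's binder header with its own integer types, and the helper names here are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Local mirror of the fields used in the listing; not the kernel definition.
struct WriteRead {
    std::uint64_t write_size = 0, write_consumed = 0, write_buffer = 0;
    std::uint64_t read_size = 0, read_consumed = 0, read_buffer = 0;
};

// Write-only request, as in binder_write(): the read side stays empty.
WriteRead makeWriteOnly(const void* data, std::size_t len) {
    assert(data != nullptr || len == 0);
    WriteRead bwr;
    bwr.write_size = len;
    bwr.write_buffer = reinterpret_cast<std::uintptr_t>(data);
    return bwr;
}

// Read-only request, as in the for(;;) loop: the write side stays empty.
WriteRead makeReadOnly(void* buf, std::size_t capacity) {
    WriteRead bwr;
    bwr.read_size = capacity;
    bwr.read_buffer = reinterpret_cast<std::uintptr_t>(buf);
    return bwr;
}
```

The driver decides what to do purely from the two size fields, which is why the same ioctl serves both directions.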

To see how the Binder driver handles this exchange, look at the implementation of binder_ioctl_write_read:

static int

binder_ioctl_write_read (struct file *filp,

unsigned int cmd, unsigned long arg,

struct binder_thread *thread)

{

...

// get the process information from the file's private data

struct binder_proc *proc = filp->private_data;

// size of the argument encoded in cmd

unsigned int size = _IOC_SIZE(cmd);

...

struct binder_write_read bwr;

// copy in bwr, the carrier of the IPC protocol data

if (copy_from_user(&bwr, ubuf, sizeof(bwr)))

{

...

}

// if there is data to write, hand it to binder_thread_write;

// on failure, bwr is copied back to user space with copy_to_user

if (bwr.write_size > 0) {

ret = binder_thread_write(proc, thread,

bwr.write_buffer,

bwr.write_size,

&bwr.write_consumed);

...

if (ret < 0) {

bwr.read_consumed = 0;

if (copy_to_user(ubuf, &bwr, sizeof(bwr)))

...

goto out;

}

}

// if a read buffer was supplied, call binder_thread_read to fill it,

// then wake a waiting thread if the process todo queue is not empty

if (bwr.read_size > 0) {

ret = binder_thread_read(proc, thread, bwr.read_buffer,

bwr.read_size,

&bwr.read_consumed,

filp->f_flags & O_NONBLOCK);

...

if (!list_empty(&proc->todo))

wake_up_interruptible(&proc->wait);

if (ret < 0) {

// copy bwr back to user space

if (copy_to_user(ubuf, &bwr, sizeof(bwr)))

...

goto out;

}

}

...

out:

return ret;

}


binder_thread_write handles the command protocol (binder_driver_command_protocol) and binder_thread_read produces the return protocol (binder_driver_return_protocol). See /drivers/staging/android/binder.h for details.

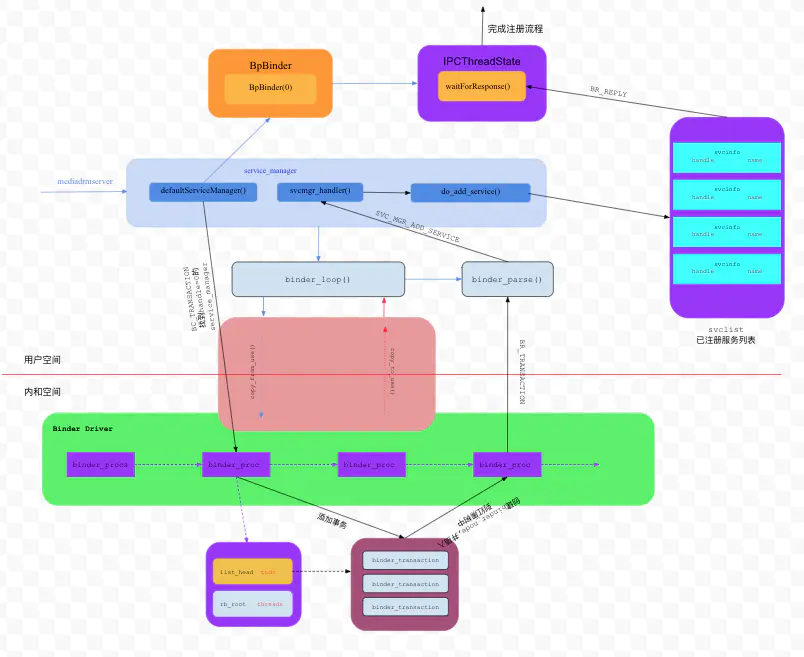

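Registering a service with ServiceManager

Figure: service registration flow

The figure above shows the service registration flow. The code walkthrough uses the registration of a media server, mediadrmserver, as the example.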

The mediadrm service is started with user privileges; its binary is /system/bin/mediadrmserver.

Next, look at main_mediadrmserver.cpp:

/frameworks/av/services/mediadrm/main_mediadrmserver.cpp

int main()

{

...

sp<ProcessState> proc(ProcessState::self());

sp<IServiceManager> sm = defaultServiceManager();

...

MediaDrmService::instantiate();

ProcessState::self()->startThreadPool();

IPCThreadState::self()->joinThreadPool();

}

main() does two main things:

1. It initializes MediaDrmService.

2. It initializes the thread pool.

Start with the first:

/frameworks/av/services/mediadrm/MediaDrmService.cpp

...

void MediaDrmService::instantiate() {

defaultServiceManager()->addService(

String16("media.drm"), new MediaDrmService());

}

...

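This obtains a BpServiceManager pointer through defaultServiceManager() and then calls addService on it. Note the two arguments passed in: the name "media.drm" and a MediaDrmService object.

Obtaining the ServiceManager proxy object

First, the defaultServiceManager() call: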

/frameworks/native/libs/binder/Static.cpp

...

sp<IServiceManager> gDefaultServiceManager;

...

/frameworks/native/libs/binder/IServiceManager.cpp

...

sp<IServiceManager> defaultServiceManager()

{

if (gDefaultServiceManager != NULL)

return gDefaultServiceManager;

{

...

while (gDefaultServiceManager == NULL) {

gDefaultServiceManager = interface_cast<IServiceManager>(

ProcessState::self()->getContextObject(NULL));

...

}

}

return gDefaultServiceManager;

}

...

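gDefaultServiceManager is an sp<IServiceManager>, that is, a strong pointer to an IServiceManager.

On first use it is always obtained through the call chain interface_cast<IServiceManager>(ProcessState::self()->getContextObject(NULL)). We go through it step by step.

First, ProcessState::self():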

/frameworks/native/libs/binder/ProcessState

...

sp<ProcessState> ProcessState::self()

{

Mutex::Autolock _l(gProcessMutex);

if (gProcess != NULL) {

return gProcess;

}

// note the device file name here

gProcess = new ProcessState("/dev/binder");

return gProcess;

}

...


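I would bet half a pack of spicy strips that this class's constructor holds an open handle to /dev/binder. If you doubt it, look at the constructor:

// C++ member initializer list: each entry initializes a member variable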

ProcessState::ProcessState(const char *driver)

: mDriverName(String8(driver))

, mDriverFD(open_driver(driver))

, mVMStart(MAP_FAILED)

, mThreadCountLock(PTHREAD_MUTEX_INITIALIZER)

, mThreadCountDecrement(PTHREAD_COND_INITIALIZER)

, mExecutingThreadsCount(0)

, mMaxThreads(DEFAULT_MAX_BINDER_THREADS)

, mStarvationStartTimeMs(0)

, mManagesContexts(false)

, mBinderContextCheckFunc(NULL)

, mBinderContextUserData(NULL)

, mThreadPoolStarted(false)

, mThreadPoolSeq(1)

{

if (mDriverFD >= 0) {

// mmap the binder, providing a chunk of virtual address space to receive transactions.

// note that BINDER_VM_SIZE is 1016 KB here; see its macro definition

mVMStart = mmap(0, BINDER_VM_SIZE, PROT_READ,

MAP_PRIVATE | MAP_NORESERVE, mDriverFD, 0);

if (mVMStart == MAP_FAILED) {

...

close(mDriverFD);

mDriverFD = -1;

mDriverName.clear();

}

}

...

}

Next, getContextObject:

sp<IBinder>

ProcessState:: getContextObject (const sp<IBinder>&

/*caller*/)

{

// note that the value passed in here is 0

return getStrongProxyForHandle(0);

}

...

sp<IBinder> ProcessState:: getStrongProxyForHandle(int32_t

handle)

{

sp<IBinder> result;

...

handle_entry* e = lookupHandleLocked(handle);

if (e != NULL) {

...

b = BpBinder::create(handle);

e->binder = b;

if (b) e->refs = b->getWeakRefs();

result = b;

}else{

...

}

return result;

}

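getContextObject() calls getStrongProxyForHandle(0) to obtain an IBinder pointer. Handle 0 is what later lets the driver find the special Service component that ServiceManager registered at startup. What gets created here is a BpBinder object whose handle value is 0.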
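The lookup-or-create behaviour of getStrongProxyForHandle can be pictured with a small handle table. The sketch below is a simplification under stated assumptions: `Proxy` stands in for BpBinder, `HandleTable` is a hypothetical name, and it keeps strong references where the real ProcessState tracks weak references and takes a lock.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <memory>
#include <vector>

struct Proxy {              // stand-in for BpBinder
    std::int32_t handle;
};

class HandleTable {
public:
    // Returns the proxy for `handle`, creating it on first use.
    std::shared_ptr<Proxy> proxyFor(std::int32_t handle) {
        if (handle < 0) return nullptr;
        const auto idx = static_cast<std::size_t>(handle);
        if (idx >= entries_.size()) entries_.resize(idx + 1);
        if (!entries_[idx]) {
            entries_[idx] = std::make_shared<Proxy>(Proxy{handle});
        }
        return entries_[idx];
    }

    // Handle 0 is reserved for the context manager (ServiceManager).
    std::shared_ptr<Proxy> contextObject() { return proxyFor(0); }

private:
    std::vector<std::shared_ptr<Proxy>> entries_;
};
```

Because the table is indexed by handle, every caller in the process that asks for handle 0 gets the same proxy object.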

Now the relevant BpBinder code:

frameworks/native/libs/binder/BpBinder.cpp

// the handle value is associated with the binder reference object in the binder driver

BpBinder::BpBinder (int32_t handle, int32_t

trackedUid)

// note that 0 is passed in here

: mHandle(handle)

// note that this value is 1

, mAlive(1)

, mObitsSent(0)

, mObituaries(NULL)

, mTrackedUid(trackedUid)

{

...

IPCThreadState::self()->incWeakHandle(handle,

this);

}

BpBinder* BpBinder::create(int32_t handle)

{

...

return new BpBinder(handle, trackedUid);

}

Back in defaultServiceManager(), the final call effectively becomes interface_cast<IServiceManager>(BpBinder(0)). Where is interface_cast defined?

/frameworks/native/include/binder/IInterface.h

template<typename INTERFACE>

inline sp<INTERFACE> interface_cast (const

sp<IBinder>& obj)

{

return INTERFACE::asInterface(obj);

}

It is an inline function template that actually calls IServiceManager::asInterface(BpBinder(0)). So where is IServiceManager::asInterface defined?

/frameworks/native/libs/binder/IServiceManager.cpp

IMPLEMENT_META_INTERFACE(ServiceManager, "android.os.IServiceManager");

It comes from the macro above, which is defined in:

/frameworks/native/include/binder/IInterface.h

#define IMPLEMENT_META_INTERFACE(INTERFACE, NAME) \
    const ::android::String16 I##INTERFACE::descriptor(NAME); \
    const ::android::String16& \
            I##INTERFACE::getInterfaceDescriptor() const \
    { \
        return I##INTERFACE::descriptor; \
    } \
    ::android::sp<I##INTERFACE> I##INTERFACE::asInterface( \
            const ::android::sp<::android::IBinder>& obj) \
    { \
        ::android::sp<I##INTERFACE> intr; \
        if (obj != NULL) { \
            intr = static_cast<I##INTERFACE*>( \
                obj->queryLocalInterface( \
                        I##INTERFACE::descriptor).get()); \
            if (intr == NULL) { \
                intr = new Bp##INTERFACE(obj); \
            } \
        } \
        return intr; \
    } \
    I##INTERFACE::I##INTERFACE() { } \
    I##INTERFACE::~I##INTERFACE() { }

Substituting ServiceManager into the macro shows that what we get back is a BpServiceManager.
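The decision that asInterface makes (use the local implementation if the binder object has one, otherwise wrap it in a proxy) can be shown without the macro. This is a reduced sketch, not the libbinder classes: `Binder`, `IService`, `BpService`, and `LocalBinder` are illustrative stand-ins that use std::shared_ptr instead of sp<>.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>

static const std::string kDescriptor = "android.os.IServiceManager";

struct IService {
    virtual ~IService() = default;
    virtual bool isProxy() const = 0;
};

struct Binder {  // stand-in for IBinder
    virtual ~Binder() = default;
    // A remote proxy has no local implementation, so the default is nullptr.
    virtual std::shared_ptr<IService> queryLocalInterface(const std::string&) {
        return nullptr;
    }
};

struct BpService : IService {  // stand-in for BpServiceManager
    explicit BpService(std::shared_ptr<Binder> remote) : remote_(std::move(remote)) {}
    bool isProxy() const override { return true; }
    std::shared_ptr<Binder> remote_;
};

struct LocalImpl : IService {
    bool isProxy() const override { return false; }
};

struct LocalBinder : Binder {  // a binder object living in the caller's process
    std::shared_ptr<IService> impl = std::make_shared<LocalImpl>();
    std::shared_ptr<IService> queryLocalInterface(const std::string& d) override {
        return d == kDescriptor ? impl : nullptr;
    }
};

// Same shape as the asInterface body generated by the macro.
std::shared_ptr<IService> asInterface(const std::shared_ptr<Binder>& obj) {
    if (!obj) return nullptr;
    std::shared_ptr<IService> intr = obj->queryLocalInterface(kDescriptor);
    if (!intr) intr = std::make_shared<BpService>(obj);
    return intr;
}
```

For the BpBinder with handle 0 there is no local interface, so the proxy branch is the one taken in the article's flow.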

Next, the call that follows:

BpServiceManager#addService

virtual status_t

addService (const String16& name, const

sp<IBinder>& service,bool allowIsolated,

int dumpsysPriority) {

// data holds what is sent; reply receives what comes back

Parcel data, reply;

// write the descriptor "android.os.IServiceManager"

data.writeInterfaceToken(IServiceManager::getInterfaceDescriptor());

// write the service name "media.drm"

data.writeString16(name);

// write the MediaDrmService binder object

data.writeStrongBinder(service);

data.writeInt32(allowIsolated ? 1 : 0);

data.writeInt32(dumpsysPriority);

status_t err = remote()->transact(ADD_SERVICE_TRANSACTION,

data, &reply);

return err == NO_ERROR ? reply.readExceptionCode()

: err;

}

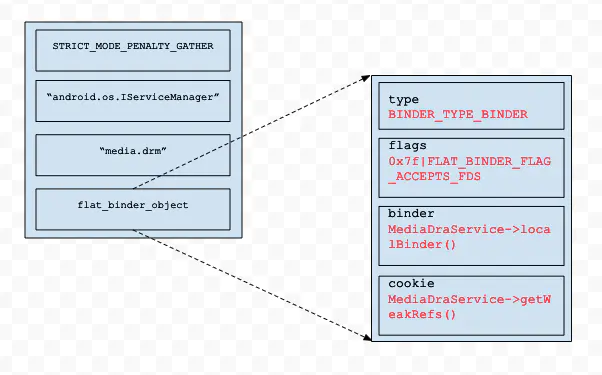

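This stores the arguments from the previous step in a Parcel, then calls remote() to get the BpBinder and invokes its transact with the code ADD_SERVICE_TRANSACTION. After packing, the contents of data look like this:

Figure: Parcel data layout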
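To get a feel for the flattened layout, the sketch below packs the first fields of the addService request into a byte buffer. It is an approximation under stated assumptions: `MiniParcel` is a hypothetical class, the interface token is modelled as one 32-bit policy word followed by the descriptor (the order in which svcmgr_handler reads them later in this article), strings are assumed to be stored as a length, UTF-16 code units, a terminator, and padding to 4 bytes, and the binder object itself is left out.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <string>
#include <vector>

class MiniParcel {
public:
    void writeInt32(std::int32_t v) {
        const auto* p = reinterpret_cast<const std::uint8_t*>(&v);
        buf_.insert(buf_.end(), p, p + sizeof(v));
    }

    // Assumed layout: length, UTF-16 units, a 0 terminator, padding to 4 bytes.
    void writeString16(const std::u16string& s) {
        writeInt32(static_cast<std::int32_t>(s.size()));
        for (char16_t c : s) put16(c);
        put16(0);
        while (buf_.size() % 4 != 0) buf_.push_back(0);
    }

    std::size_t size() const { return buf_.size(); }

private:
    // Little-endian byte order, chosen for the sketch.
    void put16(char16_t c) {
        buf_.push_back(static_cast<std::uint8_t>(c & 0xFF));
        buf_.push_back(static_cast<std::uint8_t>(c >> 8));
    }
    std::vector<std::uint8_t> buf_;
};

// Bytes used by the policy word, the descriptor, and the service name.
std::size_t addServiceHeaderSize() {
    MiniParcel data;
    data.writeInt32(0);  // strict-mode policy word (value is a placeholder)
    data.writeString16(u"android.os.IServiceManager");
    data.writeString16(u"media.drm");
    return data.size();
}
```

The padding keeps every field 4-byte aligned, which is what lets the receiver walk the buffer with fixed-size reads.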

Next, BpBinder#transact:

status_t BpBinder::transact(

uint32_t code, const Parcel& data, Parcel*

reply, uint32_t flags)

{

// Once a binder has died, it will never come back to life.

// mAlive was set to 1 in the constructor, so this branch is taken

if (mAlive) {

// this calls IPCThreadState#transact

status_t status = IPCThreadState::self()->transact(

mHandle, code, data, reply, flags);

if (status == DEAD_OBJECT) mAlive = 0;

return status;

}

return DEAD_OBJECT;

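}

When the ServiceManager proxy's BpBinder was created earlier, mHandle was set to 0. Here code is ADD_SERVICE_TRANSACTION, data holds the binder information, reply receives the returned data, and flags defaults to 0 according to the method declaration.

Next, IPCThreadState#transact: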

/frameworks/native/libs/binder/IPCThreadState.cpp

status_t IPCThreadState::

transact(int32_t handle,

uint32_t code, const Parcel& data,

Parcel* reply, uint32_t flags)

{

status_t err;

// allow the reply to carry file descriptors

flags |= TF_ACCEPT_FDS;

...

// package the BC_TRANSACTION request

err = writeTransactionData (BC_TRANSACTION,

flags, handle, code, data, NULL);

...

// synchronous or asynchronous; this call is synchronous

if ((flags & TF_ONE_WAY) == 0) {

...

if (reply) {

// send BC_TRANSACTION to the Binder driver and wait for the reply

err = waitForResponse(reply);

} else {

Parcel fakeReply;

err = waitForResponse(&fakeReply);

...

}

...

}else{

err = waitForResponse(NULL, NULL);

}

return err;

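}

The code first ORs flags with TF_ACCEPT_FDS, which means the reply may carry file descriptors.

It then calls writeTransactionData to package the data as a BC_TRANSACTION command. This call is synchronous and needs a reply, so the branch taken is waitForResponse(reply). The implementations of writeTransactionData and waitForResponse follow.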

status_t IPCThreadState::

writeTransactionData (int32_t cmd, uint32_t

binderFlags,

int32_t handle, uint32_t code, const Parcel&

data, status_t* statusBuffer)

{

// the kernel expects the request to carry a binder_transaction_data structure

binder_transaction_data tr;

tr.target.ptr = 0; /* Don't pass uninitialized

stack data to a remote process */

tr.target.handle = handle;

tr.code = code;

tr.flags = binderFlags;

tr.cookie = 0;

tr.sender_pid = 0;

tr.sender_euid = 0;

const status_t err = data.errorCheck();

if (err == NO_ERROR) {

tr.data_size = data.ipcDataSize();

tr.data.ptr.buffer = data.ipcData();

tr.offsets_size = data.ipcObjectsCount()*sizeof(binder_size_t);

tr.data.ptr.offsets = data.ipcObjects();

} else if (statusBuffer) {

tr.flags |= TF_STATUS_CODE;

*statusBuffer = err;

tr.data_size = sizeof(status_t);

tr.data.ptr.buffer = reinterpret_cast<uintptr_t>

(statusBuffer);

tr.offsets_size = 0;

tr.data.ptr.offsets = 0;

} else {

return (mLastError = err);

}

mOut.writeInt32(cmd);

mOut.write(&tr, sizeof(tr));

return NO_ERROR;

}

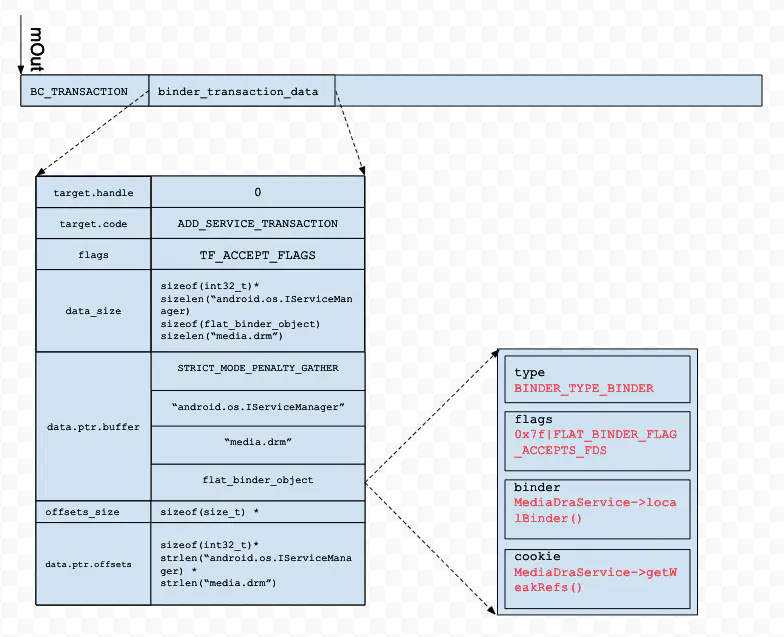

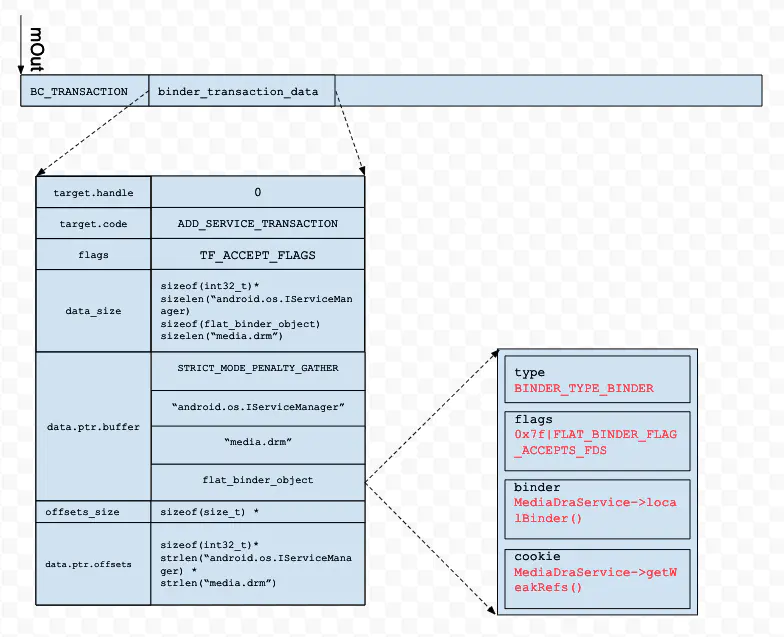

This creates a binder_transaction_data, initializes it, and writes the data to be sent into the mOut buffer. The complete data ends up looking like this:

Figure: writeTransactionData
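The framing that writeTransactionData produces, a 32-bit command followed immediately by the transaction descriptor, is also what binder_thread_write expects when it reads the buffer back. A minimal round trip, assuming a reduced descriptor: `TxnHeader` is a hypothetical four-field stand-in, not the real binder_transaction_data.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>
#include <vector>

struct TxnHeader {  // reduced stand-in for binder_transaction_data
    std::uint32_t handle;
    std::uint32_t code;
    std::uint32_t flags;
    std::uint32_t data_size;
};

// Sender side: append the command, then the descriptor (like mOut).
void writeCommand(std::vector<std::uint8_t>& out, std::uint32_t cmd,
                  const TxnHeader& tr) {
    const auto* c = reinterpret_cast<const std::uint8_t*>(&cmd);
    out.insert(out.end(), c, c + sizeof(cmd));
    const auto* t = reinterpret_cast<const std::uint8_t*>(&tr);
    out.insert(out.end(), t, t + sizeof(tr));
}

// Receiver side: read the command first, then copy out the descriptor.
bool readCommand(const std::vector<std::uint8_t>& in, std::uint32_t& cmd,
                 TxnHeader& tr) {
    if (in.size() < sizeof(cmd) + sizeof(tr)) return false;
    std::memcpy(&cmd, in.data(), sizeof(cmd));
    std::memcpy(&tr, in.data() + sizeof(cmd), sizeof(tr));
    return true;
}
```

Note that only the descriptor travels in this buffer; the Parcel payload is referenced by pointer and copied separately by the driver.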

With the data packaged, look at the implementation of waitForResponse:

status_t IPCThreadState::

waitForResponse (Parcel *reply, status_t *acquireResult)

{

uint32_t cmd;

int32_t err;

while (1) {

if ((err=talkWithDriver()) < NO_ERROR) break;

...

// read the return-protocol command from the input buffer mIn

cmd = (uint32_t)mIn.readInt32();

...

}

...

return err;

}

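This is effectively an endless loop: it keeps talking to the binder driver through talkWithDriver, reads the return-protocol command from the input buffer mIn, and handles it. Leaving the handling of the individual commands aside for now, first look at the implementation of talkWithDriver:

// doReceive defaults to true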

status_t IPCThreadState:: talkWithDriver(bool

doReceive)

{

...

binder_write_read bwr;

// Is the read buffer empty?

const bool needRead = mIn.dataPosition() >=

mIn.dataSize();

// We don't want to write anything if we are still reading

// from data left in the input buffer and the caller

// has requested to read the next data.

const size_t outAvail = (!doReceive || needRead)

? mOut.dataSize() : 0;

bwr.write_size = outAvail;

bwr.write_buffer = (uintptr_t)mOut.data();

// This is what we'll read.

if (doReceive && needRead) {

bwr.read_size = mIn.dataCapacity();

bwr.read_buffer = (uintptr_t)mIn.data();

} else {

bwr.read_size = 0;

bwr.read_buffer = 0;

}

...

// Return immediately if there is nothing to do.

if ((bwr.write_size == 0) && (bwr.read_size == 0)) return NO_ERROR;

bwr.write_consumed = 0;

bwr.read_consumed = 0;

status_t err;

do{

...

// pass the binder_write_read structure to the driver through ioctl

if (ioctl(mProcess->mDriverFD, BINDER_WRITE_READ,

&bwr) >= 0)

err = NO_ERROR;

else

err = -errno;

} while (err == -EINTR);

if (err >= NO_ERROR) {

if (bwr.write_consumed > 0) {

if (bwr.write_consumed < mOut.dataSize())

mOut.remove(0, bwr.write_consumed);

else {

mOut.setDataSize(0);

processPostWriteDerefs();

}

}

if (bwr.read_consumed > 0) {

mIn.setDataSize(bwr.read_consumed);

mIn.setDataPosition(0);

}

...

return NO_ERROR;

}

return err;

}

This uses an ioctl command to pass the Binder driver a request of type BINDER_WRITE_READ whose payload is a binder_write_read. Its write (output) buffer is the data written to mOut earlier.
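The buffer-size decisions at the top of talkWithDriver are easy to misread, so here they are isolated as a pure function. The logic follows the listing above; `IoPlan`, `planIo`, and `nothingToDo` are hypothetical names, and the Parcel accessors are replaced by plain numbers.

```cpp
#include <cassert>
#include <cstddef>

struct IoPlan {
    std::size_t write_size;
    std::size_t read_size;
};

// inPos/inSize describe mIn; outSize is mOut.dataSize();
// inCapacity is mIn.dataCapacity().
IoPlan planIo(bool doReceive, std::size_t inPos, std::size_t inSize,
              std::size_t outSize, std::size_t inCapacity) {
    // The read buffer counts as empty once everything in mIn was consumed.
    const bool needRead = inPos >= inSize;
    IoPlan plan{};
    // Do not write while unread input remains and the caller wants to read.
    plan.write_size = (!doReceive || needRead) ? outSize : 0;
    plan.read_size = (doReceive && needRead) ? inCapacity : 0;
    return plan;
}

// Mirrors the early return when both sizes are zero.
bool nothingToDo(const IoPlan& plan) {
    return plan.write_size == 0 && plan.read_size == 0;
}
```

In the addService call, mIn is empty and mOut holds the BC_TRANSACTION, so both sizes are non-zero and the driver first consumes the write buffer and then fills the read buffer.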

From here on the work happens inside the Binder driver. Keep in mind where the Client process is at this point.

/platform/drivers/staging/android/binder.c

static long binder_ioctl (struct file *filp,

unsigned int cmd,

unsigned long arg)

{

int ret;

struct binder_proc *proc = filp->private_data;

struct binder_thread *thread;

unsigned int size = _IOC_SIZE(cmd);

...

// find the binder_thread for the calling thread in this process, creating one if none exists

thread = binder_get_thread(proc);

...

switch (cmd) {

case BINDER_WRITE_READ:

ret = binder_ioctl_write_read (filp, cmd, arg,

thread);

if (ret)

goto err;

break;

...

}

The driver's binder_ioctl() is invoked, takes the BINDER_WRITE_READ branch, and calls binder_ioctl_write_read():

static int

binder_ioctl_write_read (struct file *filp,

unsigned int cmd, unsigned long arg,

struct binder_thread *thread)

{

int ret = 0;

// get the binder_proc from the file's private data

struct binder_proc *proc = filp->private_data;

// size of the argument encoded in cmd

unsigned int size = _IOC_SIZE(cmd);

void __user *ubuf = (void __user *)arg;

struct binder_write_read bwr;

// validate the argument size

if (size != sizeof (struct binder_write_read))

{

ret = -EINVAL;

goto out;

}

// copy the binder_write_read structure in from user space

if (copy_from_user(&bwr, ubuf, sizeof(bwr)))

{

ret = -EFAULT;

goto out;

}

...

// if the write (output) buffer has data, process it

if (bwr.write_size > 0) {

// this is where the write buffer is actually processed

ret = binder_thread_write(proc, thread,

bwr.write_buffer,

bwr.write_size,

&bwr.write_consumed);

trace_binder_write_done(ret);

if (ret < 0) {

bwr.read_consumed = 0;

if (copy_to_user(ubuf, &bwr, sizeof(bwr)))

ret = -EFAULT;

goto out;

}

}

// if a read (input) buffer was supplied, process it

if (bwr.read_size > 0) {

// this is where the read buffer is actually filled

ret = binder_thread_read (proc, thread, bwr.read_buffer,

bwr.read_size,

&bwr.read_consumed,

filp->f_flags & O_NONBLOCK);

trace_binder_read_done(ret);

// if the process todo queue is not empty, wake a thread waiting on the process to handle it

if (!list_empty(&proc->todo))

wake_up_interruptible(&proc->wait);

if (ret < 0) {

if (copy_to_user(ubuf, &bwr, sizeof(bwr)))

ret = -EFAULT;

goto out;

}

}

...

// copy bwr back to user space

if (copy_to_user(ubuf, &bwr, sizeof(bwr)))

{

ret = -EFAULT;

goto out;

}

out:

return ret;

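}

From the calls above, the write (output) buffer holds data while the read (input) buffer is still empty, so this function first calls binder_thread_write to process the write buffer: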

static int

binder_thread_write (struct binder_proc *proc,

struct binder_thread *thread,

binder_uintptr_t binder_buffer, size_t size,

binder_size_t *consumed)

{

uint32_t cmd;

void __user *buffer = (void __user *) (uintptr_t)binder_buffer;

void __user *ptr = buffer + *consumed;

void __user *end = buffer + size;

while (ptr < end && thread->return_error

== BR_OK) {

// read the next command code from the write buffer

if (get_user(cmd, (uint32_t __user *)ptr))

return -EFAULT;

ptr += sizeof(uint32_t);

...

switch (cmd) {

...

case BC_TRANSACTION:

case BC_REPLY: {

struct binder_transaction_data tr;

if (copy_from_user(&tr, ptr, sizeof(tr)))

return -EFAULT;

ptr += sizeof(tr);

binder_transaction(proc, thread, &tr, cmd

== BC_REPLY);

break;

...

}

*consumed = ptr - buffer;

}

return 0;

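}

This reads cmd and, for BC_TRANSACTION, copies the binder_transaction_data out of the buffer and hands it to binder_transaction.

binder_transaction is long, but it breaks down into three parts:

1. Determine the target process and thread.

2. Package the data to be delivered into a binder_transaction.

3. Queue that transaction on a suitable thread or process.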

static void

binder_transaction (struct binder_proc *proc,

struct binder_thread *thread,

struct binder_transaction_data *tr, int reply)

{

struct binder_transaction *t;

struct binder_work *tcomplete;

binder_size_t *offp, *off_end;

binder_size_t off_min;

struct binder_proc *target_proc;

struct binder_thread *target_thread = NULL;

struct binder_node *target_node = NULL;

struct list_head *target_list;

wait_queue_head_t *target_wait;

struct binder_transaction *in_reply_to = NULL;

...

uint32_t return_error;

...

// a reply takes the if branch, anything else the else branch; this call takes else

if (reply) {

...

}else{

// look up the binder_ref and binder_node for the handle

if (tr->target.handle) {

struct binder_ref *ref;

ref = binder_get_ref(proc, tr->target.handle);

...

} else {

// handle == 0: use the binder_node that refers to service_manager

target_node = binder_context_mgr_node;

...

}

...

// get the binder_proc that owns the binder_node; here it is service_manager

target_proc = target_node->proc;

...

// for a synchronous request, try to find a thread that is waiting on another transaction (a scheduling optimization)

if (!(tr->flags & TF_ONE_WAY) &&

thread->transaction_stack) {

struct binder_transaction *tmp;

tmp = thread->transaction_stack;

...

// find a thread in the target process that is waiting for another transaction to complete

while (tmp) {

if (tmp->from && tmp->from->proc

== target_proc)

target_thread = tmp->from;

tmp = tmp->from_parent;

}

}

}

// if a target thread was found, use its todo and wait queues; otherwise use the process's

if (target_thread) {

e->to_thread = target_thread->pid;

target_list = &target_thread->todo;

target_wait = &target_thread->wait;

} else {

target_list = &target_proc->todo;

target_wait = &target_proc->wait;

}

...

// allocate the binder_transaction (later typed BINDER_WORK_TRANSACTION),

// used to deliver data to the target process

t = kzalloc(sizeof(*t), GFP_KERNEL);

...

binder_stats_created(BINDER_STAT_TRANSACTION);

...

// allocate the binder_work (later typed BINDER_WORK_TRANSACTION_COMPLETE),

// used to report completion back to the source thread

tcomplete = kzalloc(sizeof (*tcomplete), GFP_KERNEL);

...

binder_stats_created (BINDER_STAT_TRANSACTION_COMPLETE);

...

// initialize t

if (!reply && !(tr->flags & TF_ONE_WAY))

t->from = thread;

else

t->from = NULL;

t->sender_euid = task_euid(proc->tsk);

// target process

t->to_proc = target_proc;

// target thread

t->to_thread = target_thread;

// code: ADD_SERVICE_TRANSACTION

t->code = tr->code;

// flags: TF_ACCEPT_FDS

t->flags = tr->flags;

// priority

t->priority = task_nice(current);

...

// allocate a kernel buffer for t from the target process

t->buffer = binder_alloc_buf(target_proc,

tr->data_size,

tr->offsets_size, !reply && (t->flags

& TF_ONE_WAY));

...

t->buffer->allow_user_free = 0;

t->buffer->debug_id = t->debug_id;

t->buffer->transaction = t;

t->buffer->target_node = target_node;

...

// take a reference on the target node, then locate the offsets array inside the buffer

if (target_node)

binder_inc_node(target_node, 1, 0, NULL);

offp = (binder_size_t *)(t->buffer->data

+

ALIGN(tr->data_size, sizeof(void *)));

// copy the data in from user space

if (copy_from_user(t->buffer->data, (const

void __user *)(uintptr_t)

tr->data.ptr.buffer, tr->data_size)) {

...

goto err_copy_data_failed;

}

if (copy_from_user(offp, (const void __user

*)(uintptr_t)

tr->data.ptr.offsets, tr->offsets_size))

{

...

goto err_copy_data_failed;

}

...

// process the Binder objects in the data; kernel code mostly works with start and end addresses

off_end = (void *)offp + tr->offsets_size;

off_min = 0;

for (; offp < off_end; offp++) {

struct flat_binder_object *fp;

...

// the MediaDrmService object stored earlier

fp = (struct flat_binder_object *) (t->buffer->data

+ *offp);

off_min = *offp + sizeof (struct flat_binder_object);

switch(fp->type){

case BINDER_TYPE_BINDER:

case BINDER_TYPE_WEAK_BINDER: {

struct binder_ref *ref;

struct binder_node *node =

binder_get_node(proc, fp->binder);

// create a new binder_node the first time this object is seen

if (node == NULL) {

node = binder_new_node (proc, fp->binder,

fp->cookie);

...

// minimum priority for threads handling this node

node->min_priority = fp->flags & FLAT_BINDER_FLAG_PRIORITY_MASK;

// whether the node accepts file descriptors

node->accept_fds = !!(fp->flags &

FLAT_BINDER_FLAG_ACCEPTS_FDS);

}

...

// get the binder_ref for this node in the target process, creating it the first time

ref = binder_get_ref_for_node(target_proc, node);

if (ref == NULL) {

return_error = BR_FAILED_REPLY;

goto err_binder_get_ref_for_node_failed;

}

// rewrite flat_binder_object.type

if (fp->type == BINDER_TYPE_BINDER)

fp->type = BINDER_TYPE_HANDLE;

else

fp->type = BINDER_TYPE_WEAK_HANDLE;

// set the handle

fp->handle = ref->desc;

// increase the binder_ref's reference count

binder_inc_ref(ref, fp->type == BINDER_TYPE_HANDLE,

&thread->todo);

...

}break;

...

}

}

// decide which transaction stack t is pushed onto

if (reply) {

...

binder_pop_transaction (target_thread, in_reply_to);

} else if (!(t->flags & TF_ONE_WAY))

{

...

t->need_reply = 1;

t->from_parent = thread->transaction_stack;

thread->transaction_stack = t;

} else {

...

if (target_node->has_async_transaction) {

target_list = &target_node->async_todo;

target_wait = NULL;

} else

target_node->has_async_transaction = 1;

}

// set the work type of the binder_transaction t to BINDER_WORK_TRANSACTION

t->work.type = BINDER_WORK_TRANSACTION;

// append it to the tail of target_list

list_add_tail(&t->work.entry, target_list);

// set the type of the binder_work tcomplete to BINDER_WORK_TRANSACTION_COMPLETE

tcomplete->type = BINDER_WORK_TRANSACTION_COMPLETE;

list_add_tail (&tcomplete->entry, &thread->todo);

// two paths run from here:

// 1. the target handles the binder_transaction of type BINDER_WORK_TRANSACTION

// 2. the source thread handles the binder_work of type BINDER_WORK_TRANSACTION_COMPLETE

if (target_wait)

wake_up_interruptible(target_wait);

return;

...

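}

After this function runs, the binder driver has created a binder_node for MediaDrmService and added it to the red-black tree of Binder entity objects. It then sends a BINDER_WORK_TRANSACTION_COMPLETE binder_work to the Client process and a BINDER_WORK_TRANSACTION binder_transaction to ServiceManager.

From this point, the source thread and target_proc or target_thread process the work items in their own todo queues concurrently.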
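The queueing at the end of binder_transaction can be modelled with two plain queues. In this sketch `Thread`, `Proc`, and `Work` are simplified stand-ins for binder_thread, binder_proc, and binder_work, `enqueueTransaction` is a hypothetical name, and waking the target is left out.

```cpp
#include <cassert>
#include <deque>

enum class WorkType { Transaction, TransactionComplete };

struct Work {
    WorkType type;
};

struct Thread {
    std::deque<Work> todo;
};

struct Proc {
    std::deque<Work> todo;
};

// Queue both work items the way the tail of binder_transaction() does:
// the transaction goes to the target, the completion to the sender.
void enqueueTransaction(Thread& source, Proc& targetProc, Thread* targetThread) {
    // Prefer a specific target thread if one was found, else the process queue.
    std::deque<Work>& targetList =
        targetThread ? targetThread->todo : targetProc.todo;
    targetList.push_back({WorkType::Transaction});
    source.todo.push_back({WorkType::TransactionComplete});
}
```

After the call, the sender finds TransactionComplete in its own queue on its next read, while the target picks up Transaction independently, which is the concurrency the paragraph above describes.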

Start with the source thread. Back in binder_ioctl_write_read, the next step is to process the read (input) buffer, which calls binder_thread_read:

static int

binder_thread_read (struct binder_proc *proc,

struct binder_thread *thread,

binder_uintptr_t binder_buffer, size_t size,

binder_size_t *consumed, int non_block)

{

void __user *buffer = (void __user *) (uintptr_t)binder_buffer;

void __user *ptr = buffer + *consumed;

void __user *end = buffer + size;

int ret = 0;

int wait_for_proc_work;

...

// runs once the thread has been woken up

while (1) {

uint32_t cmd;

struct binder_transaction_data tr;

struct binder_work *w;

struct binder_transaction *t = NULL;

// check the todo queues and assign the pending item to w

if (!list_empty(&thread->todo)) {

w = list_first_entry(&thread-> todo,

struct binder_work,

entry);

} else if (!list_empty(&proc->todo) &&

wait_for_proc_work) {

w = list_first_entry (&proc->todo, struct

binder_work,

entry);

} else {

/* no data added */

if (ptr - buffer == 4 &&

!(thread->looper & BINDER_LOOPER_STATE_NEED_RETURN))

goto retry;

break;

}

switch (w->type) {

...

case BINDER_WORK_TRANSACTION_COMPLETE: {

cmd = BR_TRANSACTION_COMPLETE;

if (put_user(cmd, (uint32_t __user *)ptr))

return -EFAULT;

ptr += sizeof(uint32_t);

binder_stat_br(proc, thread, cmd);

...

// remove the binder_work and free it

list_del(&w->entry);

kfree(w);

binder_stats_deleted (BINDER_STAT_TRANSACTION_COMPLETE);

} break;

}

done:

*consumed = ptr - buffer;

...

return 0;

}

This returns a BR_TRANSACTION_COMPLETE command, and after a series of returns control comes back to IPCThreadState#waitForResponse:

status_t IPCThreadState::waitForResponse

(Parcel *reply, status_t *acquireResult)

{

...

uint32_t cmd;

int32_t err;

while (1) {

...

cmd = (uint32_t)mIn.readInt32();

...

switch (cmd) {

case BR_TRANSACTION_COMPLETE:

if (!reply && !acquireResult) goto finish;

break;

}

...

}

finish:

...

return err;

}

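IPCThreadState::waitForResponse handles BR_TRANSACTION_COMPLETE simply: if the caller expects neither a reply nor an acquire result, it finishes immediately; otherwise, as in this synchronous call, it goes around the loop again to wait for the reply.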
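The loop can be reduced to a small state machine over the commands the driver returns. This is a sketch, not the libbinder code: the reply branch does not appear in the excerpt above and is assumed here to end the wait, and `Ret` and this free-standing `waitForResponse` are illustrative names.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

enum class Ret { TransactionComplete, Reply };

// Returns the index of the command that ends the wait,
// or -1 if the simulated stream runs out first.
int waitForResponse(const std::vector<Ret>& fromDriver, bool wantsReply) {
    for (std::size_t i = 0; i < fromDriver.size(); ++i) {
        switch (fromDriver[i]) {
        case Ret::TransactionComplete:
            // One-way callers are done; synchronous callers keep waiting.
            if (!wantsReply) return static_cast<int>(i);
            break;
        case Ret::Reply:
            // Assumed: a reply always finishes the call.
            return static_cast<int>(i);
        }
    }
    return -1;
}
```

For the addService call, wantsReply is true, so the completion notice alone does not finish the call; the client blocks in the driver again until ServiceManager answers.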

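Now look at how target_proc, that is ServiceManager, receives and handles the binder_transaction of type BINDER_WORK_TRANSACTION. Assuming ServiceManager had no communication in progress, it has been waiting in the Binder driver for a transaction to arrive. Now that one has arrived, it wakes up inside binder_thread_read: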

static int

binder_thread_read (struct binder_proc *proc,

struct binder_thread *thread,

binder_uintptr_t binder_buffer, size_t size,

binder_size_t *consumed, int non_block)

{

void __user *buffer = (void __user *) (uintptr_t)binder_buffer;

void __user *ptr = buffer + *consumed;

void __user *end = buffer + size;

...

// runs once the thread has been woken up

while (1) {

uint32_t cmd;

struct binder_transaction_data tr;

struct binder_work *w;

struct binder_transaction *t = NULL;

// check the todo queues and assign the pending item to w

if (!list_empty(&thread->todo)) {

w = list_first_entry(&thread->todo, struct

binder_work,

entry);

} else if (!list_empty (&proc->todo) &&

wait_for_proc_work) {

w = list_first_entry (&proc->todo, struct

binder_work,

entry);

} else {

/* no data added */

if (ptr - buffer == 4 &&

!(thread->looper & BINDER_LOOPER_STATE_NEED_RETURN))

goto retry;

break;

}

...

switch (w->type) {

case BINDER_WORK_TRANSACTION: {

t = container_of (w, struct binder_transaction,

work);

} break;

...

}

// build the return command; target_node is now binder_context_mgr_node

if (t->buffer->target_node) {

struct binder_node *target_node = t->buffer->target_node;

tr.target.ptr = target_node->ptr;

tr.cookie = target_node->cookie;

// save the current thread priority so it can be restored later

t->saved_priority = task_nice(current);

// adjust the priority of the handling thread (to match the Client thread's priority)

if (t->priority < target_node->min_priority

&&

!(t->flags & TF_ONE_WAY))

binder_set_nice(t->priority);

else if (!(t->flags & TF_ONE_WAY) ||

t->saved_priority > target_node->min_priority)

binder_set_nice(target_node->min_priority);

cmd = BR_TRANSACTION;

} else {

...

}

// copy code and flags;

// for service registration these are ADD_SERVICE_TRANSACTION and TF_ACCEPT_FDS

tr.code = t->code;

tr.flags = t->flags;

if (t->from) {

struct task_struct *sender = t->from->proc->tsk;

tr.sender_pid = task_tgid_nr_ns(sender,

task_active_pid_ns(current));

} else {

...

}

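// the kernel buffer the driver allocated for the process is mapped into both

// kernel and user address space, so only the address needs translating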

tr.data_size = t->buffer->data_size;

tr.offsets_size = t->buffer->offsets_size;

tr.data.ptr.buffer = (binder_uintptr_t)(

(uintptr_t)t->buffer->data +

proc->user_buffer_offset);

// the offsets array follows the aligned data

tr.data.ptr.offsets = tr.data.ptr.buffer +

ALIGN(t->buffer->data_size,

sizeof(void *));

// write the return command and its data into the user-space read buffer

if (put_user(cmd, (uint32_t __user *)ptr))

return -EFAULT;

ptr += sizeof(uint32_t);

if (copy_to_user(ptr, &tr, sizeof(tr)))

return -EFAULT;

ptr += sizeof(tr);

...

list_del(&t->work.entry);

t->buffer->allow_user_free = 1;

// for a synchronous BR_TRANSACTION, push t onto this thread's transaction stack

// so the reply can be routed back later; otherwise free t

if (cmd == BR_TRANSACTION && !(t->flags

& TF_ONE_WAY)) {

t->to_parent = thread->transaction_stack;

t->to_thread = thread;

thread->transaction_stack = t;

} else {

t->buffer->transaction = NULL;

kfree(t);

binder_stats_deleted(BINDER_STAT_TRANSACTION);

}

break;

}

...

return 0;

}

This returns a BR_TRANSACTION command and packs the data delivered through binder_transaction into a binder_transaction_data. service_manager passed svcmgr_handler as the handler when it entered binder_loop at startup, and binder_loop keeps exchanging data with the kernel through ioctl, so the data is now parsed by binder_parse:

platform/frameworks/native/cmds/servicemanager/binder.c

int binder_parse (struct binder_state *bs, struct

binder_io *bio,

uintptr_t ptr, size_t size, binder_handler func)

{

int r = 1;

uintptr_t end = ptr + (uintptr_t) size;

while (ptr < end) {

uint32_t cmd = *(uint32_t *) ptr;

ptr += sizeof(uint32_t);

...

case BR_TRANSACTION: {

struct binder_transaction_data *txn =

(struct binder_transaction_data *) ptr;

...

if (func) {

unsigned rdata[256/4];

struct binder_io msg;

struct binder_io reply;

int res;

bio_init(&reply, rdata, sizeof(rdata), 4);

bio_init_from_txn(&msg, txn);

// call svcmgr_handler

res = func(bs, txn, &msg, &reply);

if (txn->flags & TF_ONE_WAY) {

binder_free_buffer(bs, txn->data.ptr.buffer);

} else {

// send the registration result back to the binder driver

binder_send_reply(bs, &reply,

txn->data.ptr.buffer, res);

}

}

break;

}

}

binder_parse relies on the binder_io structure, which carries the data being transferred. It is defined as follows:

struct binder_io

{

char *data; /* pointer to read/write from */

binder_size_t *offs; /* array of offsets */

size_t data_avail; /* bytes available in data

buffer */

size_t offs_avail; /* entries available in offsets

array */

char *data0; /* start of data buffer */

binder_size_t *offs0; /* start of offsets buffer

*/

uint32_t flags;

uint32_t unused;

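};

binder_parse first initializes reply with bio_init and then initializes msg with bio_init_from_txn. This is just data alignment and flag setup, so it is not covered in detail here.

The focus is func, that is, svcmgr_handler. First recall the data layout figure from earlier:

Figure: writeTransactionData

Next, the implementation of svcmgr_handler: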

uint16_t svcmgr_id[]

= {

'a','n','d','r','o', 'i','d','.','o','s','.',

'I','S','e', 'r','v','i', 'c','e','M', 'a','n','a','g','e','r'

};

...

int svcmgr_handler(struct binder_state *bs,

struct binder_transaction_data *txn,

struct binder_io *msg,

struct binder_io *reply)

{

struct svcinfo *si;

uint16_t *s;

size_t len;

uint32_t handle;

uint32_t strict_policy;

int allow_isolated;

...

strict_policy = bio_get_uint32(msg);

s = bio_get_string16(msg, &len);

...

// Verify the interface token: it must be "android.os.IServiceManager"

if ((len != (sizeof(svcmgr_id) / 2)) ||

memcmp(svcmgr_id, s, sizeof(svcmgr_id))) {

fprintf(stderr,"invalid id %s\n",

str8(s, len));

return -1;

}

...

switch(txn->code) {

...

// This enum value corresponds to ADD_SERVICE_TRANSACTION

case SVC_MGR_ADD_SERVICE:

s = bio_get_string16(msg, &len);

if (s == NULL) {

return -1;

}

// Read the handle of the Binder reference object

handle = bio_get_ref(msg);

allow_isolated = bio_get_uint32(msg) ? 1 : 0;

dumpsys_priority = bio_get_uint32(msg);

if (do_add_service(bs, s, len, handle,

txn->sender_euid, allow_isolated, dumpsys_priority,

txn->sender_pid))

return -1;

break;

}

...

bio_put_uint32(reply, 0);

return 0;

}

|

The handler reads the Service's name and handle from msg, then calls do_add_service to carry out the rest of the registration.
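The interface token check at the top of svcmgr_handler can be isolated into a small sketch. The array contents are taken from the listing above; `token_matches` is a hypothetical helper name, and the sketch assumes the token has already been extracted as a UTF-16 array plus a length in code units.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* UTF-16 code units of "android.os.IServiceManager", as in the listing above. */
static const uint16_t svcmgr_id[] = {
    'a','n','d','r','o','i','d','.','o','s','.',
    'I','S','e','r','v','i','c','e','M','a','n','a','g','e','r'
};

/* Hypothetical helper: returns 1 when s (len code units) equals svcmgr_id. */
static int token_matches(const uint16_t *s, size_t len)
{
    /* Compare lengths in code units first, then the raw bytes. */
    if (len != sizeof(svcmgr_id) / sizeof(svcmgr_id[0]))
        return 0;
    return memcmp(svcmgr_id, s, sizeof(svcmgr_id)) == 0;
}
```

Checking the length before calling memcmp matters: without it, a shorter token would cause a read past the end of the caller's buffer.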

Before looking at do_add_service, first look at the svcinfo structure:

struct svcinfo

{

// Next entry in the list

struct svcinfo *next;

// Handle of the Binder reference

uint32_t handle;

// Death notification record

struct binder_death death;

int allow_isolated;

uint32_t dumpsys_priority;

size_t len;

// Service name (UTF-16)

uint16_t name[0];

}; |

In ServiceManager, every service is represented by one svcinfo structure.

Next, look at the implementation of do_add_service:

int do_add_service(struct binder_state *bs, const uint16_t *s,

size_t len, uint32_t handle, uid_t uid, int allow_isolated,

uint32_t dumpsys_priority, pid_t spid) {

// Entry for the service being registered

struct svcinfo *si;

...

// Check that the caller is allowed to register this service

if (!svc_can_register(s, len, spid, uid)) {

ALOGE("add_service('%s',%x) uid=%d - PERMISSION DENIED\n",

str8(s, len), handle, uid);

return -1;

}

// Look the service up first

si = find_svc(s, len);

if (si) {

if (si->handle) {

...

svcinfo_death(bs, si);

}

si->handle = handle;

} else {

// Not found: allocate and register a new entry

si = malloc(sizeof(*si) + (len + 1) * sizeof(uint16_t));

...

si->handle = handle;

si->len = len;

memcpy(si->name, s, (len + 1) * sizeof(uint16_t));

si->name[len] = '\0';

si->death.func = (void*) svcinfo_death;

si->death.ptr = si;

si->allow_isolated = allow_isolated;

si->dumpsys_priority = dumpsys_priority;

// Link the entry into svclist

si->next = svclist;

svclist = si;

}

// Take a reference so the Binder object is not destroyed

binder_acquire(bs, handle);

// Register for the death notification

binder_link_to_death(bs, handle, &si->death);

return 0;

} |

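This first checks whether the Service is allowed to register (the exact call differs with the kernel hardening of each version; see SELinux if you are interested). It then looks the service up by name. If an entry already exists, its old handle is released through svcinfo_death and replaced with the new one. If no entry exists, a new struct svcinfo is allocated and linked into svclist. service_manager therefore maintains svclist as the list of all registered Service components.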
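The find-or-insert pattern behind svclist can be sketched in isolation. This is a simplified illustration, not the servicemanager source: `mini_svc`, `mini_list`, `mini_find`, and `mini_add` are hypothetical names, the permission check and death notification are omitted, and the name is copied with an explicit terminator instead of relying on the caller's buffer containing one.

```c
#include <stdint.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical reduced svcinfo: list link, handle, and UTF-16 name only. */
struct mini_svc {
    struct mini_svc *next;
    uint32_t handle;
    size_t len;
    uint16_t name[];   /* flexible array member holding len + 1 code units */
};

static struct mini_svc *mini_list;  /* head of the registry */

/* Linear search by name; returns NULL when the name is not registered. */
static struct mini_svc *mini_find(const uint16_t *s, size_t len)
{
    for (struct mini_svc *si = mini_list; si; si = si->next) {
        if (si->len == len && !memcmp(si->name, s, len * sizeof(uint16_t)))
            return si;
    }
    return NULL;
}

/* Register or re-register a name; returns 0 on success, -1 if malloc fails. */
static int mini_add(const uint16_t *s, size_t len, uint32_t handle)
{
    struct mini_svc *si = mini_find(s, len);
    if (si) {
        si->handle = handle;   /* existing entry: only the handle changes */
        return 0;
    }
    si = malloc(sizeof(*si) + (len + 1) * sizeof(uint16_t));
    if (!si)
        return -1;
    si->handle = handle;
    si->len = len;
    memcpy(si->name, s, len * sizeof(uint16_t));
    si->name[len] = 0;
    si->next = mini_list;      /* push onto the front of the list */
    mini_list = si;
    return 0;
}
```

Storing the name in a flexible array member keeps each entry in a single allocation, which is the same layout choice the `name[0]` field in svcinfo expresses.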

Back in binder_parse, binder_send_reply is called next to send a BC_REPLY to the Binder driver:

|

void binder_send_reply (struct binder_state *bs,

struct binder_io *reply,

binder_uintptr_t buffer_to_free,

int status)

{

// Anonymous struct: two commands laid out back to back

struct {

uint32_t cmd_free;

binder_uintptr_t buffer;

uint32_t cmd_reply;

struct binder_transaction_data txn;

} __attribute__((packed)) data;

data.cmd_free = BC_FREE_BUFFER;

data.buffer = buffer_to_free;

data.cmd_reply = BC_REPLY;

data.txn.target.ptr = 0;

data.txn.cookie = 0;

data.txn.code = 0;

if (status) {

// The request failed: reply with the status code only

data.txn.flags = TF_STATUS_CODE;

data.txn.data_size = sizeof(int);

data.txn.offsets_size = 0;

data.txn.data.ptr.buffer = (uintptr_t)&status;

data.txn.data.ptr.offsets = 0;

} else {

// The request was handled successfully: reply with the data in reply

data.txn.flags = 0;

data.txn.data_size = reply->data - reply->data0;

data.txn.offsets_size = ((char*) reply->offs)

- ((char*) reply->offs0);

data.txn.data.ptr.buffer = (uintptr_t)reply->data0;

data.txn.data.ptr.offsets = (uintptr_t)reply->offs0;

}

// Write the commands to the Binder driver

binder_write(bs, &data, sizeof(data));

} |

The result of the request is written into the anonymous struct data, and binder_write then writes the BC_FREE_BUFFER and BC_REPLY command protocols to the kernel.
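The idea of packing two commands into one write buffer can be shown with a reduced example. Everything below is an illustrative assumption: `DEMO_FREE_BUFFER` and `DEMO_REPLY` are placeholder values (not the real BC_* encodings), `demo_txn` is a two-field stand-in for binder_transaction_data, and `demo_count_commands` only imitates how a consumer walks the stream. `__attribute__((packed))` is a GCC/Clang extension, as in the original listing.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Placeholder command values, NOT the real BC_* encodings. */
enum { DEMO_FREE_BUFFER = 1, DEMO_REPLY = 2 };

/* Hypothetical reduced payload standing in for binder_transaction_data. */
struct demo_txn {
    uint32_t flags;
    uint64_t data_size;
} __attribute__((packed));

/* Two commands back to back, with no padding between the fields. */
struct demo_reply_msg {
    uint32_t cmd_free;
    uint64_t buffer;
    uint32_t cmd_reply;
    struct demo_txn txn;
} __attribute__((packed));

/* Fill the message the way binder_send_reply fills its anonymous struct. */
static void demo_fill(struct demo_reply_msg *m, uint64_t buffer_to_free,
                      uint64_t reply_size)
{
    memset(m, 0, sizeof(*m));
    m->cmd_free = DEMO_FREE_BUFFER;
    m->buffer = buffer_to_free;
    m->cmd_reply = DEMO_REPLY;
    m->txn.data_size = reply_size;
}

/* Walk the stream: command word, then its payload.
 * Returns the number of commands, or -1 on a malformed stream. */
static int demo_count_commands(const void *buf, size_t len)
{
    const unsigned char *p = buf, *end = p + len;
    int n = 0;
    while (p < end) {
        uint32_t cmd;
        size_t payload;
        if ((size_t)(end - p) < sizeof(cmd))
            return -1;
        memcpy(&cmd, p, sizeof(cmd));
        p += sizeof(cmd);
        switch (cmd) {
        case DEMO_FREE_BUFFER: payload = sizeof(uint64_t); break;
        case DEMO_REPLY:       payload = sizeof(struct demo_txn); break;
        default:               return -1;
        }
        if ((size_t)(end - p) < payload)
            return -1;
        p += payload;
        n++;
    }
    return n;
}
```

Because the struct is packed, its in-memory bytes are exactly the command stream, so one write carries both commands and the consumer can step through them without knowing about the struct.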

| int binder_write(struct binder_state *bs, void *data, size_t len)

{

struct binder_write_read bwr;

int res;

bwr.write_size = len;

bwr.write_consumed = 0;

bwr.write_buffer = (uintptr_t) data;

bwr.read_size = 0;

bwr.read_consumed = 0;

bwr.read_buffer = 0;

res = ioctl(bs->fd, BINDER_WRITE_READ, &bwr);

if (res < 0) {

fprintf(stderr, "binder_write: ioctl failed (%s)\n",

strerror(errno));

}

return res;

} |

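binder_write still uses the BINDER_WRITE_READ ioctl to pass a binder_write_read structure. Note that its read buffer is empty (read_size is 0), so no return protocol has to be processed after the call.

Skipping the intermediate calls, here is how binder_thread_write in the kernel handles BC_FREE_BUFFER and BC_REPLY: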

| platform/drivers/staging/android/binder.c

static int binder_thread_write(struct binder_proc *proc,

struct binder_thread *thread,

binder_uintptr_t binder_buffer, size_t size,

binder_size_t *consumed)

{

uint32_t cmd;

void __user *buffer = (void __user *) (uintptr_t)binder_buffer;

void __user *ptr = buffer + *consumed;

void __user *end = buffer + size;

while (ptr < end && thread->return_error == BR_OK) {

if (get_user(cmd, (uint32_t __user *)ptr))

return -EFAULT;

ptr += sizeof(uint32_t);

...

switch (cmd) {

...

case BC_TRANSACTION:

case BC_REPLY: {

struct binder_transaction_data tr;

if (copy_from_user(&tr, ptr, sizeof(tr)))

return -EFAULT;

ptr += sizeof(tr);

binder_transaction(proc, thread, &tr, cmd == BC_REPLY);

break;

}

...

} |

BC_FREE_BUFFER mainly releases resources and is not examined here.

The focus is BC_REPLY, which is handled by binder_transaction:

| static void binder_transaction(struct binder_proc *proc,

struct binder_thread *thread,

struct binder_transaction_data *tr, int reply)

{

...

struct binder_transaction *t;

struct binder_work *tcomplete;

binder_size_t *offp, *off_end;

binder_size_t off_min;

struct binder_proc *target_proc;

struct binder_thread *target_thread = NULL;

struct binder_node *target_node = NULL;

struct list_head *target_list;

wait_queue_head_t *target_wait;

struct binder_transaction *in_reply_to = NULL;

struct binder_transaction_log_entry *e;

uint32_t return_error;

...

if (reply) {

// Reply path: find the thread that made the original request

// Take the pending binder_transaction from this thread's transaction stack

in_reply_to = thread->transaction_stack;

if (in_reply_to == NULL) {

...

return_error = BR_FAILED_REPLY;

goto err_empty_call_stack;

}

// Restore the thread's saved priority

binder_set_nice(in_reply_to->saved_priority);

if (in_reply_to->to_thread != thread) {

...

return_error = BR_FAILED_REPLY;

in_reply_to = NULL;

goto err_bad_call_stack;

}

thread->transaction_stack = in_reply_to->to_parent;

target_thread = in_reply_to->from;

if (target_thread == NULL) {

return_error = BR_DEAD_REPLY;

goto err_dead_binder;

}

if (target_thread->transaction_stack != in_reply_to) {

...

return_error = BR_FAILED_REPLY;

in_reply_to = NULL;

target_thread = NULL;

goto err_dead_binder;

}

// The target process is the one that owns the requesting thread

target_proc = target_thread->proc;

}else{

...

}

// If a target thread is known, use that thread's todo list and wait queue; otherwise use the process's

if (target_thread) {

...

target_list = &target_thread->todo;

target_wait = &target_thread->wait;

} else {

...

}

...

// Allocate the binder_transaction that will be queued as BINDER_WORK_TRANSACTION

t = kzalloc(sizeof(*t), GFP_KERNEL);

...

// Allocate the binder_work that will be queued as BINDER_WORK_TRANSACTION_COMPLETE

tcomplete = kzalloc(sizeof(*tcomplete), GFP_KERNEL);

...

// Decide which transaction stack t belongs to

if (reply) {

BUG_ON(t->buffer->async_transaction != 0);

// Pop the request being answered off the target thread's transaction stack

binder_pop_transaction(target_thread, in_reply_to);

} else if (!(t->flags & TF_ONE_WAY)) {

...

}else{

...

}

// Mark t's work item as BINDER_WORK_TRANSACTION

t->work.type = BINDER_WORK_TRANSACTION;

// Append it to the tail of target_list

list_add_tail(&t->work.entry, target_list);

// Mark tcomplete as BINDER_WORK_TRANSACTION_COMPLETE and queue it on the calling thread

tcomplete->type = BINDER_WORK_TRANSACTION_COMPLETE;

list_add_tail(&tcomplete->entry, &thread->todo);

// Two work items are now pending:

// 1. the binder_transaction of type BINDER_WORK_TRANSACTION, for the target

// 2. the binder_work of type BINDER_WORK_TRANSACTION_COMPLETE, for the caller

if (target_wait)

wake_up_interruptible(target_wait);

return;

...

}

|
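The stack bookkeeping on the reply path in the listing above can be sketched with two small structures. This is a simplified model under stated assumptions: `sk_txn`, `sk_thread`, `sk_begin`, and `sk_reply` are hypothetical names, locking, priorities, and error codes are left out, and the consistency checks are done before either stack is modified, which is not the exact order the driver uses.

```c
#include <stddef.h>

struct sk_thread;

/* Hypothetical reduced binder_transaction: only the links used on reply. */
struct sk_txn {
    struct sk_thread *from;       /* thread that sent the request */
    struct sk_thread *to_thread;  /* thread that is handling it */
    struct sk_txn *from_parent;   /* next entry on the sender's stack */
    struct sk_txn *to_parent;     /* next entry on the handler's stack */
};

struct sk_thread {
    struct sk_txn *transaction_stack;
};

/* Record a synchronous request from 'from' that 'to' is now handling. */
static void sk_begin(struct sk_txn *t, struct sk_thread *from,
                     struct sk_thread *to)
{
    t->from = from;
    t->to_thread = to;
    t->from_parent = from->transaction_stack;
    t->to_parent = to->transaction_stack;
    from->transaction_stack = t;
    to->transaction_stack = t;
}

/* Handle a reply from 'replier'. Returns the thread to wake up,
 * or NULL when the transaction stacks are inconsistent. */
static struct sk_thread *sk_reply(struct sk_thread *replier)
{
    struct sk_txn *in_reply_to = replier->transaction_stack;
    if (!in_reply_to || in_reply_to->to_thread != replier)
        return NULL;
    struct sk_thread *target = in_reply_to->from;
    if (!target || target->transaction_stack != in_reply_to)
        return NULL;
    /* Pop the answered request from both stacks. */
    replier->transaction_stack = in_reply_to->to_parent;
    target->transaction_stack = in_reply_to->from_parent;
    return target;
}
```

The two checks mirror the ones in the listing: the replying thread must be the one the request was delivered to, and the request must still be on top of the sender's stack.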

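Unlike the earlier call, this one takes the if (reply) branch. It finds the thread and process that made the original request, pops the binder_transaction of that request off the transaction stack, and then sends a binder_work of type BINDER_WORK_TRANSACTION to the requesting process. That process handles it in binder_thread_read as follows: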

| static int binder_thread_read(struct binder_proc *proc,

struct binder_thread *thread,

binder_uintptr_t binder_buffer, size_t size,

binder_size_t *consumed, int non_block)

{

void __user *buffer = (void __user *)(uintptr_t)binder_buffer;

void __user *ptr = buffer + *consumed;

void __user *end = buffer + size;

int ret = 0;

...

// The thread has been woken up

while (1) {

uint32_t cmd;

struct binder_transaction_data tr;

struct binder_work *w;

struct binder_transaction *t = NULL;

// Pick the next work item and assign it to w: the thread's own todo list first, then the process's

if (!list_empty(&thread->todo)) {

w = list_first_entry(&thread->todo, struct binder_work, entry);

} else if (!list_empty(&proc->todo) && wait_for_proc_work) {

w = list_first_entry(&proc->todo, struct binder_work, entry);

} else {

...

}

...

switch (w->type) {

case BINDER_WORK_TRANSACTION: {

t = container_of(w, struct binder_transaction, work);

} break;

...

}

// Build the return command. For a reply, target_node is NULL, so the else branch sets BR_REPLY

if (t->buffer->target_node) {

...

}else{

tr.target.ptr = 0;

tr.cookie = 0;

cmd = BR_REPLY;

}

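// The kernel buffer allocated by the Binder driver is mapped into both kernel

// and user address space; adding user_buffer_offset gives the user-space address

tr.data_size = t->buffer->data_size;

tr.offsets_size = t->buffer->offsets_size;

tr.data.ptr.buffer = (binder_uintptr_t)(

(uintptr_t)t->buffer->data + proc->user_buffer_offset);

// The offsets array follows the pointer-aligned data

tr.data.ptr.offsets = tr.data.ptr.buffer +

ALIGN(t->buffer->data_size, sizeof(void *));

// Copy the return command and its payload into the caller's read buffer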

if (put_user(cmd, (uint32_t __user *)ptr))

return -EFAULT;

ptr += sizeof(uint32_t);

if (copy_to_user(ptr, &tr, sizeof(tr)))

return -EFAULT;

ptr += sizeof(tr);

...

// A synchronous BR_TRANSACTION must be kept until its reply arrives;

// everything else, including BR_REPLY, can be freed right away

if (cmd == BR_TRANSACTION && !(t->flags & TF_ONE_WAY)) {

...

} else {

t->buffer->transaction = NULL;

kfree(t);

binder_stats_deleted(BINDER_STAT_TRANSACTION);

}

break;

}

...

} |

This wraps the data in a BR_REPLY return protocol and returns to IPCThreadState::waitForResponse.
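The address arithmetic used when filling tr in the listing above can be worked through with plain integers. The sketch below is only an illustration: `DEMO_ALIGN`, `demo_view`, and `demo_translate` are hypothetical names, and the addresses in any usage are made-up numbers rather than real mappings.

```c
#include <stddef.h>
#include <stdint.h>

/* Round x up to the next multiple of a; a must be a power of two. */
#define DEMO_ALIGN(x, a) (((x) + ((a) - 1)) & ~((uintptr_t)(a) - 1))

/* Where the receiver finds the payload and the offsets array. */
struct demo_view {
    uintptr_t buffer;   /* user-space address of the payload */
    uintptr_t offsets;  /* user-space address of the offsets array */
};

/* kernel_data: kernel address of the payload.
 * user_offset: difference between the user and kernel mappings.
 * data_size:   payload size in bytes. */
static struct demo_view demo_translate(uintptr_t kernel_data,
                                       ptrdiff_t user_offset,
                                       size_t data_size)
{
    struct demo_view v;
    v.buffer = kernel_data + (uintptr_t)user_offset;
    /* The offsets array starts at the next pointer-aligned address. */
    v.offsets = v.buffer + DEMO_ALIGN((uintptr_t)data_size, sizeof(void *));
    return v;
}
```

For instance, a 13-byte payload on a platform with 8-byte pointers is rounded up to 16 bytes, so the offsets array begins 16 bytes after the start of the payload. No data is copied for this step; only the address handed to user space changes.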

Next, see how IPCThreadState::waitForResponse handles BR_REPLY:

| status_t IPCThreadState::waitForResponse(Parcel *reply, status_t *acquireResult)

{

uint32_t cmd;

...

while (1) {

...

cmd = (uint32_t)mIn.readInt32();

...

switch (cmd) {

case BR_REPLY:

{

binder_transaction_data tr;

err = mIn.read(&tr, sizeof(tr));

...

if (reply) {

if ((tr.flags & TF_STATUS_CODE) == 0) {

// Point the Parcel at the received buffer and register freeBuffer as its release function

reply->ipcSetDataReference(

reinterpret_cast<const uint8_t*>(tr.data.ptr.buffer),

tr.data_size,

reinterpret_cast<const binder_size_t*>(tr.data.ptr.offsets),

tr.offsets_size/sizeof(binder_size_t),

freeBuffer, this);

} else {

...

}

} else {

...

}

}

goto finish;

...

}

}

...

}

|

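In effect, reply->ipcSetDataReference is called to reset the Parcel's data buffer, which is not covered in detail here. At this point Service registration is essentially complete. Starting the Binder threads comes afterwards; read the source if you are interested.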

Looking Up a Service Through ServiceManager

@Service lookup flow|center

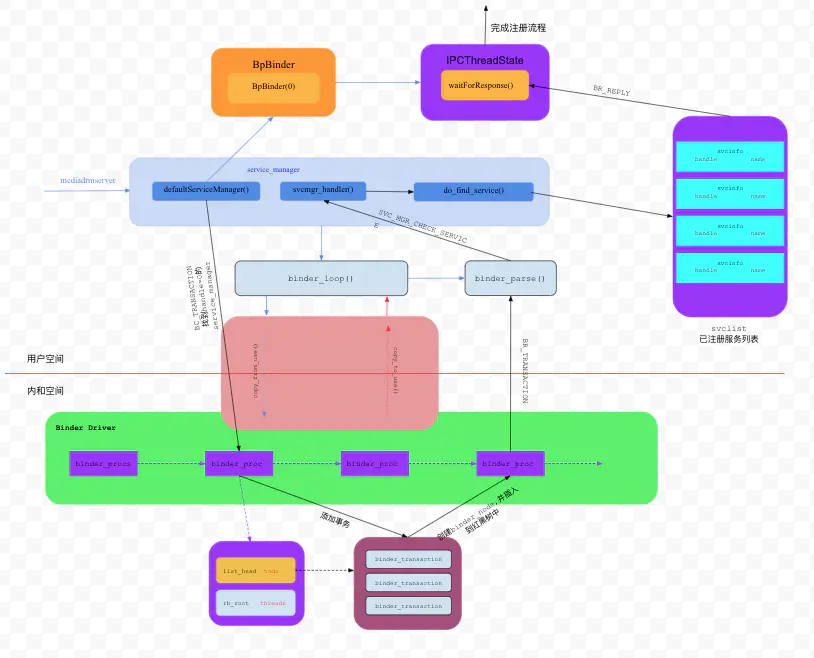

The Complete Communication Flow

@Complete communication flow

|