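Editor's recommendation:

This article introduces TensorBoard, the model visualization tool that ships with TensorFlow. With it you can clearly see a network's structure, its data flow, how the loss function changes, and other data related to the model. We hope you find it helpful.

This article comes from CSDN and was edited and recommended by Delores of 火龙果软件.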

Network structure

To use TensorBoard only for viewing the network structure, add the following line to the program:

writer = tf.summary.FileWriter('C:\\Users\\45374\\logs', sess.graph)

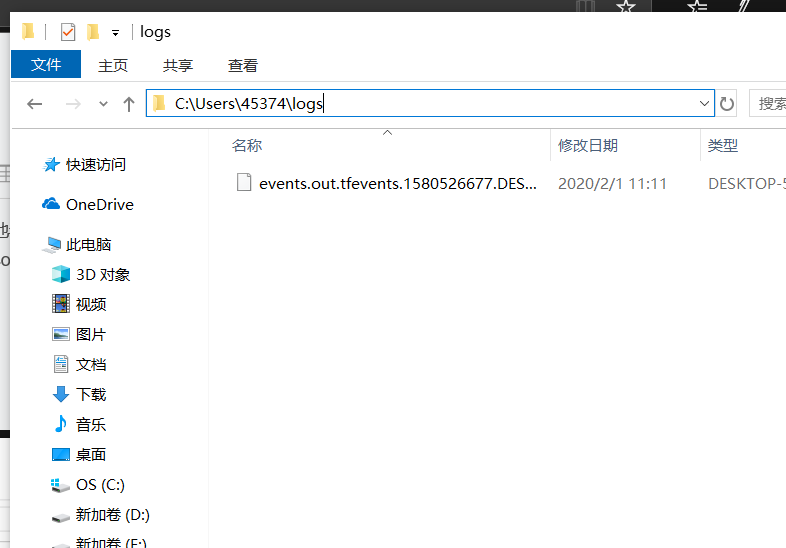

After the program has run, an event file is generated in that directory, as shown below.

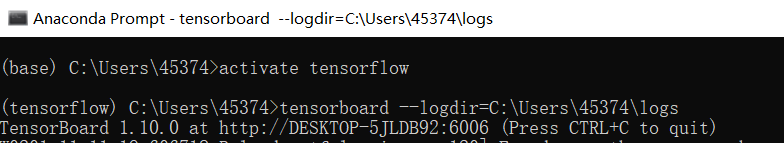

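Now open cmd (I created my TensorFlow environment under Anaconda, so I use the Anaconda Prompt and first activate the tensorflow environment) and enter tensorboard --logdir='path' to get the following result:

(If you did not create a separate TensorFlow environment, you do not need the activate tensorflow command that comes first. Note that tensorflow here is just the name I gave my environment.) In most cases you only need to enter tensorboard --logdir='path' in cmd.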

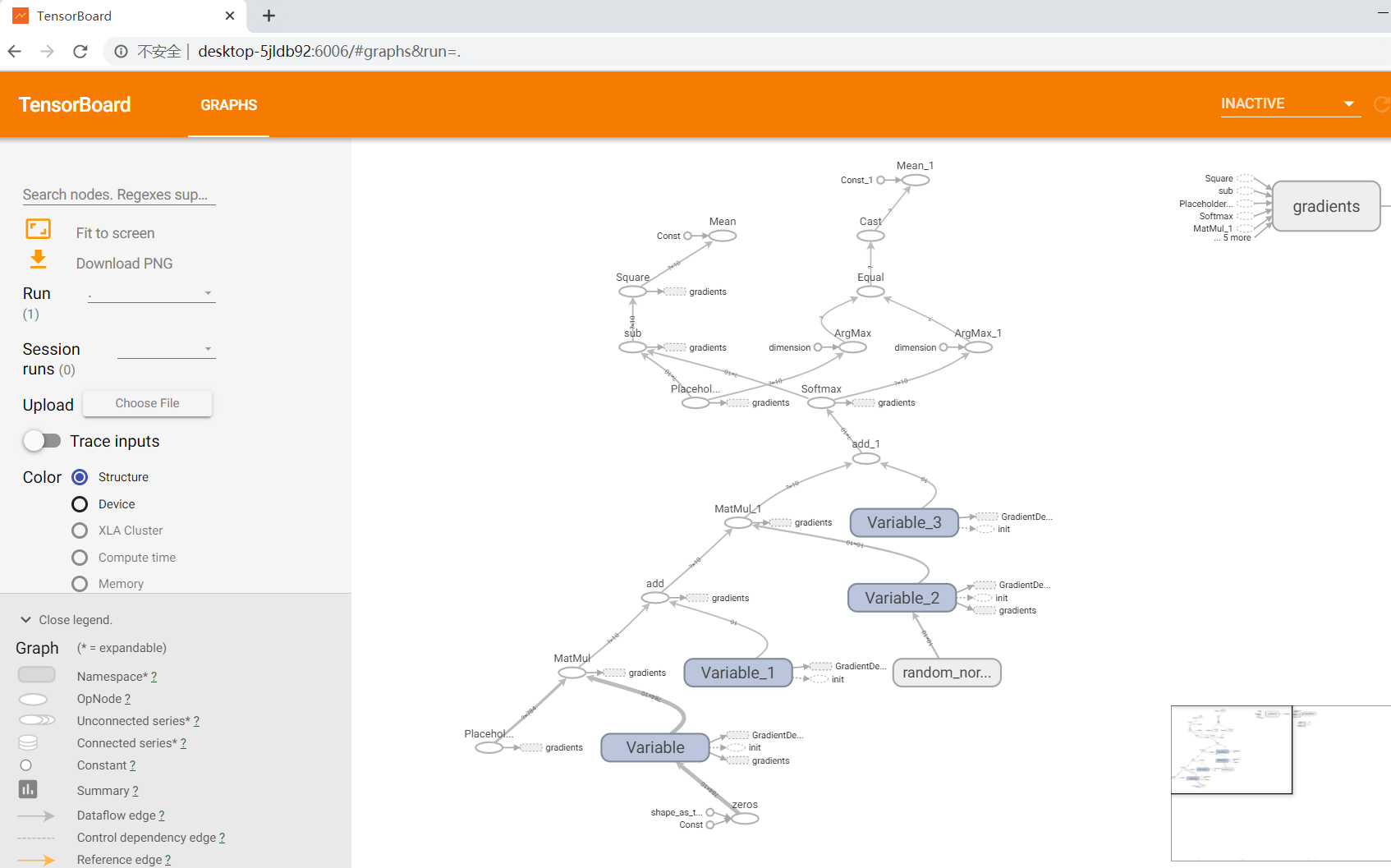
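The same launch command can be assembled from a script. The sketch below only builds the argument list and does not start anything. The helper name `tensorboard_argv` is hypothetical, and the sketch assumes the `tensorboard` executable is on the PATH of the active environment.

```python
import subprocess

def tensorboard_argv(logdir, port=None):
    """Build the argument list for launching TensorBoard on `logdir`."""
    argv = ["tensorboard", "--logdir", logdir]
    if port is not None:
        # --port selects the port of the local web server
        argv += ["--port", str(port)]
    return argv

argv = tensorboard_argv(r"C:\Users\45374\logs")
# To actually launch it (blocks until interrupted): subprocess.run(argv)
print(subprocess.list2cmdline(argv))
```

Passing the directory as its own list element sidesteps shell quoting, which is convenient for Windows paths that contain backslashes or spaces.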

TensorBoard then prints a URL. Copy it and open it in a browser (Firefox or Chrome is recommended; some browsers may fail to open it), as shown below:

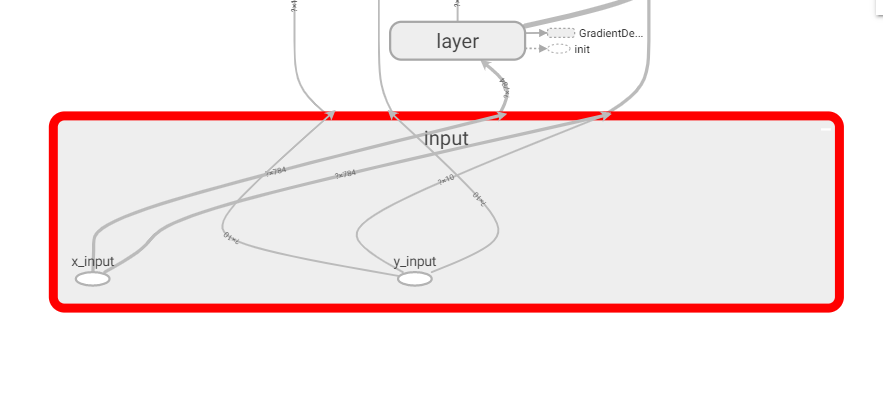

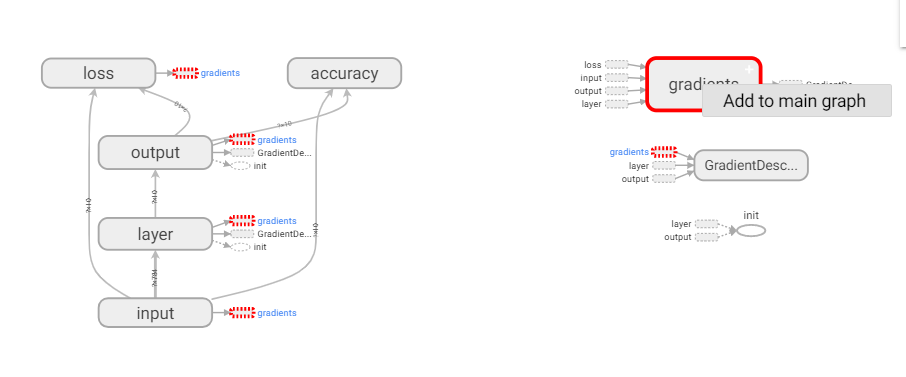

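This graph is rather cluttered. The four variables in it are the two pairs of W and b. To make the network structure clearer, we can place some variables and operations in name scopes so the graph is easier to read. The code can be modified as follows: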

with tf.name_scope("input"):
    x = tf.placeholder(tf.float32, [None, 784], name='x_input')
    y = tf.placeholder(tf.float32, [None, 10], name='y_input')
# input layer to hidden layer
with tf.name_scope('layer'):
    with tf.name_scope('wights'):
        # W is initialized to 0 here, which can converge faster
        W = tf.Variable(tf.zeros([784, 10]))
    with tf.name_scope('biases'):
        b = tf.Variable(tf.zeros([10]))
    with tf.name_scope('Wx_plus_b_L1'):
        Wx_plus_b_L1 = tf.matmul(x, W) + b
# hidden layer to output layer
with tf.name_scope('output'):
    with tf.name_scope('wights'):
        # the hidden-layer weights must not be initialized to 0
        W2 = tf.Variable(tf.random_normal([10, 10]))
    with tf.name_scope('biases'):
        b2 = tf.Variable(tf.zeros([10]))
    with tf.name_scope('softmax'):
        prediction = tf.nn.softmax(tf.matmul(Wx_plus_b_L1, W2) + b2)
# quadratic cost function
with tf.name_scope('loss'):
    loss = tf.reduce_mean(tf.square(y - prediction))
# train with gradient descent, learning rate 0.2
train_step = tf.train.GradientDescentOptimizer(0.2).minimize(loss)
init = tf.global_variables_initializer()
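Name scopes work by prefixing the names of the ops created inside them, and TensorBoard groups nodes by those '/'-separated prefixes. The following is a toy model in plain Python, not TensorFlow's implementation. The functions `name_scope` and `full_name` are hypothetical stand-ins used only to illustrate how the nesting produces hierarchical names.

```python
from contextlib import contextmanager

_scope_stack = []  # currently open scopes, outermost first

@contextmanager
def name_scope(name):
    """Toy stand-in for tf.name_scope: push a prefix, pop it on exit."""
    _scope_stack.append(name)
    try:
        yield
    finally:
        _scope_stack.pop()

def full_name(op_name):
    """Return op_name prefixed with every enclosing scope, '/'-separated."""
    return "/".join(_scope_stack + [op_name])

with name_scope("layer"):
    with name_scope("wights"):
        w_name = full_name("Variable")
    with name_scope("biases"):
        b_name = full_name("Variable")

print(w_name)  # layer/wights/Variable
print(b_name)  # layer/biases/Variable
```

Because both variables share the `layer` prefix, a viewer that groups by prefix can collapse them into a single `layer` node, which is what makes the graph easier to read.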

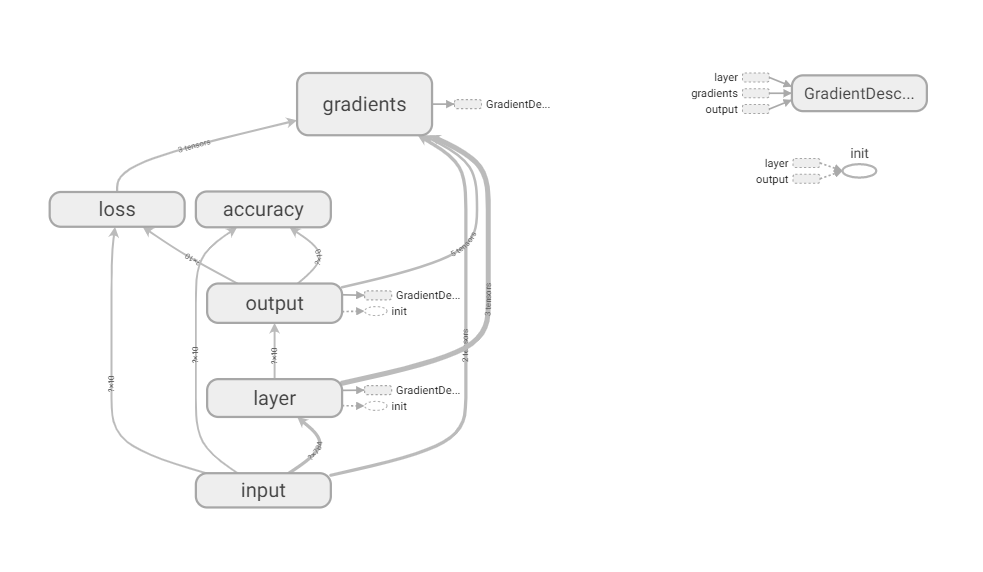

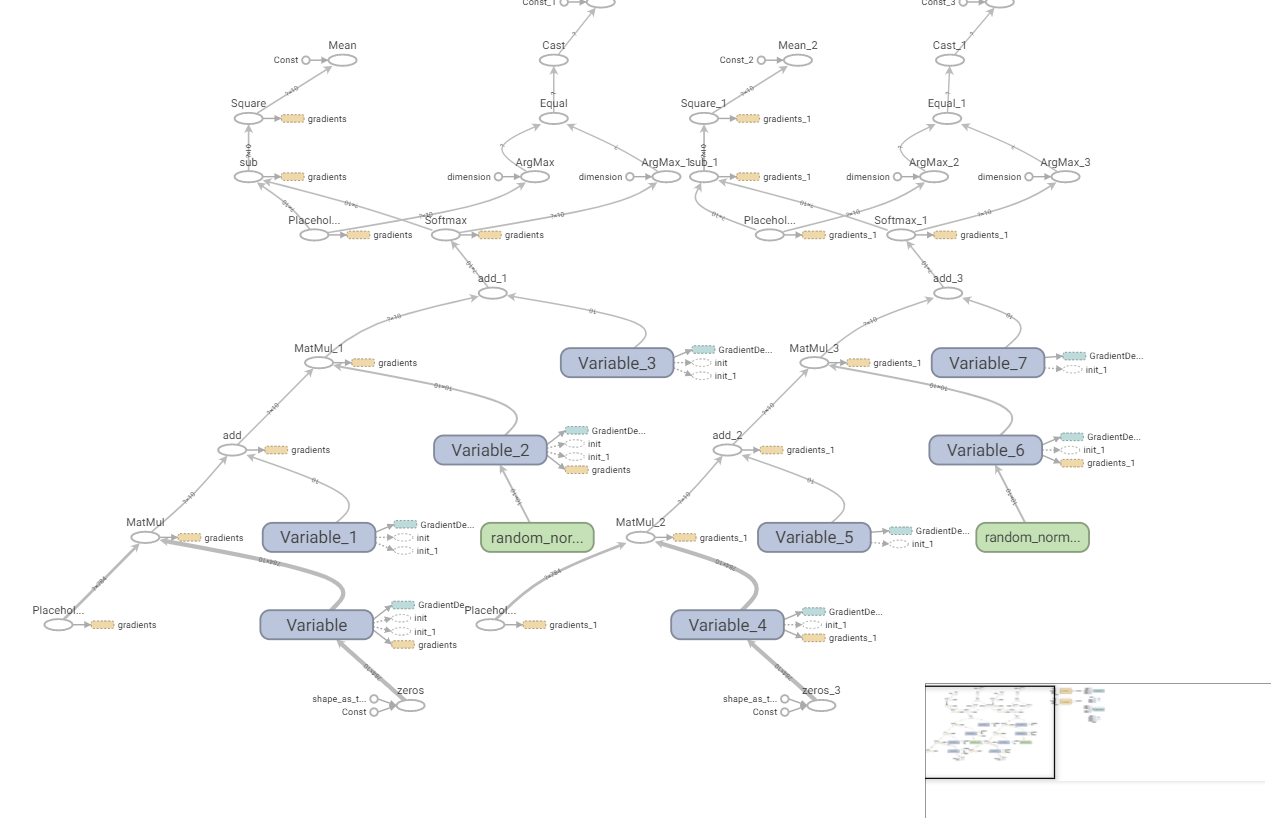

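The network structure shown in TensorBoard now looks like this:

Double-click any name scope to expand it and view its internal structure:

You can also right-click a part of the graph to pull it out of the main network or put it back in:

Note, however, that if you run the program several times, you must first close the program and delete the old event files (the events.out files) in the logs folder before running it again. Otherwise several networks may be displayed at the same time, as shown below: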

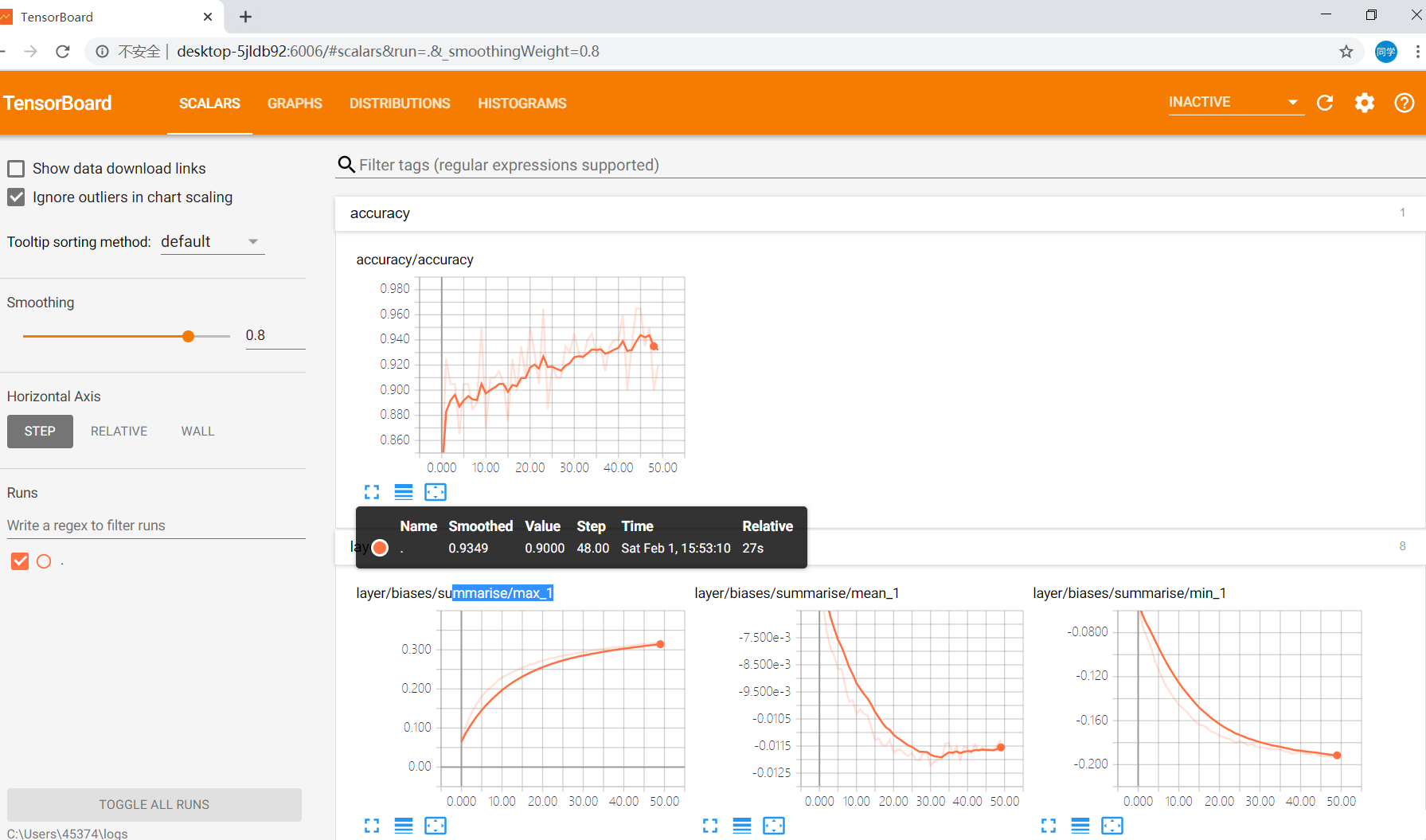

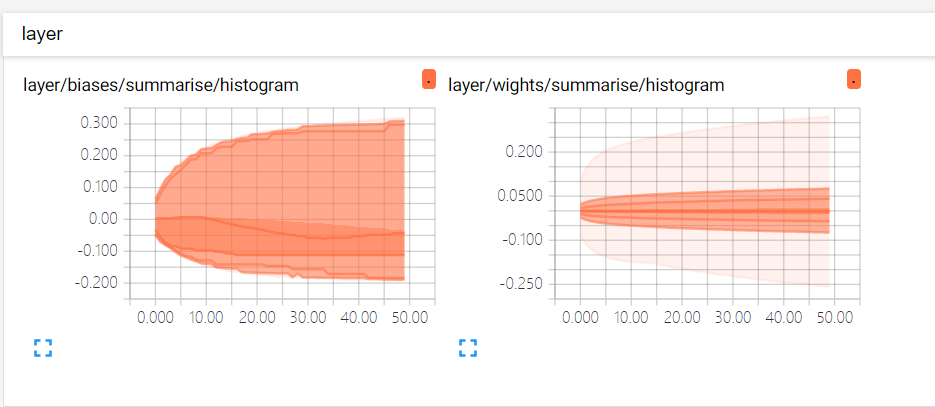
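Clearing the old event files by hand before every run is easy to forget. Here is a minimal sketch of a cleanup helper, assuming the event files sit directly in the log directory and have names that start with `events.out.tfevents`. The helper name `remove_event_files` is hypothetical.

```python
import os

def remove_event_files(logdir):
    """Delete TensorBoard event files directly inside `logdir`; return their names."""
    removed = []
    for name in sorted(os.listdir(logdir)):
        path = os.path.join(logdir, name)
        # Assumption: event files are named events.out.tfevents.<suffix>
        if os.path.isfile(path) and name.startswith("events.out.tfevents"):
            os.remove(path)
            removed.append(name)
    return removed
```

One possible use is to call `remove_event_files('C:\\Users\\45374\\logs')` just before creating the `tf.summary.FileWriter`, so that each run starts from an empty log directory.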

Parameter changes

Many parameters change while the network is training, and we can display how they change over time.

We can first define a function:

def variable_summarise(var):
    with tf.name_scope('summarise'):
        mean = tf.reduce_mean(var)
        tf.summary.scalar('mean', mean)
        with tf.name_scope('stddev'):
            stddev = tf.sqrt(tf.reduce_mean(tf.square(var - mean)))
        # tf.summary.scalar records a scalar
        # tf.summary.histogram records a histogram
        tf.summary.scalar('srddev', stddev)
        tf.summary.scalar('max', tf.reduce_max(var))
        tf.summary.scalar('min', tf.reduce_min(var))
        tf.summary.histogram('histogram', var)

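The function defines the information we want to record. To record that information for a particular variable, call the function on that variable.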
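To make concrete what each scalar recorded by variable_summarise represents, here is a sketch in plain Python that applies the same formulas to a flat list of numbers. It does not use TensorFlow, and the helper name `summary_stats` and the sample values are made up for illustration.

```python
import math

def summary_stats(values):
    """Compute the scalars that variable_summarise records, for a flat list."""
    n = len(values)
    mean = sum(values) / n
    # Same formula as the graph: sqrt(mean(square(var - mean)))
    stddev = math.sqrt(sum((v - mean) ** 2 for v in values) / n)
    return {"mean": mean, "stddev": stddev, "max": max(values), "min": min(values)}

stats = summary_stats([1.0, 2.0, 3.0, 4.0])
print(stats)
```

Note that the standard deviation divides by n rather than n - 1, matching the `tf.reduce_mean` in the function above.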

The loss and the accuracy each produce only one scalar value at a time, so a single summary.scalar call is enough for each:

with tf.name_scope('loss'):
    loss = tf.reduce_mean(tf.square(y - prediction))
    tf.summary.scalar('loss', loss)
with tf.name_scope('accuracy'):
    # tf.equal tests element-wise equality; tf.argmax returns the index of the largest value
    correct_prediction = tf.equal(tf.argmax(y, 1), tf.argmax(prediction, 1))
    # cast the booleans to floats
    accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))
    tf.summary.scalar('accuracy', accuracy)
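The two scalars above can be worked through by hand on a tiny batch. The sketch below uses plain Python lists instead of tensors and three classes instead of ten. The helper names and sample numbers are invented for illustration.

```python
def quadratic_cost(labels, predictions):
    """Mean of squared differences over every element of the batch."""
    diffs = [(y - p) ** 2
             for row_y, row_p in zip(labels, predictions)
             for y, p in zip(row_y, row_p)]
    return sum(diffs) / len(diffs)

def argmax(row):
    """Index of the largest value in a row (the first one on ties)."""
    return max(range(len(row)), key=lambda i: row[i])

def accuracy(labels, predictions):
    """Fraction of rows whose predicted class matches the one-hot label."""
    correct = [argmax(y) == argmax(p) for y, p in zip(labels, predictions)]
    # Booleans become 1.0 / 0.0, then are averaged, like tf.cast + tf.reduce_mean
    return sum(1.0 if c else 0.0 for c in correct) / len(correct)

labels = [[0, 1, 0], [1, 0, 0], [0, 0, 1]]
predictions = [[0.1, 0.8, 0.1], [0.3, 0.6, 0.1], [0.2, 0.2, 0.6]]
print(accuracy(labels, predictions))        # 2 of 3 rows are classified correctly
print(quadratic_cost(labels, predictions))
```

The second row is the misclassified one: its label is class 0, but the largest predicted value is at index 1.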

Then merge all the summaries:

merged = tf.summary.merge_all()

Run the merged op together with the training step:

summary, _ = sess.run([merged, train_step], feed_dict={x: batch_xs, y: batch_ys})

Finally, write the data in summary to the file:

writer.add_summary(summary, epoch)

You can then view the data you just recorded in TensorBoard:

The complete code is as follows:

# This code uses the TensorFlow 1.x API
import tensorflow as tf
from tensorflow.examples.tutorials.mnist import input_data

# load the data
mnist = input_data.read_data_sets("E:/mnist", one_hot=True)
# size of each batch
batch_size = 200
# number of batches per epoch (integer division)
n_batch = mnist.train.num_examples // batch_size

def variable_summarise(var):
    with tf.name_scope('summarise'):
        mean = tf.reduce_mean(var)
        tf.summary.scalar('mean', mean)
        with tf.name_scope('stddev'):
            stddev = tf.sqrt(tf.reduce_mean(tf.square(var - mean)))
        tf.summary.scalar('srddev', stddev)
        tf.summary.scalar('max', tf.reduce_max(var))
        tf.summary.scalar('min', tf.reduce_min(var))
        tf.summary.histogram('histogram', var)

with tf.name_scope("input"):
    x = tf.placeholder(tf.float32, [None, 784], name='x_input')
    y = tf.placeholder(tf.float32, [None, 10], name='y_input')
# input layer to hidden layer
with tf.name_scope('layer'):
    with tf.name_scope('wights'):
        # W is initialized to 0 here, which can converge faster
        W = tf.Variable(tf.zeros([784, 10]))
        variable_summarise(W)
    with tf.name_scope('biases'):
        b = tf.Variable(tf.zeros([10]))
        variable_summarise(b)
    with tf.name_scope('Wx_plus_b_L1'):
        Wx_plus_b_L1 = tf.matmul(x, W) + b
# hidden layer to output layer
with tf.name_scope('output'):
    with tf.name_scope('wights'):
        # the hidden-layer weights must not be initialized to 0
        W2 = tf.Variable(tf.random_normal([10, 10]))
    with tf.name_scope('biases'):
        b2 = tf.Variable(tf.zeros([10]))
    with tf.name_scope('softmax'):
        prediction = tf.nn.softmax(tf.matmul(Wx_plus_b_L1, W2) + b2)
# quadratic cost function
with tf.name_scope('loss'):
    loss = tf.reduce_mean(tf.square(y - prediction))
    tf.summary.scalar('loss', loss)
# train with gradient descent, learning rate 0.2
train_step = tf.train.GradientDescentOptimizer(0.2).minimize(loss)
init = tf.global_variables_initializer()
# compute the accuracy
with tf.name_scope('accuracy'):
    # tf.equal tests element-wise equality; tf.argmax returns the index of the largest value
    correct_prediction = tf.equal(tf.argmax(y, 1), tf.argmax(prediction, 1))
    # cast the booleans to floats
    accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))
    tf.summary.scalar('accuracy', accuracy)
merged = tf.summary.merge_all()

with tf.Session() as sess:
    sess.run(init)
    writer = tf.summary.FileWriter('C:\\Users\\45374\\logs', sess.graph)
    # train for 50 epochs
    for epoch in range(50):
        for batch in range(n_batch):
            # training images and labels
            batch_xs, batch_ys = mnist.train.next_batch(batch_size)
            # sess.run(train_step, feed_dict={x: batch_xs, y: batch_ys})
            summary, _ = sess.run([merged, train_step],
                                  feed_dict={x: batch_xs, y: batch_ys})
        # record the summary of the last batch, using the epoch as the step
        writer.add_summary(summary, epoch)
        acc = sess.run(accuracy,
                       feed_dict={x: mnist.test.images, y: mnist.test.labels})
        print("Iter " + str(epoch) + " Accuracy " + str(acc))