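Editor's recommendation:

This article explains how to implement a simple three-layer neural network and how optimization algorithms such as gradient descent work. We hope it helps with your studies.

This article comes from CSDN and was edited and recommended by Alice of 火龙果软件.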

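In this article we implement a simple three-layer neural network from scratch. Why build one from scratch at all? Even if you plan to use a neural network library such as PyBrain later, implementing a network from scratch at least once is an extremely valuable exercise. It helps you understand how neural networks work, and that understanding is essential for designing effective models.

Note that the example code in this article is not efficient; it is written to be easy to understand.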

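Generating a dataset

First we generate a dataset to work with. Fortunately, scikit-learn provides some useful dataset generators, so we do not need to write that code ourselves; we simply use the make_moons function.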

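    import numpy as np
    import matplotlib.pyplot as plt
    import sklearn.datasets
    import sklearn.linear_model

    # Generate the dataset and plot it
    np.random.seed(0)
    X, y = sklearn.datasets.make_moons(200, noise=0.20)
    plt.scatter(X[:,0], X[:,1], s=40, c=y, cmap=plt.cm.Spectral)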

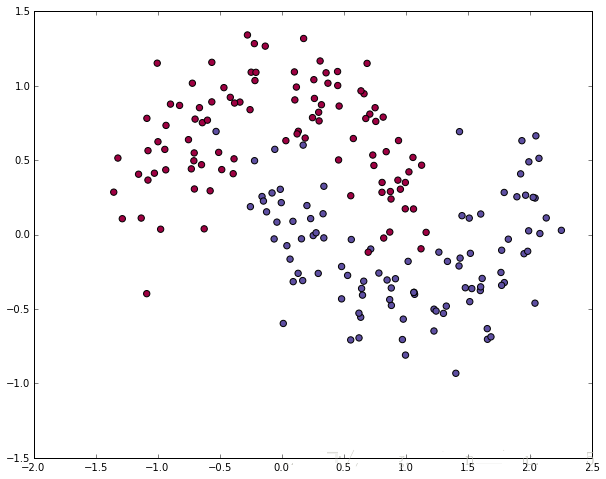

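A moon-shaped dataset with two classes

The dataset we generated has two classes, plotted as red and blue points. You can think of the blue points as male patients and the red points as female patients, with the x-axis and y-axis being medical measurements.

Our goal is to train a machine learning classifier that predicts the correct class (male or female) given the x and y coordinates. Note that the data is not linearly separable: we cannot draw a straight line that separates the two classes. This means a linear classifier such as logistic regression cannot fit the data unless you hand-engineer nonlinear features (such as polynomials) that happen to work well for the given dataset.

In fact, this is one of the major advantages of neural networks: you do not need to worry about feature engineering, because the hidden layer of a neural network learns features for you.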

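Logistic regression

To demonstrate this, let's train a logistic regression classifier. Its input is the x and y coordinates, and its output is the predicted class (0 or 1). For convenience, we use the LogisticRegressionCV class from scikit-learn.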

    # Train the logistic regression classifier
    clf = sklearn.linear_model.LogisticRegressionCV()
    clf.fit(X, y)

    # Plot the decision boundary
    # (plot_decision_boundary is a plotting helper that this article does not define)
    plot_decision_boundary(lambda x: clf.predict(x))
    plt.title("Logistic Regression")

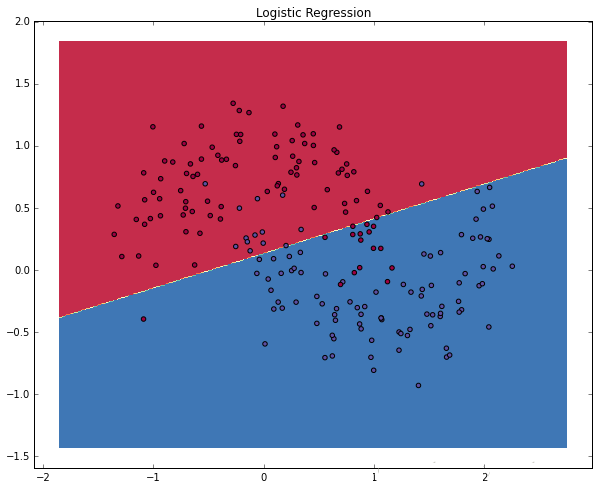

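The figure above shows the decision boundary learned by the logistic regression classifier. It separates the data as well as it can with a straight line, but it cannot capture the moon shape of the data.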
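The `plot_decision_boundary` helper called above is not defined anywhere in this article. A minimal sketch of such a helper might look like the following. The grid step `h`, the padding `pad`, the split into a separate `decision_grid` function, and the explicit `X` and `y` parameters are all assumptions made for illustration; the article's calls pass only the prediction function and presumably rely on the global `X` and `y`.

```python
import numpy as np

def decision_grid(pred_func, X, h=0.01, pad=0.5):
    """Evaluate pred_func on a regular grid covering the data in X."""
    x_min, x_max = X[:, 0].min() - pad, X[:, 0].max() + pad
    y_min, y_max = X[:, 1].min() - pad, X[:, 1].max() + pad
    xx, yy = np.meshgrid(np.arange(x_min, x_max, h),
                         np.arange(y_min, y_max, h))
    # Predict a class for every grid point, then restore the grid shape
    Z = pred_func(np.c_[xx.ravel(), yy.ravel()]).reshape(xx.shape)
    return xx, yy, Z

def plot_decision_boundary(pred_func, X, y, h=0.01):
    """Shade the predicted regions and overlay the training points."""
    import matplotlib.pyplot as plt  # imported lazily; only needed for plotting
    xx, yy, Z = decision_grid(pred_func, X, h)
    plt.contourf(xx, yy, Z, cmap=plt.cm.Spectral)
    plt.scatter(X[:, 0], X[:, 1], c=y, cmap=plt.cm.Spectral)
```

To keep the one-argument call style used in this article, one option is to bind the data first, for example with `functools.partial(plot_decision_boundary, X=X, y=y)`.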

Training a neural network

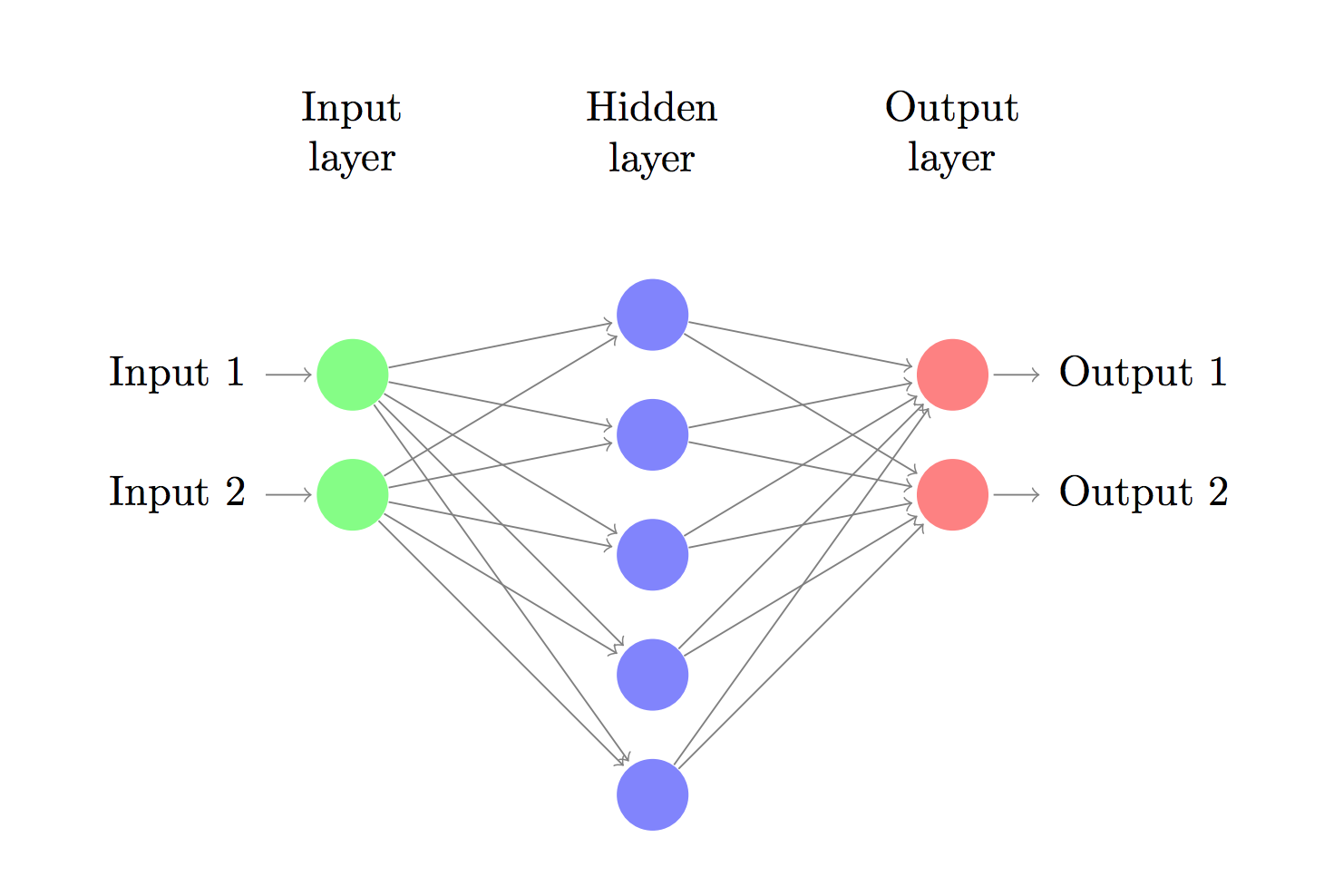

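Now let's build a 3-layer neural network with one input layer, one hidden layer, and one output layer. The number of nodes in the input layer is determined by the dimensionality of our data, which is 2. Similarly, the number of nodes in the output layer is determined by the number of classes, which is also 2. (Because we have only two classes, we could get away with a single output node that predicts 0 or 1, but two output nodes make it easier to extend the network to more classes later.) The input to the network is the x and y coordinates, and its output is two probabilities: one for class 0 (female patient) and one for class 1 (male patient). The network diagram in this section shows this layout.

We can choose the dimensionality (the number of nodes) of the hidden layer. The more nodes the hidden layer has, the more complex the functions we can fit. But higher dimensionality comes at a cost. First, more computation is needed to make predictions and to learn the network parameters. Second, a larger number of parameters (translator's note: the number of parameters in the hidden layer is determined by the number of nodes in that layer and in the previous layer) makes the network more prone to overfitting.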
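To make the cost of a larger hidden layer concrete, the sketch below counts the trainable parameters of a network with one hidden layer. The function name `count_parameters` is hypothetical, and the count assumes a fully connected layout with one weight matrix and one bias vector per layer, as in the code later in this article.

```python
def count_parameters(input_dim, hidden_dim, output_dim):
    """Number of weights and biases in a fully connected net with one hidden layer."""
    hidden_params = input_dim * hidden_dim + hidden_dim    # W1 and b1
    output_params = hidden_dim * output_dim + output_dim   # W2 and b2
    return hidden_params + output_params

for hidden_dim in (1, 3, 50):
    print(hidden_dim, count_parameters(2, hidden_dim, 2))
```

With 2 inputs and 2 outputs, the count grows linearly with the hidden layer size: each extra hidden node adds 5 parameters.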

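How do we choose the size of the hidden layer? There are some general guidelines and recommendations, but the right size usually depends on your specific problem; choosing it is more of an art than a science. We will explore the number of hidden nodes later and see how it affects the output of the network.

We also need to pick an activation function for the hidden layer. The activation function transforms the inputs of the layer into its outputs, and a nonlinear activation function is what allows us to fit nonlinear data. Common choices are tanh, the sigmoid function, and ReLUs. We use tanh here because it performs well in many scenarios. A nice property of these functions is that their derivatives can be computed from the function value itself. For example, the derivative of $\tanh(x)$ is $1-\tanh^2(x)$. This is useful because we only need to compute $\tanh(x)$ once and can reuse its value later when computing the derivative.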
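The derivative identity for tanh can be checked numerically. The sketch below compares $1-\tanh^2(x)$, computed from a stored tanh value, against a central finite difference; the sample points and the step size `eps` are arbitrary choices for illustration.

```python
import numpy as np

x = np.linspace(-3.0, 3.0, 13)
a = np.tanh(x)                      # computed once in the forward pass
analytic = 1.0 - a ** 2             # derivative reused from the stored value

# Central finite difference as an independent check
eps = 1e-6
numeric = (np.tanh(x + eps) - np.tanh(x - eps)) / (2 * eps)

max_error = np.max(np.abs(analytic - numeric))
print(max_error)
```

The two results agree up to the rounding error of the finite difference, which is why the training code can compute the derivative as `1 - a1**2` without calling tanh again.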

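Because we want the network to output probabilities, we use softmax as the activation function of the output layer; it simply converts raw scores into probabilities. If you are familiar with the logistic function, you can think of softmax as its generalization to multiple classes.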
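As a sketch of how softmax turns raw scores into probabilities, consider the function below. The code in this article exponentiates the scores directly; this version additionally subtracts the row maximum first, a common trick (an assumption here, not something the article does) that leaves the result unchanged but avoids overflow for large scores.

```python
import numpy as np

def softmax(scores):
    """Row-wise softmax for a 2-D array of raw scores."""
    shifted = scores - np.max(scores, axis=1, keepdims=True)  # stability shift
    exp_scores = np.exp(shifted)
    return exp_scores / np.sum(exp_scores, axis=1, keepdims=True)

probs = softmax(np.array([[1.0, 2.0], [1000.0, 1000.0]]))
print(probs)
```

Each row sums to 1. With two classes, the probability of the second class equals the logistic function applied to the difference of the two scores, which is the sense in which softmax generalizes the logistic function.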

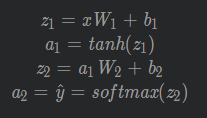

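How the network makes predictions

Our network makes predictions using forward propagation, which is just a sequence of matrix multiplications combined with the activation functions described above. If x is the two-dimensional input to the network, we compute the prediction $\hat{y}$ (also two-dimensional) in four steps: an affine map into the hidden layer, the tanh activation, an affine map into the output layer, and softmax.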
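Written out as formulas, matching the forward pass in the code later in this article, these steps are as follows. The symbols $z_i$ and $a_i$ for the input and output of layer $i$ are chosen to mirror the variable names `z1`, `a1`, and `z2` in that code.

$$
\begin{aligned}
z_1 &= x W_1 + b_1 \\
a_1 &= \tanh(z_1) \\
z_2 &= a_1 W_2 + b_2 \\
a_2 &= \hat{y} = \mathrm{softmax}(z_2)
\end{aligned}
$$

For a hidden layer with $h$ nodes, the shapes used in the code are $W_1 \in \mathbb{R}^{2 \times h}$, $b_1 \in \mathbb{R}^{1 \times h}$, $W_2 \in \mathbb{R}^{h \times 2}$, and $b_2 \in \mathbb{R}^{1 \times 2}$.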

Learning the parameters

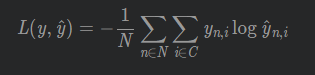

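Learning the parameters of the network means finding the parameters ($W_1, b_1, W_2, b_2$) that minimize the error on the training data. But how do we define the error? We call the function that measures the error the loss function. For softmax output, a common choice is the categorical cross-entropy loss (also known as the negative log likelihood). If we have N training examples and C output classes, the loss of our prediction $\hat{y}$ with respect to the true labels $y$ is the average, over the training examples, of the negative log of the probability that the model assigns to the correct class.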
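In symbols, assuming $y_{n,i}$ is 1 when example $n$ belongs to class $i$ and 0 otherwise (a one-hot encoding of the labels), this is the standard categorical cross-entropy:

$$
L(y, \hat{y}) = -\frac{1}{N} \sum_{n=1}^{N} \sum_{i=1}^{C} y_{n,i} \log \hat{y}_{n,i}
$$

Because only one $y_{n,i}$ per example is nonzero, the inner sum picks out the log probability of the correct class, which is what the `calculate_loss` function later in this article computes before it adds the regularization term.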

The formula looks complicated, but all it does is sum over the training examples and add to the loss whenever we predict the wrong class. The further apart $y$ (the correct labels) and $\hat{y}$ (our predictions) are, the greater the loss. By finding parameters that minimize the loss, we maximize the likelihood of the training data.
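A small worked example may help. The probabilities and labels below are made up for illustration; the indexing trick is the same one the article's loss code uses to select the probability of the correct class.

```python
import numpy as np

# Hypothetical predicted probabilities for 3 examples and 2 classes
probs = np.array([[0.9, 0.1],
                  [0.6, 0.4],
                  [0.2, 0.8]])
y = np.array([0, 1, 1])  # true class of each example

# Pick the probability assigned to the true class of each example
correct_probs = probs[np.arange(len(y)), y]     # 0.9, 0.4, 0.8
per_example_loss = -np.log(correct_probs)
loss = per_example_loss.mean()
print(per_example_loss, loss)
```

The second example, where the model gives the true class only 0.4, contributes the largest term, while the confident correct prediction of 0.9 contributes the smallest.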

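We can use gradient descent to find the minimum of the loss. I will implement the most vanilla version of gradient descent, also called batch gradient descent, with a fixed learning rate. Variations such as stochastic gradient descent (SGD) and minibatch gradient descent typically perform better in practice, so if you are serious you will want to use one of them, ideally together with a learning rate that decays over time.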

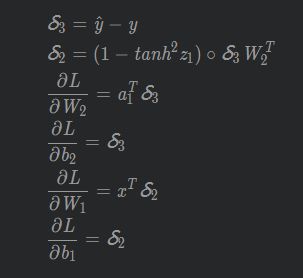

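As input, gradient descent needs the gradients (the vector of derivatives) of the loss function with respect to our parameters. We compute these gradients with the well-known backpropagation algorithm, which calculates them efficiently by starting from the output. I will not go into the details of how backpropagation works, but there are many excellent explanations online.

Applying backpropagation yields the gradient formulas used in the implementation (trust me on this).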
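Written out to match the code in `build_model`, and assuming $y$ is one-hot encoded and $\circ$ denotes the elementwise product, the backpropagation formulas are:

$$
\begin{aligned}
\delta_3 &= \hat{y} - y \\
\delta_2 &= (1 - \tanh^2 z_1) \circ \delta_3 W_2^{T} \\
\frac{\partial L}{\partial W_2} &= a_1^{T} \delta_3 \\
\frac{\partial L}{\partial b_2} &= \delta_3 \\
\frac{\partial L}{\partial W_1} &= x^{T} \delta_2 \\
\frac{\partial L}{\partial b_1} &= \delta_2
\end{aligned}
$$

When a whole batch is processed at once, the bias gradients are summed over the examples, as the code does with `np.sum(..., axis=0)`. The code also leaves out the $1/N$ factor of the averaged loss, which only rescales the effective learning rate.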

Implementation

Now we are ready to implement the network. We start by defining some variables and parameters for gradient descent:

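    num_examples = len(X)  # size of the training set
    nn_input_dim = 2  # dimensionality of the input layer
    nn_output_dim = 2  # dimensionality of the output layer

    # Gradient descent parameters (picked by hand)
    epsilon = 0.01  # learning rate for gradient descent
    reg_lambda = 0.01  # regularization strength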

First, let's implement the loss function defined above. We use it to evaluate how well our model is doing.

    # Helper function to evaluate the total loss on the dataset
    def calculate_loss(model):
        W1, b1, W2, b2 = model['W1'], model['b1'], model['W2'], model['b2']
        # Forward propagation to calculate our predictions
        z1 = X.dot(W1) + b1
        a1 = np.tanh(z1)
        z2 = a1.dot(W2) + b2
        exp_scores = np.exp(z2)
        probs = exp_scores / np.sum(exp_scores, axis=1, keepdims=True)
        # Calculate the loss
        correct_logprobs = -np.log(probs[range(num_examples), y])
        data_loss = np.sum(correct_logprobs)
        # Add the regularization term to the loss (optional)
        data_loss += reg_lambda/2 * (np.sum(np.square(W1)) + np.sum(np.square(W2)))
        return 1./num_examples * data_loss

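We also implement a helper function to compute the output of the network. It performs forward propagation as defined above and returns the class with the highest probability.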

    # Predict an output (0 or 1)
    def predict(model, x):
        W1, b1, W2, b2 = model['W1'], model['b1'], model['W2'], model['b2']
        # Forward propagation
        z1 = x.dot(W1) + b1
        a1 = np.tanh(z1)
        z2 = a1.dot(W2) + b2
        exp_scores = np.exp(z2)
        probs = exp_scores / np.sum(exp_scores, axis=1, keepdims=True)
        return np.argmax(probs, axis=1)

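Finally, here is the function that trains the network. It implements batch gradient descent using the backpropagation derivatives mentioned above.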

    # This function learns the parameters of the neural network and returns the model.
    # - nn_hdim: number of nodes in the hidden layer
    # - num_passes: number of passes through the training data for gradient descent
    # - print_loss: if True, print the loss every 1000 iterations
    def build_model(nn_hdim, num_passes=20000, print_loss=False):
        # Initialize the parameters to random values. We need to learn these.
        np.random.seed(0)
        W1 = np.random.randn(nn_input_dim, nn_hdim) / np.sqrt(nn_input_dim)
        b1 = np.zeros((1, nn_hdim))
        W2 = np.random.randn(nn_hdim, nn_output_dim) / np.sqrt(nn_hdim)
        b2 = np.zeros((1, nn_output_dim))

        # This is what we return at the end
        model = {}

        # Gradient descent, one full pass over the data per iteration
        for i in range(0, num_passes):

            # Forward propagation
            z1 = X.dot(W1) + b1
            a1 = np.tanh(z1)
            z2 = a1.dot(W2) + b2
            exp_scores = np.exp(z2)
            probs = exp_scores / np.sum(exp_scores, axis=1, keepdims=True)

            # Backpropagation
            delta3 = probs
            delta3[range(num_examples), y] -= 1
            dW2 = (a1.T).dot(delta3)
            db2 = np.sum(delta3, axis=0, keepdims=True)
            delta2 = delta3.dot(W2.T) * (1 - np.power(a1, 2))
            dW1 = np.dot(X.T, delta2)
            db1 = np.sum(delta2, axis=0)

            # Add regularization terms (b1 and b2 have no regularization term)
            dW2 += reg_lambda * W2
            dW1 += reg_lambda * W1

            # Gradient descent parameter update
            W1 += -epsilon * dW1
            b1 += -epsilon * db1
            W2 += -epsilon * dW2
            b2 += -epsilon * db2

            # Assign the new parameters to the model
            model = {'W1': W1, 'b1': b1, 'W2': W2, 'b2': b2}

            # Optionally print the loss.
            # This is expensive because it uses the whole dataset, so we do not do it too often.
            if print_loss and i % 1000 == 0:
                print("Loss after iteration %i: %f" % (i, calculate_loss(model)))

        return model
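One way to gain confidence in backpropagation code like this is a numerical gradient check. The sketch below is not part of the original article: the function names `loss_and_grads` and `max_gradient_error` are hypothetical, the data is random, and the loss is summed rather than averaged so that it matches the gradients `build_model` computes. It compares the backpropagation gradients against central finite differences.

```python
import numpy as np

def loss_and_grads(params, X, y, reg_lambda=0.01):
    """Summed loss (data + L2 term) and its backprop gradients, mirroring build_model."""
    W1, b1, W2, b2 = params
    n = len(X)
    # Forward pass
    a1 = np.tanh(X.dot(W1) + b1)
    z2 = a1.dot(W2) + b2
    exp_scores = np.exp(z2)
    probs = exp_scores / np.sum(exp_scores, axis=1, keepdims=True)
    loss = -np.sum(np.log(probs[np.arange(n), y]))
    loss += reg_lambda / 2 * (np.sum(W1 ** 2) + np.sum(W2 ** 2))
    # Backward pass (same formulas as in build_model)
    delta3 = probs.copy()
    delta3[np.arange(n), y] -= 1
    dW2 = a1.T.dot(delta3) + reg_lambda * W2
    db2 = np.sum(delta3, axis=0, keepdims=True)
    delta2 = delta3.dot(W2.T) * (1 - a1 ** 2)
    dW1 = X.T.dot(delta2) + reg_lambda * W1
    db1 = np.sum(delta2, axis=0, keepdims=True)
    return loss, [dW1, db1, dW2, db2]

def max_gradient_error(params, X, y, eps=1e-5):
    """Largest gap between backprop and central finite differences."""
    _, grads = loss_and_grads(params, X, y)
    worst = 0.0
    for p, g in zip(params, grads):
        for idx in np.ndindex(p.shape):
            old = p[idx]
            p[idx] = old + eps
            loss_plus, _ = loss_and_grads(params, X, y)
            p[idx] = old - eps
            loss_minus, _ = loss_and_grads(params, X, y)
            p[idx] = old  # restore the parameter
            numeric = (loss_plus - loss_minus) / (2 * eps)
            worst = max(worst, abs(numeric - g[idx]))
    return worst

rng = np.random.RandomState(0)
X_demo = rng.randn(5, 2)             # 5 made-up examples, 2 features
y_demo = np.array([0, 1, 1, 0, 1])   # made-up labels
params = [rng.randn(2, 3), np.zeros((1, 3)), rng.randn(3, 2), np.zeros((1, 2))]
error = max_gradient_error(params, X_demo, y_demo)
print(error)
```

A very small error suggests the derivative formulas and their implementation agree. A check like this is also useful when changing the activation function or adding a layer, since both require new backpropagation code.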

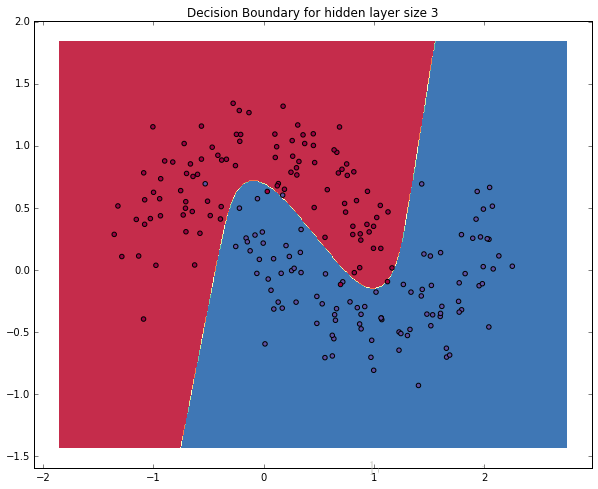

A network with a hidden layer of size 3

Let's see what happens when we train a network with a hidden layer of size 3.

    # Build a model with a 3-dimensional hidden layer
    model = build_model(3, print_loss=True)

    # Plot the decision boundary
    plot_decision_boundary(lambda x: predict(model, x))
    plt.title("Decision Boundary for hidden layer size 3")

Yes! This looks pretty good. The decision boundary found by our neural network successfully separates the classes.

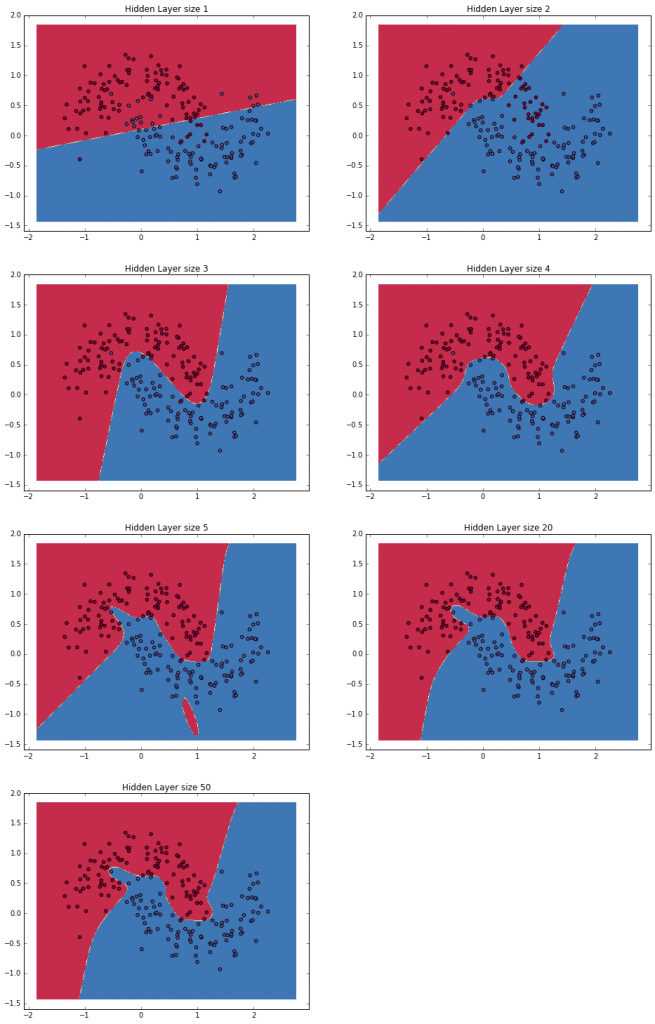

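Varying the hidden layer size

In the example above we picked a hidden layer with three nodes. Now let's see how varying the hidden layer size affects the result.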

    plt.figure(figsize=(16, 32))
    hidden_layer_dimensions = [1, 2, 3, 4, 5, 20, 50]
    for i, nn_hdim in enumerate(hidden_layer_dimensions):
        plt.subplot(5, 2, i+1)
        plt.title('Hidden Layer size %d' % nn_hdim)
        model = build_model(nn_hdim)
        plot_decision_boundary(lambda x: predict(model, x))
    plt.show()

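We can see that a low-dimensional hidden layer captures the general trend of the data well, while higher-dimensional hidden layers are prone to overfitting. As expected, they "memorize" the data rather than fitting its general shape. If we evaluated the model on a separate test set (and you should), the model with the smaller hidden layer would likely perform better because it generalizes better. We could counteract overfitting with stronger regularization, but picking the right size for the hidden layer is a much more "economical" solution.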
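The article does not include code for evaluating on a separate test set. A minimal sketch of the bookkeeping involved might look like this; the helper names `train_test_split_indices` and `accuracy`, the 25% test fraction, and the seed are assumptions made for illustration.

```python
import numpy as np

def train_test_split_indices(n, test_fraction=0.25, seed=0):
    """Shuffle the indices 0..n-1 and split them into train and test parts."""
    rng = np.random.RandomState(seed)
    order = rng.permutation(n)
    n_test = int(round(n * test_fraction))
    return order[n_test:], order[:n_test]

def accuracy(predicted, actual):
    """Fraction of examples whose predicted class matches the true class."""
    return float(np.mean(np.asarray(predicted) == np.asarray(actual)))

train_idx, test_idx = train_test_split_indices(200)
print(len(train_idx), len(test_idx))
print(accuracy([0, 1, 1, 0], [0, 1, 0, 0]))
```

With the article's code, one would train on `X[train_idx]` and `y[train_idx]`, then compare `accuracy(predict(model, X[test_idx]), y[test_idx])` across hidden layer sizes. Note that `build_model` as written reads the global `X`, `y`, and `num_examples`, so those would have to refer to the training subset first.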

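Exercises

Here are some things you can try to become more familiar with the code:

1. Train the network with minibatch gradient descent instead of batch gradient descent. Minibatch gradient descent typically performs better in practice.

2. We used a fixed learning rate $\epsilon$ for gradient descent. Implement an annealing schedule for the learning rate.

3. We used tanh as the activation function of the hidden layer. Experiment with other activation functions (some are mentioned above). Note that changing the activation function also means changing the backpropagation derivative.

4. Extend the network from two to three output classes. You will need to generate a suitable dataset for this.

5. Extend the network to four layers and experiment with the layer sizes. Adding another hidden layer means you need to adjust both the forward propagation code and the backpropagation code.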
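As a possible starting point for the first two exercises, the sketch below shows one way to iterate over minibatches and to decay the learning rate. It is only scaffolding under stated assumptions: the helper names are hypothetical, the batch size of 32 is arbitrary, and inverse-time decay is just one of several possible schedules. The actual forward and backward pass is left out.

```python
import numpy as np

def minibatches(n, batch_size, rng):
    """Yield index arrays that together cover 0..n-1 once, in random order."""
    order = rng.permutation(n)
    for start in range(0, n, batch_size):
        yield order[start:start + batch_size]

def decayed_learning_rate(initial_rate, epoch, decay=0.01):
    """Inverse-time decay: the rate shrinks as the epoch count grows."""
    return initial_rate / (1.0 + decay * epoch)

rng = np.random.RandomState(0)
for epoch in range(3):
    epsilon = decayed_learning_rate(0.01, epoch)
    for batch in minibatches(200, 32, rng):
        # A training step would run forward and backward propagation
        # on X[batch] and y[batch], then update with step size epsilon.
        pass
```

Compared with the batch version in `build_model`, each parameter update then uses only the examples in `batch`, so the number of examples used to index the labels in backpropagation has to be the batch length rather than `num_examples`.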