| 1.常见算法套路

电商行业中,对于用户的商品推荐一直是一个非常热门而且重要的话题,有很多比较成熟的方法,但是也各有利弊,大致如下:

基于商品相似度:比如食物A和食物B,对于它们价格、味道、保质期、品牌等维度,可以计算它们的相似程度,可以想象,我买了包子,很有可能顺路带一盒水饺回家。

优点:冷启动,其实只要你有商品的数据,在业务初期用户数据不多的情况下,也可以做推荐

缺点:预处理复杂,任何一件商品,维度可以说至少可以上百,如何选取合适的维度进行计算,设计到工程经验,这些也是花钱买不到的

典型:亚马逊早期的推荐系统

基于关联规则:最常见的就是通过用户购买的习惯,经典的就是“啤酒尿布”的案例,但是实际运营中这种方法运用的也是最少的,首先要做关联规则,数据量一定要充足,否则置信度太低,当数据量上升了,我们有更多优秀的方法,可以说没有什么亮点,业内的算法有apriori、ftgrow之类的

优点:简单易操作,上手速度快,部署起来也非常方便

缺点:需要有较多的数据,精度效果一般

典型:早期运营商的套餐推荐

基于物品的协同推荐:假设物品A被小张、小明、小董买过,物品B被小红、小丽、小晨买过,物品C被小张、小明、小李买过;直观的看来,物品A和物品C的购买人群相似度更高(相对于物品B),现在我们可以对小董推荐物品C,小李推荐物品A,这个推荐算法比较成熟,运用的公司也比较多

优点:相对精准,结果可解释性强,副产物可以得出商品热门排序

缺点:计算复杂,数据存储瓶颈,冷门物品推荐效果差

典型:早期一号店商品推荐

基于用户的协同推荐:假设用户A买过可乐、雪碧、火锅底料,用户B买过卫生纸、衣服、鞋,用户C买过火锅、果汁、七喜;直观上来看,用户A和用户C相似度更高(相对于用户B),现在我们可以对用户A推荐用户C买过的其他东西,对用户C推荐用户A买过买过的其他东西,优缺点与基于物品的协同推荐类似,不重复了。

基于模型的推荐:svd+、特征值分解等等,将用户的购买行为的矩阵拆分成两组权重矩阵的乘积,一组矩阵代表用户的行为特征,一组矩阵代表商品的重要性,在用户推荐过程中,计算该用户在历史训练矩阵下的各商品的可能性进行推荐。

优点:精准,对于冷门的商品也有很不错的推荐效果

缺点:计算量非常大,矩阵拆分的效能及能力瓶颈一直是受约束的

典型:惠普的电脑推荐

基于时序的推荐:这个比较特别,在电商运用的少,在Twitter,Facebook,豆瓣运用的比较多,就是只有赞同和反对的情况下,怎么进行评论排序,详细的可以参见我之前写的一篇文章:应用:推荐系统-威尔逊区间法

基于深度学习的推荐:现在比较火的CNN(卷积神经网络)、RNN(循环神经网络)、DNN(深度神经网络)都有运用在推荐上面的例子,但是都还是试验阶段,但是有个基于word2vec的方法已经相对比较成熟,也是我们今天介绍的重点。

优点:推荐效果非常精准,所需要的基础存储资源较少

缺点:工程运用不成熟,模型训练调参技巧难

典型:苏宁易购的会员商品推荐

2.item2vec的工程引入

现在苏宁的商品有约4亿个,商品的类目有10000多组,大的品类也有近40个,如果通过传统的协同推荐,实时计算的话,服务器成本,计算能力都是非常大的局限,之前已经有过几篇应用介绍:基于推荐的交叉销售、基于用户行为的推荐预估。会员研发部门因为不是主要推荐的应用部门,所以在选择上,我们期望的是更加高效高速且相对准确的简约版模型方式,所以我们这边基于了word2vec的原始算法,仿造了itemNvec的方式。

首先,让我们对itemNvec进行理论拆分:

part one:n-gram

目标商品的前后商品对目标商品的影响程度

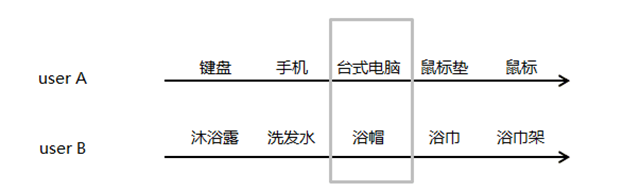

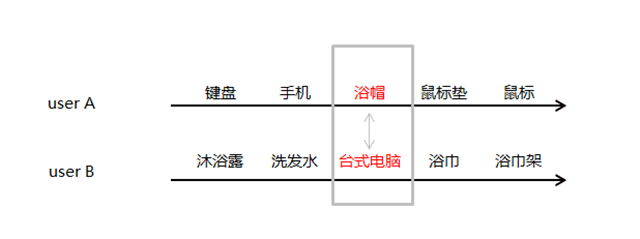

这是两个用户userA,userB在易购上面的消费time line,灰色方框内为我们观察对象,试问一下,如果换一下灰色方框内的userA、userB的购买物品,直观的可能性有多大?

直观的体验告诉我们,这是不可能出现,或者绝对不是常出现的,所以,我们就有一个初始的假设,对于某些用户在特定的类目下,用户的消费行为是连续影响的,换句话说,就是我买了什么东西是依赖我之前买过什么东西。如何通过算法语言解释上面说的这件事呢?

大家回想一下,naive bayes做垃圾邮件分类的时候是怎么做的?

假设“我公司可以提供发票、军火出售、航母维修”这句话是不是垃圾邮件?

P1(“垃圾邮件”|“我公司可以提供发票、军火出售、航母维修”)

=p(“垃圾邮件”)p(“我公司可以提供发票、军火出售、航母维修”/“垃圾邮件”)/p(“我公司可以提供发票、军火出售、航母维修”)

=p(“垃圾邮件”)p(“发票”,“军火”,“航母”/“垃圾邮件”)/p(“发票”,“军火”,“航母”)

同理

P2(“正常邮件”|“我公司可以提供发票、军火出售、航母维修”)

=p(“正常邮件”)p(“发票”,“军火”,“航母”/“正常邮件”)/p(“发票”,“军火”,“航母”)

我们只需要比较p1和p2的大小即可,在条件独立的情况下可以直接写成:

P1(“垃圾邮件”|“我公司可以提供发票、军火出售、航母维修”)

=p(“垃圾邮件”)p(“发票”/“垃圾邮件”)p(“军火”/“垃圾邮件”)p(“航母”/“垃圾邮件”)

P2(“正常邮件”|“我公司可以提供发票、军火出售、航母维修”)

=p(“正常邮件”)p(“发票”/“正常邮件”)p(“军火”/“正常邮件”)p(“航母”/“正常邮件”)

但是,我们看到,无论“我公司可以提供发票、军火出售、航母维修”词语的顺序怎么变化,不影响它最后的结果判定,但是我们这边的需求里面前面买的东西对后项的影响会更大。

冰箱=>洗衣机=>衣柜=>电视=>汽水,这样的下单流程合理

冰箱=>洗衣机=>汽水=>电视=>衣柜,这样的下单流程相对来讲可能性会更低

但是对于naive bayes,它们是一致的。

所以,我们这边考虑顺序,还是上面那个垃圾邮件的问题。

P1(“垃圾邮件”|“我公司可以提供发票、军火出售、航母维修”)

=p(“垃圾邮件”)p(“发票”)p(“军火”/“发票”)p(“军火”/“航母”)

P1(“正常邮件”|“我公司可以提供发票、军火出售、航母维修”)

=p(“正常邮件”)p(“发票”)p(“军火”/“发票”)p(“军火”/“航母”)

这边我们每个词只依赖前一个词,理论上讲依赖1-3个词通常都是可接受的。以上的考虑顺序的bayes就是基于著名的马尔科夫假设(Markov

Assumption):下一个词的出现仅依赖于它前面的一个或几个词下的联合概率问题,相关详细的理论数学公式就不给出了,这边这涉及一个思想。

part two:Huffman Coding

更大的数据存储形式

我们常用的user到item的映射是通过one hot encoding的形式去实现的,这有一个非常大的弊端就是数据存储系数且维度灾难可能性极大。

回到最初的那组数据:现在苏宁的商品有约4亿个,商品的类目有10000多组,大的品类也有近40个,同时现在会员数目达到3亿,要是需要建造一个用户商品对应的购买关系矩阵做基于用户的协同推荐的话,我们需要做一个4亿X6亿的1/0矩阵,这个是几乎不可能的,Huffman采取了一个近似二叉树的形式进行存储:

我们以易购商品购买量为例,讲解一下如何以二叉树的形式替换one hot encoding存储方式:

假设,818苏宁大促期间,经过统计,有冰箱=>洗衣机=>烘干机=>电视=>衣柜=>钻石的用户下单链条(及购买物品顺序如上),其中冰箱总售出15万台,洗衣机总售出8万台,烘干机总售出6万台,电视总售出5万台,衣柜总售出3万台,钻石总售出1万颗

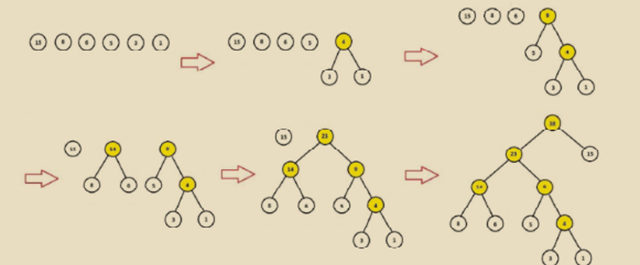

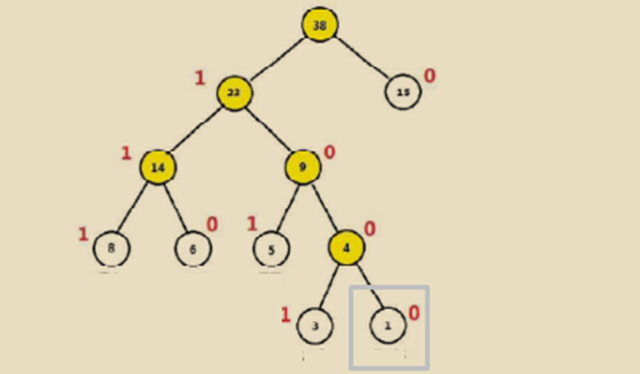

Huffman树构造过程

1.给定{15,8,6,5,3,1}为二叉树的节点,每个树仅有一个节点,那就存在6颗单独的树

2.选择节点权重值最小的两颗树进行合并也就是{3}、{1},合并后计算新权重3+1=4

3.将{3},{1}树从节点列表删除,将3+1=4的新组合树放回原节点列表

4.重新进行2-3,直到只剩一棵树为止

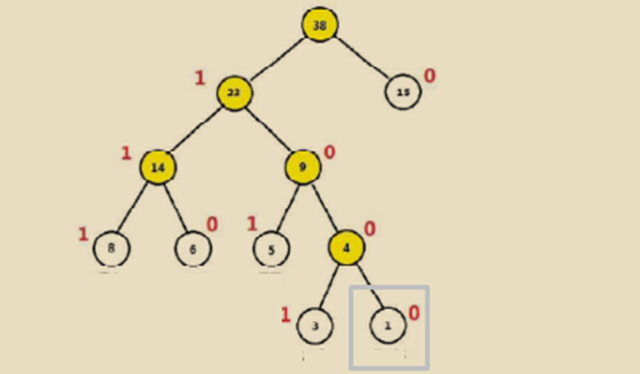

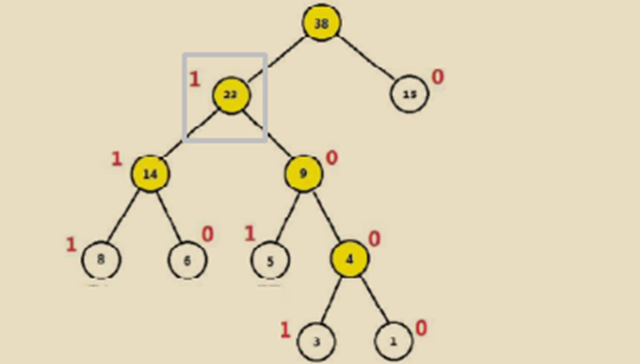

针对每层每次分支过程,我们可以将所有权重大的节点看做是1,权重小的节点看做是0,相反亦可。现在,我们比如需要知道钻石的code,就是1000,也就是灰色方框的位置,洗衣机的code就是111;这样的存储利用了0/1的存储方式,也同时考虑了组合位置的排列长度,节省了数据的存储空间。

part three:node probility

最大化当前数据出现可能的概率密度函数

对于钻石的位置而言,它的Huffman code是1000,那就意味着在每一次二叉选择的时候,它需要一次被分到1,三次被分到0,而且每次分的过程中,只有1/0可以选择,这是不是和logistic

regression里面的0/1分类相似,所以这边我们也直接使用了lr里面的交叉熵来作为loss function。

其实对于很多机器学习的算法而言,都是按照先假定一个模型,再构造一个损失函数,通过数据来训练损失函数求argmin(损失函数)的参数,放回到原模型。

让我们详细的看这个钻石这个例子:

第一步

p(1|No.1层未知参数)=sigmoid(No.1层未知参数)

第二步

p(0|No.2层未知参数)=sigmoid(No.2层未知参数)

同理,第三第四层:

p(0|No.3层未知参数)=sigmoid(No.3层未知参数)

p(0|No.4层未知参数)=sigmoid(No.4层未知参数)

然后求p(1|No.1层未知参数)xp(0|No.2层未知参数)xp(0|No.3层未知参数)xp(0|No.4层未知参数)最大下对应的每层的未知参数即可,求解方式与logistic求解方式近似,未知参数分布偏导,后续采用梯度下降的方式(极大、批量、牛顿按需使用)

part four:approximate nerual network

商品的相似度

刚才在part three里面有个p(1|No.1层未知参数)这个逻辑,这个NO.1层未知参数里面有一个就是商品向量。

举个例子:

存在1000万个用户有过:“啤酒=>西瓜=>剃须刀=>百事可乐”的商品购买顺序

10万个用户有过:“啤酒=>苹果=>剃须刀=>百事可乐”的商品购买顺序,如果按照传统的概率模型比如navie

bayes 或者n-gram来看,P(啤酒=>西瓜=>剃须刀=>百事可乐)>>p(啤酒=>苹果=>剃须刀=>百事可乐),但是实际上这两者的人群应该是同一波人,他们的属性特征一定会是一样的才对。

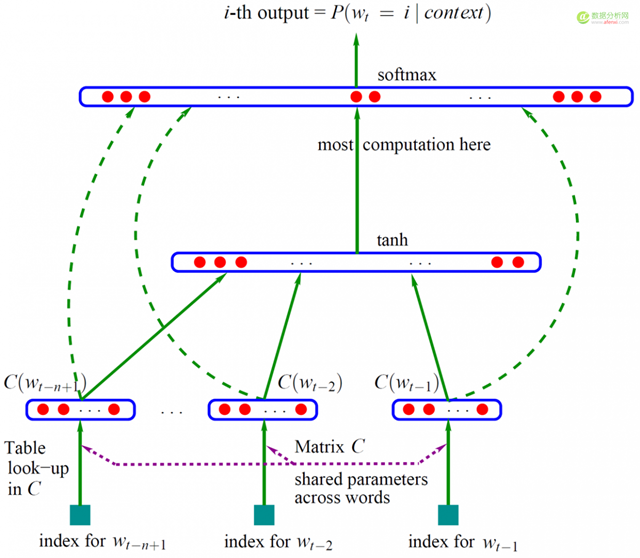

我们这边通过了随机初始化每个商品的特征向量,然后通过part three的概率模型去训练,最后确定了词向量的大小。除此之外,还可以通过神经网络算法去做这样的事情。

Bengio 等人在 2001 年发表在 NIPS 上的文章《A Neural Probabilistic

Language Model》介绍了详细的方法。

我们这边需要知道的就是,对于最小维度商品,我们以商品向量(0.8213,0.8232,0.6613,0.1234,…)的形式替代了0-1点(0,0,0,0,0,1,0,0,0,0…),单个的商品向量无意义,但是成对的商品向量我们就可以比较他们间的余弦相似度,就可以比较类目的相似度,甚至品类的相似度。

3.python代码实现

1.数据读取

# -*- coding:utf-8

-*-

import pandas as pd

import numpy as np

import matplotlib as mt

from gensim.models import word2vec

from sklearn.model_selection import train_test_split

order_data = pd.read_table ('C:/Users/17031877/Desktop/ SuNing/cross_sell_data_tmp1.txt')

dealed_data = order_data.drop ('member_id', axis=1)

dealed_data = pd.DataFrame (dealed_data).fillna(value='')

|

2.简单的数据合并整理

# 数据合并

dealed_data = dealed_data['top10'] + [" "]

+ dealed_data['top9'] + [" "] + dealed_data['top8']

+ [" "] + \

dealed_data['top7'] + [" "] + dealed_data['top6']

+ [" "] + dealed_data['top5'] + ["

"] + dealed_data[

'top4'] + [" "] + dealed_data['top3']

+ [" "] + dealed_data['top2'] + ["

"] + dealed_data['top1']

# 数据分列

dealed_data = [s.encode('utf-8').split() for s

in dealed_data]

# 数据拆分

train_data, test_data = train_test_split(dealed_data,

test_size=0.3, random_state=42) |

3.模型训练

# 原始数据训练

# sg=1,skipgram;sg=0,SBOW

# hs=1:hierarchical softmax,huffmantree

# nagative = 0 非负采样

model = word2vec.Word2Vec (train_data, sg=1, min_count=10,

window=2, hs=1, negative=0)

接下来就是用model来训练得到我们的推荐商品, 这边有三个思路,可以根据具体的业务需求和实际数据量来选择:

3.1 相似商品映射表

# 最后一次浏览商品最相似的商品组top3

x = 1000

result = []

result = pd.DataFrame(result)

for i in range(x):

test_data_split = [s.encode('utf-8').split()

for s in test_data[i]]

k = len(test_data_split)

last_one = test_data_split[k - 1]

last_one_recommended = model.most_similar(last_one,

topn=3)

tmp = last_one_recommended[0] + last_one_recommended[1]

+ last_one_recommended[2]

last_one_recommended = pd.concat([pd.DataFrame(last_one),

pd.DataFrame(np.array(tmp))], axis=0)

last_one_recommended = last_one_recommended.T

result = pd.concat([pd.DataFrame(last_one_recommended),

result], axis=0) |

考虑用户最后一次操作的关注物品x,干掉那些已经被用户购买的商品,剩下的商品表示用户依旧有兴趣但是因为没找到合适的或者便宜的商品,通过商品向量之间的相似度,可以直接计算出,与其高度相似的商品推荐给用户。

3.2 最大可能购买商品

根据历史上用户依旧购买的商品顺序,判断根据当前这个目标用户近期买的商品,接下来他最有可能买什么?

比如历史数据告诉我们,购买了手机+电脑的用户,后一周内最大可能会购买背包,那我们就针对那些近期购买了电脑+手机的用户去推送电脑包的商品给他,刺激他的潜在规律需求。

# 向量库

rbind_data = pd.concat(

[order_data['top1'], order_data['top2'], order_data['top3'],

order_data['top4'], order_data['top5'],

order_data['top6'], order_data['top7'], order_data['top8'],

order_data['top9'], order_data['top10']], axis=0)

x = 50

start = []

output = []

score_final = []

for i in range(x):

score = np.array(-100000000000000)

name = np.array(-100000000000000)

newscore = np.array(-100000000000000)

tmp = test_data[i]

k = len(tmp)

last_one = tmp[k - 2]

tmp = tmp[0:(k - 1)]

for j in range(number):

tmp1 = tmp[:]

target = rbind_data_level[j]

tmp1.append(target)

test_data_split = [tmp1]

newscore = model.score(test_data_split)

if newscore > score:

score = newscore

name = tmp1[len(tmp1) - 1]

else:

pass

start.append(last_one)

output.append(name)

score_final.append(score) |

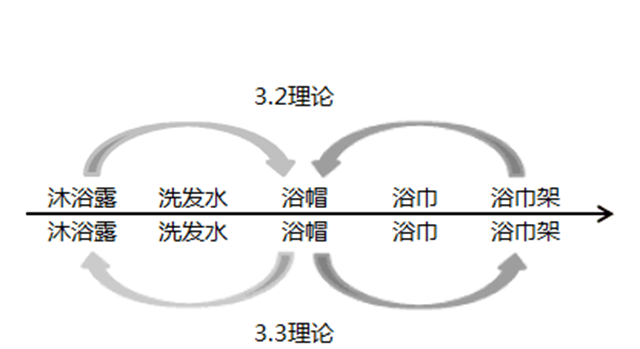

3.3 联想记忆推荐

在3.2中,我们根据了这个用户近期购买行为,从历史已购用户的购买行为数据发现规律,提供推荐的商品。还有一个近似的逻辑,就是通过目标用户最近一次的购买商品进行推测,参考的是历史用户的单次购买附近的数据,详细如下:

这个实现也非常的简单,这边代码我自己也没有写,就不贴了,采用的还是word2vec里面的predict_output_word(context_words_list,

topn=10),Report the probability distribution of the

center word given the context words as input to the

trained model

|