| 编辑推荐: |

|

本文首先介绍了机器学习概述、然后介绍了-特征工程:特征抽取、特征预处理、特征选择、特征降维。

本文来自于csdn,由火龙果软件Linda编辑、推荐。 |

|

1.机器学习概述

1.1 什么是机器学习

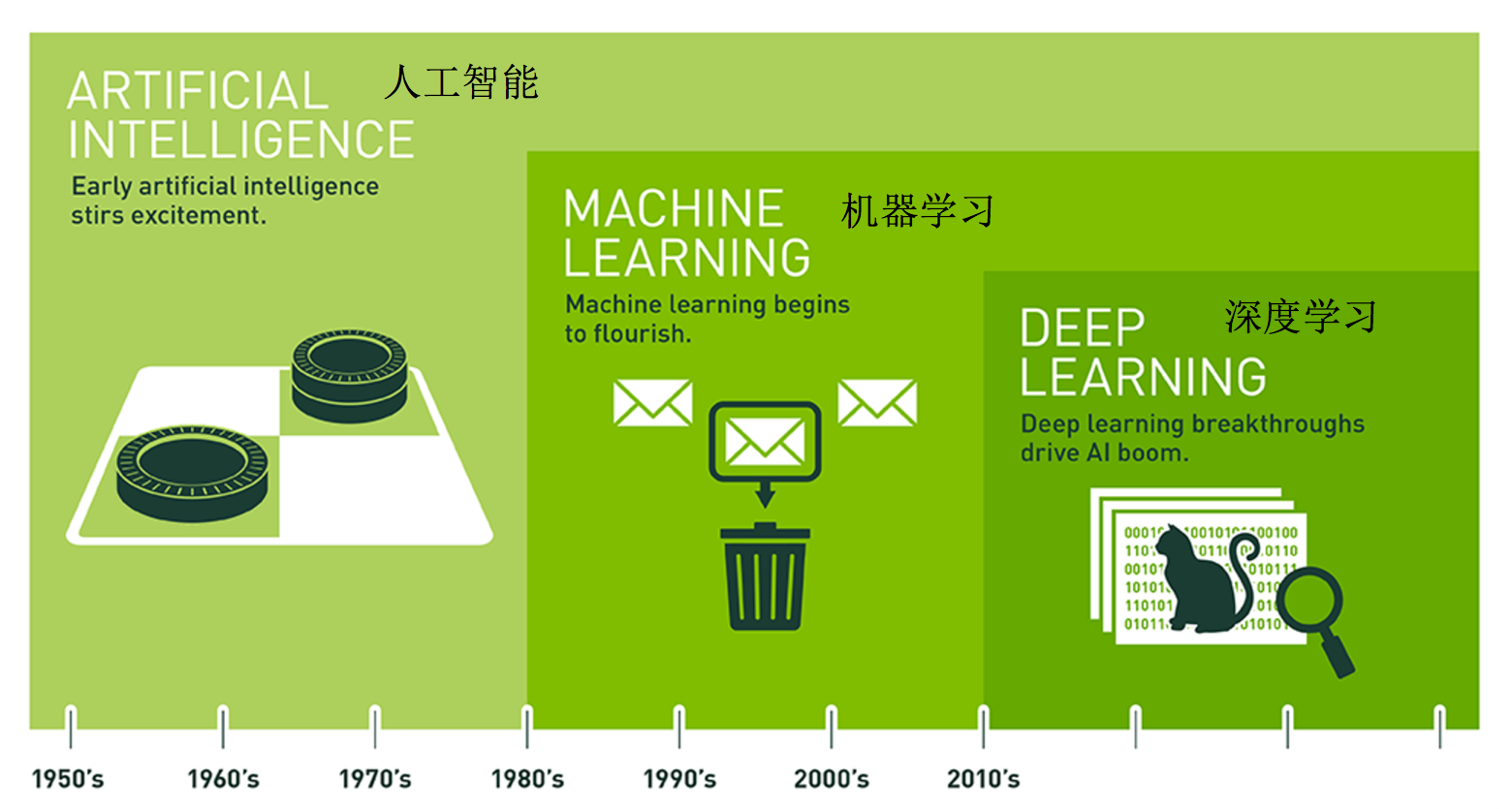

(1)背景介绍

(2)定义

机器学习是从数据中自动分析获得规律(模型),并利用规律对未知数据进行预测.

(3)解释

1.2 为什么要机器学习

(1)解放生产力:

智能客服:不知疲倦24小时小时作业

(2)量化投资:避免更多的交易人员

解决专业问题:

(3)医疗:

帮助医生辅助医疗

(4)提供便利:

阿里ET城市大脑:智能城市

1.3 机器学习应用场景

机器学习的应用场景非常多,可以说渗透到了各个行业领域当中。医疗、航空、教育、物流、电商等等领域的各种场景。

(1)用在挖掘、预测领域:

应用场景:店铺销量预测、量化投资、广告推荐、企业客户分类、SQL语句安全检测分类…

(2)用在图像领域:

应用场景:街道交通标志检测、图片商品识别检测等等

(3)用在自然语言处理领域:

应用场景:文本分类、情感分析、自动聊天、文本检测等等

当前重要的是掌握一些机器学习算法等技巧,从某个业务领域切入解决问题。

1.4 学习框架和资料的介绍

(1)学习框架

2.特征工程

学习目标:

了解特征工程在机器学习当中的重要性

应用sklearn实现特征预处理

应用sklearn实现特征抽取

应用sklearn实现特征选择

应用PCA实现特征的降维

说明机器学习算法监督学习与无监督学习的区别

说明监督学习中的分类、回归特点

说明机器学习算法目标值的两种数据类型

说明机器学习(数据挖掘)的开发流程

2.1 特征工程介绍

学习小目标:

(1)说出机器学习的训练数据集结构组成

(2)了解特征工程在机器学习当中的重要性

(3)知道特征工程的分类

带着问题学:从历史数据当中获得规律?这些历史数据是怎么的格式?

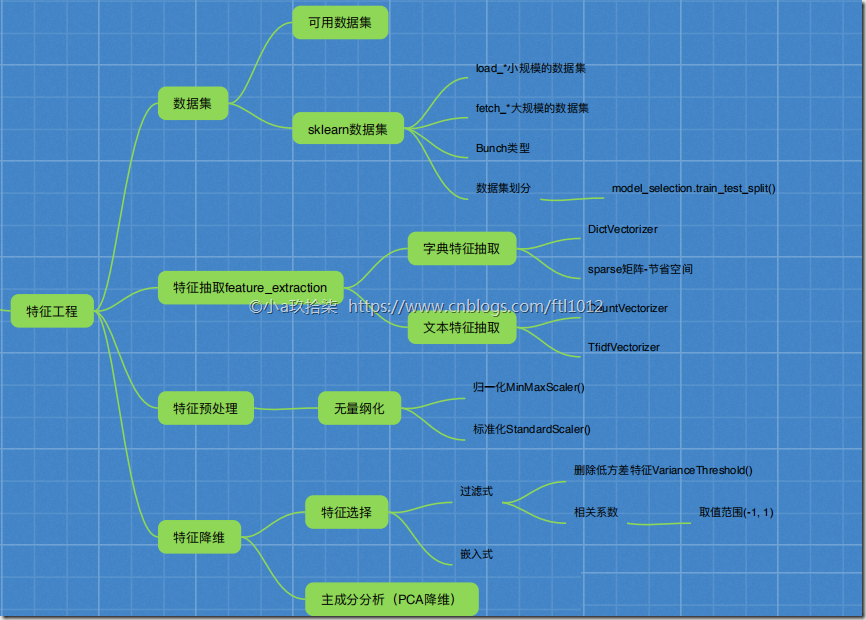

特征工程知识图谱:

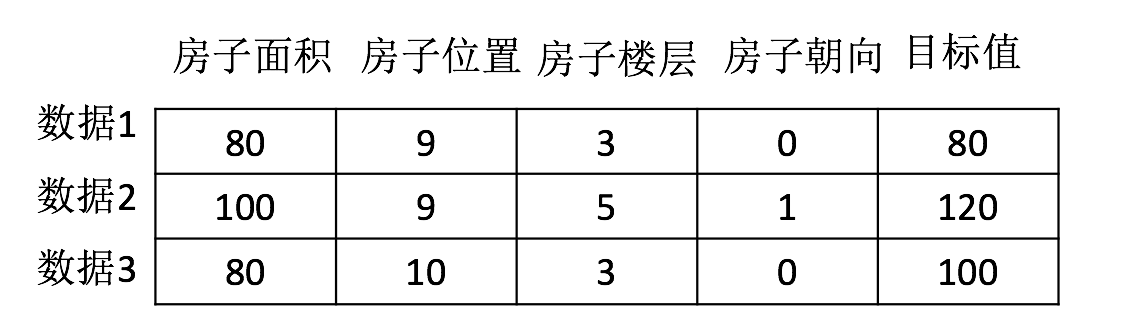

2.1.1 数据集的构成

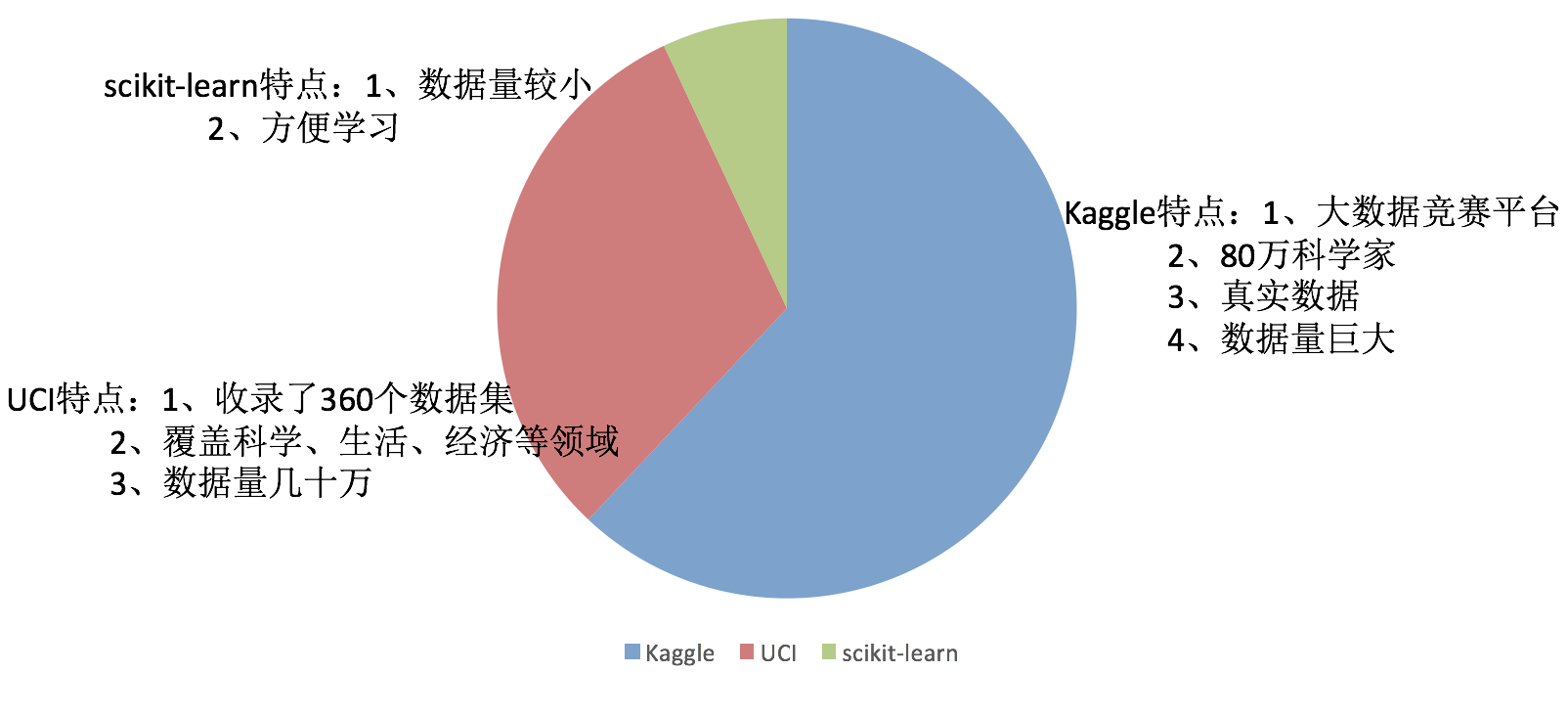

(1)可用数据集

(2)数据集的构成

结构:特征值+目标值

2.1.2 为什么需要特征工程(Feature Engineering)

机器学习领域的大神Andrew Ng(吴恩达)老师说“Coming up with features

is difficult, time-consuming, requires expert knowledge.

“Applied machine learning” is basically feature engineering.

”

注:业界广泛流传:数据和特征决定了机器学习的上限,而模型和算法只是逼近这个上限而已。

(1) 特征工程的意义和作用

意义: 会直接影响机器学习的效果

作用: 筛选,处理选择一些合适的特征

(2)举个简单的例子理解一下:

好孩子们要做饭,要想饭好吃,不仅需要精湛的厨艺,还需要优质的食材;

我们根据大厨选好的食材,去菜市场买;

然后对食材清洗,去掉糟粕,切好;

最后分类装到容器的过程,就想当于机器学习中的特征工程.

如何让好食材的价值全美实现,就看大厨怎么做了.做的过程就是算法训练的过程.

大厨多次实践尝试,调整做菜方法,火候,配料等,最后总结得出一个菜单.这个菜单便是模型.

有了这个模型,就可以让更多的好孩子做出好饭.

多好!

(3)特征工程包含内容:

特征抽取

特征预处理

特征选择

特征降维

2.1.3 特征工程所需工具

(1)Scikit-learn工具介绍

Python语言的机器学习工具

Scikit-learn包括许多知名的机器学习算法的实现

Scikit-learn文档完善,容易上手,丰富的API

(2)安装

conda install

Scikit-learn==0.20 或

pip3 install Scikit-learn==0.20 |

安装好之后可以通过以下命令查看是否安装成功

注:安装scikit-learn需要Numpy,Pandas等库

(3)Scikit-learn包含的内容

sklearn接口:

分类、聚类、回归

特征工程

模型选择、调优

2.2 特征抽取

学习目标

应用DictVectorizer实现对类别特征进行数值化、离散化

应用CountVectorizer实现对文本特征进行数值化

应用TfidfVectorizer实现对文本特征进行数值化

说出两种文本特征提取的方式区别

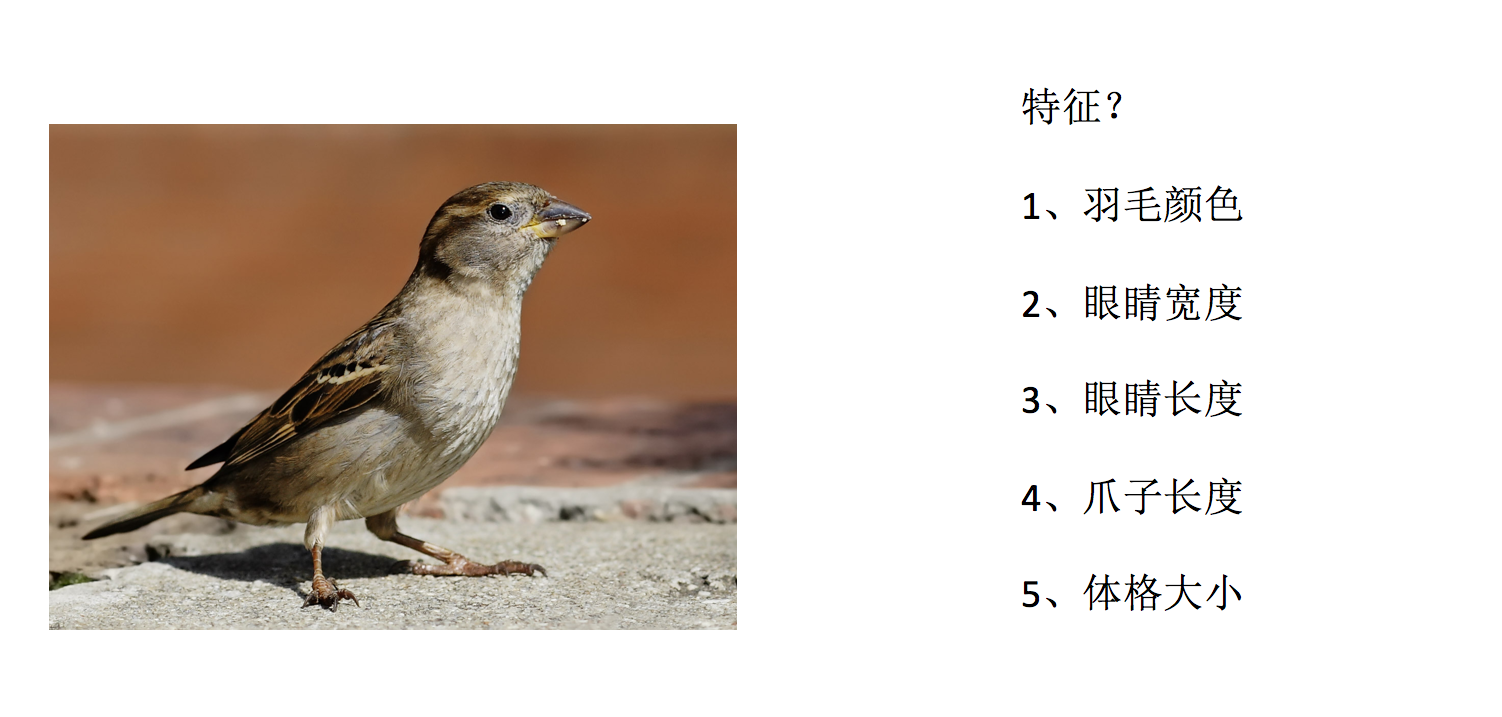

什么是特征提取呢?

2.2.1 特征提取

(1)包括将任意数据(如文本或图像)转换为可用于机器学习的数字特征.

注:特征值化是为了计算机更好的去理解数据

特征提取举例:

字典特征提取(特征离散化)

文本特征提取

图像特征提取(深度学习将介绍)

(2)特征提取API

| sklearn.feature_extraction |

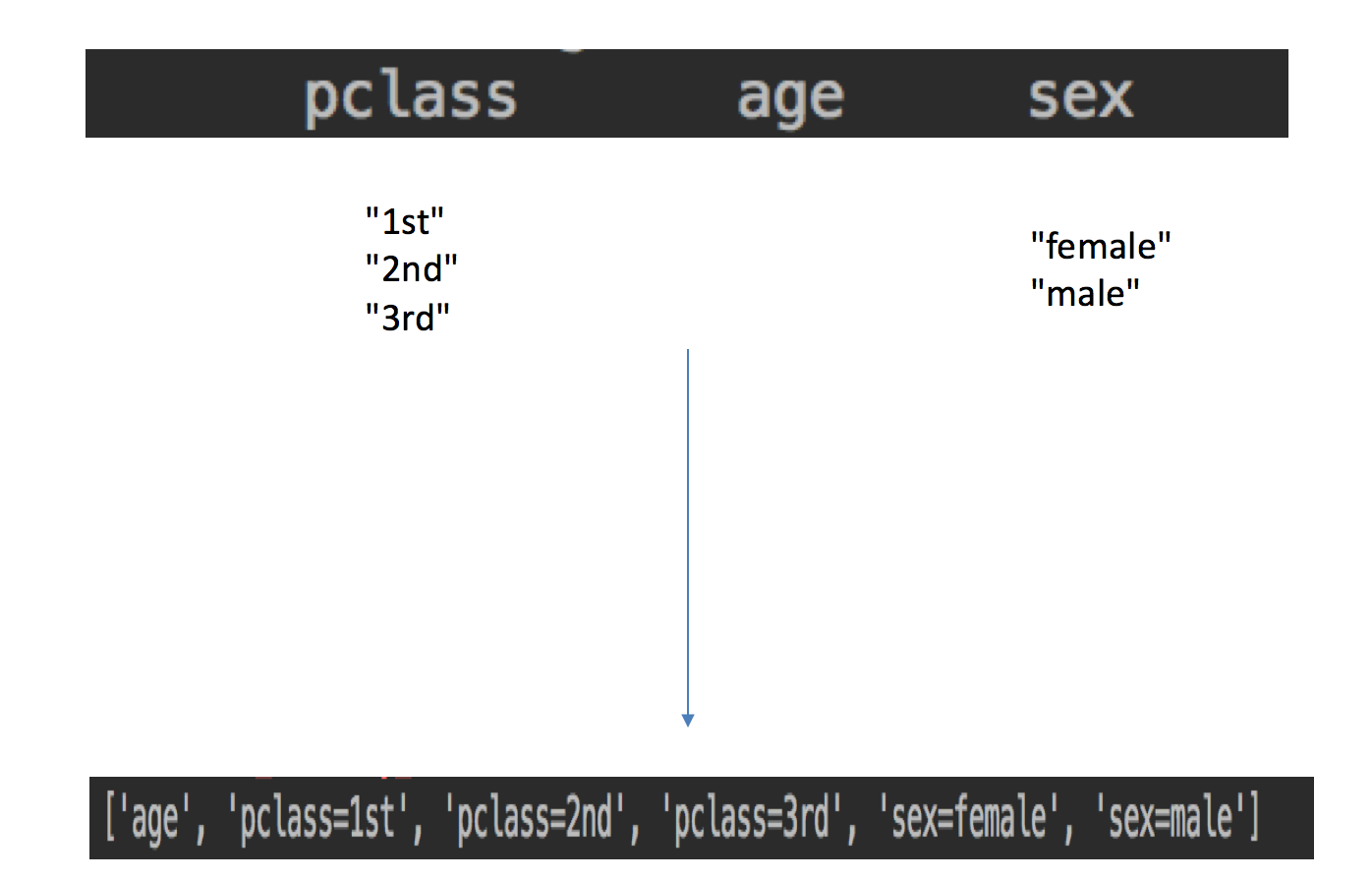

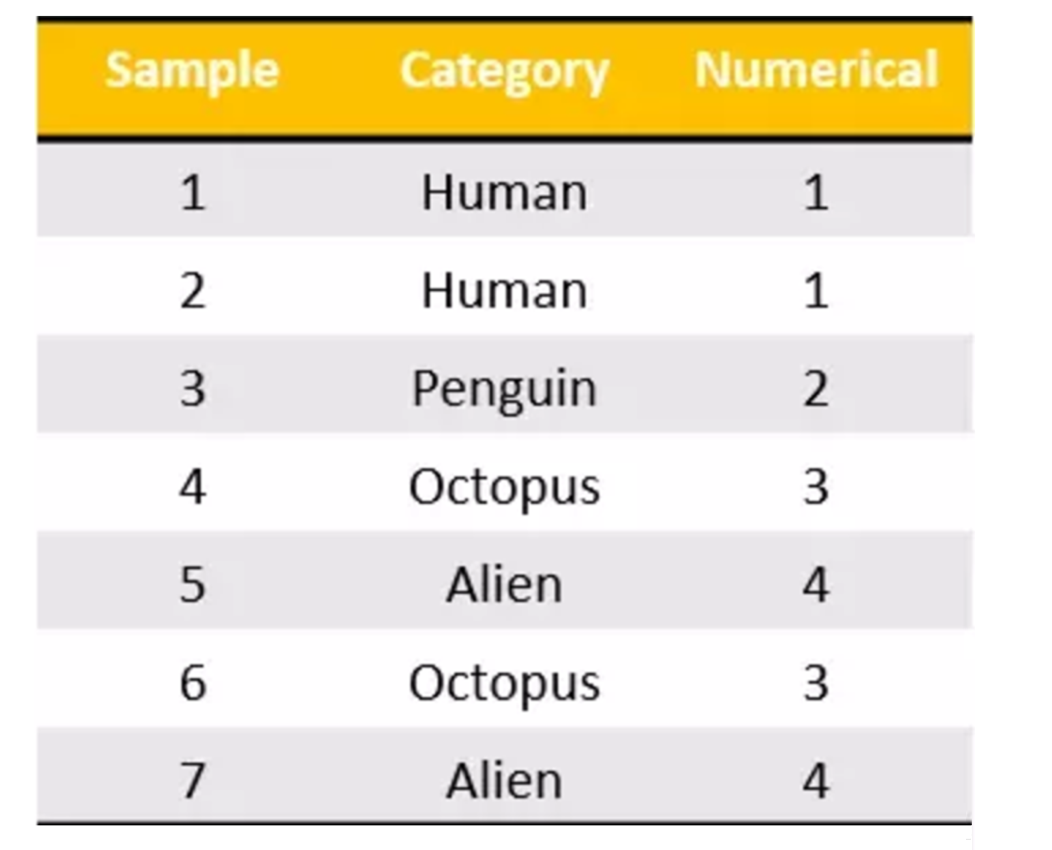

2.2.2 字典特征提取

(1)作用:对字典数据进行特征值化

sklearn.feature_extraction. DictVectorizer(sparse=True,…)

DictVectorizer.fit_transform(X) X: 字典或者包含字典的迭代器返回值:返回sparse矩阵

DictVectorizer.inverse_transform

(X) X:array 数组或者sparse矩阵 返回值:转换之前数据格式

DictVectorizer.get_feature_names() 返回类别名称

(2)应用:

我们对以下数据进行特征提取

[{'city': '北京','temperature':100}

{'city': '上海','temperature':60}

{'city': '深圳','temperature':30}] |

(3)流程分析:

实例化类DictVectorizer

调用fit_transform方法输入数据并转换(注意返回格式)

| from sklearn.feature_extraction

import DictVectorizer

def dict_vec():

# 实例化dict

dict = DictVectorizer(sparse=False)

#dict_vec调用fit_transform

#三个样本的特征值数据(字典形式)

data = dict.fit_transform ([{'city': '北京','temperature':100},{'city':

'上海','temperature':60}, {'city': '深圳','temperature':30}])

# 打印特征抽取之后的特征结果

print(dict.get_feature_names())

print(data)

return None

dict_vec()

|

输出结果:

/home/yuyang/anaconda3/envs/

tensor1-6/bin/python3.5 "/media/yuyang/Yinux/heima/

Machine learning/demo.py"

['city=上海', 'city=北京', 'city=深圳', 'temperature']

[[ 0. 1. 0. 100.]

[ 1. 0. 0. 60.]

[ 0. 0. 1. 30.]]

Process finished with exit code 0

|

DictVectorizer默认返回稀疏sparse矩阵,为解约内存空间

注意观察没有加上sparse=False参数的结果

/home/yuyang/anaconda3/envs/

tensor1-6/bin/python3.5 "/media/yuyang/Yinux/heima/

Machine learning/demo.py"

['city=上海', 'city=北京', 'city=深圳', 'temperature']

(0, 1) 1.0

(0, 3) 100.0

(1, 0) 1.0

(1, 3) 60.0

(2, 2) 1.0

(2, 3) 30.0

Process finished with exit code 0

|

这个结果并不是我们想要看到的,所以加上参数,得到想要的结果

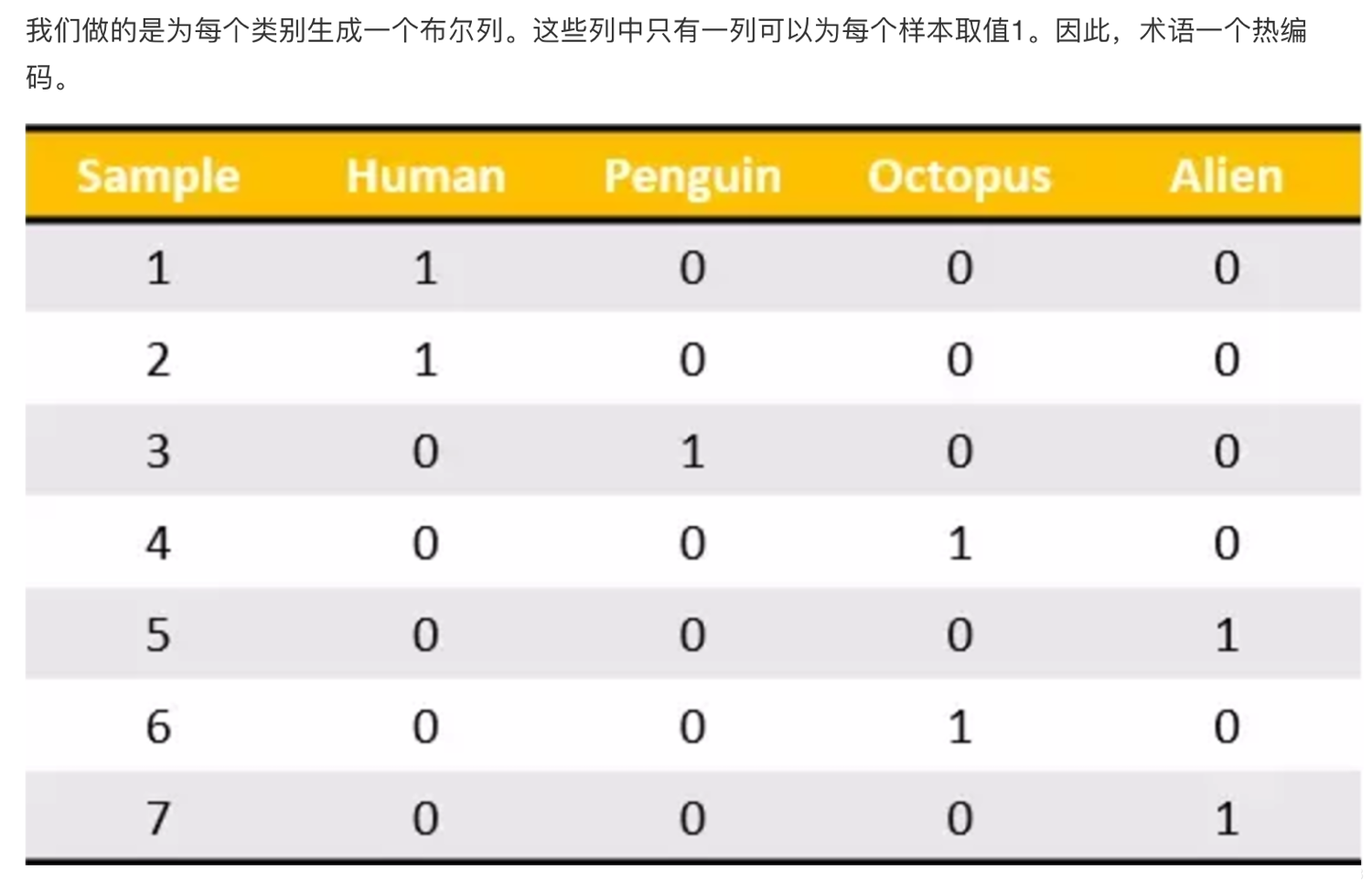

在做pandas的离散化时候,也实现了类似的效果,我们把这个处理数据的技巧用专业的称呼"one-hot"编码,之前我们也解释过为什么需要这种离散化的了,是分析数据的一种手段。比如下面例子:

(4)总结:对于特征当中存在类别信息的我们都会做one-hot编码处理

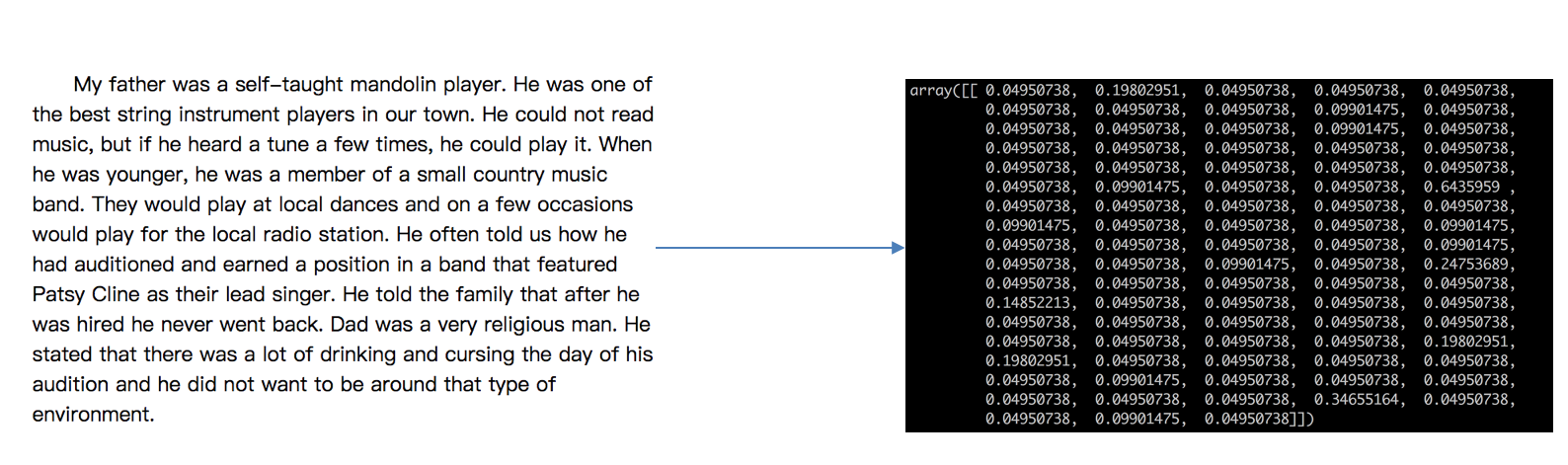

2.2.3文本特征提取

(1)作用:对文本数据进行特征值化

sklearn.feature_extraction.text.

CountVectorizer(stop_words=[])返回词频矩阵 stop_words=[]

在列表中指定不需要的词进行过滤

CountVectorizer.fit_transform(X)

X:文本或者包含文本字符串的可迭代对象 返回值:返回sparse矩阵

CountVectorizer.inverse_transform(X)

X:array数组或者sparse矩阵 返回值:转换之前数据格

CountVectorizer.get_feature_names()

返回值:单词列表

CountVectorizer:

单词列表:将所有文章的单词统计到一个列表当中(重复的次只当做一次),默认会过滤掉单个字母

对于单个字母,对文章主题没有影响,单词可以有影响.

sklearn.feature_extraction.text.TfidfVectorizer

(2)应用:

我们对以下数据进行特征提取:

["life

is short,i like python",

"life is too long,i dislike python"]

|

(3)流程分析:

实例化类CountVectorizer

调用fit_transform方法输入数据并转换 (注意返回格式,利用toarray()进行sparse矩阵转换array数组)

from sklearn.feature_extraction.text

import CountVectorizer

def countvec():

# 实例化count

count = CountVectorizer()

data = count.fit_transform (["life is is

short,i like python", "life is too long,i

dislike python"])

# 内容

print(count.get_feature_names())

print(data.toarray())

return None

countvec()

|

输出结果:

/home/yuyang/anaconda3/envs/

tensor1-6/bin/python3.5 "/media/yuyang/Yinux/heima/

Machine learning/demo.py"

['dislike', 'is', 'life', 'like', 'long', 'python',

'short', 'too']

[[0 2 1 1 0 1 1 0]

[1 1 1 0 1 1 0 1]]

Process finished with exit code 0

|

问题:如果我们将数据替换成中文?

"data

= count.fit_transform (["人生 苦短 我 喜欢 python",

"生活 太长了,我不 喜欢 python"])"

|

那么最终得到的结果是:

/home/yuyang/anaconda3/envs/ tensor1-6/bin/python3.5

"/media/yuyang/Yinux/heima/Machine learning/demo.py"

['python', '人生', '喜欢', '太长了', '我不', '生活', '苦短']

[[1 1 1 0 0 0 1]

[1 0 1 1 1 1 0]]

Process finished with exit code 0

|

注:不支持单个中文分词

为什么会得到这样的结果呢,仔细分析之后会发现英文默认是以空格分开的。其实就达到了一个分词的效果,所以我们要对中文进行分词处理.

中文分词采用jieba分词处理

(4)jieba分词处理

jieba.cut()返回词语组成的生成器

需要安装下jieba库

案例分析:

from sklearn.feature_extraction.text

import CountVectorizer

import jieba

def cutword():

'''

进行分词处理

:return:

'''

# 将三个句子用jieba.cut处理

contetn1 = jieba.cut("今天很残酷,明天更残酷,后天很美好,但绝对大部分是死在明天晚上,所以每个人不要放弃今天。")

contetn2 = jieba.cut("我们看到的从很远星系来的光是在几百万年之前发出的,这样当我们看到宇宙时,我们是在看它的过去。")

contetn3 = jieba.cut("如果只用一种方式了解某样事物,你就不会真正了解它。了解事物真正含义的秘密取决于如何将其与我们所了解的事物相联系。")

# 先将着三个转换成列表

c1 = ' '.join(list(contetn1))

c2 = ' '.join(list(contetn2))

c3 = ' '.join(list(contetn3))

return c1, c2, c3

def chvec():

# 实例化conunt

count = CountVectorizer (stop_words=['不要', '我们',

'所以']) #指定不需要的词进行过滤

# 定义一个分词的函数

c1, c2, c3 = cutword()

data = count.fit_transform([c1, c2, c3])

# 内容

print(count.get_feature_names())

print(data.toarray())

return None

|

输出结果:

/home/yuyang/anaconda3/envs/

tensor1-6/bin/python3.5 "/media/yuyang/Yinux/heima/

Machine learning/demo.py"

Building prefix dict from the default dictionary

...

Dumping model to file cache /tmp/jieba.cache

Loading model cost 0.604 seconds.

Prefix dict has been built succesfully.

['一种', '不会', '之前', '了解', '事物', '今天', '光是在',

'几百万年', '发出', '取决于', '只用', '后天', '含义', '大部分',

'如何', '如果', '宇宙', '放弃', '方式', '明天', '星系', '晚上',

'某样', '残酷', '每个', '看到', '真正', '秘密', '绝对', '美好',

'联系', '过去', '这样']

[[0 0 0 0 0 2 0 0 0 0 0 1 0 1 0 0 0 1 0 2 0

1 0 2 1 0 0 0 1 1 0 0 0]

[0 0 1 0 0 0 1 1 1 0 0 0 0 0 0 0 1 0 0 0 1 0

0 0 0 2 0 0 0 0 0 1 1]

[1 1 0 4 3 0 0 0 0 1 1 0 1 0 1 1 0 0 1 0 0 0

1 0 0 0 2 1 0 0 1 0 0]]

Process finished with exit code 0

|

该如何处理某个词或短语在多篇文章中出现的次数高这种情况

2.2.4 Tf-idf文本特征提取

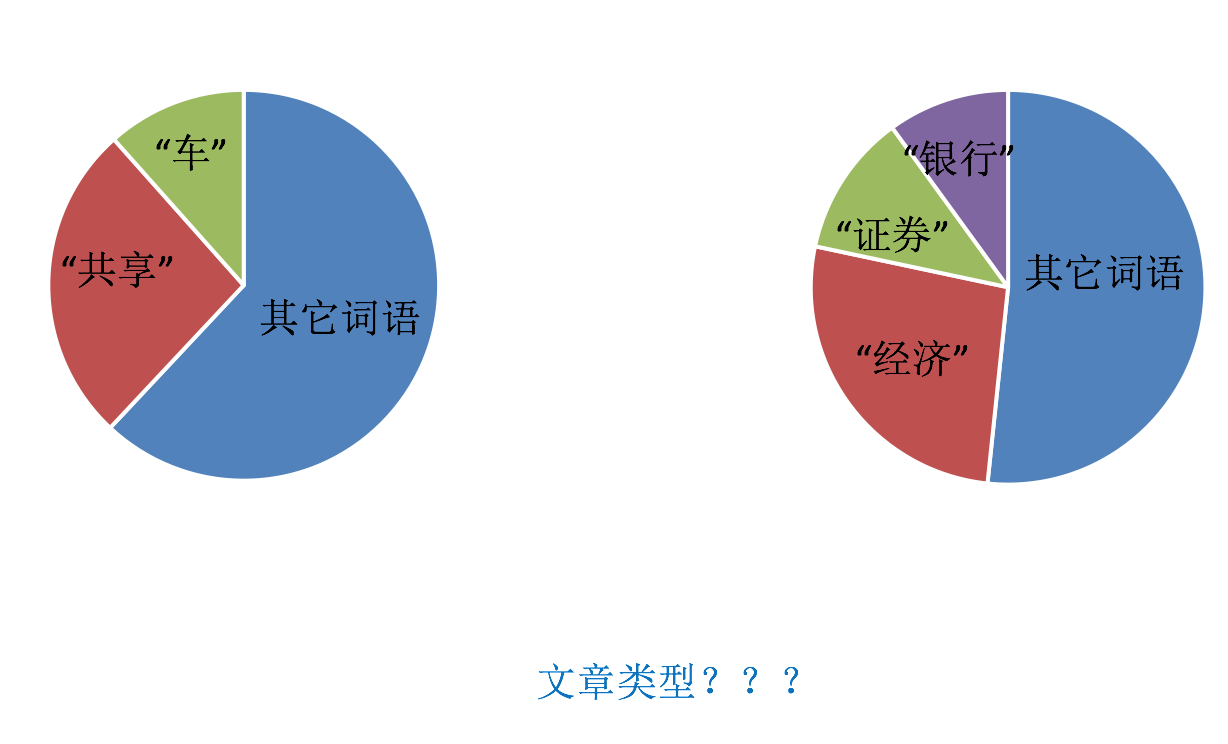

TF-IDF的主要思想:如果某个词或短语在一篇文章中出现的概率高,并在其他文章中很少出现,则认为此词或者短语具有很好的类别区分能力,适合用来分类。

TF-IDF作用:用以评估一字词对于一个文件集或一个语料库中的其中一份文件的重要程度。

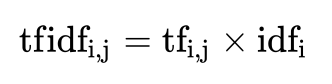

(1)公式

词频(term frequency,tf)指的是某一个给定的词语在该文件中出现的频率

逆向文档频率(inverse document frequency,idf)是一个词语普遍重要性的度量。某一特定词语的idf,可以由总文件数目除以包含该词语之文件的数目,再将得到的商取以10为底的对数得到

最终得出结果可以理解为重要程度。

注:假如一篇文件的总词语数是100个,而词语"非常"出现了5次,那么"非常"一词在该文件中的词频就是5/100=0.05。而计算文件频率(IDF)的方法是以文件集的文件总数,除以出现"非常"一词的文件数。所以,如果"非常"一词在1,000份文件出现过,而文件总数是10,000,000份的话,其逆向文件频率就是lg(10,000,000

/ 1,0000)=3。最后"非常"对于这篇文档的tf-idf的分数为0.05

* 3=0.15

(2)案例

rom sklearn.feature_extraction.text

import TfidfVectorizer

import jieba

def cutword():

'''

进行分词处理

:return:

'''

# 将三个句子用jieba.cut处理

contetn1 = jieba.cut("今天很残酷,明天更残酷,后天很美好,但绝对大部分是死在明天晚上,所以每个人不要放弃今天。")

contetn2 = jieba.cut("我们看到的从很远星系来的光是在几百万年之前发出的,这样当我们看到宇宙时,我们是在看它的过去。")

contetn3 = jieba.cut("如果只用一种方式了解某样事物,你就不会真正了解它。了解事物真正含义的秘密取决于如何将其与我们所了解的事物相联系。")

# 先将着三个转换成列表

c1 = ' '.join(list(contetn1))

c2 = ' '.join(list(contetn2))

c3 = ' '.join(list(contetn3))

return c1, c2, c3

def tfidfvec():

# 实例化conunt

tfidf = TfidfVectorizer()

# 进行3句话的分词

c1, c2, c3 = cutword()

#对两篇文章进行特征提取

data = tfidf.fit_transform([c1, c2, c3])

# 内容

print(tfidf.get_feature_names())

print(data.toarray())

return None

tfidfvec()

|

(3)Tf-idf的重要性

分类机器学习算法进行文章分类中前期数据处理方式.

根据不同词汇在文章中的重要程度,判断文章类别.

2.3特征预处理

学习目标:

了解数值型数据、类别型数据特点

应用MinMaxScaler实现对特征数据进行归一化

应用StandardScaler实现对特征数据进行标准化

2.3.1什么是特征预处理

#scikit-learn的解释

provides several common utility functions

and transformer classes to change raw feature vectors

into a representation that is more suitable for the

downstream estimators.

翻译:通过一些转换函数将特征转换成更加适合算法模型的过程

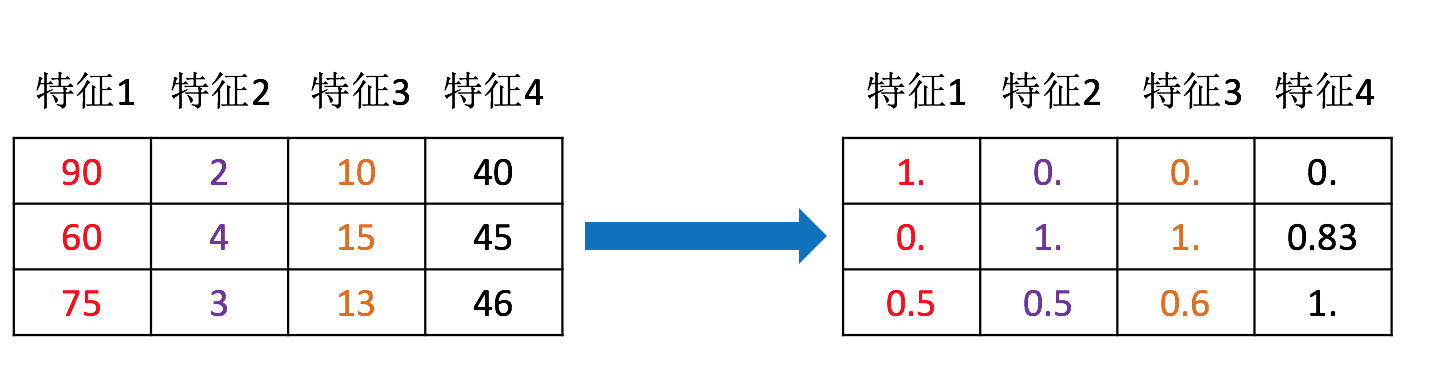

可以通过上面那张图来理解

(1)包含内容

数值型数据的无量纲化:

归一化

标准化

(2)特征预处理API

为什么我们要进行归一化/标准化?

特征的单位或者大小相差较大,或者某特征的方差相比其他的特征要大出几个数量级,容易影响(支配)目标结果,使得一些算法无法学习到其它的特征.

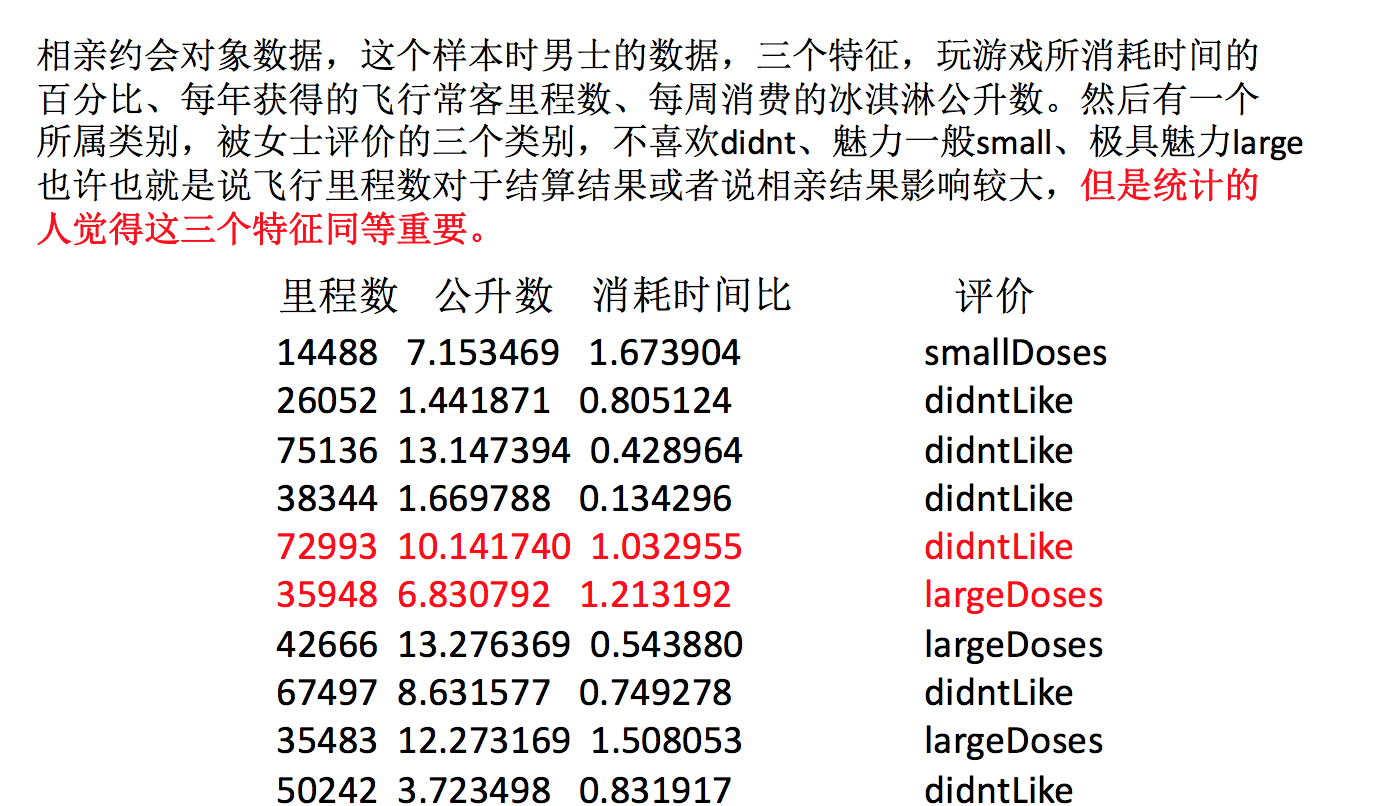

约会对象数据

我们需要用到一些方法进行无量纲化,使不同规格的数据转换到同一规格.

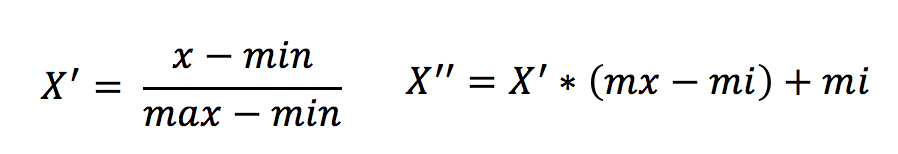

2.3.2 归一化

(1)定义

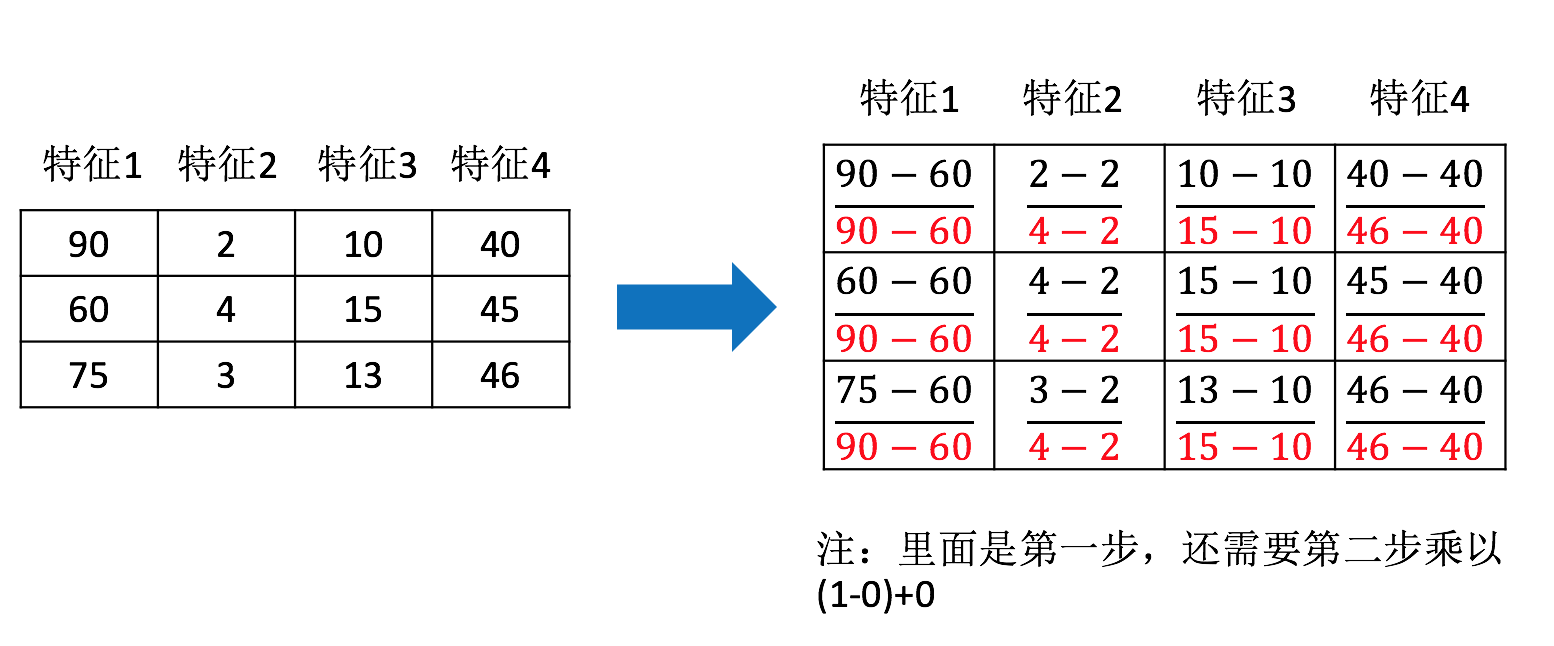

通过对原始数据进行变换把数据映射到(默认为[0,1])之间

(2)公式

那么怎么理解这个过程呢?我们通过一个例子

(3)API

sklearn.preprocessing.MinMaxScaler (feature_range=(0,1)…

)

MinMaxScalar.fit_transform(X)

X:numpy array格式的数据[n_samples,n_features]

返回值:转换后的形状相同的array

(4)数据计算

我们对以下数据进行运算:

milage,Liters,Consumtime,target

40920,8.326976,0.953952,3

14488,7.153469,1.673904,2

26052,1.441871,0.805124,1

75136,13.147394,0.428964,1

|

分析:

1、实例化MinMaxScalar

2、通过fit_transform转换

注:只取前三列数据(特征值),最后一列是目标值.

from sklearn.preprocessing

import MinMaxScaler

import pandas as pd

def minmaxscalar():

"""

对约会对象进行归一化处理

:return:

"""

#读取数据,选择要处理的特征

dating = pd.read_csv ("./data/dating.txt")

data = dating[['milage','Liters','Consumtime']]

#实例化minmaxscaler进行fit_transform

#mm = MinMaxScaler(feature_range=(2, 3))#将数据映射到2-3

mm = MinMaxScaler() #默认feature_range=(0, 1)

data = mm.fit_transform(data)

print(data)

print(data.shape)

return None

minmaxscalar()

|

输出结果:

[[0.44832535

0.39805139 0.56233353]

[0.15873259 0.34195467 0.98724416]

[0.28542943 0.06892523 0.47449629]

...

[0.29115949 0.50910294 0.51079493]

[0.52711097 0.43665451 0.4290048 ]

[0.47940793 0.3768091 0.78571804]]

(1000, 3)

Process finished with exit code 0

|

问题:如果数据中异常点较多,会有什么影响?

(5)归一化总结

注意最大值最小值是变化的,另外,最大值与最小值非常容易受异常点影响,所以归一化方法鲁棒性较差,只适合传统精确小数据场景。

怎么办?

2.3.3 标准化

(1)定义

通过对原始数据进行变换把数据变换到均值为0,标准差为1范围内

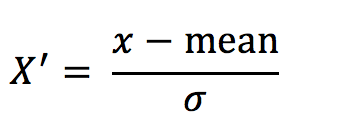

(2)公式

作用于每一列,mean为平均值,σ为标准差

所以回到刚才异常点的地方,我们再来看看标准化.

(1)对于归一化来说:如果出现异常点,影响了最大值和最小值,那么结果显然会发生改变

(2)对于标准化来说:如果出现异常点,由于具有一定数据量,少量的异常点对于平均值的影响并不大,从而方差改变较小。

(3)API

sklearn.preprocessing.StandardScaler( )

处理之后每列来说所有数据都聚集在均值0附近;标准差差为1

StandardScaler.fit_transform(X)

X:numpy array格式的数据[n_samples,n_features]

返回值:转换后的形状相同的array

(4)数值计算

from sklearn.preprocessing

import StandardScaler

import pandas as pd

def stdscalar():

"""

对约会对象进行标准化处理

:return:

"""

#读取数据,选择要处理的特征

dating = pd.read_csv("./data/dating.txt")

data = dating [['milage','Liters','Consumtime']]

#实例化minmaxscaler进行fit_transform

#mm = MinMaxScaler(feature_range=(2, 3))#将数据映射到2-3

std = StandardScaler() #默认feature_range=(0,

1)

data = std.fit_transform(data)

print(data)

return None

stdscalar()

|

输出结果:

[[ 0.33193158

0.41660188 0.24523407]

[-0.87247784 0.13992897 1.69385734]

[-0.34554872 -1.20667094 -0.05422437]

...

[-0.32171752 0.96431572 0.06952649]

[ 0.65959911 0.60699509 -0.20931587]

[ 0.46120328 0.31183342 1.00680598]]

Process finished with exit code 0

|

(5)总结

标准化方法,在已有样本足够多的情况下比较稳定,适合现代嘈杂大数据场景。

处理后,每列数据均值为0

2.4 特征选择

学习目标:

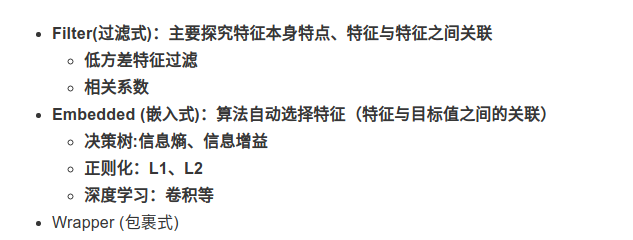

知道特征选择的嵌入式、过滤式以及包裹氏三种方式

应用VarianceThreshold实现删除低方差特征

了解相关系数的特点和计算

应用相关性系数实现特征选择

在引入特征选择之前,先介绍一个降维的概念.

2.4.1降维

降维是指在某些限定条件下,降低**随机变量(特征)个数,得到一组“不相关”**主变量的过程.

2.4.2 降维的2种方式

(1)特征选择

(2)主成分分析(可以理解一种特征提取的方式)

2.4.3什么是特征选择

(1)定义

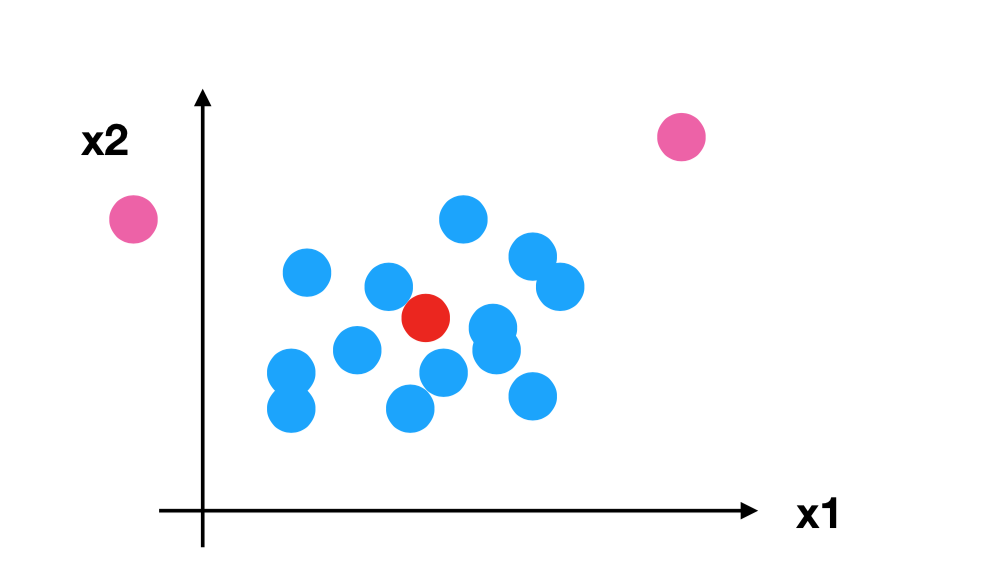

数据中包含冗余或无关变量(或称特征、属性、指标等),旨在从原有特征中找出主要特征。

(2)方法

(3)模块

| sklearn.feature_selection

|

2.4.4 过滤式

2.4.4.1 低方差特征过滤

删除低方差的一些特征。再结合方差的大小来考虑这个方式的角度。

(方差可以表示数据的离散程度)

特征方差小:某个特征大多样本的值比较相近

特征方差大:某个特征很多样本的值都有差别

(1)API

sklearn.feature_selection. VarianceThreshold(threshold

= 0.0)

删除所有低方差特征

Variance.fit_transform(X)

X:numpy array格式的数据[n_samples,n_features]

返回值:训练集差异低于threshold的特征将被删除。默认值是保留所有非零方差特征,即删除所有样本中具有相同值的特征。threshold的值越大,删除的特征值越多.

(2)数值计算

我们对下面数据进行运算

[[0, 2, 0,

3],

[0, 1, 4, 3],

[0, 1, 1, 3]] |

(3)数值分析

1、初始化VarianceThreshold,指定阀值方差

2、调用fit_transform

from sklearn.feature_selection

import VarianceThreshold

def varthreshold():

"""

删除所有低方差特征

:return:

"""

var = VarianceThreshold (threshold=0.0) #删除方差为0的特征

data = var.fit_transform([[0, 2, 0, 3],

[0, 1, 4, 3],

[0, 1, 1, 3]])

print(data)

return None

varthreshold()

|

/home/yuyang/anaconda3/envs/

tensor1-6/bin/python3.5 "/media/yuyang/Yinux/heima/

Machine learning/demo.py"

[[2 0]

[1 4]

[1 1]]

Process finished with exit code 0

|

2.4.4.2 相关系数

皮尔逊相关系数(Pearson Correlation Coefficient)

反映变量之间相关关系密切程度的统计指标

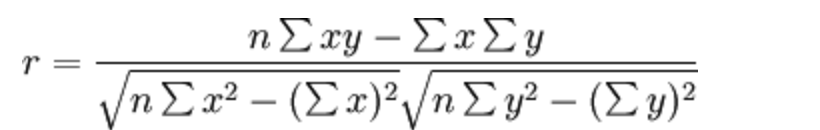

(1)皮尔逊相关系数(无需记忆)

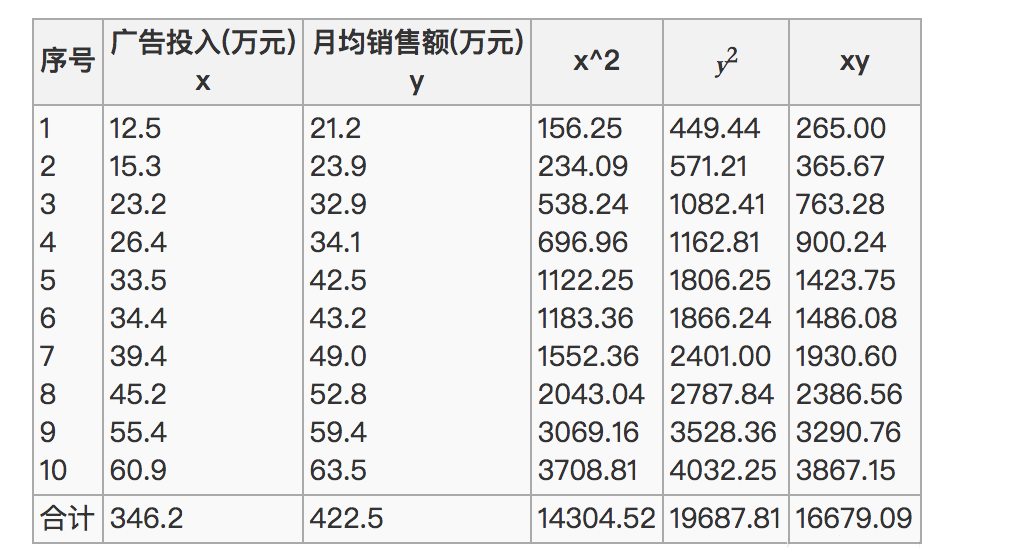

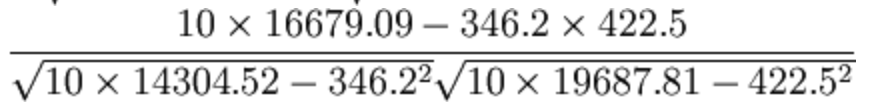

比如说我们计算年广告费投入与月均销售额

那么之间的相关系数怎么计算

最终计算:

上面是协方差,下面是各自的标准差

所以我们最终得出结论是广告投入费与月平均销售额之间有高度的正相关关系。

(2)特点

(3)API

from scipy.stats

import pearsonr

#x:(N,)array_like

#y(N,)arrat_like

Returns:(Pearson's correlation coefficient,

p-value) #返回元组中,第一个数为皮尔逊相关系数

|

(4)数值分析

import pandas

as pd

from scipy.stats import pearsonr

data = pd.read_csv ('./data/factor_returns.csv')

factor = ['pe_ratio', 'pb_ratio', 'market_cap',

'return_on_asset_net_profit', 'du_return_on_equity',

'ev',

'earnings_per_share', 'revenue', 'total_expense']

datas = [(factor[i], factor[j + 1], pearsonr(data[factor[i]],

data[factor[j + 1]])[0])

for i in range(len(factor))

for j in range(i, len(factor) - 1)]

for data in datas:

print("指标 {} 与指标 {} 之间的相关性大小为 {} ".format(*data))

|

2.5 特征降维

目标:

应用PCA实现特征的降维

应用:

用户与物品类别之间主成分分析

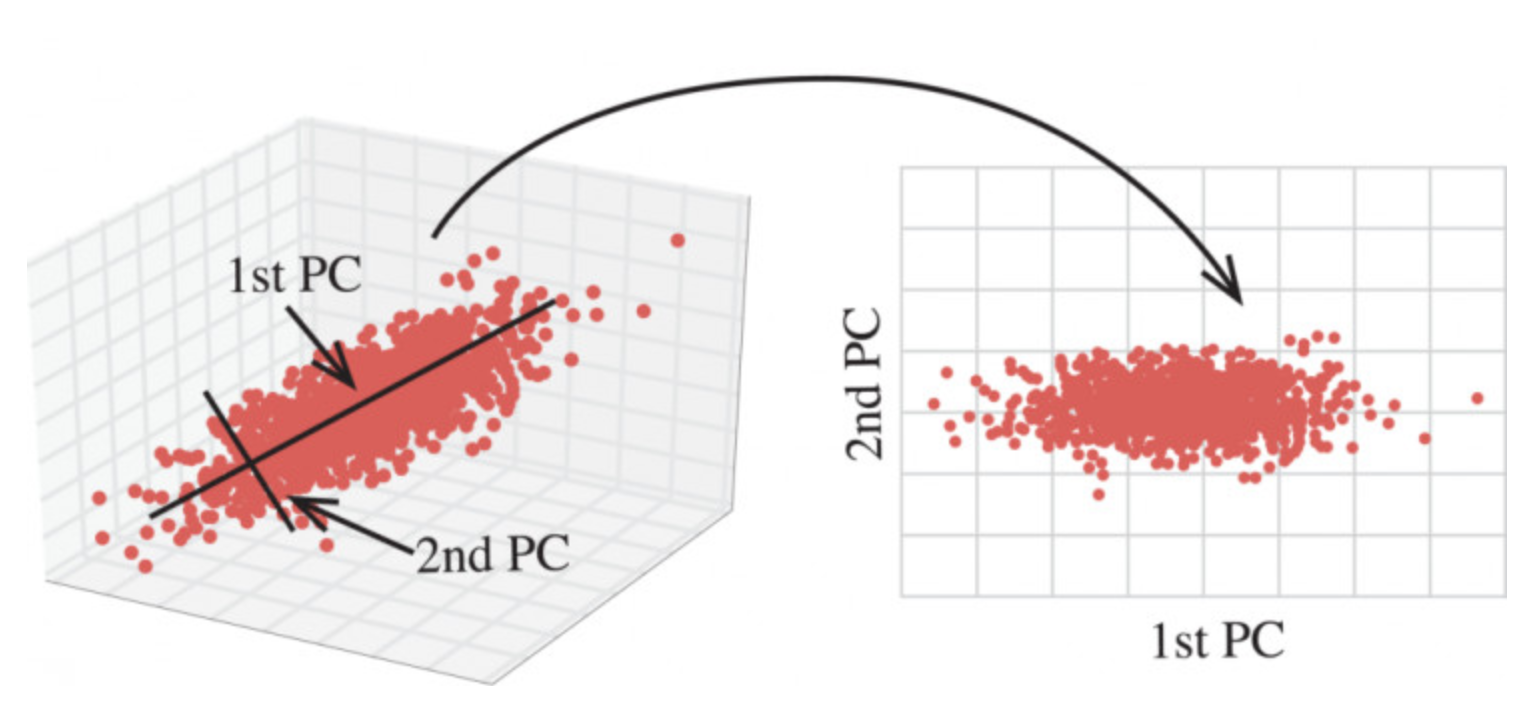

2.5.1 什么是主成分分析(PCA)

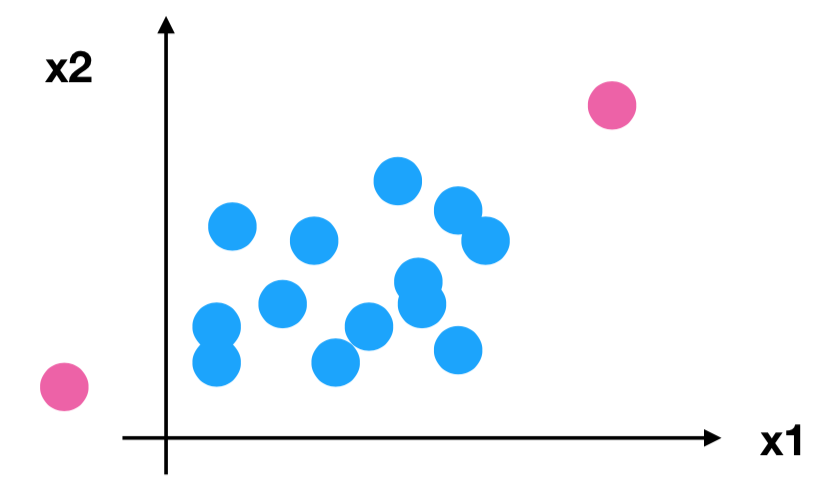

定义:高维数据转化为低维数据的过程,在此过程中可能会舍弃原有数据、创造新的变量

作用:是数据维数压缩,尽可能降低原数据的维数(复杂度),损失少量信息。

应用:回归分析或者聚类分析当中

那么更好的理解这个过程呢?我们来看一张图:

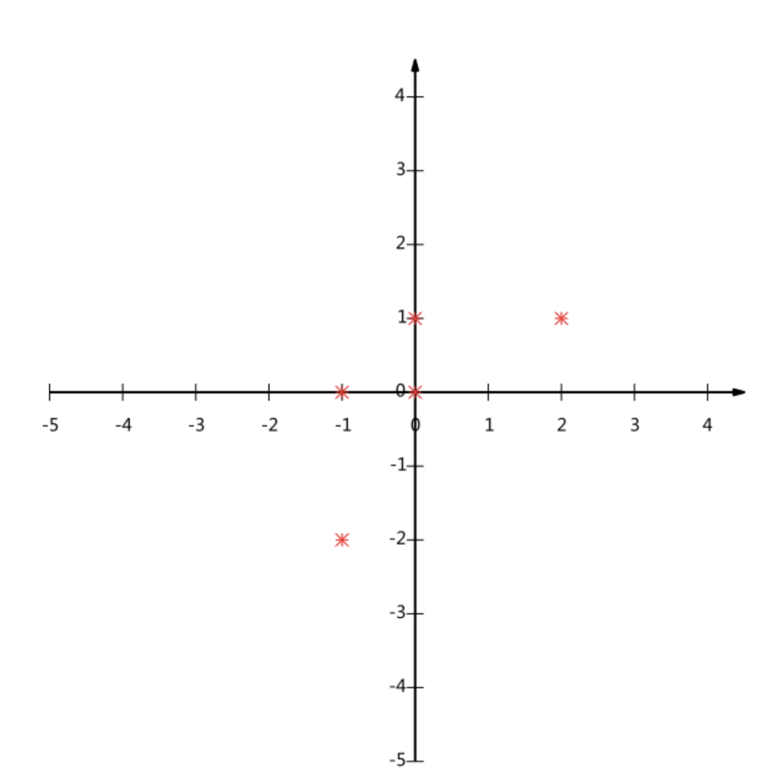

(1)计算案例理解

假设对于给定5个点,数据如下:

(-1,-2)

(-1, 0)

( 0, 0)

( 2, 1)

( 0, 1)

|

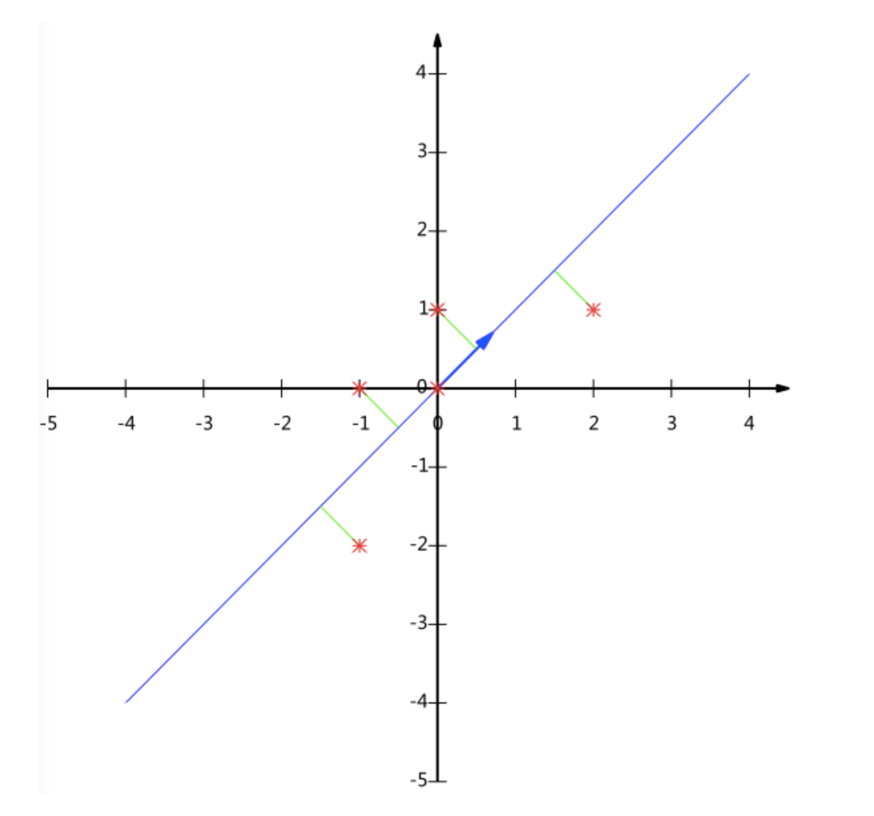

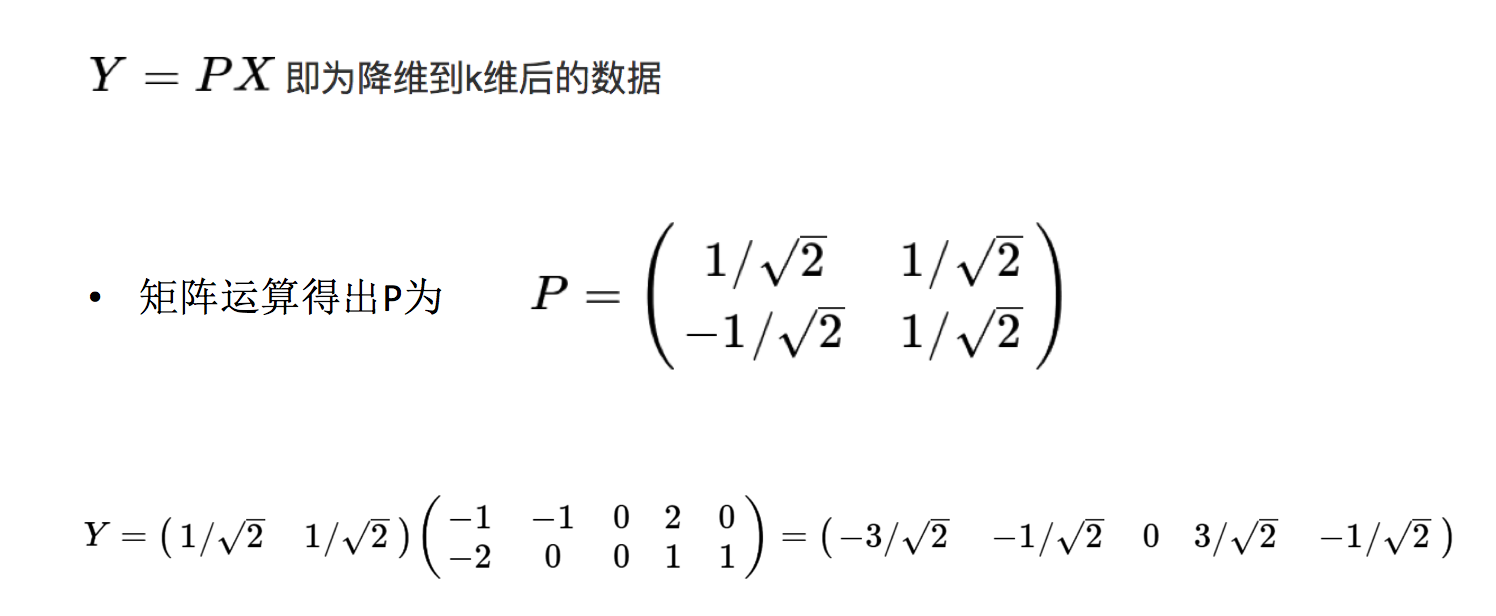

要求:将这个二维的数据简化成一维? 并且损失少量的信息

这个过程如何计算的呢?找到一个合适的直线,通过一个矩阵运算得出主成分分析的结果:

(2)API

sklearn.decomposition.PCA (n_components=None)

将数据分解为较低维数空间

n_components:

小数:表示保留百分之多少的信息

整数:减少到多少特征

PCA.fit_transform(X) X:numpy array格式的数据[n_samples,n_features]

返回值:转换后指定维度的array

(3)数值分析

先举一个简单的例子:

将下面的3个样本,4个特征矩阵降维:

[[2,8,4,5],

[6,3,0,8],

[5,4,9,1]]

|

from sklearn.decomposition

import PCA

def pca():

"""

主成分分析进行降维

:return:

"""

pca = PCA(n_components=0.7) #保留百分之五十的信息

data = pca.fit_transform ([[2,8,4,5],[6,3,0,8],[5,4,9,1]])

print(data)

return None

pca()

|

输出:

[[ 1.22879107e-15

3.82970843e+00]

[ 5.74456265e+00 -1.91485422e+00]

[-5.74456265e+00 -1.91485422e+00]]

Process finished with exit code 0

|

(4)应用场景

特征数量非常大的时候(上百个特征):PCA去压缩相关的冗余信息

创造新的变量(新的特征):例如将股票数据中,高度相关的两个指标,revenue与指标total_expens压缩成一个新的指标(特征)

2.5.2 案例:探究用户对物品类别的喜好细分降维

Kaggle项目:

(1)数据如下:

products.csv:商品信息

字段:product_id, product_name, aisle_id,

department_id

order_products__prior.csv:订单与商品信息

字段:order_id, product_id, add_to_cart_order,

reordered

orders.csv:用户的订单信息

字段:order_id,user_id,eval_set, order_number,….

aisles.csv:商品所属具体物品类别

字段: aisle_id, aisle

(2)需求

(3)分析

合并表,使得user_id与aisle在一张表当中

进行交叉表变换

进行降维

(4)完整代码

from sklearn.decomposition

import PCA

import pandas as pd

def pca():

"""

主成分分析进行降维

:return:

"""

# 导入四张表的数据

prior = pd.read_csv ("./data/instacart/order_products__prior.csv")

products = pd.read_csv ("./data/instacart/products.csv")

orders = pd.read_csv ("./data/instacart/orders.csv")

aisles = pd.read_csv ("./data/instacart/aisles.csv")

# 合并四张表到一张表

#on指定两张表共同拥有的键 内连接

mt = pd.merge(prior, products, on= ['product_id',

'product_id'])

mt1 = pd.merge(mt, orders, on= ['order_id', 'order_id'])

mt2 = pd.merge(mt1, aisles, on= ['aisle_id', 'aisle_id'])

# 进行交叉表变换,pd.crosstab 统计用户与物品之间的次数关系(统计次数)

user_sisle_cross = pd.crosstab (mt2['user_id'],

mt2['aisle'])

# PCA进行主成分分析

pc = PCA(n_components=0.95)

data = pc.fit_transform (user_sisle_cross)

print(data)

pca()

|

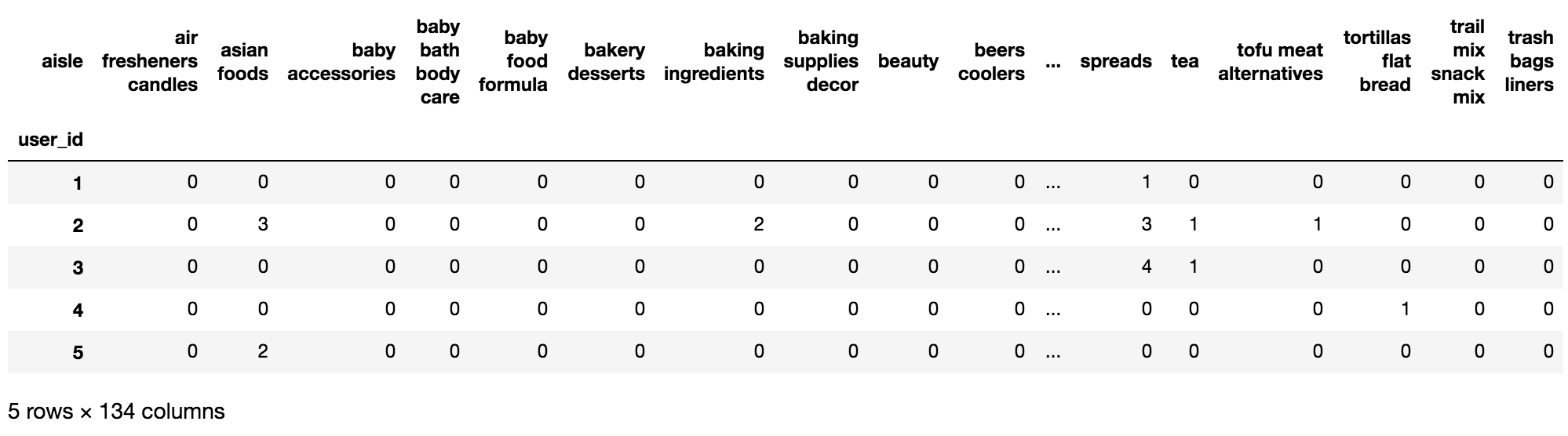

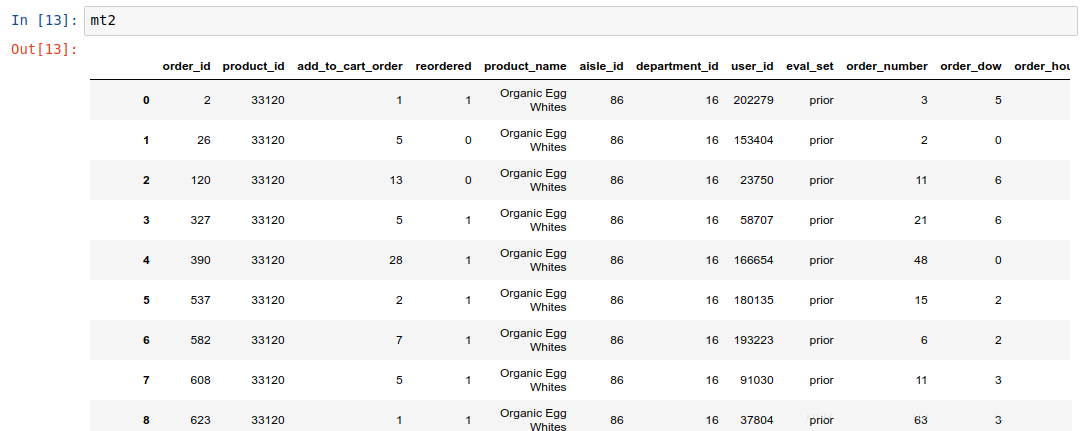

mt2表输出:

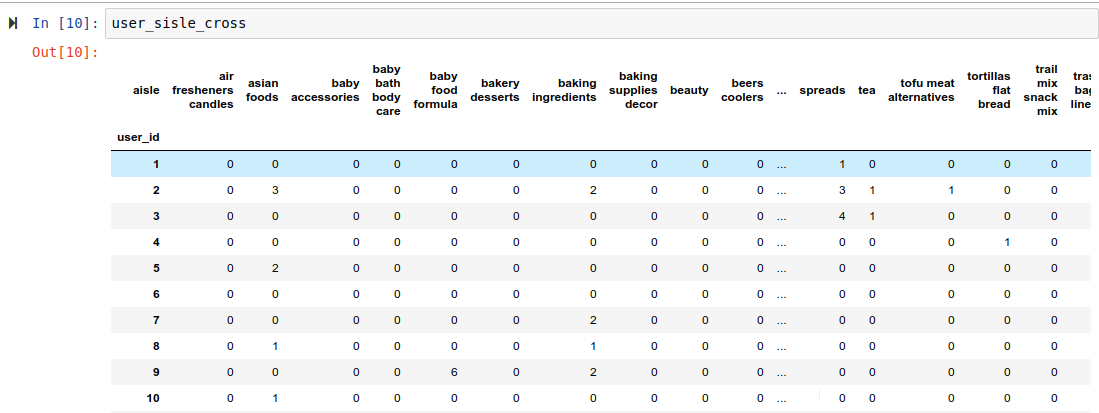

交叉表输出:

降维后输出:————————————————

|