
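1 Prerequisites

Make sure all the required software is installed on every node in your cluster: the Sun JDK, ssh, and Hadoop.

Java™ 1.6.x must be installed; the Java distribution released by Sun is recommended.

ssh must be installed and sshd must be kept running, so that the Hadoop scripts can manage the remote Hadoop daemons.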
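A quick way to confirm that the required commands are present on a node is a small shell check. This is a minimal sketch, not part of Hadoop: `check_prereqs` is a hypothetical helper, and it only verifies that the commands are on PATH, not that sshd is actually running.

```shell
# check_prereqs CMD...: report every command that is not found on PATH.
# Hypothetical helper; returns 0 if all commands exist, 1 otherwise.
check_prereqs() {
  local missing=0 cmd
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "missing: $cmd" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

Usage, on each node: `check_prereqs java ssh`. To see whether the ssh daemon is running you could additionally try `pgrep -x sshd`.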

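2 Setting Up the Test Environment

2.1 Preparation

Operating system: Ubuntu

Deployment: VMware

After installing one Ubuntu virtual machine in VMware, you can export or clone it to create the other two virtual machines.

Notes:

Make sure the IP addresses of the virtual machines are in the same subnet as the host's IP address, so that the virtual machines and the host can communicate with each other.

To put the virtual machines in the same subnet as the host, set their network connection to bridged mode.

Machines: one master and several slaves. Configure /etc/hosts on every machine so that the machines can reach each other by hostname, for example: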

10.64.56.76 node1 # master

10.64.56.77 node2 # slave1

10.64.56.78 node3 # slave2
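Since every machine needs identical entries, it helps to add them idempotently so that re-running a setup step does not duplicate lines. The function below is a sketch under that assumption; `add_host_entry` is a hypothetical name, and editing the real /etc/hosts requires root privileges.

```shell
# add_host_entry FILE IP NAME: append "IP NAME" to FILE (e.g. /etc/hosts)
# unless NAME is already mapped there. Hypothetical helper.
add_host_entry() {
  local file=$1 ip=$2 name=$3
  # Look for NAME among the hostname fields of non-comment lines,
  # stopping at an inline "#" comment. Exit status 0 means "already present".
  if awk -v n="$name" '
       /^[ \t]*#/ { next }
       { for (i = 2; i <= NF; i++) { if ($i ~ /^#/) break; if ($i == n) found = 1 } }
       END { exit !found }' "$file" 2>/dev/null; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}
```

For example, as root on each machine: `add_host_entry /etc/hosts 10.64.56.76 node1`, and likewise for node2 and node3.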


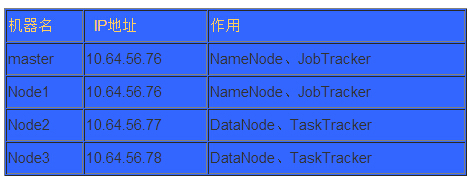

Host information:

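2.2 Installing the JDK

# Install the JDK

$ sudo apt-get install sun-java6-jdk

With this installation, the java executable is added to /usr/bin/ automatically.

Verify: run the shell command java -version and check that the reported version matches the one you installed.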
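Note that java -version writes to standard error, not standard output, so a script has to redirect it before parsing. A minimal sketch that extracts the major.minor part of the version string (the helper name `java_major_minor` is hypothetical):

```shell
# java_major_minor: read `java -version` output on stdin and print the
# "major.minor" part of the quoted version string, e.g. 1.6 for "1.6.0_26".
# Hypothetical helper.
java_major_minor() {
  sed -n 's/.*version "\([0-9][0-9]*\.[0-9][0-9]*\).*/\1/p' | head -n 1
}
```

Usage: `java -version 2>&1 | java_major_minor` prints 1.6 for a Java 6 JDK.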

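2.3 Downloading and Creating the User

$ sudo useradd -m hadoop

$ sudo passwd hadoop

$ cd /home/hadoop

Create the same directory on all machines, or simply create the same user on all of them; it is best to use that user's home directory as the Hadoop installation path.

For example, the installation path on every machine is /home/hadoop/hadoop-0.20.203. You do not need to mkdir it: it is created automatically when you unpack the Hadoop tarball under /home/hadoop/.

(You can of course install under /usr/local/ instead, for example /usr/local/hadoop-0.20.203/, and then hand the directory over to the hadoop user:

$ sudo chown -R hadoop /usr/local/hadoop-0.20.203/

$ sudo chgrp -R hadoop /usr/local/hadoop-0.20.203/)

(It is best not to install as root, because root access between the machines is not recommended.)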

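2.4 Installing and Configuring ssh

1) Install: sudo apt-get install ssh

Once it is installed, the ssh command can be used directly.

Run netstat -nat to check whether port 22 is open (in LISTEN state).

Test: ssh localhost

Enter the current user's password and press Enter. If you get a shell, the installation succeeded; note that at this point ssh login still requires a password.

(With this default installation, the configuration files are under /etc/ssh/; the sshd configuration file is /etc/ssh/sshd_config.)

Note: ssh must be installed on every machine.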
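The netstat check can also be scripted. The sketch below (hypothetical helper `port_listening`) assumes the usual Linux `netstat -nat` column layout, where the 4th column is the local address and the 6th is the state; it succeeds if something is listening on the given port over IPv4 or IPv6.

```shell
# port_listening PORT: read `netstat -nat` output on stdin and succeed if a
# socket in LISTEN state has a local address ending in ":PORT"
# (covers IPv4 "0.0.0.0:22" and IPv6 ":::22"). Hypothetical helper.
port_listening() {
  awk -v p=":$1" '
    $6 == "LISTEN" && substr($4, length($4) - length(p) + 1) == p { found = 1 }
    END { exit !found }'
}
```

Usage: `netstat -nat | port_listening 22 && echo "sshd is listening"`; the same check works for the Hadoop ports 49000 and 49001 used later.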

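2) Configuration:

Once Hadoop is running, the start and stop scripts on the NameNode (master) use SSH (Secure Shell) to start and stop the daemons on each DataNode. Commands therefore have to run between nodes without a password prompt, so we need to configure SSH for passwordless public-key authentication.

Taking the three machines in this article as an example, node1 is the master node and needs to connect to node2 and node3. Make sure ssh is installed on every machine and that the sshd service is running on the DataNode machines.

(Explanation: [hadoop@hadoop ~]$ ssh-keygen -t rsa

This command generates a key pair for the user hadoop on the host hadoop. When asked where to save it, press Enter to accept the default path; when prompted for a passphrase, press Enter again, i.e. leave the passphrase empty. The generated key pair, id_rsa and id_rsa.pub, is stored in /home/hadoop/.ssh by default. Then copy the contents of id_rsa.pub into /home/hadoop/.ssh/authorized_keys on every machine (including the local one): if a machine already has an authorized_keys file, append the contents of id_rsa.pub to the end of it; if not, simply copy the file over.)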
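The "append if it exists, otherwise create" logic described above can be written as one idempotent step. This is a sketch with a hypothetical helper name, `append_pubkey`; it runs locally on the machine whose authorized_keys is being edited. OpenSSH's own ssh-copy-id does a similar job over the network.

```shell
# append_pubkey PUBKEY_FILE AUTH_KEYS_FILE: add the public key to the
# authorized_keys file unless that exact line is already there.
# Hypothetical helper.
append_pubkey() {
  local pub=$1 auth=$2 dir
  dir=$(dirname "$auth")
  # sshd (StrictModes) refuses keys if ~/.ssh or authorized_keys is writable by others
  mkdir -p "$dir" && chmod 700 "$dir"
  touch "$auth" && chmod 600 "$auth"
  # Append the key only if that exact line is not already present
  if ! grep -qxF -f "$pub" "$auth"; then
    cat "$pub" >> "$auth"
  fi
}
```

For example: `append_pubkey ~/.ssh/id_rsa.pub ~/.ssh/authorized_keys`. The permissions 700/600 are stricter than the 644 used below and are also accepted by sshd.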

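3) First, set up passwordless automatic ssh login on the NameNode.

Switch to the hadoop user (the hadoop user must be able to log in without a password, because the Hadoop installed later is owned by hadoop).

$ su hadoop

$ cd /home/hadoop

$ ssh-keygen -t rsa

Then keep pressing Enter.

When it finishes, a hidden directory .ssh has been created in the home directory.

Change into it, list the files with ls, and copy the public key to authorized_keys:

$ cd .ssh

$ ls

$ cp id_rsa.pub authorized_keys

Test, for example:

$ ssh node1

The first ssh connection shows a prompt like this:

The authenticity of host 'node1 (10.64.56.76)' can't be established.

RSA key fingerprint is 03:e0:30:cb:6e:13:a8:70:c9:7e:cf:ff:33:2a:67:30.

Are you sure you want to continue connecting (yes/no)?

Type yes to continue. This adds the server to your list of known hosts.

The connection succeeds without a password.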

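4) Copy authorized_keys to node2 and node3

To let node1 log in to node2 and node3 automatically without a password, first run the following on node2 and node3:

$ su hadoop

$ cd /home/hadoop

$ ssh-keygen -t rsa

Press Enter at every prompt.

Then go back to node1 and copy authorized_keys to node2 and node3:

[hadoop@hadoop .ssh]$ scp authorized_keys node2:/home/hadoop/.ssh/

[hadoop@hadoop .ssh]$ scp authorized_keys node3:/home/hadoop/.ssh/

You will be prompted for a password; enter the password of the hadoop account.

Change the permissions of your authorized_keys file:

[hadoop@hadoop .ssh]$ chmod 644 authorized_keys

Test: ssh node2 or ssh node3 (type yes the first time).

If no password is required, the configuration succeeded; if a password is still requested, check that the configuration above is correct.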
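Instead of logging in to each slave by hand, you can test all of them non-interactively. With `-o BatchMode=yes`, ssh fails instead of prompting for a password, so any host that still needs one is reported. A sketch, where `check_passwordless` is a hypothetical helper and the `SSH` variable is an assumption added only so the command can be swapped out for a dry run:

```shell
# check_passwordless HOST...: try a non-interactive login to each host.
# BatchMode=yes makes ssh fail instead of asking for a password.
# SSH may be overridden for a dry run (assumption, not an ssh feature).
check_passwordless() {
  local host rc=0
  for host in "$@"; do
    if ${SSH:-ssh} -o BatchMode=yes -o ConnectTimeout=5 "$host" true \
         </dev/null >/dev/null 2>&1; then
      echo "ok: $host"
    else
      echo "FAIL: $host"
      rc=1
    fi
  done
  return "$rc"
}
```

Usage on node1: `check_passwordless node2 node3` prints one ok/FAIL line per host and returns non-zero if any host failed.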

2.5 Installing Hadoop

# Switch to the hadoop user

$ su hadoop

$ wget http://apache.mirrors.tds.net//hadoop/common/hadoop-0.20.203.0/hadoop-0.20.203.0rc1.tar.gz

After downloading the package, simply unpack it to install:

$ tar -zxvf hadoop-0.20.203.0rc1.tar.gz

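1) Installing a Hadoop cluster usually means unpacking the software on every machine in the cluster, using the same installation path everywhere. If HADOOP_HOME denotes the installation root, HADOOP_HOME is normally the same on all machines in the cluster.

2) If all machines in the cluster have identical environments, you can configure Hadoop on one machine and then copy the configured software, i.e. the whole hadoop-0.20.203 directory, to the same location on the other machines.

3) You can scp Hadoop from the master to the same directory on each slave, and then edit each slave's hadoop-env.sh if its JAVA_HOME differs.

4) To make the hadoop command, start-all.sh and the other scripts convenient to run, edit /etc/profile on the master and add the following:

export HADOOP_HOME=/home/hadoop/hadoop-0.20.203

export PATH=$PATH:$HADOOP_HOME/bin

After editing, run source /etc/profile for the change to take effect.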

5) Configure conf/hadoop-env.sh

# Add:

export JAVA_HOME=/usr/lib/jvm/java-6-sun/

Change this to the installation location of your JDK.

Test the Hadoop installation:

$ bin/hadoop jar hadoop-0.20.2-examples.jar wordcount conf/ /tmp/out

3. Cluster Configuration (identical on all nodes)

3.1 Configuration file: conf/core-site.xml

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<property>

<name>fs.default.name</name>

<value>hdfs://node1:49000</value>

</property>

<property>

<name>hadoop.tmp.dir</name>

<value>/home/hadoop/hadoop_home/var</value>

</property>

</configuration>

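1) fs.default.name is the URI of the NameNode, in the form hdfs://hostname:port/.

2) hadoop.tmp.dir: Hadoop's default temporary directory; it is best to set it explicitly. If a DataNode mysteriously fails to start after you add a node or in similar situations, deleting the tmp directory under this path is usually enough. However, if you delete this directory on the NameNode machine, you must re-run the NameNode format command.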
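Every *-site.xml file in this section has the same shape: a `<configuration>` element containing one `<property>` with a `<name>` and a `<value>` per setting. A minimal sketch that generates such a file from name=value pairs (the function `hadoop_site_xml` is hypothetical and does not escape XML special characters, which is fine for plain URIs and paths like these):

```shell
# hadoop_site_xml NAME=VALUE...: print a Hadoop *-site.xml document with
# one <property> per pair. Only the first "=" separates name from value.
# Hypothetical helper; values are inserted verbatim (no XML escaping).
hadoop_site_xml() {
  local pair
  echo '<?xml version="1.0"?>'
  echo '<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>'
  echo '<configuration>'
  for pair in "$@"; do
    printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' \
      "${pair%%=*}" "${pair#*=}"
  done
  echo '</configuration>'
}
```

For example, `hadoop_site_xml fs.default.name=hdfs://node1:49000 hadoop.tmp.dir=/home/hadoop/hadoop_home/var > conf/core-site.xml` reproduces the settings of 3.1.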

3.2 Configuration file: conf/mapred-site.xml

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<property>

<name>mapred.job.tracker</name>

<value>node1:49001</value>

</property>

<property>

<name>mapred.local.dir</name>

<value>/home/hadoop/hadoop_home/var</value>

</property>

</configuration>

1) mapred.job.tracker is the host (or IP address) and port of the JobTracker, in the form host:port.

3.3 Configuration file: conf/hdfs-site.xml

<?xml version="1.0"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<property>

<name>dfs.name.dir</name>

<value>/home/hadoop/name1,/home/hadoop/name2</value> <!-- paths of Hadoop's name directories -->

<description> </description>

</property>

<property>

<name>dfs.data.dir</name>

<value>/home/hadoop/data1,/home/hadoop/data2</value>

<description> </description>

</property>

<property>

<name>dfs.replication</name>

<!-- Our cluster has two DataNodes, so keep two replicas -->

<value>2</value>

</property>

</configuration>

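1) dfs.name.dir is the local filesystem path where the NameNode persistently stores the namespace and the transaction log.

When the value is a comma-separated list of directories, the name table is replicated to all of them for redundancy.

2) dfs.data.dir is the local filesystem path, given as a comma-separated list, where a DataNode stores its block data.

When the value is a comma-separated list of directories, data is stored in all of them, which are usually on different devices.

3) dfs.replication is the number of copies kept of each block; the default is 3. If it is larger than the number of DataNodes in the cluster, the extra replicas cannot be placed and blocks stay under-replicated.

Note: do not create the name1, name2, data1 and data2 directories in advance. Hadoop creates them automatically (the name directories when formatting, the data directories when the DataNodes start), and creating them beforehand can cause problems.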
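The replication rule above can be checked before starting the cluster by comparing dfs.replication with the number of hosts in conf/slaves. A sketch (hypothetical helper `check_replication`; it assumes every non-blank, non-comment line of the slaves file is one DataNode):

```shell
# check_replication REPLICATION SLAVES_FILE: fail with a message when
# dfs.replication exceeds the number of DataNodes listed in SLAVES_FILE.
# Hypothetical helper.
check_replication() {
  local repl=$1 slaves=$2 n
  # Count lines that are neither blank nor comments
  n=$(grep -cv -e '^[[:space:]]*$' -e '^[[:space:]]*#' "$slaves")
  if [ "$repl" -gt "$n" ]; then
    echo "dfs.replication=$repl exceeds the $n DataNode(s) in $slaves" >&2
    return 1
  fi
  echo "ok: dfs.replication=$repl, DataNodes=$n"
}
```

With the two slaves node2 and node3, `check_replication 2 conf/slaves` passes, while `check_replication 3 conf/slaves` would warn.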

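3.4 Configuring the master and slave nodes

Configure conf/masters and conf/slaves to define the master and slave nodes. It is best to use hostnames, make sure the machines can reach each other by hostname, and put one hostname per line. (In this Hadoop version, conf/masters actually lists the host that runs the SecondaryNameNode.)

$ vi masters

Enter:

node1

$ vi slaves

Enter:

node2

node3

The configuration is complete. Copy the configured hadoop directory to the other machines in the cluster, and make sure the configuration above is also correct for each of them; for example, if Java is installed in a different location on another machine, edit its conf/hadoop-env.sh.

$ scp -r /home/hadoop/hadoop-0.20.203 hadoop@node2:/home/hadoop/

$ scp -r /home/hadoop/hadoop-0.20.203 hadoop@node3:/home/hadoop/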
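With more slaves, the copy step is easier as a loop over conf/slaves. A sketch under these assumptions: `sync_to_slaves` is a hypothetical helper, the remote user is hadoop, and the `SCP` variable exists only so you can do a dry run with `SCP=echo`.

```shell
# sync_to_slaves SLAVES_FILE SRC_DIR [USER]: copy SRC_DIR to the same parent
# directory on every host listed in SLAVES_FILE (comments/blank lines skipped).
# Hypothetical helper; SCP may be overridden, e.g. SCP=echo for a dry run.
sync_to_slaves() {
  local slaves=$1 src=${2%/} user=${3:-hadoop}
  sed -e 's/#.*$//' -e '/^[[:space:]]*$/d' "$slaves" |
    while read -r host; do
      ${SCP:-scp} -r "$src" "$user@$host:$(dirname "$src")/" </dev/null
    done
}
```

Usage: `SCP=echo sync_to_slaves conf/slaves /home/hadoop/hadoop-0.20.203` prints the scp commands; drop `SCP=echo` to run them.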

4 Starting Hadoop

4.1 Formatting a new distributed filesystem

First, format a new distributed filesystem:

$ cd hadoop-0.20.203

$ bin/hadoop namenode -format

On success, the system prints:

12/02/06 00:46:50 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = ubuntu/127.0.1.1

STARTUP_MSG: args = [-format]

STARTUP_MSG: version = 0.20.203.0

STARTUP_MSG: build = http://svn.apache.org/repos/asf/hadoop/common/branches/branch-0.20-security-203 -r 1099333; compiled by 'oom' on Wed May 4 07:57:50 PDT 2011

************************************************************/

12/02/06 00:46:50 INFO namenode.FSNamesystem: fsOwner=root,root

12/02/06 00:46:50 INFO namenode.FSNamesystem: supergroup=supergroup

12/02/06 00:46:50 INFO namenode.FSNamesystem: isPermissionEnabled=true

12/02/06 00:46:50 INFO common.Storage: Image file of size 94 saved in 0 seconds.

12/02/06 00:46:50 INFO common.Storage: Storage directory /opt/hadoop/hadoopfs/name1 has been successfully formatted.

12/02/06 00:46:50 INFO common.Storage: Image file of size 94 saved in 0 seconds.

12/02/06 00:46:50 INFO common.Storage: Storage directory /opt/hadoop/hadoopfs/name2 has been successfully formatted.

12/02/06 00:46:50 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at v-jiwan-ubuntu-0/127.0.0.1

************************************************************/


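Check the output to make sure the distributed filesystem was formatted successfully.

Afterwards, the two directories /home/hadoop/name1 and /home/hadoop/name2 appear on the master machine. Start Hadoop on the master node; the master then starts Hadoop on all slave nodes.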
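To confirm the format from a script, you can check each dfs.name.dir entry for the current/VERSION file that formatting writes into a storage directory. A sketch (`check_name_dirs` is a hypothetical helper taking the same comma-separated list as dfs.name.dir):

```shell
# check_name_dirs DIRS: DIRS is a comma-separated list as in dfs.name.dir.
# A directory counts as formatted if it contains current/VERSION.
# Hypothetical helper; returns 1 if any directory is not formatted.
check_name_dirs() {
  local IFS=, dir rc=0
  for dir in $1; do
    if [ -f "$dir/current/VERSION" ]; then
      echo "formatted: $dir"
    else
      echo "NOT formatted: $dir"
      rc=1
    fi
  done
  return "$rc"
}
```

Usage on the master: `check_name_dirs /home/hadoop/name1,/home/hadoop/name2`.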

4.2 Starting all nodes

Method 1:

$ bin/start-all.sh (starts both HDFS and Map/Reduce)

Output:

starting namenode, logging to /usr/local/hadoop/logs/hadoop-hadoop-namenode-ubuntu.out

node2: starting datanode, logging to /usr/local/hadoop/logs/hadoop-hadoop-datanode-ubuntu.out

node3: starting datanode, logging to /usr/local/hadoop/logs/hadoop-hadoop-datanode-ubuntu.out

node1: starting secondarynamenode, logging to /usr/local/hadoop/logs/hadoop-hadoop-secondarynamenode-ubuntu.out

starting jobtracker, logging to /usr/local/hadoop/logs/hadoop-hadoop-jobtracker-ubuntu.out

node2: starting tasktracker, logging to /usr/local/hadoop/logs/hadoop-hadoop-tasktracker-ubuntu.out

node3: starting tasktracker, logging to /usr/local/hadoop/logs/hadoop-hadoop-tasktracker-ubuntu.out

A slave automatically formats its storage directory (specified by dfs.data.dir) if it is not formatted already, and it also creates the directory if it does not exist yet.

Afterwards, the two directories /home/hadoop/data1 and /home/hadoop/data2 appear on the slave machines (node2 and node3).

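Method 2:

Starting a Hadoop cluster means starting both the HDFS cluster and the Map/Reduce cluster.

On the designated NameNode, run the following command to start HDFS:

$ bin/start-dfs.sh (starts only the HDFS cluster)

The bin/start-dfs.sh script reads ${HADOOP_CONF_DIR}/slaves on the NameNode and starts a DataNode daemon on every slave listed there.

On the designated JobTracker, run the following command to start Map/Reduce:

$ bin/start-mapred.sh (starts only Map/Reduce)

The bin/start-mapred.sh script reads ${HADOOP_CONF_DIR}/slaves on the JobTracker and starts a TaskTracker daemon on every slave listed there.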

4.3 Stopping all nodes

Stop Hadoop from the master node; the master stops Hadoop on all slave nodes:

$ bin/stop-all.sh

The Hadoop daemons write their logs to ${HADOOP_LOG_DIR} (default: ${HADOOP_HOME}/logs).

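5. Testing

1) Browse the web interfaces of the NameNode and the JobTracker; by default they are at:

NameNode - http://node1:50070/

JobTracker - http://node1:50030/

2) Use netstat -nat to check whether ports 49000 and 49001 are in use.

3) Use jps to view the processes.

To check whether the daemons are running, use the jps command (a ps utility for JVM processes), which lists the Java processes on the local machine with their process IDs. Across this cluster you should see five daemons: NameNode, SecondaryNameNode and JobTracker on the master, and DataNode and TaskTracker on each slave.

4) Copy input files into the distributed filesystem:

$ bin/hadoop fs -mkdir input

$ bin/hadoop fs -put conf/core-site.xml input

Run the example program shipped with the distribution:

$ bin/hadoop jar hadoop-0.20.2-examples.jar grep input output 'dfs[a-z.]+'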
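The jps check can be scripted per machine: jps prints one `PID ClassName` line per JVM, so it is enough to look for the expected class names. A sketch (hypothetical helper `missing_daemons`) that prints each expected daemon that is not running:

```shell
# missing_daemons NAME...: read `jps` output ("PID ClassName" lines) on stdin
# and print every expected daemon name that does not appear.
# Hypothetical helper; returns 1 if anything is missing.
missing_daemons() {
  local running name rc=0
  # The second column of jps output is the main class name
  running=$(awk '{ print $2 }')
  for name in "$@"; do
    if ! printf '%s\n' "$running" | grep -qx "$name"; then
      echo "$name"
      rc=1
    fi
  done
  return "$rc"
}
```

On the master: `jps | missing_daemons NameNode SecondaryNameNode JobTracker`; on a slave: `jps | missing_daemons DataNode TaskTracker`. Empty output and a zero exit status mean every expected daemon is up.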

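6. Supplementary notes

Q: What does bin/hadoop jar hadoop-0.20.2-examples.jar grep input output 'dfs[a-z.]+' mean?

A: bin/hadoop jar (run a jar with Hadoop) hadoop-0.20.2-examples.jar (the name of the jar) grep (the example program to run; what follows are its arguments) input output 'dfs[a-z.]+'

Altogether, this runs the grep program from the Hadoop examples, with input as the input directory and output as the output directory on HDFS.

Q: What is grep?

A: A map/reduce program that counts the matches of a regex in the input.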
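To make clear what the grep example computes, here is a single-machine analogue using standard Unix tools: extract every match of the regular expression, count identical matches, and sort by count in descending order. It is an illustration only (hypothetical helper `local_grep_count`), not how Hadoop runs the job; ties are broken alphabetically here, which the Hadoop example does not necessarily do.

```shell
# local_grep_count REGEX FILE...: count regex matches across the files and
# print "count<TAB>match", most frequent first (ties sorted by match).
# Hypothetical helper; a local analogue of the grep example's result.
local_grep_count() {
  local regex=$1
  shift
  grep -ohE -- "$regex" "$@" | LC_ALL=C sort | uniq -c |
    LC_ALL=C sort -k1,1nr -k2 | awk '{ print $1 "\t" $2 }'
}
```

Usage: `local_grep_count 'dfs[a-z.]+' conf/*.xml` prints lines in the same count-then-match form as the results shown below.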

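Viewing the output files:

Copy the output files from the distributed filesystem to the local filesystem and view them:

$ bin/hadoop fs -get output output

$ cat output/*

or

view the output files directly on the distributed filesystem:

$ bin/hadoop fs -cat output/*

Results:

root@v-jiwan-ubuntu-0:~/hadoop/hadoop-0.20.2-bak/hadoop-0.20.2# bin/hadoop fs -cat output/part-00000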

3 dfs.class

2 dfs.period

1 dfs.file

1 dfs.replication

1 dfs.servers

1 dfsadmin

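7. Common HDFS operations

hadoop dfs -ls (list the files in HDFS)

hadoop dfs -ls in (list the files in the directory in on HDFS)

hadoop dfs -put test1.txt test (upload a file to the given path under a new name; the upload counts as successful only after all DataNodes holding replicas have received the data)

hadoop dfs -get in getin (fetch from HDFS and rename to getin; like put, it works on both files and directories)

hadoop dfs -rmr out (delete the given file or directory from HDFS, recursively)

hadoop dfs -cat in/* (show the contents of the in directory on HDFS)

hadoop dfsadmin -report (show basic HDFS statistics)

hadoop dfsadmin -safemode leave (leave safe mode)

hadoop dfsadmin -safemode enter (enter safe mode)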

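8. Adding nodes

Scalability is an important feature of HDFS. First install Hadoop on the new node and edit $HADOOP_HOME/conf/masters on it to add the NameNode's hostname; then, on the NameNode, edit $HADOOP_HOME/conf/slaves to add the new node's hostname, and set up a passwordless SSH connection to the new node.

Then run the start command.

You can then see the newly added DataNode at http://(hostname of the master node):50070.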
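The number of live DataNodes can also be read from the command line instead of the web page. A sketch, assuming (not verified against every release) that `hadoop dfsadmin -report` prints a line of the form `Datanodes available: N (M total, K dead)`, as 0.20/1.x releases typically do; `live_datanodes` is a hypothetical helper.

```shell
# live_datanodes: read `hadoop dfsadmin -report` output on stdin and print N
# from the first "Datanodes available: N (...)" line.
# Hypothetical helper; the report line format is an assumption.
live_datanodes() {
  sed -n 's/^Datanodes available: \([0-9][0-9]*\).*/\1/p' | head -n 1
}
```

Usage: the value of `hadoop dfsadmin -report | live_datanodes` should go up by one after the new node joins.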

9 Load balancing

Running start-balancer.sh rebalances the distribution of data blocks across the DataNodes according to the balancing policy.
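The balancer's notion of "balanced" can be illustrated with a little arithmetic: a DataNode counts as balanced when its disk utilization (used / capacity) is within a threshold, in percentage points, of the cluster-wide utilization; the threshold defaults to 10 and can be set with `start-balancer.sh -threshold N`. The sketch below only computes which nodes would be outside the threshold and does not move any blocks; `over_threshold` is a hypothetical helper and its input format (`host used_bytes capacity_bytes` per line) is an assumption you would fill in from `dfsadmin -report`.

```shell
# over_threshold THRESHOLD: read "host used_bytes capacity_bytes" lines on
# stdin and print hosts whose utilization differs from the cluster average
# (total used / total capacity) by more than THRESHOLD percentage points.
# Hypothetical helper and input format.
over_threshold() {
  awk -v t="$1" '
    { host[NR] = $1; used[NR] = $2; cap[NR] = $3; u += $2; c += $3 }
    END {
      if (c == 0) exit
      avg = 100 * u / c
      for (i = 1; i <= NR; i++) {
        pct = 100 * used[i] / cap[i]
        d = pct - avg
        if (d < 0) d = -d
        if (d > t + 0) printf "%s %.1f%% (cluster avg %.1f%%)\n", host[i], pct, avg
      }
    }'
}
```

For instance, with nodes at 90%, 10% and 50% utilization the cluster average is 50%, so with a threshold of 10 the first two would be reported.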

Final remark: when you run into a problem, check the logs first; they are very helpful.

10 Shell script for automatic installation

#!/bin/bash

# Validate the user: refuse to run as root
validate() {
  if [ "$(id -u)" -eq 0 ]; then
    echo "must not be root!"
    exit 1
  else
    echo "---------welcome to hadoop---------"
  fi
}

# hadoop install: make sure the install directory exists
hd_dir() {
  if [ ! -d /home/hadoop/ ]; then
    mkdir /home/hadoop/
  else
    echo "download hadoop will begin"
  fi
}

# Download and unpack Hadoop 1.0.4, then create the hadoop1.0.4 symlink used below
download_hd() {
  wget -c http://archive.apache.org/dist/hadoop/core/stable/hadoop-1.0.4.tar.gz -O /home/hadoop/hadoop-1.0.4.tar.gz
  tar -xzvf /home/hadoop/hadoop-1.0.4.tar.gz -C /home/hadoop
  rm /home/hadoop/hadoop-1.0.4.tar.gz
  ln -s /home/hadoop/hadoop-1.0.4 /home/hadoop/hadoop1.0.4
}

# hadoop conf
hd_conf() {
  local conf=/home/hadoop/hadoop1.0.4/conf
  echo "export JAVA_HOME=/usr/lib/jvm/java-6-openjdk-i386" >> "$conf/hadoop-env.sh"
  # Single quotes keep $PATH, $HADOOP_HOME and $JAVA_HOME unexpanded here,
  # so they are resolved when .profile is read
  {
    echo "#set path jdk"
    echo "export JAVA_HOME=/usr/lib/jvm/java-6-openjdk-i386"
    echo "#hadoop path"
    echo "export HADOOP_HOME=/home/hadoop/hadoop1.0.4"
    echo 'export PATH=$PATH:$HADOOP_HOME/bin:$JAVA_HOME/bin'
    echo "export HADOOP_HOME_WARN_SUPPRESS=1"
  } >> /home/hadoop/.profile

  # The *-site.xml files are overwritten (>) instead of appended to, because
  # appending after the existing </configuration> would produce invalid XML
  # hadoop core-site.xml
  cat > "$conf/core-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <property>
    <name>fs.default.name</name>
    <value>hdfs://hadoop-master:9000</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/home/hadoop/tmp</value>
  </property>
</configuration>
EOF
  # hadoop hdfs-site.xml
  cat > "$conf/hdfs-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <property>
    <name>dfs.name.dir</name>
    <value>/home/hadoop/name</value>
  </property>
  <property>
    <name>dfs.data.dir</name>
    <value>/home/hadoop/data</value>
  </property>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
</configuration>
EOF
  # hadoop mapred-site.xml
  cat > "$conf/mapred-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <property>
    <name>mapred.job.tracker</name>
    <value>hadoop-master:9001</value>
  </property>
</configuration>
EOF
  # hadoop masters and slaves (single-node setup; overwrite the default localhost)
  echo "hadoop-master" > "$conf/masters"
  echo "hadoop-master" > "$conf/slaves"
  source /home/hadoop/.profile
}

hd_start() {
  hadoop namenode -format
}

yes_or_no() {
  echo "Is your name $* ?"
  while true; do
    echo -n "Enter yes or no: "
    read -r x
    case "$x" in
      y | yes ) return 0;;
      n | no ) return 1;;
      * ) echo "Answer yes or no";;
    esac
  done
}

echo "Original params are $*"
if yes_or_no "$1"; then
  echo "HI $1,nice name!"
  validate
  hd_dir
  download_hd
  hd_conf
else
  echo "Never mind!"
fi
