1. Hadoop cluster:

1.1 System and hardware configuration:

Hadoop version: 2.6; three virtual machines: node101 (192.168.0.101), node102 (192.168.0.102), node103 (192.168.0.103);

Each machine has 2 GB of memory and 1 CPU core;

node101: NodeManager, NameNode, ResourceManager, DataNode;

node102: NodeManager, DataNode, SecondaryNameNode, JobHistoryServer;

node103: NodeManager, DataNode;

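1.2 Problems encountered during configuration:

1) NodeManager fails to start;

The virtual machines were initially given only 512 MB of memory, so I set “yarn.nodemanager.resource.memory-mb” in yarn-site.xml to 512. That is below “yarn.scheduler.minimum-allocation-mb”, which defaults to 1024 (the default of “yarn.nodemanager.resource.memory-mb” itself is 8192). The log showed this error: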

org.apache.hadoop.yarn.exceptions.YarnRuntimeException: Recieved SHUTDOWN signal from Resourcemanager ,

Registration of NodeManager failed, Message from ResourceManager:

NodeManager from node101 doesn't satisfy minimum allocations, Sending SHUTDOWN signal to the NodeManager.

Setting it to 1024 or higher lets the NodeManager start normally; I set it to 2048;
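
The rejection above boils down to a simple comparison. The sketch below paraphrases the idea of the registration check and is not the actual ResourceManager source. The class and method names are hypothetical, and the default values (yarn.scheduler.minimum-allocation-mb = 1024, yarn.scheduler.minimum-allocation-vcores = 1) are assumed from the stock yarn-default.xml of Hadoop 2.6.

```java
/** Hypothetical helper that mirrors the idea of the ResourceManager's registration check. */
final class NodeRegistrationCheck {

    // A NodeManager is accepted only if it can host at least one minimum-sized container.
    static boolean satisfiesMinimum(int nodeMemoryMb, int nodeVcores,
                                    int minAllocationMb, int minAllocationVcores) {
        return nodeMemoryMb >= minAllocationMb && nodeVcores >= minAllocationVcores;
    }

    public static void main(String[] args) {
        // Original setup: 512 MB NodeManager against the assumed default minimum of 1024 MB.
        System.out.println(satisfiesMinimum(512, 8, 1024, 1));   // rejected
        // Final setup from section 1.3: 2048 MB NodeManager, minimum allocation 512 MB.
        System.out.println(satisfiesMinimum(2048, 1, 512, 1));   // accepted
    }
}
```

Under these assumptions, either raising the NodeManager memory or lowering the minimum allocation makes the registration succeed.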

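2) Jobs can be submitted but do not make progress

a. Each virtual machine has only one core, but “yarn.nodemanager.resource.cpu-vcores” in yarn-site.xml defaults to 8, which causes problems during resource allocation, so I set this parameter to 1;

b. The following error appeared: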

is running beyond virtual memory limits. Current usage: 96.6 MB of 1.5 GB physical memory used;

1.6 GB of 1.5 GB virtual memory used. Killing container.

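This is probably caused by incorrectly sized resource settings for map, reduce, and the NodeManager. I adjusted them for a long time and believed they were correct, but the error kept appearing. In the end I disabled the check by setting “yarn.nodemanager.vmem-check-enabled” to false in yarn-site.xml; after that the job could be submitted.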
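
One possible reading of this error, offered as a hypothesis rather than a confirmed diagnosis: the NodeManager kills a container once its virtual memory exceeds the container's physical memory multiplied by yarn.nodemanager.vmem-pmem-ratio. The yarn-site.xml in section 1.3 sets that ratio to 1.0, whereas the stock default is 2.1 (an assumption taken from yarn-default.xml). The sketch below redoes the arithmetic for the 1.5 GB container reported in the log; the class and method names are hypothetical.

```java
/** Hypothetical helper that reproduces the virtual-memory limit arithmetic. */
final class VmemLimit {

    // Virtual memory limit = container physical memory * vmem-pmem ratio.
    static double vmemLimitMb(int containerMb, double vmemPmemRatio) {
        return containerMb * vmemPmemRatio;
    }

    static boolean exceedsLimit(double usedVmemMb, int containerMb, double vmemPmemRatio) {
        return usedVmemMb > vmemLimitMb(containerMb, vmemPmemRatio);
    }

    public static void main(String[] args) {
        int containerMb = 1536;            // "1.5 GB physical memory" from the log
        double usedVmemMb = 1.6 * 1024;    // "1.6 GB ... virtual memory used" from the log

        // Ratio 1.0 as configured in section 1.3: the limit equals the physical size.
        System.out.println(exceedsLimit(usedVmemMb, containerMb, 1.0));  // over the limit
        // Assumed stock default ratio of 2.1: the same usage stays under the limit.
        System.out.println(exceedsLimit(usedVmemMb, containerMb, 2.1));  // within the limit
    }
}
```

If this reading is right, restoring the ratio to its default or raising it would be an alternative to disabling the check altogether.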

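1.3 Configuration files (I hope an expert can advise on the resource settings so that error b above does not occur, instead of disabling the check):

1) In hadoop-env.sh and yarn-env.sh, configure the JDK and set both HADOOP_HEAPSIZE and YARN_HEAPSIZE to 512;

2) hdfs-site.xml configures the data storage paths and the node that runs the SecondaryNameNode: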

<configuration>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:///data/hadoop/hdfs/name</value>

<description>Determines where on the local filesystem the DFS name node

should store the name table(fsimage). If this is a comma-delimited list

of directories then the name table is replicated in all of the

directories, for redundancy. </description>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:///data/hadoop/hdfs/data</value>

<description>Determines where on the local filesystem an DFS data node

should store its blocks. If this is a comma-delimited

list of directories, then data will be stored in all named

directories, typically on different devices.

Directories that do not exist are ignored.

</description>

</property>

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>node102:50090</value>

</property>

</configuration>

3) core-site.xml configures the NameNode:

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://node101:8020</value>

</property>

</configuration>

4) mapred-site.xml configures the resources for map and reduce:

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

<description>The runtime framework for executing MapReduce jobs.

Can be one of local, classic or yarn.

</description>

</property>

<!-- jobhistory properties -->

<property>

<name>mapreduce.jobhistory.address</name>

<value>node102:10020</value>

<description>MapReduce JobHistory Server IPC host:port</description>

</property>

<property>

<name>mapreduce.map.memory.mb</name>

<value>1024</value>

</property>

<property>

<name>mapreduce.reduce.memory.mb</name>

<value>1024</value>

</property>

<property>

<name>mapreduce.map.java.opts</name>

<value>-Xmx512m</value>

</property>

<property>

<name>mapreduce.reduce.java.opts</name>

<value>-Xmx512m</value>

</property>

</configuration>
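
The container sizes and JVM heap sizes above are related: the heap set by -Xmx has to fit inside the container requested through mapreduce.map.memory.mb or mapreduce.reduce.memory.mb, with room left for non-heap memory. A minimal sketch of that check follows; the helper is hypothetical and only handles the m and g suffixes.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Hypothetical helper that compares a task's JVM heap with its container size. */
final class HeapHeadroom {

    private static final Pattern XMX = Pattern.compile("-Xmx(\\d+)([mMgG])");

    // Extracts the -Xmx value from a java.opts string and returns it in MB.
    static int parseXmxMb(String javaOpts) {
        Matcher m = XMX.matcher(javaOpts);
        if (!m.find()) {
            throw new IllegalArgumentException("no -Xmx option in: " + javaOpts);
        }
        int value = Integer.parseInt(m.group(1));
        return m.group(2).equalsIgnoreCase("g") ? value * 1024 : value;
    }

    // Memory left in the container for everything that is not Java heap.
    static int headroomMb(int containerMb, String javaOpts) {
        return containerMb - parseXmxMb(javaOpts);
    }

    public static void main(String[] args) {
        // Values from mapred-site.xml above: 1024 MB container, -Xmx512m heap.
        System.out.println(headroomMb(1024, "-Xmx512m") + " MB left for non-heap memory");
    }
}
```

With the values above the heap takes half of the container, so the physical memory limit has ample headroom.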

5) yarn-site.xml configures the ResourceManager and related resources:

<configuration>

<property>

<description>The hostname of the RM.</description>

<name>yarn.resourcemanager.hostname</name>

<value>node101</value>

</property>

<property>

<description>The address of the applications manager interface in the RM.</description>

<name>yarn.resourcemanager.address</name>

<value>${yarn.resourcemanager.hostname}:8032</value>

</property>

<property>

<description>The address of the scheduler interface.</description>

<name>yarn.resourcemanager.scheduler.address</name>

<value>${yarn.resourcemanager.hostname}:8030</value>

</property>

<property>

<description>The http address of the RM web application.</description>

<name>yarn.resourcemanager.webapp.address</name>

<value>${yarn.resourcemanager.hostname}:8088</value>

</property>

<property>

<description>The https address of the RM web application.</description>

<name>yarn.resourcemanager.webapp.https.address</name>

<value>${yarn.resourcemanager.hostname}:8090</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address</name>

<value>${yarn.resourcemanager.hostname}:8031</value>

</property>

<property>

<description>The address of the RM admin interface.</description>

<name>yarn.resourcemanager.admin.address</name>

<value>${yarn.resourcemanager.hostname}:8033</value>

</property>

<property>

<description>List of directories to store localized files in. An

application's localized file directory will be found in:

${yarn.nodemanager.local-dirs}/usercache/${user}/appcache/application_${appid}.

Individual containers' work directories, called container_${contid}, will

be subdirectories of this.

</description>

<name>yarn.nodemanager.local-dirs</name>

<value>/data/hadoop/yarn/local</value>

</property>

<property>

<description>Whether to enable log aggregation</description>

<name>yarn.log-aggregation-enable</name>

<value>true</value>

</property>

<property>

<description>Where to aggregate logs to.</description>

<name>yarn.nodemanager.remote-app-log-dir</name>

<value>/data/tmp/logs</value>

</property>

<property>

<description>Amount of physical memory, in MB, that can be allocated

for containers.</description>

<name>yarn.nodemanager.resource.memory-mb</name>

<value>2048</value>

</property>

<property>

<name>yarn.scheduler.minimum-allocation-mb</name>

<value>512</value>

</property>

<property>

<name>yarn.nodemanager.vmem-pmem-ratio</name>

<value>1.0</value>

</property>

<property>

<name>yarn.nodemanager.vmem-check-enabled</name>

<value>false</value>

</property>

<!--

<property>

<description>The class to use as the resource scheduler.</description>

<name>yarn.resourcemanager.scheduler.class</name>

<value>org.apache.hadoop.yarn.server.resourcemanager.scheduler.fair.FairScheduler</value>

</property>

<property>

<description>fair-scheduler conf location</description>

<name>yarn.scheduler.fair.allocation.file</name>

<value>${yarn.home.dir}/etc/hadoop/fairscheduler.xml</value>

</property>

-->

<property>

<name>yarn.nodemanager.resource.cpu-vcores</name>

<value>1</value>

</property>

<property>

<description>the valid service name should only contain a-zA-Z0-9_ and can not start with numbers</description>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

</configuration>

2. Calling Hadoop 2.6 from Java to run an MR program:

Two things need to be changed:

1) The Configuration in the main calling program needs these settings:

Configuration conf = new Configuration();

conf.setBoolean("mapreduce.app-submission.cross-platform", true); // enable cross-platform job submission

conf.set("fs.defaultFS", "hdfs://node101:8020"); // NameNode address

conf.set("mapreduce.framework.name", "yarn"); // use the YARN framework

conf.set("yarn.resourcemanager.address", "node101:8032"); // ResourceManager address

conf.set("yarn.resourcemanager.scheduler.address", "node101:8030"); // scheduler address

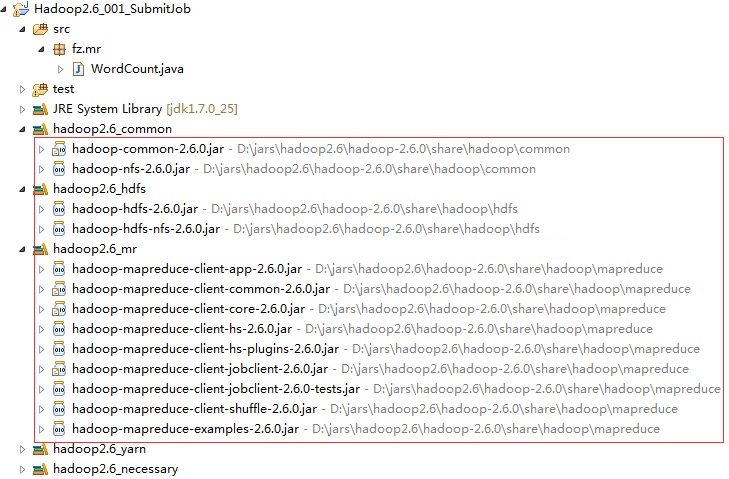

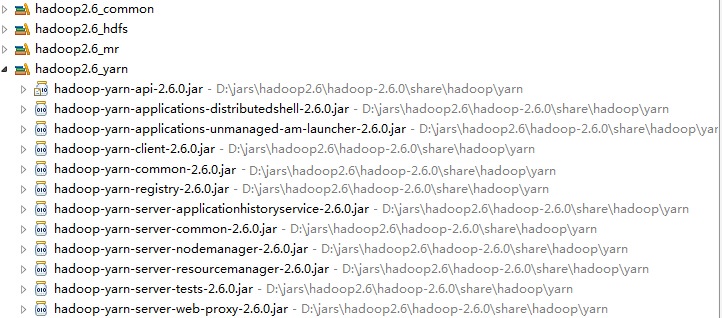

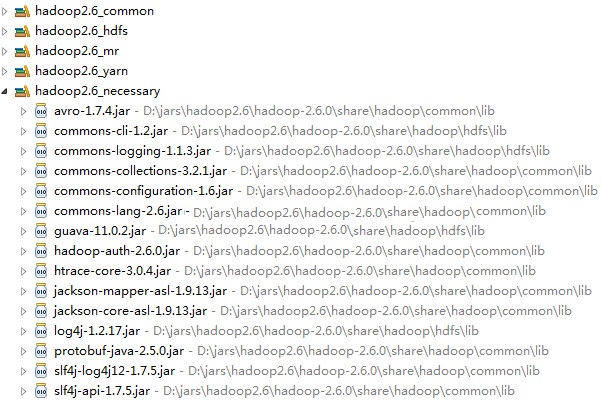

2) Add the following classes to the classpath:

Nothing else needs to be changed; with this the program runs;

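3. Calling Hadoop 2.6 from a web application to run an MR program;

The program can be downloaded from “java web程序调用hadoop2.6”;

The calling code in this web application is the same as in the Java program above, essentially unchanged, and all the required jar files are placed under lib.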

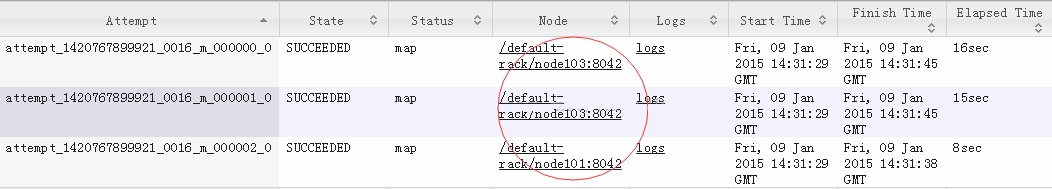

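One last point: I ran three maps, but they were not evenly distributed:

As shown, node103 was assigned two maps and node101 one map; in another run node101 was assigned two maps and node103 one. In both runs node102 received no map task, which suggests that something in the resource management and task allocation setup is still not quite right.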
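
One possible explanation, stated as a hypothesis: the MapReduce ApplicationMaster also occupies a container. If it uses 1536 MB (the stock default of yarn.app.mapreduce.am.resource.mb, which is not set in the configuration above and would be consistent with the 1.5 GB container in the error log of section 1.2), the node hosting it has only 512 MB left out of 2048 MB, which is not enough for a 1024 MB map container. The sketch below does this arithmetic; the class and method names are hypothetical, and placing the ApplicationMaster on node102 is an assumption.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical estimate of how many map containers fit on each node. */
final class MapSlotEstimate {

    // For each node, subtract the ApplicationMaster's memory if it runs there,
    // then count how many map containers fit into what is left.
    static Map<String, Integer> mapSlots(String[] nodes, int nodeMb,
                                         String amNode, int amMb, int mapMb) {
        Map<String, Integer> slots = new LinkedHashMap<>();
        for (String node : nodes) {
            int freeMb = node.equals(amNode) ? nodeMb - amMb : nodeMb;
            slots.put(node, freeMb / mapMb);
        }
        return slots;
    }

    public static void main(String[] args) {
        String[] nodes = {"node101", "node102", "node103"};
        // 2048 MB per NodeManager, assumed 1536 MB ApplicationMaster on node102, 1024 MB maps.
        System.out.println(mapSlots(nodes, 2048, "node102", 1536, 1024));
        // prints {node101=2, node102=0, node103=2}
    }
}
```

With two slots on each of the other nodes, three maps would land as 2 + 1, which would match both runs if the ApplicationMaster happened to sit on node102 each time.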
