| 编辑推荐: |

本文主要对Spark

SQL进行概述,并详细介绍如何编写Spark SQL程序的操作,希望对您的学习有所帮助。

本文来自csdn,由火龙果软件Alice编辑、推荐。 |

|

一、Spark SQL

1.Spark SQL概述

1.1.Spark SQL的前世今生

Shark是一个为Spark设计的大规模数据仓库系统,它与Hive兼容。Shark建立在Hive的代码基础上,并通过将Hive的部分物理执行计划交换出来。这个方法使得Shark的用户可以加速Hive的查询,但是Shark继承了Hive的大且复杂的代码使得Shark很难优化和维护,同时Shark依赖于Spark的版本。随着我们遇到了性能优化的上限,以及集成SQL的一些复杂的分析功能,我们发现Hive的MapReduce设计的框架限制了Shark的发展。在2014年7月1日的SparkSummit上,Databricks宣布终止对Shark的开发,将重点放到SparkSQL上。

1.2.什么是Spark SQL

Spark SQL是Spark用来处理结构化数据的一个模块,它提供了一个编程抽象叫做DataFrame并且作为分布式SQL查询引擎的作用。

相比于Spark RDD API,Spark SQL包含了对结构化数据和在其上运算的更多信息,Spark

SQL使用这些信息进行了额外的优化,使对结构化数据的操作更加高效和方便。

有多种方式去使用Spark SQL,包括SQL、DataFrames API和Datasets API。但无论是哪种API或者是编程语言,它们都是基于同样的执行引擎,因此你可以在不同的API之间随意切换,它们各有各的特点,看你喜欢那种风格。

1.3.为什么要学习Spark SQL

我们已经学习了Hive,它是将Hive SQL转换成MapReduce然后提交到集群中去执行,大大简化了编写MapReduce程序的复杂性,由于MapReduce这种计算模型执行效率比较慢,所以Spark

SQL应运而生,它是将Spark SQL转换成RDD,然后提交到集群中去运行,执行效率非常快!

1.易整合

将sql查询与spark程序无缝混合,可以使用java、scala、python、R等语言的API操作。

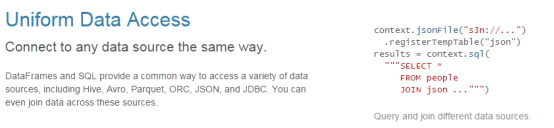

2.统一的数据访问

以相同的方式连接到任何数据源。

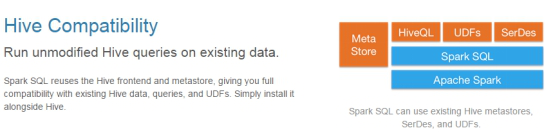

3.兼容Hive

支持hiveSQL的语法。

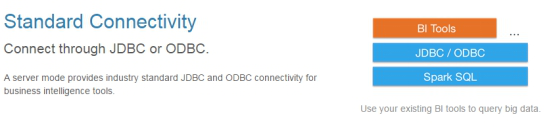

4.标准的数据连接

可以使用行业标准的JDBC或ODBC连接。

2.DataFrame

2.1.什么是DataFrame

DataFrame的前身是SchemaRDD,从Spark 1.3.0开始SchemaRDD更名为DataFrame。与SchemaRDD的主要区别是:DataFrame不再直接继承自RDD,而是自己实现了RDD的绝大多数功能。你仍旧可以在DataFrame上调用rdd方法将其转换为一个RDD。

在Spark中,DataFrame是一种以RDD为基础的分布式数据集,类似于传统数据库的二维表格,DataFrame带有Schema元信息,即DataFrame所表示的二维表数据集的每一列都带有名称和类型,但底层做了更多的优化。DataFrame可以从很多数据源构建,比如:已经存在的RDD、结构化文件、外部数据库、Hive表。

2.2.DataFrame与RDD的区别

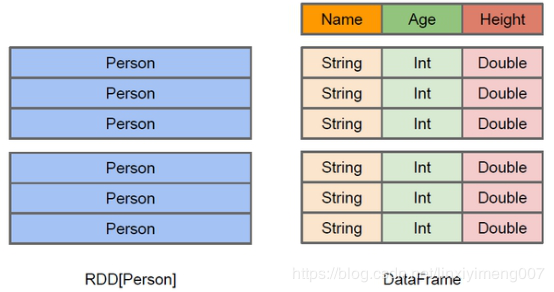

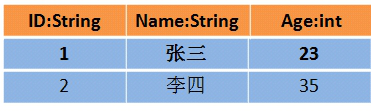

RDD可看作是分布式的对象的集合,Spark并不知道对象的详细模式信息,DataFrame可看作是分布式的Row对象的集合,其提供了由列组成的详细模式信息,使得Spark

SQL可以进行某些形式的执行优化。DataFrame和普通的RDD的逻辑框架区别如下所示:

上图直观地体现了DataFrame和RDD的区别。

左侧的RDD[Person]虽然以Person为类型参数,但Spark框架本身不了解 Person类的内部结构。

而右侧的DataFrame却提供了详细的结构信息,使得Spark SQL可以清楚地知道该数据集中包含哪些列,每列的名称和类型各是什么。DataFrame多了数据的结构信息,即schema。这样看起来就像一张表了,DataFrame还配套了新的操作数据的方法,DataFrame

API(如df.select())和SQL(select id, name from xx_table

where ...)。

此外DataFrame还引入了off-heap,意味着JVM堆以外的内存, 这些内存直接受操作系统管理(而不是JVM)。Spark能够以二进制的形式序列化数据(不包括结构)到off-heap中,

当要操作数据时, 就直接操作off-heap内存. 由于Spark理解schema, 所以知道该如何操作。

RDD是分布式的Java对象的集合。DataFrame是分布式的Row对象的集合。DataFrame除了提供了比RDD更丰富的算子以外,更重要的特点是提升执行效率、减少数据读取以及执行计划的优化。

有了DataFrame这个高一层的抽象后,我们处理数据更加简单了,甚至可以用SQL来处理数据了,对开发者来说,易用性有了很大的提升。

不仅如此,通过DataFrame API或SQL处理数据,会自动经过Spark

优化器(Catalyst)的优化,即使你写的程序或SQL不高效,也可以运行的很快。

2.3.DataFrame与RDD的优缺点

RDD的优缺点:

优点:

(1)编译时类型安全

编译时就能检查出类型错误

(2)面向对象的编程风格

直接通过对象调用方法的形式来操作数据

缺点:

(1)序列化和反序列化的性能开销

无论是集群间的通信, 还是IO操作都需要对对象的结构和数据进行序列化和反序列化。

(2)GC的性能开销

频繁的创建和销毁对象, 势必会增加GC

DataFrame通过引入schema和off-heap(不在堆里面的内存,指的是除了不在堆的内存,使用操作系统上的内存),解决了RDD的缺点,

Spark通过schame就能够读懂数据, 因此在通信和IO时就只需要序列化和反序列化数据, 而结构的部分就可以省略了;通过off-heap引入,可以快速的操作数据,避免大量的GC。但是却丢了RDD的优点,DataFrame不是类型安全的,

API也不是面向对象风格的。

2.4.读取数据源创建DataFrame

2.4.1 读取文本文件创建DataFrame

在spark2.0版本之前,Spark SQL中SQLContext是创建DataFrame和执行SQL的入口,利用hiveContext通过hive

sql语句操作hive表数据,兼容hive操作,并且hiveContext继承自SQLContext。在spark2.0之后,这些都统一于SparkSession,SparkSession

封装了 SparkContext,SqlContext,通过SparkSession可以获取到 SparkConetxt,SqlContext

对象。

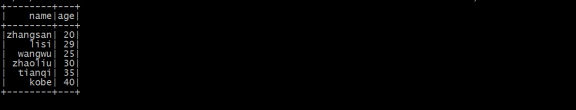

(1)在本地创建一个文件,有三列,分别是id、name、age,用空格分隔,然后上传到hdfs上。person.txt内容为:

1 zhangsan

20

2 lisi 29

3 wangwu 25

4 zhaoliu 30

5 tianqi 35

6 kobe 40 |

上传数据文件到HDFS上:

hdfs dfs -put person.txt /

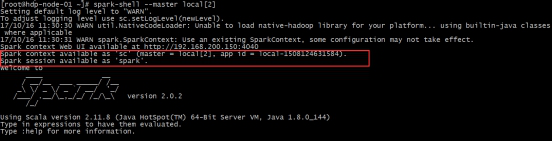

(2)在spark shell执行下面命令,读取数据,将每一行的数据使用列分隔符分割

先执行 spark-shell --master local[2]

val lineRDD= sc.textFile("/person.txt").map(_.split("

"))

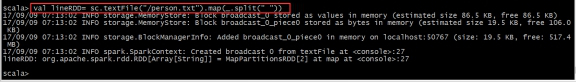

(3)定义case class(相当于表的schema)

case class Person(id:Int, name:String, age:Int)

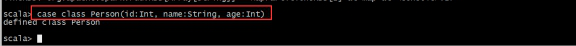

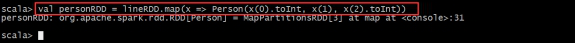

(4)将RDD和case class关联

val personRDD = lineRDD.map(x =>

Person( x(0).toInt, x(1) , x(2).toInt ))

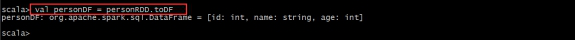

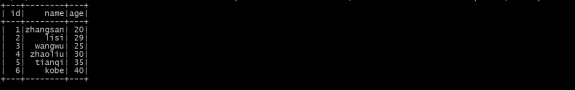

(5)将RDD转换成DataFrame

val personDF = personRDD.toDF

(6)对DataFrame进行处理

personDF.show

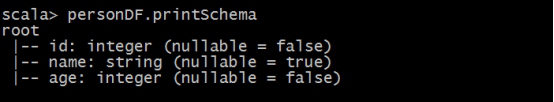

personDF.printSchema

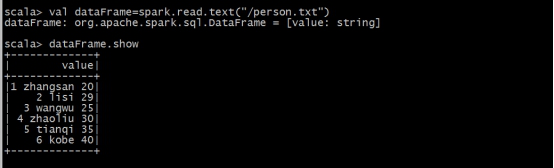

(7)、通过SparkSession构建DataFrame

使用spark-shell中已经初始化好的SparkSession对象spark生成DataFrame

val dataFrame=spark.read.text("/person.txt")

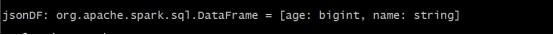

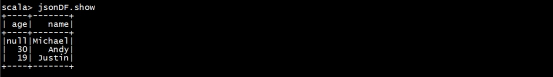

2.4.2 读取json文件创建DataFrame

(1)数据文件

使用spark安装包下的

/opt/bigdata/spark/examples/src/main/resources

/ people.json 文件

(2)在spark shell执行下面命令,读取数据

(3)接下来就可以使用DataFrame的函数操作

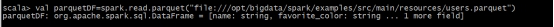

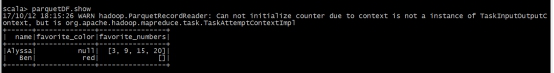

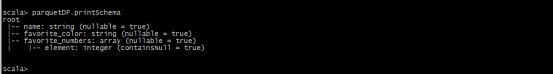

2.4.3 读取parquet列式存储格式文件创建DataFrame

(3)数据文件

使用spark安装包下的

/opt/bigdata/spark/examples/src/main/resources

/ users.parquet 文件

(2)在spark shell执行下面命令,读取数据

(3)接下来就可以使用DataFrame的函数操作

3.DataFrame常用操作

3.1. DSL风格语法

DataFrame提供了一个领域特定语言(DSL)以方便操作结构化数据。下面是一些使用示例

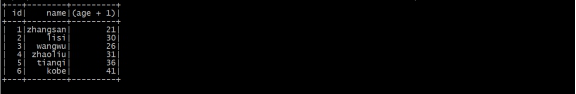

(1)查看DataFrame中的内容,通过调用show方法

personDF.show

(2)查看DataFrame部分列中的内容

查看name字段的数据

personDF.select(personDF.col("name")).show

查看name字段的另一种写法

查看 name 和age字段数据personDF.select(col("name")

, col("age")) .show

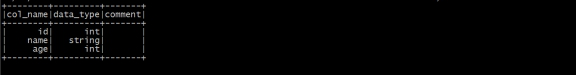

(3)打印DataFrame的Schema信息

personDF.printSchema

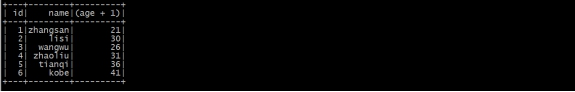

(4)查询所有的name和age,并将age+1

personDF.select(col("id"),

col("name"), col ("age") + 1)

. show

也可以这样:

personDF.select(personDF("id"),

personDF("name") , personDF("age")

+ 1) . show

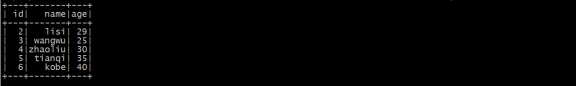

(5)过滤age大于等于25的,使用filter方法过滤

personDF.filter(col("age")

>= 25).show

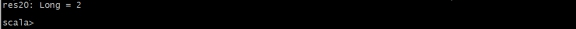

(6)统计年龄大于30的人数

personDF.filter(col("age")>30).count()

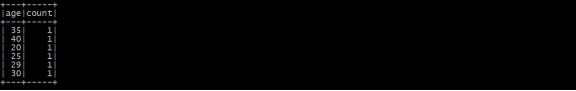

(7)按年龄进行分组并统计相同年龄的人数

personDF.groupBy("age").count().show

3.2. SQL风格语法

DataFrame的一个强大之处就是我们可以将它看作是一个关系型数据表,然后可以通过在程序中使用spark.sql()来执行SQL查询,结果将作为一个DataFrame返回。

如果想使用SQL风格的语法,需要将DataFrame注册成表,采用如下的方式:

personDF.registerTempTable("t_person")

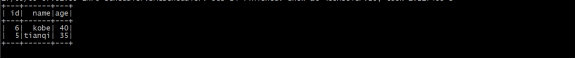

(1)查询年龄最大的前两名

spark.sql("select * from t_person

order by age desc limit 2" ). show

(2)显示表的Schema信息

spark.sql("desc t_person").show

(3)查询年龄大于30的人的信息

spark.sql("select * from t_personwhere

age > 30 ") .show

4.DataSet

4.1. 什么是DataSet

DataSet是分布式的数据集合。DataSet是在Spark1.6中添加的新的接口。它集中了RDD的优点(强类型和可以用强大lambda函数)以及Spark

SQL优化的执行引擎。DataSet可以通过JVM的对象进行构建,可以用函数式的转换( map/flatmap/filter

)进行多种操作。

4.2. DataFrame、DataSet、RDD的区别

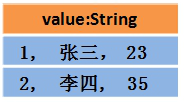

假设RDD中的两行数据长这样:

那么DataFrame中的数据长这样:

那么Dataset中的数据长这样:

或者长这样(每行数据是个Object):

DataSet包含了DataFrame的功能,Spark2.0中两者统一,DataFrame表示为DataSet[Row],即DataSet的子集。

(1)DataSet可以在编译时检查类型

(2)并且是面向对象的编程接口

相比DataFrame,Dataset提供了编译时类型检查,对于分布式程序来讲,提交一次作业太费劲了(要编译、打包、上传、运行),到提交到集群运行时才发现错误,这会浪费大量的时间,这也是引入Dataset的一个重要原因。

4.3. DataFrame与DataSet的互转

DataFrame和DataSet可以相互转化。

(1)DataFrame转为 DataSet

df.as[ElementType]这样可以把DataFrame转化为DataSet。

(2)DataSet转为DataFrame

ds.toDF()这样可以把DataSet转化为DataFrame。

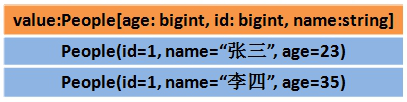

4.4. 创建DataSet

(1)通过spark.createDataset创建

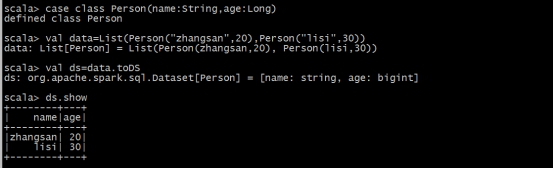

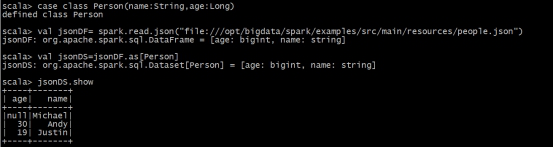

(2)通toDS方法生成DataSet

(3)通过DataFrame转化生成

使用as[]转换为DataSet

三、以编程方式执行Spark SQL查询

1.编写Spark SQL程序实现RDD转换DataFrame

前面我们学习了如何在Spark Shell中使用SQL完成查询,现在我们来实现在自定义的程序中编写Spark

SQL查询程序。

在Spark SQL中有两种方式可以在DataFrame和RDD进行转换,第一种方法是利用反射机制,推导包含某种类型的RDD,通过反射将其转换为指定类型的DataFrame,适用于提前知道RDD的schema。

第二种方法通过编程接口与RDD进行交互获取schema,并动态创建DataFrame,在运行时决定列及其类型。

首先在maven项目的pom.xml中添加Spark SQL的依赖

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-sql_2.11</artifactId>

<version>2.0.2</version>

</dependency> |

1.1.通过反射推断Schema

Scala支持使用case class类型导入RDD转换为DataFrame,通过case class创建schema,case

class的参数名称会被反射读取并成为表的列名。这种RDD可以高效的转换为DataFrame并注册为表。

代码如下:

package cn.itcast.sql

import org.apache.spark.SparkContext

import org.apache.spark.rdd.RDD

import org.apache.spark.sql.{DataFrame, SparkSession}

/**

* RDD转化成DataFrame:利用反射机制

*/

//todo:定义一个样例类Person

case class Person(id:Int,name:String,age:Int)

extends Serializable

object InferringSchema {

def main(args: Array[String]): Unit = {

//todo:1、构建sparkSession 指定

appName和master的地址

val spark: SparkSession = SparkSession.builder()

.appName("InferringSchema")

.master("local[2]").getOrCreate()

//todo:2、从sparkSession获取sparkContext对象

val sc: SparkContext = spark.sparkContext

sc.setLogLevel("WARN")//设置日志输出级别

//todo:3、加载数据

val dataRDD: RDD[String] = sc.textFile

("D:\\person.txt")

//todo:4、切分每一行记录

val lineArrayRDD: RDD[Array[String]] =

dataRDD.map(_.split(" "))

//todo:5、将RDD与Person类关联

val personRDD: RDD[Person] = lineArrayRDD.map(x=>Person(x(0).toInt,

x(1),x(2).toInt))

//todo:6、创建dataFrame,需要导入隐式转换

import spark.implicits._

val personDF: DataFrame = personRDD.toDF()

//todo

-------------------DSL语法操作 start--------------

//1、显示DataFrame的数据,默认显示20行

personDF.show()

//2、显示DataFrame的schema信息

personDF.printSchema()

//3、显示DataFrame记录数

println(personDF.count())

//4、显示DataFrame的所有字段

personDF.columns.foreach(println)

//5、取出DataFrame的第一行记录

println(personDF.head())

//6、显示DataFrame中name字段的所有值

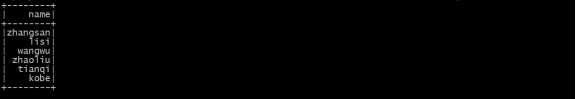

personDF.select("name").show()

//7、过滤出DataFrame中年龄大于30的记录

personDF.filter($"age" > 30).show()

//8、统计DataFrame中年龄大于30的人数

println(personDF.filter($"age">30).count())

//9、统计DataFrame中按照年龄进行分组,

求每个组的人数

personDF.groupBy("age").count().show()

//todo

-------------------DSL语法操作 end-------------

//todo

--------------------SQL操作风格 start-----------

//todo:将DataFrame注册成表

personDF.createOrReplaceTempView("t_person")

//todo:传入sql语句,进行操作

spark.sql("select * from t_person").show()

spark.sql("select * from t_person where

name='zhangsan'").show()

spark.sql("select * from t_person order

by age desc")

.show()

//todo

--------------------SQL操作风格 end-------------

sc.stop()

}

}

|

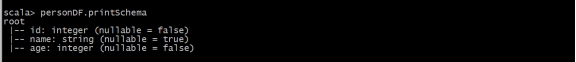

1.2.通过StructType直接指定Schema

当case class不能提前定义好时,可以通过以下三步通过代码创建DataFrame

(1)将RDD转为包含row对象的RDD

(2)基于structType类型创建schema,与第一步创建的RDD相匹配

(3)通过sparkSession的createDataFrame方法对第一步的RDD应用

schema创建DataFrame

package cn.itcast.sql

import org.apache.spark.SparkContext

import org.apache.spark.rdd.RDD

import org.apache.spark.sql.types.

{IntegerType,

StringType, StructField, StructType}

import org.apache.spark.sql.{DataFrame, Row,

SparkSession}

/**

* RDD转换成DataFrame:通过指定schema构建DataFrame

*/

object SparkSqlSchema {

def main(args: Array[String]): Unit = {

//todo:1、创建SparkSession,指定appName和master

val spark: SparkSession = SparkSession.builder()

.appName("SparkSqlSchema")

.master("local[2]")

.getOrCreate()

//todo:2、获取sparkContext对象

val sc: SparkContext = spark.sparkContext

//todo:3、加载数据

val dataRDD: RDD[String] = sc.textFile

("d:\\person.txt")

//todo:4、切分每一行

val dataArrayRDD: RDD[Array[String]] = dataRDD.map

(_.split("

"))

//todo:5、加载数据到Row对象中

val personRDD: RDD[Row] = dataArrayRDD.map(x=>Row(x(0).toInt,x(1),x(2).toInt))

//todo:6、创建schema

val schema:StructType= StructType(Seq(

StructField("id", IntegerType, false),

StructField("name", StringType, false),

StructField("age", IntegerType, false)

))

//todo:7、利用personRDD与schema创建DataFrame

val personDF: DataFrame = spark.createDataFrame

(personRDD,schema)

//todo:8、DSL操作显示DataFrame的数据结果

personDF.show()

//todo:9、将DataFrame注册成表

personDF.createOrReplaceTempView("t_person")

//todo:10、sql语句操作

spark.sql("select * from t_person").show()

spark.sql("select count(*) from t_person").show()

sc.stop()

}

} |

2.编写Spark SQL程序操作HiveContext

HiveContext是对应spark-hive这个项目,与hive有部分耦合, 支持hql,是SqlContext的子类,也就是说兼容SqlContext;

2.1.添加pom依赖

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-hive_2.11</artifactId>

<version>2.0.2</version>

</dependency>

|

2.2.代码实现

package itcast.sql

import org.apache.spark.sql.SparkSession

/**

* todo:支持hive的sql操作

*/

object HiveSupport {

def main(args: Array[String]): Unit = {

val warehouseLocation = "D:\\workSpace

_IDEA_NEW\\day2017-10-12\\spark-warehouse"

//todo:1、创建sparkSession

val spark: SparkSession = SparkSession.builder()

.appName("HiveSupport")

.master("local[2]")

.config("spark.sql.warehouse.dir",

warehouseLocation)

.enableHiveSupport() //开启支持hive

.getOrCreate()

spark.sparkContext.setLogLevel("WARN")

//设置日志输出级别

import spark.implicits._

import spark.sql

//todo:2、操作sql语句

sql("CREATE TABLE IF NOT EXISTS person

(id int, name string, age int) row format delimited

fields

terminated by ' '")

sql("LOAD DATA LOCAL INPATH '/person.txt'

INTO TABLE person")

sql("select * from person ").show()

spark.stop()

}

} |

四、数据源

1.JDBC

Spark SQL可以通过JDBC从关系型数据库中读取数据的方式创建DataFrame,通过对DataFrame一系列的计算后,还可以将数据再写回关系型数据库中。

1.1.SparkSql从MySQL中加载数据

1.1.1 通过IDEA编写SparkSql代码

package itcast.sql

import java.util.Properties

import org.apache.spark.sql.{DataFrame,

SparkSession}

/**

* todo:Sparksql从mysql中加载数据

*/

object DataFromMysql {

def main(args: Array[String]): Unit = {

//todo:1、创建sparkSession对象

val spark: SparkSession = SparkSession.builder()

.appName("DataFromMysql")

.master("local[2]")

.getOrCreate()

//todo:2、创建Properties对象,设置连接mysql

的用户名和密码

val properties: Properties =new Properties()

properties.setProperty("user","root")

properties.setProperty("password","123456")

//todo:3、读取mysql中的数据

val mysqlDF: DataFrame = spark.read.jdbc("jdbc:mysql

://192.168.200.150:3306/spark","iplocaltion",properties)

//todo:4、显示mysql中表的数据

mysqlDF.show()

spark.stop()

}

}

|

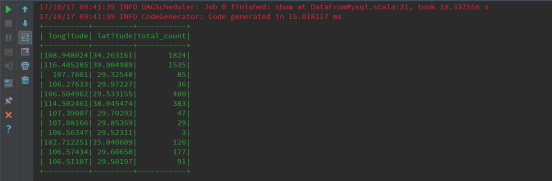

执行查看效果:

1.1.2 通过spark-shell运行

(1)、启动spark-shell(必须指定mysql的连接驱动包)

spark-shell

\

--master spark://hdp-node-01:7077 \

--executor-memory 1g\

--total-executor-cores 2\

--jars /opt/bigdata/hive/lib/mysql-connector-java-5.1.35.jar\

--driver-class-path/opt/bigdata/hive/lib/mysql

-connector-java-5.1.35.jar

|

(2)、从mysql中加载数据

val mysqlDF

= spark.read.format("jdbc").options

(Map("url"

-> "jdbc:mysql://192.168.200.100:3306/spark",

"driver" -> "com.mysql.jdbc.Driver",

"dbtable" -> "iplocaltion",

"user" -> "root", "password"

-> "123456")).load() |

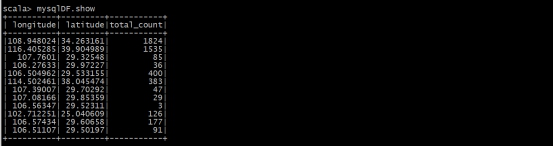

(3)、执行查询

1.2.SparkSql将数据写入到MySQL中

1.2.1 通过IDEA编写SparkSql代码

(1)编写代码

package itcast.sql

import java.util.Properties

import org.apache.spark.rdd.RDD

import org.apache.spark.sql.{DataFrame, Dataset,

SaveMode, SparkSession}

/**

* todo:sparksql写入数据到mysql中

*/

object SparkSqlToMysql {

def main(args: Array[String]): Unit = {

//todo:1、创建sparkSession对象

val spark: SparkSession = SparkSession.builder()

.appName("SparkSqlToMysql")

.getOrCreate()

//todo:2、读取数据

val data: RDD[String] = spark.sparkContext

.textFile(args(0))

//todo:3、切分每一行,

val arrRDD: RDD[Array[String]] = data.map(_.split("

"))

//todo:4、RDD关联Student

val studentRDD: RDD[Student] = arrRDD.map

(x=>Student(x(0).

toInt,x(1),x(2).toInt))

//todo:导入隐式转换

import spark.implicits._

//todo:5、将RDD转换成DataFrame

val studentDF: DataFrame = studentRDD.toDF()

//todo:6、将DataFrame注册成表

studentDF.createOrReplaceTempView("student")

//todo:7、操作student表 ,按照年龄进行降序排列

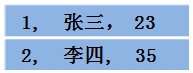

val resultDF: DataFrame = spark.sql("select

*

from student order by age desc")

//todo:8、把结果保存在mysql表中

//todo:创建Properties对象,配置连接mysql的

用户名和密码

val prop =new Properties()

prop.setProperty("user","root")

prop.setProperty("password","123456")

resultDF.write.jdbc("jdbc:mysql://192.168.200.150:3306/

spark","student",prop)

//todo:写入mysql时,可以配置插入mode,overwrite覆盖,

append追加,

ignore忽略,error默认表存在报错

//resultDF.write.mode(SaveMode.Overwrite).jdbc

("jdbc:mysql://192.168.200.150:3306/spark",

"student",prop)

spark.stop()

}

}

//todo:创建样例类Student

case class Student(id:Int,name:String,age:Int)

|

(2)用maven将程序打包

通过IDEA工具打包即可

(3)将Jar包提交到spark集群

spark-submit

\

--class itcast.sql.SparkSqlToMysql \

--master spark://hdp-node-01:7077 \

--executor-memory 1g \

--total-executor-cores 2 \

--jars/opt/bigdata/hive/lib/mysql-connector-java-5.1.35.jar

\

--driver-class-path /opt/bigdata/hive/lib/mysql-connector-java-5.1.35.jar\

/root/original-spark-2.0.2.jar /person.txt

|

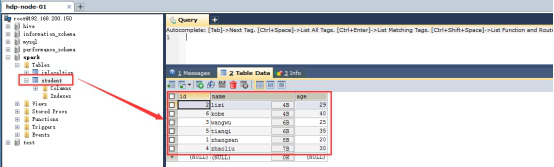

(4)查看mysql中表的数据

|