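Editor's note: This article comes from Charlotte77. It briefly introduces how shrinking the convolution kernel size makes it possible to build deeper networks. We hope it helps your study.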

VGG network structure

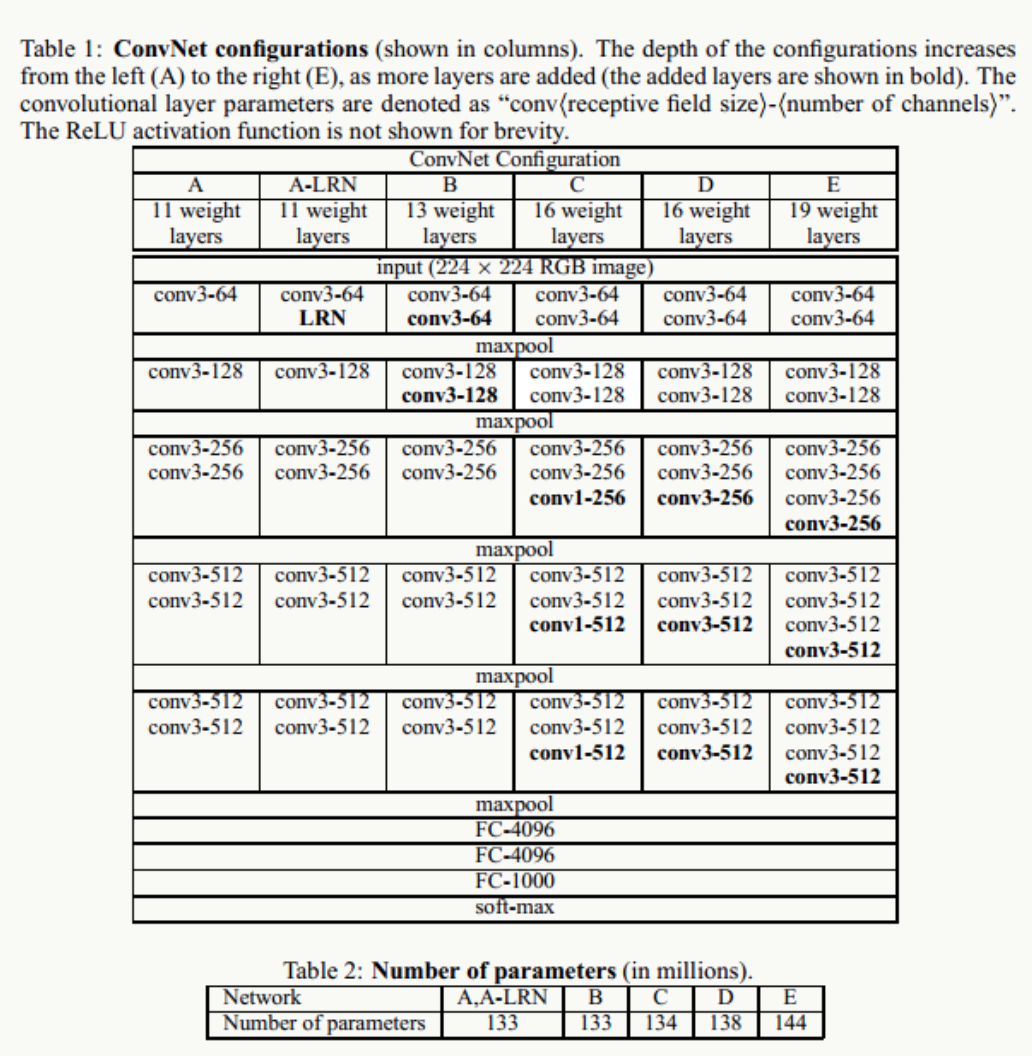

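VGGNet comes from the Visual Geometry Group at Oxford. Its main contribution at ILSVRC 2014 was to show that increasing network depth can, to some extent, improve final performance. As the figure below shows, the paper improves performance by progressively adding layers. The approach looks a little brute-force and has few clever tricks, but it works. Many methods that rely on pretrained models use a VGG model (mainly the 16- and 19-layer variants). Compared with other methods, VGG has a very large parameter space, so training a VGG model usually takes longer, but the publicly released pretrained models make it convenient to use. The configurations in the paper are as follows: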

Figure 1: VGG network configurations

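Columns D and E in the figure are VGG-16 and VGG-19, with about 138M and 144M parameters respectively. They are the two best-performing configurations in the paper. VGG can be viewed as a deeper version of AlexNet. VGG works well for object detection (for example, in Faster R-CNN), because this traditional structure preserves the local position information of the image relatively well (unlike GoogLeNet, where the Inception module may scramble position information).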
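The parameter counts quoted above can be checked with a few lines of arithmetic. The sketch below is my own illustration, not code from the article: it assumes a 224×224 RGB input, 3×3 convolutions with biases, three fully connected layers of 4096, 4096 and 1000 units, and the helper name `count_params` is hypothetical.

```python
# Configurations D (VGG-16) and E (VGG-19): numbers are the output channels
# of 3x3 conv layers, "M" marks a 2x2 max-pooling layer with stride 2.
VGG16 = [64, 64, "M", 128, 128, "M", 256, 256, 256, "M",
         512, 512, 512, "M", 512, 512, 512, "M"]
VGG19 = [64, 64, "M", 128, 128, "M", 256, 256, 256, 256, "M",
         512, 512, 512, 512, "M", 512, 512, 512, 512, "M"]


def count_params(cfg, in_channels=3, image_size=224, num_classes=1000):
    """Count weights and biases (assumes 224x224 input and 4096-unit FC layers)."""
    total, channels, size = 0, in_channels, image_size
    for item in cfg:
        if item == "M":
            size //= 2  # pooling halves the spatial size
        else:
            total += 3 * 3 * channels * item + item  # kernel weights + biases
            channels = item
    fan_in = channels * size * size  # flattened 7 * 7 * 512 = 25088
    for fan_out in (4096, 4096, num_classes):
        total += fan_in * fan_out + fan_out
        fan_in = fan_out
    return total


print(count_params(VGG16))  # 138357544
print(count_params(VGG19))  # 143667240
```

Most of the parameters sit in the first fully connected layer (25088 × 4096 weights), not in the convolutions.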

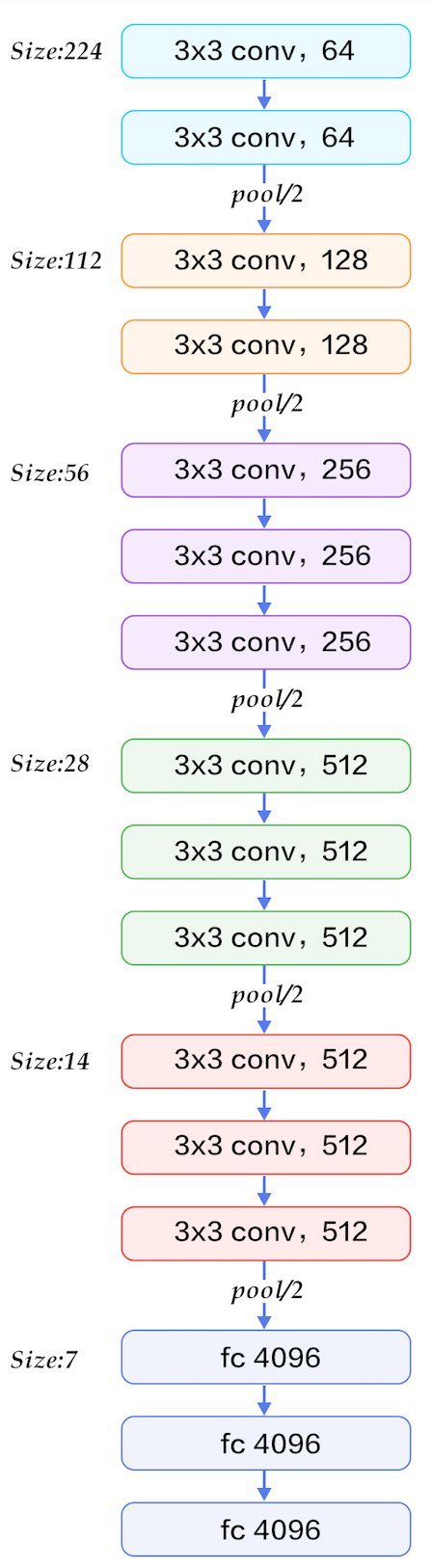

Let's take a closer look at the VGG-16 network structure:

Figure 2: VGG-16 network structure

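As the figure shows, every convolutional layer uses a small 3×3 kernel, and these small kernels are stacked into a sequence. Put simply, the input image is convolved with a 3×3 kernel, then convolved with a 3×3 kernel again, so that small kernels are applied to the image several times in succession.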

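AlexNet starts with a large 11×11 kernel, so why does VGG convolve the image with small 3×3 kernels, and consecutive ones at that? When VGG was proposed, it ran counter to the design principle of LeNet, which held that large kernels capture similar features across the image (weight sharing). AlexNet also uses large kernels in its shallow layers (11×11 in the first layer and 5×5 in the second) and avoids 1×1 kernels in the shallow layers as far as possible. The remarkable thing about VGG is that several stacked 3×3 kernels can imitate the local receptive field of a larger kernel. The idea of chaining several small kernels was later absorbed by GoogLeNet, ResNet and others.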
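To make the claim about stacked 3×3 kernels concrete, here is a minimal sketch that computes the receptive field of a stack of convolution layers. The function name `receptive_field` is my own, and the formula assumes plain convolutions without dilation.

```python
def receptive_field(kernel_sizes, strides=None):
    """Receptive field (in input pixels) of one output unit of a conv stack."""
    strides = strides or [1] * len(kernel_sizes)
    rf, jump = 1, 1  # jump = distance in input pixels between adjacent units
    for k, s in zip(kernel_sizes, strides):
        rf += (k - 1) * jump
        jump *= s
    return rf


print(receptive_field([3, 3]))     # 5
print(receptive_field([3, 3, 3]))  # 7
print(receptive_field([7]))        # 7
```

Two stacked 3×3 layers see the same 5×5 window as one 5×5 layer, and three see the same 7×7 window as one 7×7 layer, while inserting extra nonlinearities between them.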

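The experimental results in Figure 1 also show that VGG uses several 3×3 convolutions to extract high-dimensional features. With larger kernels, the number of parameters grows substantially and the computation time grows with it. For example, a 3×3 kernel has only 9 weights, while a 7×7 kernel has 49. Without a mechanism to normalize, prune, or otherwise limit such a large number of parameters, training networks with larger kernels becomes very difficult.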
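The 9-versus-49 comparison is per kernel. For whole layers with the same receptive field, the fair comparison is three stacked 3×3 layers against one 7×7 layer. A small sketch, assuming C input and C output channels per layer and ignoring biases (the helper `conv_weights` and the choice C = 256 are illustrative):

```python
def conv_weights(kernel, channels, layers=1):
    """Weights in `layers` stacked kernel x kernel convs with C in/out channels."""
    return layers * kernel * kernel * channels * channels


C = 256
stacked = conv_weights(3, C, layers=3)  # 3 * 9 * C^2 = 27 * C^2
single = conv_weights(7, C)             # 49 * C^2
print(stacked, single, round(single / stacked, 2))  # 1769472 3211264 1.81
```

So the 7×7 layer needs about 81% more weights than the three 3×3 layers that cover the same 7×7 window.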

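VGG's view is that large kernels waste a great deal of time, and that smaller kernels reduce the parameter count and save computation. Training takes longer, but overall the prediction time and the number of parameters are both reduced.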

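Advantages of VGG

Compared with AlexNet:

Similarities

1. The network is organized into 5 convolutional stages overall;

2. Apart from the softmax layer, the last few layers are fully connected;

3. The five stages are connected by max pooling.

Differences

1. Small 3×3 kernels replace large kernels such as 7×7, and the network is built deeper;

2. LRN is removed because it consumes too many computing resources for too little benefit;

3. More feature maps are used, so more features can be extracted and more feature combinations can be formed.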

Implementing VGG with PaddlePaddle

1. Network structure

```python
# coding: utf-8
'''
Created by huxiaoman 2017.12.12
vggnet.py: classify CIFAR-10 with a VGG network
'''

import paddle.v2 as paddle


def vgg(input):
    def conv_block(ipt, num_filter, groups, dropouts, num_channels=None):
        # `groups` 3x3 conv layers (with batch norm and dropout) + one 2x2 max pool
        return paddle.networks.img_conv_group(
            input=ipt,
            num_channels=num_channels,
            pool_size=2,
            pool_stride=2,
            conv_num_filter=[num_filter] * groups,
            conv_filter_size=3,
            conv_act=paddle.activation.Relu(),
            conv_with_batchnorm=True,
            conv_batchnorm_drop_rate=dropouts,
            pool_type=paddle.pooling.Max())

    conv1 = conv_block(input, 64, 2, [0.3, 0], 3)
    conv2 = conv_block(conv1, 128, 2, [0.4, 0])
    conv3 = conv_block(conv2, 256, 3, [0.4, 0.4, 0])
    conv4 = conv_block(conv3, 512, 3, [0.4, 0.4, 0])
    conv5 = conv_block(conv4, 512, 3, [0.4, 0.4, 0])

    drop = paddle.layer.dropout(input=conv5, dropout_rate=0.5)
    fc1 = paddle.layer.fc(input=drop, size=512, act=paddle.activation.Linear())
    bn = paddle.layer.batch_norm(
        input=fc1,
        act=paddle.activation.Relu(),
        layer_attr=paddle.attr.Extra(drop_rate=0.5))
    fc2 = paddle.layer.fc(input=bn, size=512, act=paddle.activation.Linear())
    return fc2
```
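To see what the five `conv_block` calls above add up to, the following sketch expands their arguments into a per-layer list and tracks the spatial size for a 32×32 CIFAR-10 image. It is plain Python rather than PaddlePaddle code. It assumes that each 3×3 convolution keeps the spatial size (padding of 1) and that each block ends in a 2×2, stride-2 max pool. The names `BLOCKS` and `expand` are mine.

```python
# (num_filter, groups, dropouts) for each conv_block call in vgg()
BLOCKS = [
    (64, 2, [0.3, 0]),
    (128, 2, [0.4, 0]),
    (256, 3, [0.4, 0.4, 0]),
    (512, 3, [0.4, 0.4, 0]),
    (512, 3, [0.4, 0.4, 0]),
]


def expand(blocks, size=32):
    """Return [(filters, spatial_size, dropout_rate), ...] and the final size."""
    layers = []
    for num_filter, groups, dropouts in blocks:
        assert len(dropouts) == groups  # one dropout rate per conv layer
        for rate in dropouts:
            layers.append((num_filter, size, rate))
        size //= 2  # the max pool at the end of the block halves the size
    return layers, size


layers, final_size = expand(BLOCKS)
print(len(layers), final_size, layers[-1][0] * final_size * final_size)  # 13 1 512
```

Under these assumptions the network has 13 convolutional layers, and a 32×32 input shrinks to a 1×1×512 feature vector before the fully connected layers.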

2. Training the model

```python
# coding: utf-8
'''
Created by huxiaoman 2017.12.12
train_vgg.py: train VGG16 to classify the CIFAR-10 dataset
'''

import os
import sys

import numpy as np
import paddle.v2 as paddle
from PIL import Image

from vggnet import vgg

# Set WITH_GPU=1 in the environment to train on GPU.
with_gpu = os.getenv('WITH_GPU', '0') != '0'


def load_image(file):
    im = Image.open(file)
    im = im.resize((32, 32), Image.ANTIALIAS)
    im = np.array(im).astype(np.float32)
    im = im.transpose((2, 0, 1))  # HWC -> CHW
    im = im[(2, 1, 0), :, :]  # RGB -> BGR
    im = im.flatten()
    im = im / 255.0
    return im


def main():
    datadim = 3 * 32 * 32
    classdim = 10

    # PaddlePaddle init
    paddle.init(use_gpu=with_gpu, trainer_count=8)

    image = paddle.layer.data(
        name="image", type=paddle.data_type.dense_vector(datadim))

    net = vgg(image)

    out = paddle.layer.fc(
        input=net, size=classdim, act=paddle.activation.Softmax())

    lbl = paddle.layer.data(
        name="label", type=paddle.data_type.integer_value(classdim))
    cost = paddle.layer.classification_cost(input=out, label=lbl)

    # Create parameters
    parameters = paddle.parameters.create(cost)

    # Create optimizer
    momentum_optimizer = paddle.optimizer.Momentum(
        momentum=0.9,
        regularization=paddle.optimizer.L2Regularization(rate=0.0002 * 128),
        learning_rate=0.1 / 128.0,
        learning_rate_decay_a=0.1,
        learning_rate_decay_b=50000 * 100,
        learning_rate_schedule='discexp')

    # End batch and end pass event handler
    def event_handler(event):
        if isinstance(event, paddle.event.EndIteration):
            if event.batch_id % 100 == 0:
                print("\nPass %d, Batch %d, Cost %f, %s" % (
                    event.pass_id, event.batch_id, event.cost, event.metrics))
            else:
                sys.stdout.write('.')
                sys.stdout.flush()
        if isinstance(event, paddle.event.EndPass):
            # Save parameters (binary mode, since this is a tar archive)
            with open('params_pass_%d.tar' % event.pass_id, 'wb') as f:
                parameters.to_tar(f)

            result = trainer.test(
                reader=paddle.batch(
                    paddle.dataset.cifar.test10(), batch_size=128),
                feeding={'image': 0,
                         'label': 1})
            print("\nTest with Pass %d, %s" % (event.pass_id, result.metrics))

    # Create trainer
    trainer = paddle.trainer.SGD(
        cost=cost, parameters=parameters, update_equation=momentum_optimizer)

    # Save the inference topology to protobuf.
    inference_topology = paddle.topology.Topology(layers=out)
    with open("inference_topology.pkl", 'wb') as f:
        inference_topology.serialize_for_inference(f)

    trainer.train(
        reader=paddle.batch(
            paddle.reader.shuffle(
                paddle.dataset.cifar.train10(), buf_size=50000),
            batch_size=128),
        num_passes=200,
        event_handler=event_handler,
        feeding={'image': 0,
                 'label': 1})

    # Inference
    test_data = []
    cur_dir = os.path.dirname(os.path.realpath(__file__))
    test_data.append((load_image(cur_dir + '/image/dog.png'), ))

    probs = paddle.infer(
        output_layer=out, parameters=parameters, input=test_data)
    # probs and lab are the results of one batch of data
    lab = np.argsort(-probs)
    print("Label of image/dog.png is: %d" % lab[0][0])


if __name__ == '__main__':
    main()
```
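The optimizer above uses the `'discexp'` learning-rate schedule. As far as I understand it, this is a discrete exponential decay: the base rate is multiplied by `learning_rate_decay_a` once for every `learning_rate_decay_b` samples processed. The sketch below rests on that assumption and should be checked against the PaddlePaddle documentation. The function `discexp_lr` is a hypothetical plain-Python stand-in, not a PaddlePaddle API.

```python
import math


def discexp_lr(base_lr, decay_a, decay_b, samples_seen):
    """Assumed rule: lr = base_lr * decay_a ** floor(samples_seen / decay_b)."""
    return base_lr * decay_a ** math.floor(samples_seen / decay_b)


BASE_LR = 0.1 / 128.0
DECAY_A = 0.1
DECAY_B = 50000 * 100  # 100 passes over the 50,000 CIFAR-10 training images

for pass_id in (0, 99, 100, 199):
    samples_seen = pass_id * 50000
    print(pass_id, discexp_lr(BASE_LR, DECAY_A, DECAY_B, samples_seen))
```

If the assumption holds, the 200-pass run trains at the base rate for the first 100 passes and at one tenth of it for the remaining 100.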

3. Training results


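From the training results: with 7 threads and 8 Tesla K80 GPUs, 200 passes took 16h21min. Compared with the few hours needed earlier to train LeNet and AlexNet, this is expensive, but the result is good. Accuracy is 89.11%, higher than both LeNet and AlexNet with the same hardware and number of passes.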

Implementing VGG with TensorFlow

1. Network structure

```python
import tensorflow as tf

# conv_op, mpool_op and fc_op are the helper functions defined in the
# full training script (vgg_tf.py) in the next section.


def inference_op(input_op, keep_prob):
    p = []
    # Block 1: conv1_1-conv1_2-pool1
    conv1_1 = conv_op(input_op, name='conv1_1', kh=3, kw=3,
                      n_out=64, dh=1, dw=1, p=p)
    conv1_2 = conv_op(conv1_1, name='conv1_2', kh=3, kw=3,
                      n_out=64, dh=1, dw=1, p=p)
    pool1 = mpool_op(conv1_2, name='pool1', kh=2, kw=2, dw=2, dh=2)
    # Block 2: conv2_1-conv2_2-pool2
    conv2_1 = conv_op(pool1, name='conv2_1', kh=3, kw=3,
                      n_out=128, dh=1, dw=1, p=p)
    conv2_2 = conv_op(conv2_1, name='conv2_2', kh=3, kw=3,
                      n_out=128, dh=1, dw=1, p=p)
    pool2 = mpool_op(conv2_2, name='pool2', kh=2, kw=2, dw=2, dh=2)
    # Block 3: conv3_1-conv3_2-conv3_3-pool3
    conv3_1 = conv_op(pool2, name='conv3_1', kh=3, kw=3,
                      n_out=256, dh=1, dw=1, p=p)
    conv3_2 = conv_op(conv3_1, name='conv3_2', kh=3, kw=3,
                      n_out=256, dh=1, dw=1, p=p)
    conv3_3 = conv_op(conv3_2, name='conv3_3', kh=3, kw=3,
                      n_out=256, dh=1, dw=1, p=p)
    pool3 = mpool_op(conv3_3, name='pool3', kh=2, kw=2, dw=2, dh=2)
    # Block 4: conv4_1-conv4_2-conv4_3-pool4
    conv4_1 = conv_op(pool3, name='conv4_1', kh=3, kw=3,
                      n_out=512, dh=1, dw=1, p=p)
    conv4_2 = conv_op(conv4_1, name='conv4_2', kh=3, kw=3,
                      n_out=512, dh=1, dw=1, p=p)
    conv4_3 = conv_op(conv4_2, name='conv4_3', kh=3, kw=3,
                      n_out=512, dh=1, dw=1, p=p)
    pool4 = mpool_op(conv4_3, name='pool4', kh=2, kw=2, dw=2, dh=2)
    # Block 5: conv5_1-conv5_2-conv5_3-pool5
    conv5_1 = conv_op(pool4, name='conv5_1', kh=3, kw=3,
                      n_out=512, dh=1, dw=1, p=p)
    conv5_2 = conv_op(conv5_1, name='conv5_2', kh=3, kw=3,
                      n_out=512, dh=1, dw=1, p=p)
    conv5_3 = conv_op(conv5_2, name='conv5_3', kh=3, kw=3,
                      n_out=512, dh=1, dw=1, p=p)
    pool5 = mpool_op(conv5_3, name='pool5', kh=2, kw=2, dw=2, dh=2)
    # Flatten pool5 ([7, 7, 512] for a 224x224 input) into a vector
    shp = pool5.get_shape()
    flattened_shape = shp[1].value * shp[2].value * shp[3].value
    resh1 = tf.reshape(pool5, [-1, flattened_shape], name='resh1')

    # Fully connected layer 1, with dropout to prevent overfitting
    fc1 = fc_op(resh1, name='fc1', n_out=2048, p=p)
    fc1_drop = tf.nn.dropout(fc1, keep_prob, name='fc1_drop')

    # Fully connected layer 2, with dropout to prevent overfitting
    fc2 = fc_op(fc1_drop, name='fc2', n_out=2048, p=p)
    fc2_drop = tf.nn.dropout(fc2, keep_prob, name='fc2_drop')

    # Fully connected layer 3, followed by a softmax that gives the class
    # probabilities (1000 outputs as in ImageNet VGG; CIFAR-10 has 10 classes)
    fc3 = fc_op(fc2_drop, name='fc3', n_out=1000, p=p)
    softmax = tf.nn.softmax(fc3)
    predictions = tf.argmax(softmax, 1)
    return predictions, softmax, fc3, p
```
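The comment in the code above gives the shape of `pool5` as `[7, 7, 512]`, which holds only for a 224×224 input. With `'SAME'` padding, a stride-2 pooling layer outputs `ceil(size / 2)`, so the flattened size depends on the input resolution. The sketch below traces this in plain Python. The helper `same_pool_sizes` is hypothetical, and the 24×24 case assumes the crop size that, as far as I recall, the TensorFlow CIFAR-10 tutorial's `distorted_inputs` produces.

```python
import math


def same_pool_sizes(size, num_pools=5, stride=2):
    """Spatial size after each stride-2 pool with 'SAME' padding."""
    sizes = [size]
    for _ in range(num_pools):
        size = math.ceil(size / stride)
        sizes.append(size)
    return sizes


for input_size in (224, 32, 24):
    sizes = same_pool_sizes(input_size)
    flattened = sizes[-1] * sizes[-1] * 512  # 512 channels after block 5
    print(input_size, sizes, flattened)
# 224 [224, 112, 56, 28, 14, 7] 25088
# 32 [32, 16, 8, 4, 2, 1] 512
# 24 [24, 12, 6, 3, 2, 1] 512
```

Because `inference_op` reads the flattened size from `pool5.get_shape()` rather than hard-coding 25088, the same function works for both ImageNet-sized and CIFAR-sized inputs.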

2. Training the network

```python
# -*- coding: utf-8 -*-
"""
Created by huxiaoman 2017.12.12
vgg_tf.py: train the TensorFlow version of VGG16 to classify the CIFAR-10 data
"""
from datetime import datetime
import math
import time
import tensorflow as tf
import cifar10

batch_size = 128
num_batches = 200


# Create a convolutional layer.
# input_op : input tensor
# name     : name of the layer, applied with tf.name_scope()
# kh, kw   : height and width of the kernel
# n_out    : number of output channels
# dh, dw   : stride height and width
# p        : parameter list collecting the variables used by VGG
# Kernel weights are initialized with the Xavier method.
def conv_op(input_op, name, kh, kw, n_out, dh, dw, p):
    n_in = input_op.get_shape()[-1].value  # number of input channels
    with tf.name_scope(name) as scope:
        kernel = tf.get_variable(
            scope + 'w',
            shape=[kh, kw, n_in, n_out], dtype=tf.float32,
            initializer=tf.contrib.layers.xavier_initializer_conv2d())
        # Convolution
        conv = tf.nn.conv2d(input_op, kernel, (1, dh, dw, 1), padding='SAME')
        bias_init_val = tf.constant(0.0, shape=[n_out], dtype=tf.float32)
        biases = tf.Variable(bias_init_val, trainable=True, name='b')
        z = tf.nn.bias_add(conv, biases)
        activation = tf.nn.relu(z, name=scope)
        p += [kernel, biases]
        return activation


# Create a fully connected layer.
# input_op : input tensor
# name     : name of the layer
# n_out    : number of output units
# p        : parameter list
# Weights are initialized with the Xavier method.
def fc_op(input_op, name, n_out, p):
    n_in = input_op.get_shape()[-1].value

    with tf.name_scope(name) as scope:
        kernel = tf.get_variable(
            scope + 'w',
            shape=[n_in, n_out], dtype=tf.float32,
            initializer=tf.contrib.layers.xavier_initializer())
        biases = tf.Variable(tf.constant(0.1, shape=[n_out],
                                         dtype=tf.float32), name='b')
        # relu_layer computes relu(input_op * kernel + biases)
        activation = tf.nn.relu_layer(input_op, kernel, biases, name=scope)
        p += [kernel, biases]
        return activation


# Create a max-pooling layer.
# input_op : input tensor
# name     : name of the pooling layer
# kh, kw   : height and width of the pooling window
# dh, dw   : stride height and width
def mpool_op(input_op, name, kh, kw, dh, dw):
    return tf.nn.max_pool(input_op, ksize=[1, kh, kw, 1],
                          strides=[1, dh, dw, 1], padding='SAME', name=name)


# --------------- Build VGG-16 ------------------

def inference_op(input_op, keep_prob):
    p = []
    # Block 1: conv1_1-conv1_2-pool1
    conv1_1 = conv_op(input_op, name='conv1_1', kh=3, kw=3,
                      n_out=64, dh=1, dw=1, p=p)
    conv1_2 = conv_op(conv1_1, name='conv1_2', kh=3, kw=3,
                      n_out=64, dh=1, dw=1, p=p)
    pool1 = mpool_op(conv1_2, name='pool1', kh=2, kw=2, dw=2, dh=2)
    # Block 2: conv2_1-conv2_2-pool2
    conv2_1 = conv_op(pool1, name='conv2_1', kh=3, kw=3,
                      n_out=128, dh=1, dw=1, p=p)
    conv2_2 = conv_op(conv2_1, name='conv2_2', kh=3, kw=3,
                      n_out=128, dh=1, dw=1, p=p)
    pool2 = mpool_op(conv2_2, name='pool2', kh=2, kw=2, dw=2, dh=2)
    # Block 3: conv3_1-conv3_2-conv3_3-pool3
    conv3_1 = conv_op(pool2, name='conv3_1', kh=3, kw=3,
                      n_out=256, dh=1, dw=1, p=p)
    conv3_2 = conv_op(conv3_1, name='conv3_2', kh=3, kw=3,
                      n_out=256, dh=1, dw=1, p=p)
    conv3_3 = conv_op(conv3_2, name='conv3_3', kh=3, kw=3,
                      n_out=256, dh=1, dw=1, p=p)
    pool3 = mpool_op(conv3_3, name='pool3', kh=2, kw=2, dw=2, dh=2)
    # Block 4: conv4_1-conv4_2-conv4_3-pool4
    conv4_1 = conv_op(pool3, name='conv4_1', kh=3, kw=3,
                      n_out=512, dh=1, dw=1, p=p)
    conv4_2 = conv_op(conv4_1, name='conv4_2', kh=3, kw=3,
                      n_out=512, dh=1, dw=1, p=p)
    conv4_3 = conv_op(conv4_2, name='conv4_3', kh=3, kw=3,
                      n_out=512, dh=1, dw=1, p=p)
    pool4 = mpool_op(conv4_3, name='pool4', kh=2, kw=2, dw=2, dh=2)
    # Block 5: conv5_1-conv5_2-conv5_3-pool5
    conv5_1 = conv_op(pool4, name='conv5_1', kh=3, kw=3,
                      n_out=512, dh=1, dw=1, p=p)
    conv5_2 = conv_op(conv5_1, name='conv5_2', kh=3, kw=3,
                      n_out=512, dh=1, dw=1, p=p)
    conv5_3 = conv_op(conv5_2, name='conv5_3', kh=3, kw=3,
                      n_out=512, dh=1, dw=1, p=p)
    pool5 = mpool_op(conv5_3, name='pool5', kh=2, kw=2, dw=2, dh=2)
    # Flatten pool5 ([7, 7, 512] for a 224x224 input) into a vector
    shp = pool5.get_shape()
    flattened_shape = shp[1].value * shp[2].value * shp[3].value
    resh1 = tf.reshape(pool5, [-1, flattened_shape], name='resh1')

    # Fully connected layer 1, with dropout to prevent overfitting
    fc1 = fc_op(resh1, name='fc1', n_out=2048, p=p)
    fc1_drop = tf.nn.dropout(fc1, keep_prob, name='fc1_drop')

    # Fully connected layer 2, with dropout to prevent overfitting
    fc2 = fc_op(fc1_drop, name='fc2', n_out=2048, p=p)
    fc2_drop = tf.nn.dropout(fc2, keep_prob, name='fc2_drop')

    # Fully connected layer 3, followed by a softmax that gives the class
    # probabilities (1000 outputs as in ImageNet VGG; CIFAR-10 has 10 classes)
    fc3 = fc_op(fc2_drop, name='fc3', n_out=1000, p=p)
    softmax = tf.nn.softmax(fc3)
    predictions = tf.argmax(softmax, 1)
    return predictions, softmax, fc3, p


# Benchmark function: time `target` over num_batches runs after a warm-up
def time_tensorflow_run(session, target, feed, info_string):
    num_steps_burn_in = 10
    total_duration = 0.0
    total_duration_squared = 0.0

    for i in range(num_batches + num_steps_burn_in):
        start_time = time.time()
        _ = session.run(target, feed_dict=feed)
        duration = time.time() - start_time
        if i >= num_steps_burn_in:
            if not i % 10:
                print('%s: step %d, duration = %.3f' %
                      (datetime.now(), i - num_steps_burn_in, duration))
            total_duration += duration
            total_duration_squared += duration * duration
    mean_dur = total_duration / num_batches
    var_dur = total_duration_squared / num_batches - mean_dur * mean_dur
    std_dur = math.sqrt(var_dur)
    print('%s: %s across %d steps, %.3f +/- %.3f sec / batch' %
          (datetime.now(), info_string, num_batches, mean_dur, std_dur))


def train_vgg16():
    with tf.Graph().as_default():
        image_size = 224  # input image size, used only by the random-input test
        # Generate random input to check that the graph runs
        # images = tf.Variable(tf.random_normal(
        #     [batch_size, image_size, image_size, 3],
        #     dtype=tf.float32, stddev=1e-1))
        with tf.device('/cpu:0'):
            images, labels = cifar10.distorted_inputs()
        keep_prob = tf.placeholder(tf.float32)
        prediction, softmax, fc8, p = inference_op(images, keep_prob)
        init = tf.global_variables_initializer()
        sess = tf.Session()
        sess.run(init)
        # Assumes the queue-based input pipeline of the TensorFlow 1.x
        # CIFAR-10 tutorial: its reader queues must be started, otherwise
        # session.run() blocks waiting for data.
        tf.train.start_queue_runners(sess=sess)
        time_tensorflow_run(sess, prediction, {keep_prob: 1.0}, "Forward")
        # Simulate the training process
        objective = tf.nn.l2_loss(fc8)  # a stand-in loss
        # Gradients of the loss with respect to all model parameters
        grad = tf.gradients(objective, p)
        time_tensorflow_run(sess, grad, {keep_prob: 0.5}, "Forward-backward")


if __name__ == '__main__':
    train_vgg16()
```
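`time_tensorflow_run` above estimates the mean and standard deviation of the per-batch time from a running sum and a running sum of squares, after discarding a few warm-up iterations. The same bookkeeping can be sketched without TensorFlow. Here `time_run` is a hypothetical helper, the timed workload is an arbitrary placeholder, and the `max(..., 0.0)` guard is my addition to keep rounding error from producing a slightly negative variance.

```python
import math
import time


def time_run(fn, num_batches=20, burn_in=3):
    """Return (mean, std) of fn's run time, ignoring the first burn_in calls."""
    total, total_sq = 0.0, 0.0
    for i in range(num_batches + burn_in):
        start = time.perf_counter()
        fn()
        duration = time.perf_counter() - start
        if i >= burn_in:  # skip warm-up iterations
            total += duration
            total_sq += duration * duration
    mean = total / num_batches
    var = max(total_sq / num_batches - mean * mean, 0.0)  # E[x^2] - E[x]^2
    return mean, math.sqrt(var)


mean, std = time_run(lambda: sum(i * i for i in range(10000)))
print("%.6f +/- %.6f sec / batch" % (mean, std))
```

The single-pass formula avoids storing every duration, at the cost of some numerical sensitivity when the variance is tiny compared with the mean.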

Of course, we can also use tf.slim to simplify the network definition:

```python
import tensorflow as tf
import tensorflow.contrib.slim as slim


def vgg16(inputs):
    with slim.arg_scope([slim.conv2d, slim.fully_connected],
                        activation_fn=tf.nn.relu,
                        weights_initializer=tf.truncated_normal_initializer(0.0, 0.01),
                        weights_regularizer=slim.l2_regularizer(0.0005)):
        net = slim.repeat(inputs, 2, slim.conv2d, 64, [3, 3], scope='conv1')
        net = slim.max_pool2d(net, [2, 2], scope='pool1')
        net = slim.repeat(net, 2, slim.conv2d, 128, [3, 3], scope='conv2')
        net = slim.max_pool2d(net, [2, 2], scope='pool2')
        net = slim.repeat(net, 3, slim.conv2d, 256, [3, 3], scope='conv3')
        net = slim.max_pool2d(net, [2, 2], scope='pool3')
        net = slim.repeat(net, 3, slim.conv2d, 512, [3, 3], scope='conv4')
        net = slim.max_pool2d(net, [2, 2], scope='pool4')
        net = slim.repeat(net, 3, slim.conv2d, 512, [3, 3], scope='conv5')
        net = slim.max_pool2d(net, [2, 2], scope='pool5')
        net = slim.fully_connected(net, 4096, scope='fc6')
        net = slim.dropout(net, 0.5, scope='dropout6')
        net = slim.fully_connected(net, 4096, scope='fc7')
        net = slim.dropout(net, 0.5, scope='dropout7')
        net = slim.fully_connected(net, 1000, activation_fn=None, scope='fc8')
    return net
```
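Much of the saving in the slim version comes from `slim.repeat`, which applies the same layer several times and numbers the scopes for you. The idea can be sketched in plain Python as follows. This is an illustration of the pattern, not the TF-Slim source, and `fake_conv` is a made-up stand-in layer that records its arguments instead of computing a convolution.

```python
def repeat(inputs, repetitions, layer, *args, scope, **kwargs):
    """Apply `layer` `repetitions` times, naming scopes scope/scope_1, ..."""
    net = inputs
    for i in range(repetitions):
        layer_scope = "%s/%s_%d" % (scope, scope, i + 1)
        net = layer(net, *args, scope=layer_scope, **kwargs)
    return net


def fake_conv(net, num_outputs, kernel_size, scope):
    """Stand-in layer: appends a record of the call to a list."""
    return net + [(scope, num_outputs, kernel_size)]


trace = repeat([], 3, fake_conv, 256, [3, 3], scope="conv3")
for entry in trace:
    print(entry)
# ('conv3/conv3_1', 256, [3, 3])
# ('conv3/conv3_2', 256, [3, 3])
# ('conv3/conv3_3', 256, [3, 3])
```

One `repeat` call therefore replaces the three explicit `conv3_1`, `conv3_2`, `conv3_3` calls of the hand-written version.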

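Comparing the results: on the same hardware and in the same environment, after 200 passes the TensorFlow version reaches 89.18% accuracy and takes 18h12min. Compared with PaddlePaddle, accuracy is about the same and training is somewhat slower. Preprocessing the data first, for example converting it to TFRecord and feeding it through a multi-threaded input pipeline, should make training much faster.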

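Summary

From the analysis of the paper and the experimental results, I draw the following conclusions:

1. The LRN layer consumes too many computing resources for little benefit and can be dropped.

2. Large kernels can learn larger spatial features but need more parameters. Small kernels learn spatially limited features but need fewer parameters, and stacking several layers of them may work better.

3. Deeper networks tend to work better, but vanishing gradients must be avoided. Choosing the ReLU activation, batch normalization and similar techniques mitigates this to some extent.

4. For the same number of iterations, small kernels with a deep network outperform large kernels with a shallow network, which is a useful reference when designing our own networks. The former may take longer to train, but may converge faster and reach better accuracy.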
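Point 3 can be illustrated with a rough calculation. During backpropagation the gradient is multiplied by the activation derivative at every layer. The sigmoid derivative is at most 0.25, while the ReLU derivative is 1 for positive inputs. The sketch below ignores the weight matrices entirely, so it is a simplified picture under that assumption rather than a full analysis; the function names are mine.

```python
import math


def sigmoid_grad(x):
    s = 1.0 / (1.0 + math.exp(-x))
    return s * (1.0 - s)  # maximum value 0.25, reached at x = 0


def relu_grad(x):
    return 1.0 if x > 0 else 0.0


def chained_grad(grad_fn, pre_activation, depth):
    """Product of activation derivatives along `depth` layers (weights ignored)."""
    return grad_fn(pre_activation) ** depth


for depth in (5, 16, 19):
    print(depth,
          chained_grad(sigmoid_grad, 0.0, depth),  # best case for sigmoid
          chained_grad(relu_grad, 1.0, depth))     # active ReLU units
```

Even in the sigmoid's best case the factor shrinks as 0.25 to the power of the depth, which is below 1e-9 at 16 layers, while active ReLU units pass the gradient through unchanged.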